Stable max before was 960 (might be a bit more, but 980 definitely was not). GPU-Z has been updated lately to (more?) correctly detect the HBM manufacturer. I have not tested mining efficiency at the same clock speeds, but I am getting 38.5 MH/s as well at roughly the clocks you stated (995/1100 MHz, 71/77/81 °C for the three temps, with HBM obviously being the hottest, at 2,000-2,100 rpm fan speed in a rather small desktop case). GPU-Z tells me 0.875 V, though it might be higher; I did not measure directly at the card here at home. System pulls 205W at the wall, GPU-Z says GPU-only power draw is 116W, and normal idle for this system is around 35W.

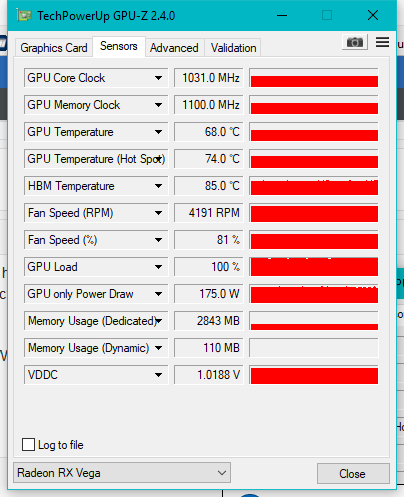

GPU-Z 2.4.0 tells me my GPU power is 174W at 1.0188V, where before it was 128W at 0.918V. My Kill-A-Watt shows a smaller jump: an increase of about 20W at the wall, with a total of 452W mining at 38.5+29.7MH/s (Vega 56 + Fury) compared to 430W mining at 34.7+29.7MH/s before flashing. Same GPU clocks; the only differences are HBM at 1100MHz now versus 930MHz before, and a higher vGPU floor. It is still worth it, as the extra ~3.8MH/s costs me only around 22W at the wall.
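For anyone who wants to run the same comparison on their own rig, here is a rough sketch of the arithmetic using only the wall-power and hashrate figures quoted above. The dictionary layout and the function names (`marginal_cost`, `overall_efficiency`) are just my own illustration, not from any mining tool.

```python
# Figures quoted in the post above (wall power read from the Kill-A-Watt).
# The dict keys and function names are illustrative, not from any tool.
before = {"wall_w": 430.0, "vega_mhs": 34.7, "fury_mhs": 29.7}
after = {"wall_w": 452.0, "vega_mhs": 38.5, "fury_mhs": 29.7}


def total_mhs(run):
    """Combined hashrate of both cards in MH/s."""
    return run["vega_mhs"] + run["fury_mhs"]


def marginal_cost(before, after):
    """Return (extra MH/s, extra W, W per extra MH/s) between two runs."""
    extra_mhs = total_mhs(after) - total_mhs(before)
    extra_w = after["wall_w"] - before["wall_w"]
    return extra_mhs, extra_w, extra_w / extra_mhs


def overall_efficiency(run):
    """Whole-system efficiency in MH/s per watt at the wall."""
    return total_mhs(run) / run["wall_w"]


extra_mhs, extra_w, w_per_mhs = marginal_cost(before, after)
print(f"extra hashrate: {extra_mhs:.1f} MH/s for {extra_w:.0f} W "
      f"({w_per_mhs:.1f} W per MH/s)")
print(f"efficiency before: {overall_efficiency(before):.4f} MH/s per W")
print(f"efficiency after:  {overall_efficiency(after):.4f} MH/s per W")
```

With the numbers above this works out to about 3.8 MH/s extra for 22W, so just under 6W per added MH/s, and whole-system MH/s per watt goes up slightly after the flash. Keep in mind this is wall power for the whole system, so PSU losses and the Fury's draw are included.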

The only problem I see on my side compared to your results is temps! I need the fan at 4200RPM to keep HBM2 below the 85C throttling point, as hashing drops to 2x MH/s as soon as it reaches 87C.

This is after 1h of mining:

I suspect bad assembly on my card and a lack of proper contact between the HBM modules and the heatsink. Air temperature coming out of the vent is at 50C. I have a good gap between the Fury and the Vega, but will test later today without the Fury as I'm selling it.

PS. Which BIOS did you use? I loaded the Powercolor BIOS (Powercolor being my card's original manufacturer) from TPU, but there is also a slightly newer revision for Sapphire on their site.
