Was this really deleted? Or am I missing something?

My first impressions:

Nvidia GeForce RTX 4090 Reviews

- Thread starter DavidGraham

- Start date

Man from Atlantis

Veteran

"Was this really deleted? Or am I missing something?"

Yeah, the mods over there deleted it, apparently for breaking subreddit rules. Anyway, I updated the post and moved everything here.

davis.anthony

Veteran

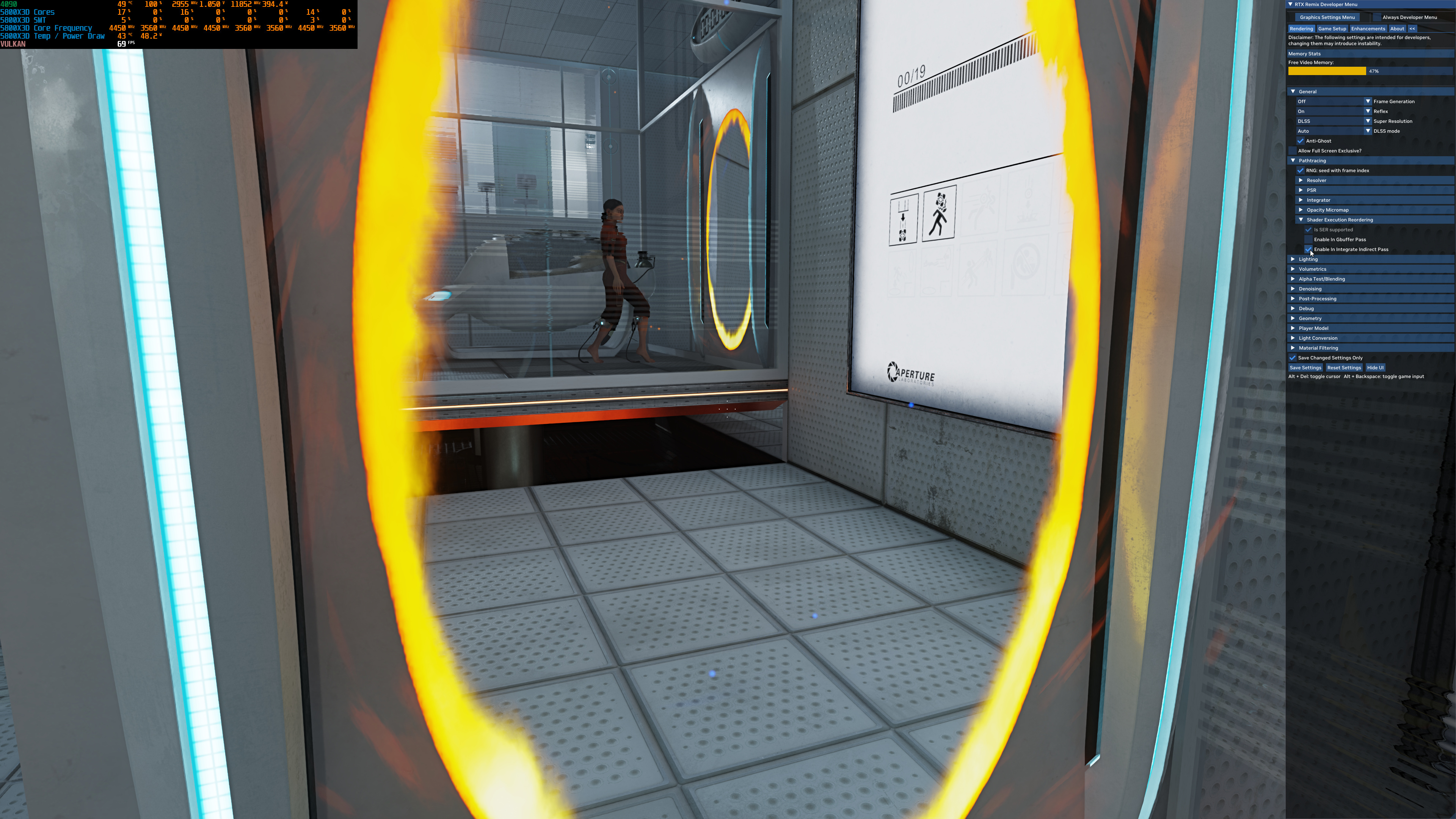

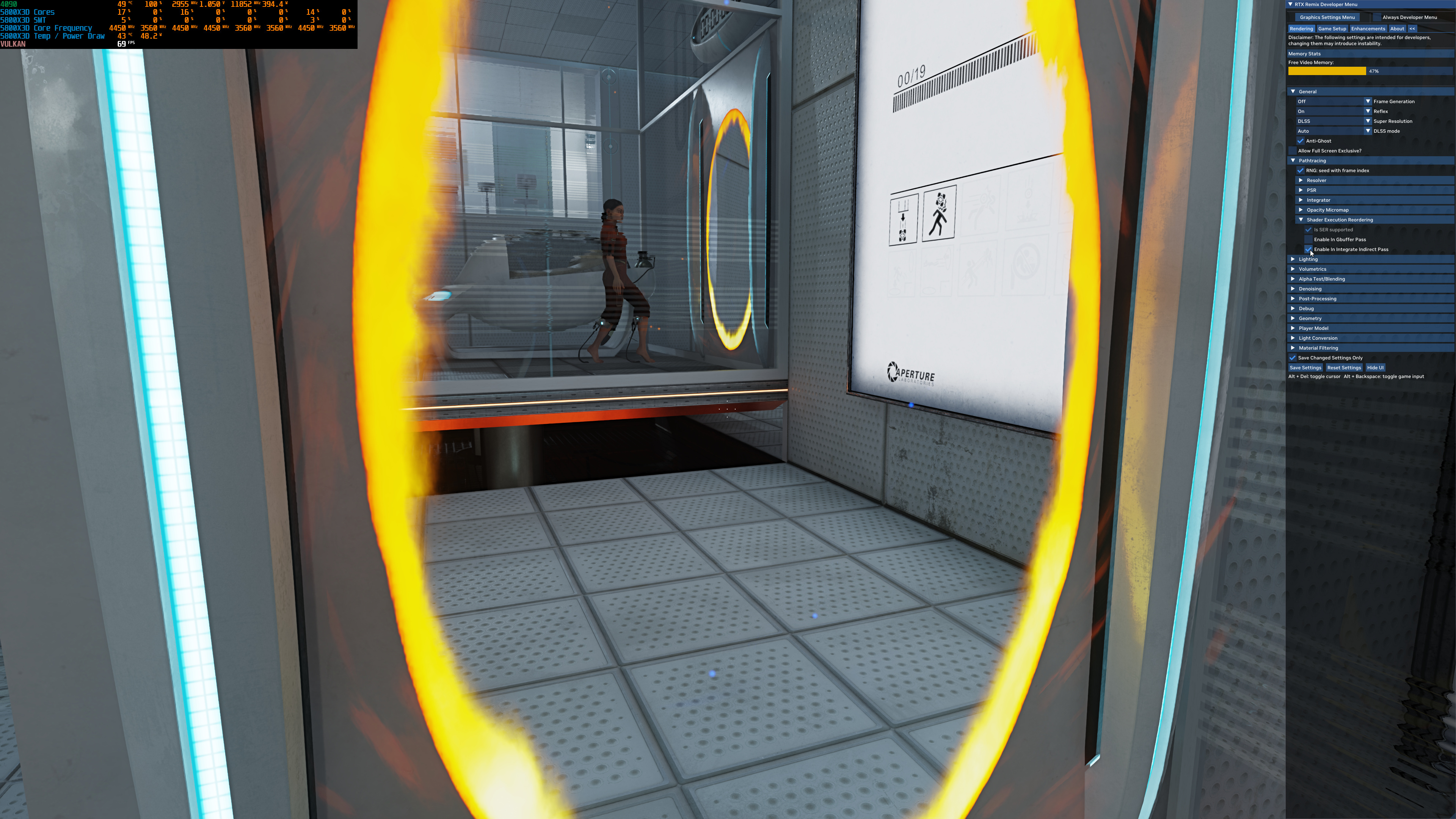

You can toggle SER from the dev settings. In this instance it gives about a 22% uplift just by itself, though it can vary greatly depending on the scene (which makes sense).

22% is a huge uplift for just a single feature!

Henry swagger

Newcomer

"22% is a huge uplift for just a single feature!"

Nvidia cheat code

The delta can be much bigger depending on the scene, in this case the uplift is almost 40%

SER Disabled

SER Enabled
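For anyone who wants to sanity-check uplift figures like the ~22% and ~40% above, here is a minimal sketch of the arithmetic. The function names are made up for illustration and no frame rates from the screenshots are assumed; it only converts an fps uplift into the equivalent frame-time saving.

```python
def uplift_pct(fps_off: float, fps_on: float) -> float:
    """Percent frame-rate uplift going from fps_off to fps_on."""
    return (fps_on / fps_off - 1.0) * 100.0


def frame_time_saving_pct(uplift: float) -> float:
    """Percent reduction in frame time implied by a percent fps uplift."""
    return (1.0 - 1.0 / (1.0 + uplift / 100.0)) * 100.0


# The two uplift figures quoted in the thread.
for u in (22.0, 40.0):
    print(f"{u:.0f}% more fps -> {frame_time_saving_pct(u):.1f}% less frame time")
```

So a 22% fps uplift corresponds to roughly 18% less time per frame, and 40% to roughly 29%.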

I wonder whether that's with FG or not?

4090 delivering next-gen RDR2 fidelity.

By the way, it seems the filters they use broke the dynamic range, white and black levels, gamma, and white/color balance?

GhostofWar

Regular

"I wonder whether that's with FG or not?"

I wouldn't think so. The game doesn't support FG, does it?

Yeah Ada is crazy efficient, TSMC 4nm definitely helps

I'm playing Elden Ring on PC and it barely draws 100W at 4K60!

"Yeah Ada is crazy efficient, TSMC 4nm definitely helps"

It's a "5nm"-class process, and it's unknown whether it's more closely related to the N5 or the N4 family, let alone which specific process it's based on.

"4090 delivering next-gen RDR2 fidelity."

I really hope this game gets a remaster with RTGI, RT reflections, and higher-quality assets and textures. It would look absolutely glorious.

"Yeah Ada is crazy efficient, TSMC 4nm definitely helps. I'm playing Elden Ring on PC and it barely draws 100W at 4K60!"

Yep, loving this thing. Despite the craze about how much of a power-hungry beast it is, it consistently draws less power than my 2080 Ti at 3440x1440/120Hz and maxes out the refresh rate of my monitor in pretty much every game.

Has the frequency/power curve changed with Ada or are they just pushing it faster because they can? Like if they’d kept the 4090 officially at 300W, how much slower would it be?

NVIDIA RTX 4090 Loses Almost no Gaming Performance at 4K with a Power Limit of 300W | Hardware Times

NVIDIA’s GeForce RTX 4090 is incredibly power efficient despite having a lofty TBP of 450W. The graphics card rarely comes close to its maximum board power. Testing by Quasarzone shows that the RTX 4090 loses almost no performance with its peak power budget cut to 300W. The GPU is 98% as fast at...

www.hardwaretimes.com
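A quick back-of-the-envelope on what those numbers mean for efficiency. This is only a sketch: it assumes the card actually draws its full power limit in both cases, which is a simplification (the article itself says the card rarely reaches its maximum board power), and the function name is made up for illustration.

```python
def relative_perf_per_watt(perf_ratio: float, limit_w: float, baseline_w: float) -> float:
    """Perf-per-watt at a reduced power limit, relative to the baseline limit.

    Assumes (simplification) that power draw equals the configured limit.
    """
    return perf_ratio / (limit_w / baseline_w)


# Figures quoted above: 98% of the performance at 300W versus the 450W TBP.
gain = relative_perf_per_watt(0.98, 300.0, 450.0)
print(f"Perf per watt at 300W vs 450W: {gain:.2f}x")
```

Under that assumption the 300W limit works out to about 1.47x the performance per watt. The real-world gap depends on how much the card actually draws at each limit in a given game.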

That’s what I suspected. It seems whack to me that they made the thing such a chungus for basically no reason.

"That’s what I suspected. It seems whack to me that they made the thing such a chungus for basically no reason."

It's been the norm on higher-end cards for a while; the 4090's extreme TDP just exaggerates the effect.

"It's been the norm on higher-end cards for a while; the 4090's extreme TDP just exaggerates the effect."

But is it necessary for the TDP to be so high? Seems they could have aimed for ~300W with hardly any noticeable performance loss. Let the AIBs go crazy with the 450W triple/quad slot absolute units.

"But is it necessary for the TDP to be so high? Seems they could have aimed for ~300W with hardly any noticeable performance loss. Let the AIBs go crazy with the 450W triple/quad slot absolute units."

I think Nvidia wants to make AIBs irrelevant, so they made the FE a bit crazy: a huge cooler plus an insane max power draw, to avoid variants like the Strix and HOF outshining it. The main difference between FE and AIB 3090s was the higher TDP, which allowed slightly higher boost clocks and gave the AIB cards the edge over the FE. It also makes sure that all benchmark graphs have good numbers from the FE when comparing against AMD/Intel; I think most reviewers use the FE numbers for performance comparisons.

"But is it necessary for the TDP to be so high? Seems they could have aimed for ~300W with hardly any noticeable performance loss. Let the AIBs go crazy with the 450W triple/quad slot absolute units."

The TDP for the 4090 (and perhaps the 4080 as well) is a data point that inadequately describes the diverse power characteristics of the card. We've come to expect over the past several generations that GPUs will run up against their TDP more often than not. The 4090 does not do that for, I believe, three main reasons. One, the GPU is so damn wide that the typical gaming load doesn't come close to saturating it (either due to game engine/software limitations or hardware bottlenecks in the CPU or other system hardware). Two, the TSMC process has tremendous voltage/clock scaling, and Nvidia appears to have tapped into the process's full potential. Three, Nvidia's driver smartly throttles down the 4090 when a workload cannot exploit the GPU fully (e.g. low load or a frame cap), guiding the GPU to a more efficient position on the v/f curve.

Why the 450W TDP, then, when 300W or 350W would yield practically the same performance for most modern gaming workloads (which is certainly what I've seen in my personal gaming use of my 4090)? I expect the answer is multi-faceted and, among other things, involves the economics of the competitive scene and Nvidia paving the way for changes in PC infrastructure down the road. But one part of the answer is that a lower TDP would not allow future, more demanding games or software to use the 4090 to its fullest. There's no way around it: you need a bunch of power to light up all those transistors at once. As CPUs and system memory get faster and games evolve to make more use of the 4090, I expect you'll see its power consumption trend upward, but so will performance; at the very least, performance won't buckle as it would if the card became power limited at 300W or 350W.
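To illustrate the v/f curve point, here is a toy sketch using the textbook approximation that dynamic power scales with voltage squared times frequency. The voltage/clock pairs below are hypothetical, chosen only for illustration; they are not measured 4090 operating points, and the model ignores static/leakage power and memory power.

```python
def dynamic_power(voltage: float, freq_ghz: float, k: float = 1.0) -> float:
    """Textbook approximation: dynamic power ~ k * V^2 * f (arbitrary units)."""
    return k * voltage ** 2 * freq_ghz


def relative_to_first(points):
    """Return (relative clock, relative power) for each point vs the first one."""
    v0, f0 = points[0]
    p0 = dynamic_power(v0, f0)
    return [(f / f0, dynamic_power(v, f) / p0) for v, f in points]


# HYPOTHETICAL (voltage in V, clock in GHz) points, not real 4090 data.
points = [(0.90, 2.40), (1.00, 2.65), (1.05, 2.75)]

for (v, f), (rel_f, rel_p) in zip(points, relative_to_first(points)):
    print(f"{v:.2f} V @ {f:.2f} GHz: clock x{rel_f:.2f}, power x{rel_p:.2f}")
```

In this toy model the last steps up the curve cost far more power than they return in clock speed, which is consistent with a 300W cap costing very little performance in today's games while the headroom to 450W still matters for loads that light up the whole chip.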
