Well, that is an interesting idea - I don't mind the portable stuff, but scalable hardware seems to be interesting.

Would it make sense to construct a console that can be hardware-upgraded, to some extent in the sense of a PC?

I wouldn't go with hardware upgradability. At least, not in terms of replaceable CPU and GPU parts. For economies you want a tight, perfectly designed mobo. However, if the hardware design is upgradeable, you can do like Apple and release generational hardware. The problem is fragmenting the user base, where if you want to target 3rd gen features, you alienate 1st and 2nd gen owners; a major headache with PCs. But a closed-hardware, yet uniformly upgradeable design would solve a lot of that. You'd basically just want static cores (don't change the design, for 100% compatibility) and add more of them in future iterations, not changing anything else, with improved performance coming from the number of cores. With the same workload distribution mechanisms in place in all devices, the code will work across all scales, and the developers would just need to factor in scaling of their methods to add more stuff/effects/eye-candy for those with better hardware. I suppose a simple hardware polling system to inform the game/app how many cores are available would solve that.
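As a rough sketch of that polling idea (not a real console API, just an illustration): the game asks how many cores it has, picks a detail level, and deals its work out across them. Everything named here is an assumption made up for the example, including `available_cores`, `pick_quality`, `split_work` and the tier thresholds. `os.cpu_count()` only stands in for whatever poll the hypothetical hardware would expose.

```python
import os

# Hypothetical quality tiers: (minimum cores, tier name).
# The thresholds are illustrative only, not taken from any real hardware.
QUALITY_TIERS = [
    (64, "ultra"),
    (16, "high"),
    (4, "medium"),
    (1, "low"),
]


def available_cores():
    """Stand-in for the hardware poll; falls back to 1 if the count is unknown."""
    return os.cpu_count() or 1


def pick_quality(cores):
    """Return the best tier whose minimum core count the device meets."""
    for min_cores, name in QUALITY_TIERS:
        if cores >= min_cores:
            return name
    return "low"


def split_work(items, cores):
    """Deal work items round-robin into one batch per core."""
    return [items[i::cores] for i in range(cores)]


if __name__ == "__main__":
    cores = available_cores()
    print(f"{cores} cores -> {pick_quality(cores)} detail")
    print(split_work(list(range(10)), 3))
```

The same code runs unchanged on a few-core portable or a many-core console; only the number returned by the poll differs, which is the whole point of keeping the cores static.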

And if you do that, it makes sense to be able to sell people a mobo replacement so they can upgrade and keep everything else, instead of throwing away the whole old machine to get a whole new one that's fundamentally the same collection of USB ports and cases and PSU and cooling and stuff. Then again, replacing old machines means passing the old one on to friends and family, which, as they all run the same code, means increasing the install base. That's unlike conventional console generations, where passing down a console often means giving them your old library, with that old gen gaining no software growth.

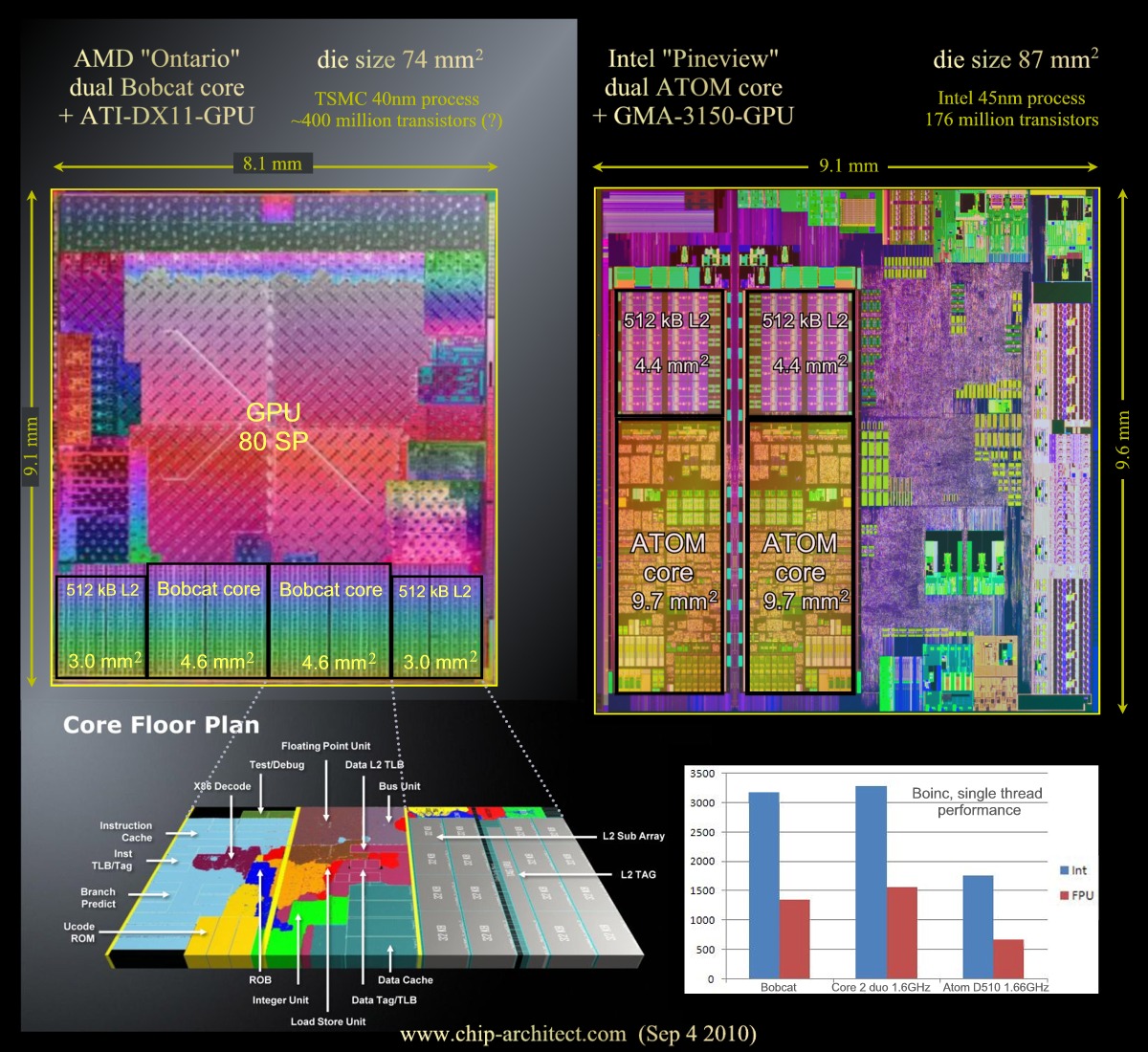

So, my slightly revised, Billy Idol friendly vision of the future is a core architecture (hell, let's go unified single-core solution for graphics and CPU, meaning the most flexible, scalable solution possible) that can be a few cores for a portable or lots of cores for a console, running the same code that scales according to the number of cores, meaning your games and apps are playable on all Core Architecture devices. There's a many-core home console implementation of the Core Architecture for bestest graphics to appease the hardcore. There's a multi-core CorePad tablet for portability. All games, save files, data and apps are shareable between tablet and home console. The tablet can be plugged into TVs and use console peripherals, working as Console Lite on the move, with an inbuilt camera to provide motion interfacing, perhaps a depth camera. And as chip manufacturing improves, enabling more cores in the same space, maybe the innards of both the console and CorePad can be upgraded. Probably not, actually. I like the idea of increasing the user base, even if the hand-me-down buyers only buy cheap apps and minigames! Developers still target the same hardware architecture, so they don't have to differentiate between the existing 300 million users of Core Architecture and the new buyers of 18nm Core Architecture Gen 2 or Gen 3, but the upgraders get prettier stuff.

All it needs is someone to create a single-core, all-purpose, fully scalable architecture, and we can go into production. At all of $2000 a system it's bound to do well!