We've been through this, but here's another well-researched article. I doubt evidence will cause you to change your imaginary outrage-fueled narrative, but whatever.

It Isn't Transistory

www.fabricatedknowledge.com

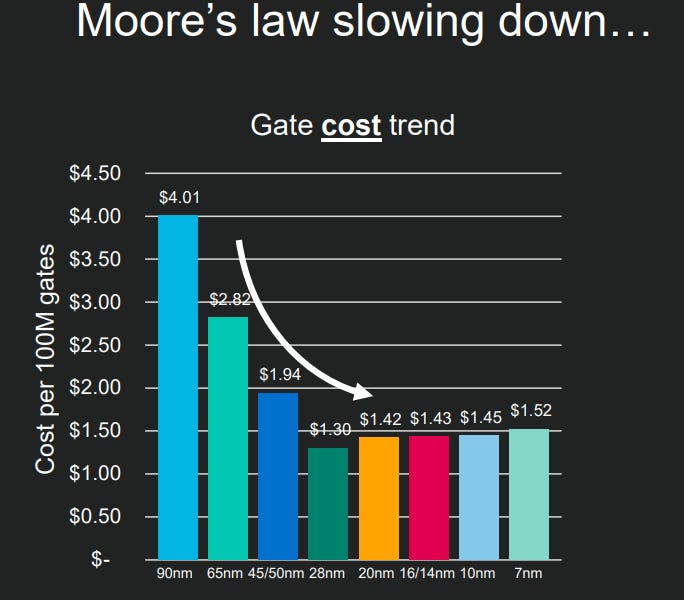

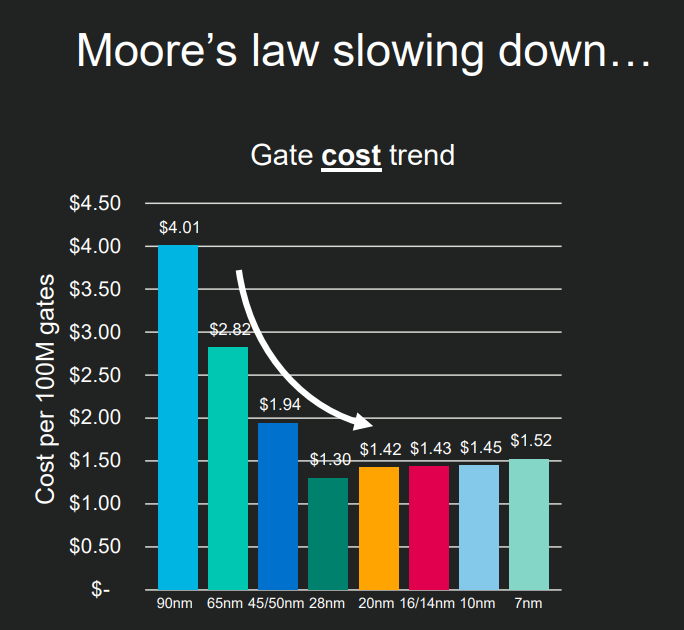

Here's a slide from Marvell's investor presentation linked in that article:

Note that every fabless IHV negotiates its own pricing with a foundry, so this data may not be exactly representative of what, for example, Nvidia would have had to pay. The chart above likely plots cost/gate at a similar relative point in each technology's development timeline. Foundries reduce the price of a node over time, and yields improve as well. So if an IHV thinks it can make a competitive product on an older, cheaper node, it will do so. But they can only buck the trend for so long.

You can find tens of articles and papers on what's happening. Here are some:

There’s a new world order coming for the semiconductor industry, said A.B. Kahng. We’re racing to the end of Moore’s Law, and the race will now be won by sheer capex (capital expenditures) and size…

www.eejournal.com

With cost per transistor on the rise and leading-edge foundry partners dwindling to a few, Qualcomm may face economic difficulties staying at the leading edge of semiconductor technology.

www.fool.com

The price of each TSMC wafer has leaked: more than $9,000 for a 7nm wafer and almost $17,000 for a 5nm wafer

hardwaresfera.com

The numbers will be slightly different (again, the exact numbers are buried in contracts), but the trends are the same. In the academic computer architecture community, nearly every paper mentions this trend as part of their introduction. It's well-known, and it's very irritating to see uninformed "opinions" to the contrary.

Without the foundries providing a generational $/transistor improvement, the only scaling fabless IHVs can provide comes from clock speed, architectural improvements and clever algorithms.

Yes, that's because they used an older Samsung node that was way cheaper. That's why we got an xx102-based xx80 (non-Ti).

For the Ampere->Ada move we're seeing the multiplicative effect of the fact that they went from a hungry competitor (Samsung) to the king of the hill (TSMC), AND that they went from an old, cheap node to a leading edge node. Everything we're seeing is the net impact of these cost increases. So even though the process node gives massively increased transistors-per-mm^2, that comes with a massively increased price-per-mm^2. So again, the only scaling that's possible came from clock speed, architectural improvements and clever algorithms.
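To make the arithmetic concrete, here's a rough sketch of the price-per-mm^2 versus transistors-per-mm^2 trade-off. It uses the leaked 7nm and 5nm wafer prices quoted above as approximate inputs. The density gains are hypothetical values I picked purely for illustration, and the function assumes equal wafer size and equal yield on both nodes, which real contracts and real processes won't match exactly.

```python
def cost_per_transistor_ratio(wafer_price_old, wafer_price_new, density_gain):
    """Return the new node's cost per transistor relative to the old node.

    Assumption: same wafer size and same yield on both nodes, so the
    price per mm^2 scales directly with the wafer price.
    A result above 1.0 means each transistor got more expensive.
    """
    if wafer_price_old <= 0 or wafer_price_new <= 0 or density_gain <= 0:
        raise ValueError("all inputs must be positive")
    price_ratio = wafer_price_new / wafer_price_old
    return price_ratio / density_gain


# Approximate wafer prices from the leak cited above (USD per wafer).
PRICE_7NM = 9_000
PRICE_5NM = 17_000

# Hypothetical density gains, for illustration only (not measured values).
for gain in (1.5, 1.8, 2.0):
    ratio = cost_per_transistor_ratio(PRICE_7NM, PRICE_5NM, gain)
    print(f"density x{gain}: cost per transistor x{ratio:.2f}")
```

With those wafer prices, the new node has to pack roughly 1.9x the transistors into the same area just to break even on cost per transistor. Anything less and each transistor costs more than it did on the older node.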

If there's a silver lining here, it's that, based on what I've seen so far in public documents, TSMC isn't increasing 3nm prices _that_ dramatically. Hopefully Samsung ups their foundry game at some point, and maybe Intel will become a viable foundry as well. Some competition will help, but let's not jump on the corporate vilification bandwagon and start attacking TSMC here. They are solving amazingly hard problems that everyone else seems to be struggling with.