I think the information we have shows the game using Series X's extra potential pretty well. Series X has over the Series S:

- 2.56x the base resolution, plus:

- higher quality shadows

- higher environment LOD

- higher vegetation LOD

- higher texture LOD bias

- 4x res of cubemap reflections (and generated dynamically rather than baked)

That'll account for differences in memory quantity, bandwidth, fillrate between the machines. Use of VRS probably indicates that they're pushing hard on the shader front too.

To me, Starfield seems to be a game that's been very well tuned for both the Series S and the Series X. It seems to be exactly the kind of outcome that MS had in mind when they were envisioning the Series S.

Digital Foundry Article Technical Discussion [2023]

- Thread starter BRiT

- Start date

- Status

- Not open for further replies.

Where is it stated that Starfield uses VRS?

Starfield PC performance: best settings, FSR 2, benchmarks, and more

Starfield is finally in our hands, and we've put it through a series of tests on PC to bring you optimized settings and performance.

I disagree. The game varies from mediocre to good looking but the variance between Series S and X has to be the smallest I've seen from any game released this gen, whether cross-gen or current gen only.

Really? A resolution of 2.56x higher, plus other setting increases, is the smallest difference for any game released this gen?

Also, the term "higher" is subjective and I expected a difference of PC min vs PC Ultra between S and X. I'm just not seeing that here.

Series X Starfield having higher resolution shadows, higher res dynamic cubemaps, higher foliage settings for grass (which munches fillrate), environmental fine detail pushed further out, doesn't seem subjective as DF have shown places where it's definitely the case.

Anyway, how is the Series X going to do a jump from PC Min equivalent settings to PC Ultra while also maintaining a huge and very much demanded jump in resolution?

Series X's biggest area of advantage over the Series S is, going by the numbers, probably compute. It's about 3x higher. Everything else is more like 2.4 or 2.5 times higher (BW, fillrate, cache size, cache BW) or lower. The 3D pipeline gets a bit more efficient as you increase resolution, but even so 2.56x base resolution jump plus other increased settings is pretty good.
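To put rough numbers on those ratios, here is a minimal Python sketch. The hardware figures are the publicly listed specs for each console (CU counts, clocks, fast-pool memory bandwidth, ROP counts) and are assumptions here, as is the 1440p vs 900p internal resolution pair; none of them are stated in this thread.

```python
# Rough Series X vs Series S ratios.
# Every spec below is an assumption taken from publicly listed figures,
# not from this thread.
SERIES_X = {"cus": 52, "clock_ghz": 1.825, "bandwidth_gbs": 560.0, "rops": 64}
SERIES_S = {"cus": 20, "clock_ghz": 1.565, "bandwidth_gbs": 224.0, "rops": 32}


def tflops(gpu):
    """Peak FP32 TFLOPS: CUs x 64 lanes x 2 FLOPs per clock (FMA) x clock."""
    return gpu["cus"] * 64 * 2 * gpu["clock_ghz"] / 1000.0


def fillrate_gpix(gpu):
    """Peak pixel fillrate in Gpixels/s: ROPs x clock."""
    return gpu["rops"] * gpu["clock_ghz"]


def pixel_count(width, height):
    """Number of pixels in a width x height frame."""
    return width * height


ratios = {
    "compute": tflops(SERIES_X) / tflops(SERIES_S),
    "bandwidth": SERIES_X["bandwidth_gbs"] / SERIES_S["bandwidth_gbs"],
    "fillrate": fillrate_gpix(SERIES_X) / fillrate_gpix(SERIES_S),
    # Assumed internal resolutions before upscaling: 1440p (X) vs 900p (S).
    "resolution": pixel_count(2560, 1440) / pixel_count(1600, 900),
}

for name, value in ratios.items():
    print(f"{name:>10}: {value:.2f}x")
```

With these inputs, compute comes out at roughly 3.0x, bandwidth at 2.5x, fillrate at about 2.3x, and resolution at 2.56x, which lines up with the figures in the post above.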

If you'd preferred them to keep the same res and bump up some other settings fair enough, but this is what I'd expect to see for a game tuned to the strengths of the two machines. I think it's perfectly legitimate for the primary difference between the two to be resolution (and resolution of e.g. reflections) - this is what pretty much every other game does and it's what MS marketed the consoles on.

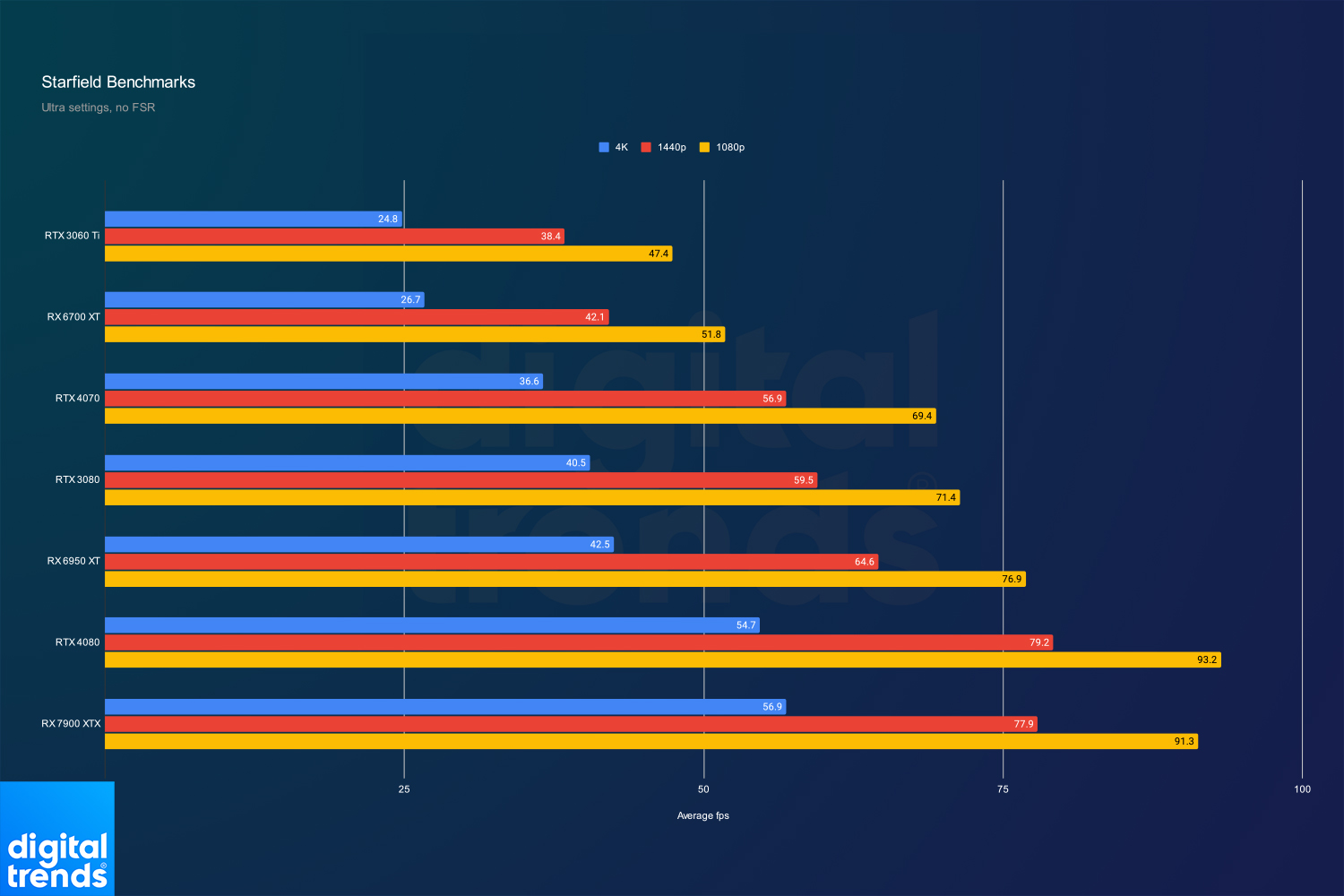

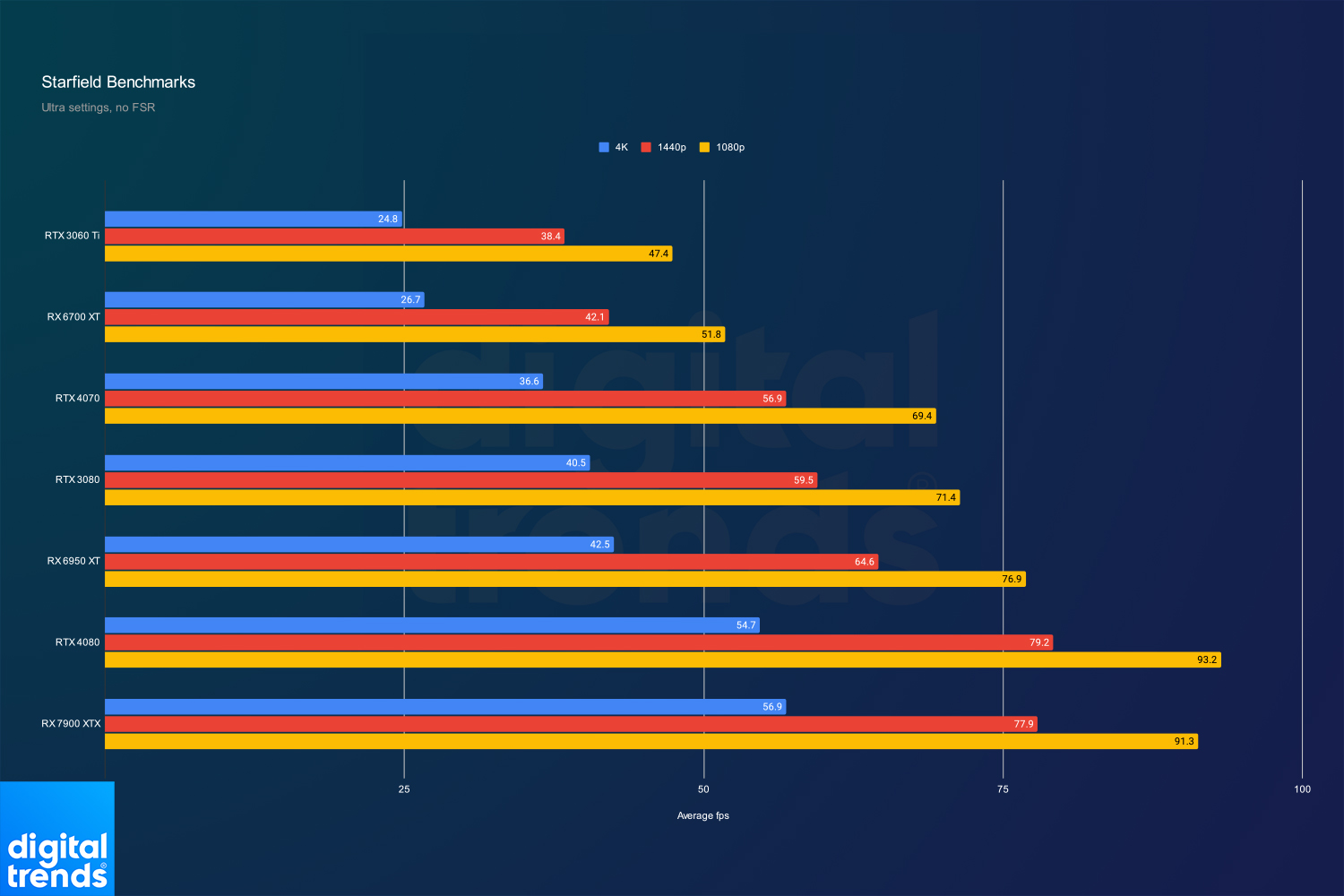

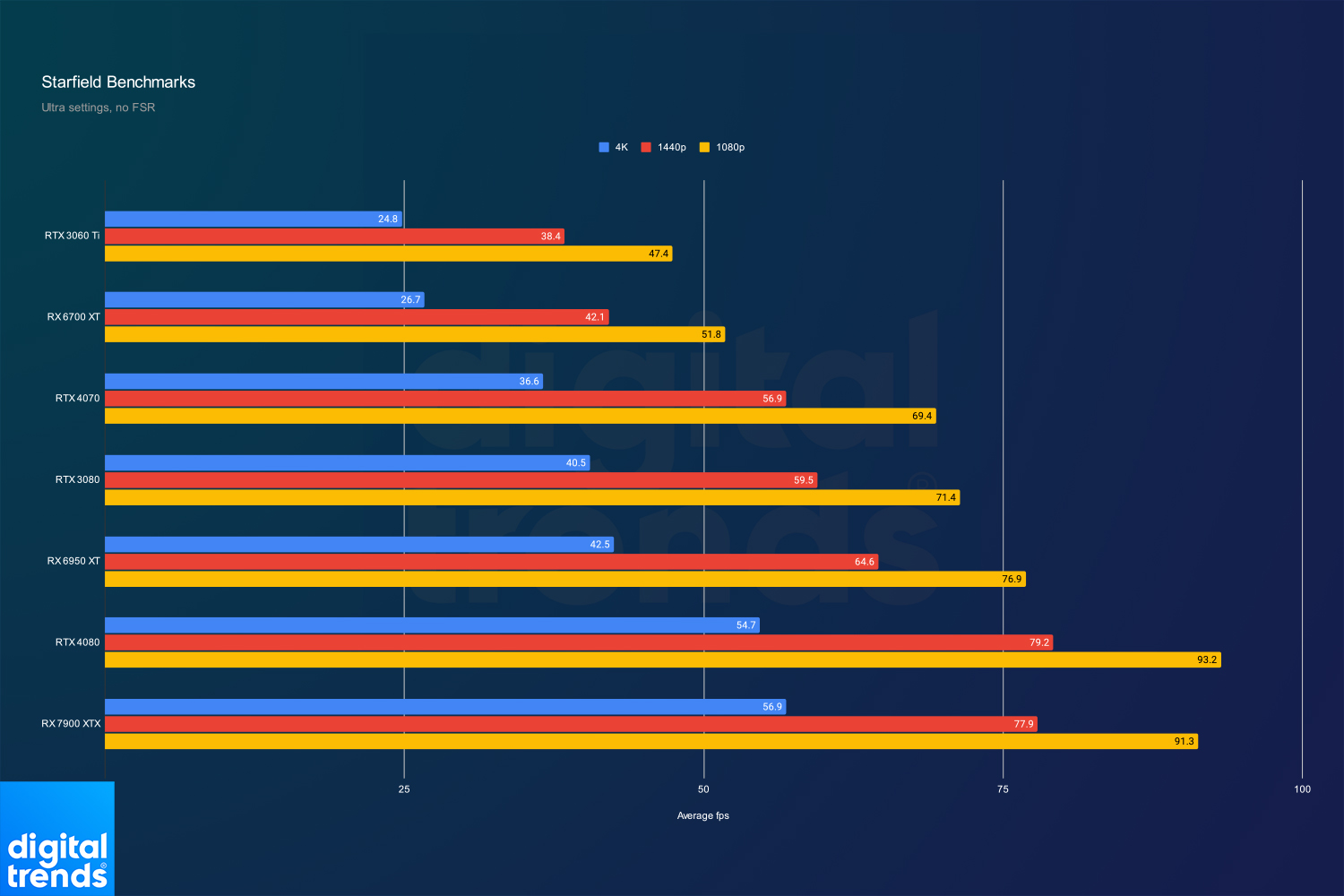

The GPU benchmarks follow a fairly direct path: more compute power equates to more performance.

It's a heavy game, and RTGI is likely sucking up a lot of compute. The results are more apparent as you push to higher resolutions.

Overstander

Newcomer

That benchmark gives me hope of reaching 60fps with my Ryzen 5 5600X / RTX 3060 combo at 1080p.

Maybe not at high/ultra settings, but maybe with a mix of medium/high. We'll see.

So far I haven't heard anybody talk about I/O and SSDs.

Is it a big deal? One would think so for a current-gen-only release, right?

I'm dreading it a little with a 3070 + 3700X combo on an ultrawide screen. So far there's been no talk of it, yeah. It does a lot of loading, so having faster I/O will help reduce the time spent in loading screens.

PCGamesHardware.de, who are normally pretty great on the technical front, gave some preliminary performance figures, and they're interesting, to say the least.

(not to derail the DF content in this thread, but since they don't have their PC analysis up yet...)

Starfield demands GPU power: RTX 3080 at 30 fps - hardware at its limit in first impressions

In cooperation with PC Games, we were able to gather first technical impressions of Starfield. The demands on the GPU are decidedly high.

They claim the engine's quite well threaded, but in some of the more demanding scenes (sounds like this is probably the worst case scenario), on a 3080:

1080p native, no upscaling, ultra settings - 30fps (!)

4k native, no upscaling, ultra settings - 20fps
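As a rough way to read those two figures, the sketch below converts them to frame times and fits a simple two-point linear model (a fixed per-frame cost plus a cost proportional to pixel count). The linear model, and the assumption that both figures come from the same scene, are mine and not PCGH's, so treat the result as illustrative only.

```python
# Two data points quoted above for an RTX 3080 at ultra settings, native res.
points = {
    (1920, 1080): 30.0,  # fps at 1080p
    (3840, 2160): 20.0,  # fps at 4K
}


def frame_time_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Sorting by resolution puts the 1080p point first.
(res_lo, fps_lo), (res_hi, fps_hi) = sorted(points.items())
pixels_lo = res_lo[0] * res_lo[1]
pixels_hi = res_hi[0] * res_hi[1]
t_lo, t_hi = frame_time_ms(fps_lo), frame_time_ms(fps_hi)

# Assumed model: frame_time = fixed_ms + ms_per_mpix * megapixels
ms_per_mpix = (t_hi - t_lo) / ((pixels_hi - pixels_lo) / 1e6)
fixed_ms = t_lo - ms_per_mpix * pixels_lo / 1e6

pixel_ratio = pixels_hi / pixels_lo
time_ratio = t_hi / t_lo

print(f"pixels: {pixel_ratio:.1f}x, frame time: {time_ratio:.2f}x")
print(f"fixed cost under this model: {fixed_ms:.1f} ms per frame")
```

Four times the pixels costs only about 1.5x the frame time here, so under this (very simplified) model a large share of the frame does not scale with resolution.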

If only Starfield had DLSS support...

If you have good memories of a game from that era, don't play it again on a non-CRT monitor. For example, when I played Forza 1 (a game I liked very much, especially the Blue Mountains track) via emulation on the X360, I almost cried at how bad it looked at the original 480p resolution (and this was on a CRT, but to no avail).

Memory is a funny thing. I remember all old games looking much better than they actually did. PS2 had turds, not textures.

I wonder if there is a way to make Nanite streaming use the SFS support on the Series consoles?

Like stuffing bits of the Nanite geometry into mip maps or something?

I don't know enough about how SFS works, but it does strike me that SFS is a solution to a very similar problem, i.e. maximizing the efficiency of the bandwidth used in transfers to the GPU.

How much of SFS is linked specifically to mip maps, and could it be modified to work with Nanite's streaming geometry?

I don't think it helps too much? Nanite has its geo data packed in BVH trees and applies several software compression methods. It's different from loading textures.

Do you have the original link? Does it state that Ultra settings have RT effects turned on? I haven't seen anyone else mention it, nor do I see it in the console footage. GI looks pretty plain to me, likely some basic probe system.

There is no ray tracing in this game.

Yeah, I'm really confused about why RTGI is brought up, especially for an AMD partnership title.

Real Time Global Illumination. R T G I

GhostofWar

Regular

Was kind of expecting COD-like results with RDNA 3 laying down the law, but it seems Ada is standing its ground.

On a separate note, before I go and try to recover my Nexus Mods login: does the DLSS/XeSS mod work on the Game Pass version?

Real-time global illumination. My bad. You only just made me realize that there is no acronym I could have written to avoid the mix-up.

There is a whole list of real-time functions, as well as shadows and volumetric fog, in play. DF covers it here:

The Starfield tech breakdown: why it runs at 30 frames per second

John Linneman and Alex Battaglia share their notes on the Starfield Direct, discuss why the game is 30fps on consoles a…

I haven't seen anyone try this yet and post about it. I suspect that once it gets out of early access we will see a lot of movement in that space, as most people in early access are just hammering content.

So which is the problem: games that aren't scalable to the low end, like IoA, or games that scale too well to the low end, like Starfield? You seem to freak out about both.

I'mDudditz!

Newcomer

Where are these supposed freak outs you're talking about? As I said before, IoA runs and looks terrible on all platforms. The engine is unnecessarily heavy. With Starfield, the gap between Series S and Series X is so minimal, it looks like Series X could have received much better graphical optimizations at minimum if framerate boost was out of the question due to CPU.

But there isn't the power to both do a > 2.5x bump in base resolution, plus shadow and cubemap and grass bumps etc, and also go from PC equivalent min to PC equivalent max settings. I don't think any game has pulled this off.

What are you thinking they should have done instead?

It's not impossible that a slightly higher FSR base resolution than 1440p could be used, something like 1600p or so. That usually does make a difference with FSR.
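For a sense of scale, here's a quick sketch of how many more pixels a 1600p base would mean versus 1440p. It assumes a 16:9 frame and that GPU cost scales roughly with pixel count, which is only an approximation.

```python
def pixels_16_9(height):
    """Pixel count of a 16:9 frame with the given height (width rounded)."""
    width = round(height * 16 / 9)
    return width * height


base = pixels_16_9(1440)      # 2560 x 1440
proposed = pixels_16_9(1600)  # 2844 x 1600 after rounding the width
extra = proposed / base - 1.0

print(f"1600p has {extra:.1%} more pixels than 1440p")
```

That works out to a bit under a quarter more pixels to shade per frame before upscaling.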
