Yup, well, both Series X|S are afflicted by the same issues in terms of what functionality is available in the GDK. It's unfortunate that MS got blindsided by COVID here (perhaps their deadlines were too ambitious and they didn't foresee the possibility of a massive slowdown) and didn't build out their tools enough to have a good launch. I think a proper fix would have been to eat the cost of doubling the remaining memory so the pools are symmetric, and not have to deal with the tooling issues around managing the split pools. There are other issues too, but this isn't an ideal launch given their marketing.
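To put rough numbers on the split pools, here's a small Python sketch. It uses Series X's published memory layout (ten 32-bit GDDR6 chips at 14 Gbps: six 2 GB chips and four 1 GB chips) and models the first 1 GB of every chip as striped across all ten chips, with the extra 1 GB on the six larger chips forming the slower pool. That striping model is a simplification, and the all-2 GB layout is just my reading of "doubling the remaining memory" (a hypothetical 20 GB configuration, not anything MS announced).

```python
def bus_bandwidth_gbs(num_chips: int, bits_per_chip: int = 32, gbps_per_pin: float = 14.0) -> float:
    """Peak bandwidth in GB/s when num_chips GDDR6 devices are read in parallel."""
    return num_chips * bits_per_chip * gbps_per_pin / 8


def pools(chip_sizes_gb: list[int]) -> list[tuple[int, float]]:
    """Split memory into tiers by how many chips each address range spans.

    Simplified model: each tier is striped across every chip large enough to
    hold it. Returns (tier size in GB, peak bandwidth in GB/s) per tier.
    """
    result = []
    prev_level = 0
    for level in sorted(set(chip_sizes_gb)):
        chips = sum(1 for size in chip_sizes_gb if size >= level)
        tier_size = chips * (level - prev_level)
        result.append((tier_size, bus_bandwidth_gbs(chips)))
        prev_level = level
    return result


series_x = [2] * 6 + [1] * 4   # six 2 GB chips + four 1 GB chips
symmetric = [2] * 10           # hypothetical: every chip upgraded to 2 GB

print(pools(series_x))    # [(10, 560.0), (6, 336.0)]
print(pools(symmetric))   # [(20, 560.0)]
```

The 560 GB/s figure only applies to the 10 GB "GPU optimal" range; anything in the other 6 GB tops out at 336 GB/s, which is exactly the placement problem the tooling has to manage. The symmetric layout collapses that to a single pool.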

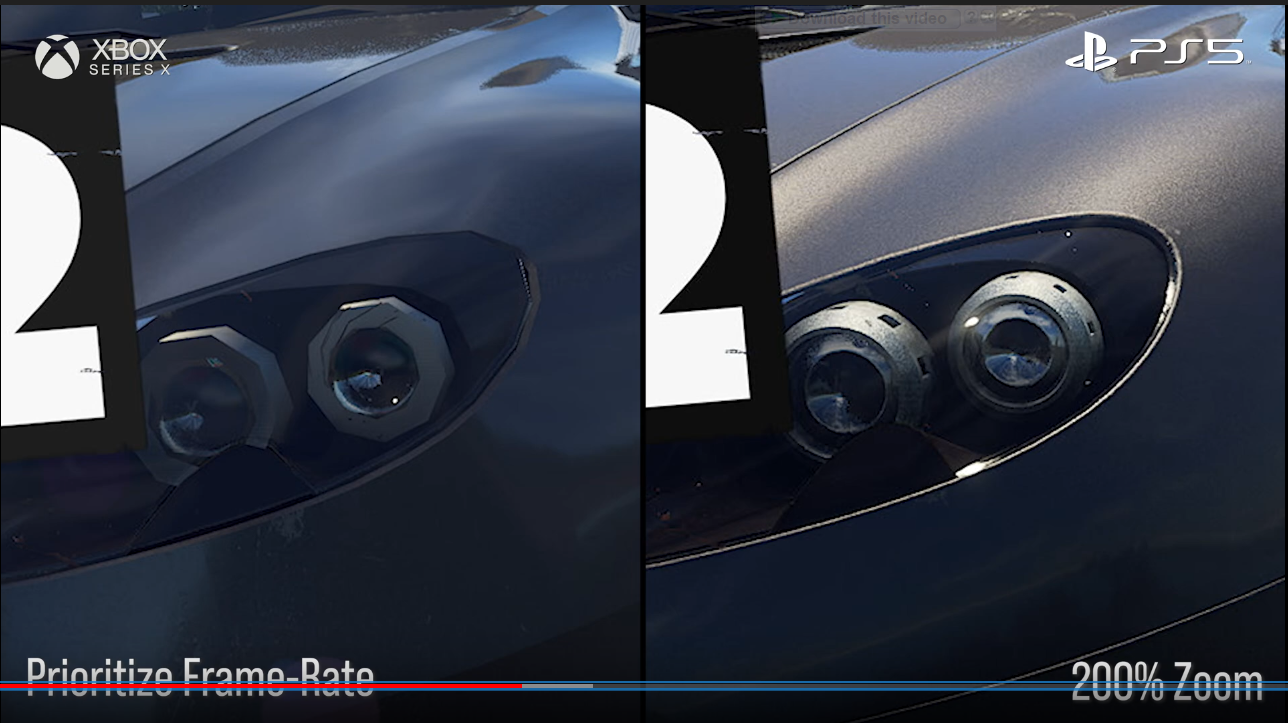

Yeah, that's where this REALLY stings hardest. They've spent almost a full year talking specs, touting their perceived advantages etc., but now we've got multiple 3P games simply running better on PS5. I'm already seeing some shots of DiRT 5 on both platforms, and there are spots where the two versions literally look a generation apart, in PS5's favor. Like, entire levels of geometry detail, texture detail etc. are just missing on MS's platform in some of those spots (at least from the screengrabs I've seen posted elsewhere).

Now I don't even necessarily know if the entire Gamecore explanation is 100% accurate, going by the interview with the DiRT 5 technical director. Apparently for them, the GDK is "just fine" (or something like that), but they did specify that was in relation to what they were trying to do. Some of these DiRT 5 results on Series X say otherwise, though; I don't see how the GDK can be "just fine" if two next-gen peers are producing almost generations-apart levels of detail and texture quality.

Really hoping this, at the very least, convinces MS to stop leaning so heavily on 3P games to show off their system's capabilities; it's fairly clear they can't do that if the tools aren't there yet. Cyberpunk might be their best shot at turning some of these unfortunate optics around, but even that has a good chance of looking & running better on PS5, going by these trends. It's time they start relying on their 1P to show what their design is actually capable of. They gotta take a page from Sega here (it was literally 1P titles like VF2 that helped start curbing some of the bad optics the Saturn had at that point from a technical POV, among some gamers and large parts of the gaming press of the day).

Truly, I hope you're right that the Gamecore stuff is just functional enough to get the games out the door for launch and that there's a large amount of improvement/optimization still to be had. MS have so many other things going well for them regarding Xbox (studio acquisitions, a few nice surprise 1P games of very high quality this year, Game Pass, xCloud etc.); I'd hate to see bad optics about Series X being an underperformer hurt their long-term plans. But early adopters, who traditionally drive console sales at the start of a gen, DO care about these performance metrics, and where they go, the casual/mainstream crowd usually follows. That's why, more than anything, MS needs to start reversing course on this.

Doubling the memory would have been a straightforward way to ease development and gain the upper hand in one department, but they would then have had to do the same with XSS, which I guess would have resulted in a considerably higher loss.

If they wanted the absolute power crown beyond the spec sheet, what they should have done is go with 48 CUs (44 active) and a 256-bit bus with 16 Gbps chips. Clock it similarly to PS5, retain rasterization parity and gain a shader advantage while not having to deal with virtual memory pools (plus have a chip that's 15 mm² bigger instead of 50 mm²).
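A quick Python sketch of how that suggestion compares on paper. PS5 and Series X figures are the public specs (PS5's 2.23 GHz is its peak variable clock); the 44-CU config is the post's hypothetical, and I've assumed "clock it similarly to PS5" means the same 2.23 GHz peak. TFLOPS uses the usual RDNA convention of 64 FP32 lanes per CU doing one fused multiply-add (2 FLOPs) per clock.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    name: str
    active_cus: int
    clock_ghz: float      # peak GPU clock
    bus_bits: int         # memory bus width in bits
    gbps_per_pin: float   # GDDR6 data rate per pin

    def tflops(self) -> float:
        # 64 FP32 lanes per CU x 2 FLOPs (FMA) per lane per clock
        return self.active_cus * 64 * 2 * self.clock_ghz / 1000

    def bandwidth_gbs(self) -> float:
        # bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte
        return self.bus_bits * self.gbps_per_pin / 8


PS5 = GpuConfig("PS5", 36, 2.23, 256, 14.0)
SERIES_X = GpuConfig("Series X", 52, 1.825, 320, 14.0)
# Hypothetical: 44 active CUs, 256-bit bus, 16 Gbps chips, PS5-like peak clock
HYPOTHETICAL = GpuConfig("44 CU @ PS5 clock", 44, 2.23, 256, 16.0)

for gpu in (PS5, SERIES_X, HYPOTHETICAL):
    print(f"{gpu.name}: {gpu.tflops():.2f} TF, {gpu.bandwidth_gbs():.0f} GB/s")
# PS5: 10.28 TF, 448 GB/s
# Series X: 12.15 TF, 560 GB/s
# 44 CU @ PS5 clock: 12.56 TF, 512 GB/s
```

On paper the hypothetical edges out Series X on compute while giving up some peak bandwidth (512 vs 560 GB/s), but that 512 GB/s would apply to the whole 16 GB rather than only to a 10 GB pool.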

I really like the PS5 design; it's very nice and straightforward.

Yeah, but if they took this route, it wouldn't fit as well with their dual-purpose use of the Series X APU for Azure. Thinking on it now, it does seem pretty true that for as much as BC may have limited Sony's design choices, it has also limited MS's. They wanted 4 XBO S instances on a single APU, so they needed to go relatively wide on CU count. They knew they'd be using the APU in Azure servers, so it has to maintain constant, guaranteed levels of performance; that means lower GPU clocks (relative to PS5's; they likely would've stuck with 14 Gbps chips too, since server systems tend to use slower main memory than consumer PCs, for example). They knew they wanted to make a smaller, cheaper variant, so storage had to be developed to accommodate both models while hitting a nice, simple TDP, hence the (on-paper) more conservative raw SSD I/O specs relative to Sony's and the proprietary expansion card design.
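The "4 XBO S instances means going wide" point can be sanity-checked with a bit of Python, under one big assumption: that each instance gets its own dedicated slice of CUs at least as large as an Xbox One S GPU (12 CUs at 914 MHz). MS hasn't published how the APU is actually partitioned for xCloud, so treat the even-split `partition` helper as a hypothetical model, not the real scheme.

```python
XBO_S_CUS = 12            # Xbox One S GPU: 12 CUs
XBO_S_CLOCK_GHZ = 0.914   # at 914 MHz
SERIES_X_CUS = 52         # active CUs on the Series X APU
SERIES_X_CLOCK_GHZ = 1.825
PS5_CUS = 36              # active CUs on PS5, for comparison


def tflops(cus: int, clock_ghz: float) -> float:
    """FP32 TFLOPS: 64 lanes per CU x 2 FLOPs (FMA) per lane per clock."""
    return cus * 64 * 2 * clock_ghz / 1000


def partition(total_cus: int, instances: int) -> int:
    """CUs per instance under a hypothetical even, dedicated split."""
    return total_cus // instances


for name, cus in (("Series X", SERIES_X_CUS), ("PS5-width", PS5_CUS)):
    per_instance = partition(cus, 4)
    print(name, per_instance, per_instance >= XBO_S_CUS)
# Series X 13 True
# PS5-width 9 False

# Per-instance compute at Series X clocks vs. a real One S
print(round(tflops(13, SERIES_X_CLOCK_GHZ), 2), round(tflops(XBO_S_CUS, XBO_S_CLOCK_GHZ), 2))
# 3.04 1.4
```

Under that CU-partition assumption, a 36-CU chip can't give four instances 12 CUs each, while 52 CUs can, and even at Series X's lower clock each slice has plenty of headroom over a One S.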

I'm curious whether it will ever be possible for GPUs (not just AMD; Nvidia and Intel, too) to allow a sort of variable-frequency approach that applies to specific portions or "blocks" of the GPU. It's something I've been thinking about for the past few days: say you went for a wider, slower design, but certain workloads benefit from a faster clock and you can't clock the entire GPU higher without blowing your power budget. Why not significantly reduce the clock rate on some of the GPU "blocks", significantly increase it on others, and gate compute to just the blocks with the higher clocks?

This should be theoretically possible in some way, right? Maybe in the future? And in a way that's dynamically adjustable in real time, on a per-cycle basis. I guess power delivery to the GPU would have to be redesigned, but hopefully there's a future in this sort of design. If it were possible on Series X, I think it would help a lot with the way some of these early games seem to be designed.
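Here's a toy Python model of that per-block idea, just to see the trade-off. The big assumption is the first-order rule of thumb that dynamic power per block scales roughly with f³ (P ≈ C·V²·f, with voltage taken to rise roughly linearly with frequency); leakage, minimum voltages and per-block power delivery are all ignored, and the block counts are made up for illustration. The function names are hypothetical.

```python
def fast_block_clock(n_blocks: int, slowed: int, slow_clock: float,
                     base_clock: float = 1.0) -> float:
    """Clock the non-slowed blocks can reach within the same power budget.

    Toy model (assumption): power per block ~ clock**3. Clocks are relative
    to base_clock; slow_clock = 0 means the slowed blocks are power-gated.
    """
    if not 0 <= slowed < n_blocks:
        raise ValueError("need at least one block left at the higher clock")
    if not 0 <= slow_clock <= base_clock:
        raise ValueError("slowed blocks must run at or below the base clock")
    budget = n_blocks * base_clock ** 3
    remaining = budget - slowed * slow_clock ** 3
    return (remaining / (n_blocks - slowed)) ** (1 / 3)


def fast_throughput(n_blocks: int, slowed: int, fast_clock: float) -> float:
    """Relative compute if work is gated to the fast blocks only (baseline = n_blocks)."""
    return (n_blocks - slowed) * fast_clock


# 4 blocks: drop two to half clock, see how far the other two can go
print(round(fast_block_clock(4, 2, 0.5), 3))   # 1.233

# Power-gate two blocks entirely
boost = fast_block_clock(4, 2, 0.0)
print(round(boost, 3), round(fast_throughput(4, 2, boost), 2))   # 1.26 2.52
```

Under this model the fast blocks gain roughly 23-26% clock, but total compute falls (2.52 vs a baseline of 4.0 in the gated case), so it would only pay off for work that's limited by clock speed rather than by how many CUs are available.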

120fps mode:

Image quality 60fps mode:

The latter is basically a 900p vs 1080p difference in relative perceptible resolution (the actual resolution is higher on both machines, somewhere between 1440p and 4K).
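For a sense of scale, a short Python sketch of what a "900p vs 1080p" gap means in pixel terms, and what the same relative gap would look like at the 1440p and 4K ends of the range. The scaled resolutions are purely illustrative arithmetic, not measured resolutions from either console.

```python
import math
from fractions import Fraction


def pixel_ratio(low: tuple[int, int], high: tuple[int, int]) -> Fraction:
    """How many times more pixels `high` renders than `low`."""
    return Fraction(high[0] * high[1], low[0] * low[1])


def scaled_down(res: tuple[int, int], axis_ratio: Fraction) -> tuple[int, int]:
    """Resolution with the same per-axis gap below `res` (rounded down)."""
    return (math.floor(res[0] / axis_ratio), math.floor(res[1] / axis_ratio))


gap = pixel_ratio((1600, 900), (1920, 1080))
axis = Fraction(1080, 900)   # 6/5, i.e. 1.2x per axis

print(float(gap))                        # 1.44 -> ~44% more pixels
print(scaled_down((2560, 1440), axis))   # (2133, 1200)
print(scaled_down((3840, 2160), axis))   # (3200, 1800)
```

Using `Fraction` keeps the 6/5 ratio exact, so the 4K case lands on 3200x1800 without floating-point rounding noise.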

Yep, these are the shots I saw earlier, and it hurts pretty hard. They almost look a generation apart.

We might have a good understanding of why this is happening, in terms of the circumstances producing these results, but most of the people watching this stuff on YT, and most gamers in general, don't know and don't care.

They're just going to see stuff like this and assume it's down to Series X having a poorer architecture and being weaker outright, and it stings even harder if they're aware of some of MS's messaging over the year. So I'm really hoping 3P performance on Series X improves as the kinks get ironed out.

But more so than that, I'm ready to see some of that next-gen 1P content, MS. You gotta make something happen at TGA, and that Halo Infinite update? It better be amazing. Can't afford any "meh, it's alright" responses from the public at large, not anymore.
