Ultimately, what I think Intel is missing are compelling features to be standardized. When do you think was the last time Intel made as good a case for a feature as the others did with RT or Work Graphs?

Intel has zero market share, so their immediate priority should be sales volume, not margin. They can get there if they keep costs under control while delivering decent performance. That gives them some leeway to undercut Nvidia and AMD pricing. But that’s a big if. Without a cost-efficient design, they’ll be right back here next generation.


Intel ARC GPUs, Xe Architecture for dGPUs [2022-]

- Thread starter BRiT


most of y'all will even agree in your rare moments of lucidity,

This kind of condescending tone needs to go away.

Yes, if indeed the majority here were really so obtuse, why even discuss with such people? I'm pretty sure a discussion between a lucid person and others who have very few moments of lucidity cannot be about sharing information and will rarely be in good faith.

So maybe you're exaggerating with statements like this, or there's no point in you continuing to post here, as the people are so far below you. Which is it?

I literally keep saying it over and over

You don't have to; it's your choice whether to repeat it or not. You can remind us from time to time with "my position is..."/"I feel that this..." to restate your opinion about prices.

But this is just your opinion; in the end it's not that relevant on a tech forum. It's useful for understanding where you are coming from when interpreting a post of yours, but that's about it, in my view.

literally keep saying it over and over and y'all seem to just keep ignoring it every time, making a strawman out of my arguments, as arguing against what I'm really saying is much harder to push against. It's becoming quite obnoxious, honestly.

You'd have to address the strawmen and misinterpretations of your words when they occur.

This is not fair to say, and it's not believable. Again, such people would not be worth your time if we really were twisting or ignoring everything you have to say.

I don't know HOW MANY TIMES I have to keep saying that I'd have been OK with some level of price increase for the very real cost pressure increases that have happened.

But this just means you don’t like paying more for stuff. None of us does. Nobody here is celebrating price increases. That’s simply ridiculous.

You’re railing against “greedy corporations” because you think they can and should sell us stuff for less money. You’re not saying “why” they should sell us stuff for less money. There’s no ethical argument to make here for graphics card prices. You need to make an economic argument.

Ultimately, what I think Intel is missing are compelling features to be standardized. When do you think was the last time Intel made as good a case for a feature as the others did with RT or Work Graphs?

Compelling to devs or consumers? Intel has a decent marketing machine and they currently check most of the boxes consumers care about aside from the big one - performance. If they address that then it’s game on.

They don’t need to become a developer darling overnight and there’s lots of existing features and APIs for them to flex their stuff. They’re already showing signs of savvy marketing with the Tom Peterson roadshows.

Compelling to devs or consumers? Intel has a decent marketing machine and they currently check most of the boxes consumers care about aside from the big one - performance. If they address that then it’s game on.

Ideally both. It's harder to reach feature-implementation parity among the different vendors, since some features may not map well onto every hardware design. That is a function of performance, and as an architect you can't easily work around it by duplicating all sorts of logic circuits with a limited transistor budget...

They don’t need to become a developer darling overnight and there’s lots of existing features and APIs for them to flex their stuff. They’re already showing signs of savvy marketing with the Tom Peterson roadshows.

Sure, but it's much easier to undercut their competitors if they were the ones solely making up the new API rules instead of letting the competitors do it...

You’re railing against “greedy corporations” because you think they can and should sell us stuff for less money. You’re not saying “why” they should sell us stuff for less money. There’s no ethical argument to make here for graphics card prices. You need to make an economic argument.

It also needs to be backed by a legal argument for how the corporation(s) can route around lawsuits from shareholders if they wilfully choose to make less profit than they could in normal market conditions. Opportunity costs, I believe they're called.

Cheap graphics cards are not a human right, they are not going to be air-dropped from the back of a C-130 by the United Nations.

You’re railing against “greedy corporations” because you think they can and should sell us stuff for less money. You’re not saying “why” they should sell us stuff for less money.

OH MY GOD. lol

I have very explicitly described in detail WHY this should be happening, but y'all also told me in doing so that such things shouldn't be talked about for some bizarre reason. I was literally told off for doing so in like the past week. I brought up fact-based arguments and was told to stop it.

It's wild. It's just so absurdly wild I'm nearly at a loss for words. Y'all have done everything possible to ensure that complaining about GPU prices is basically not even allowed.

It also needs to be backed by a legal argument for how the corporation(s) can route around lawsuits from shareholders if they wilfully choose to make less profit than they could in normal market conditions. Opportunity costs, I believe they're called.

And here we literally have somebody trying to argue that GPUs SHOULD be priced literally as high as humanly possible.

Cheap graphics cards are not a human right, they are not going to be air-dropped from the back of a C-130 by the United Nations.

So please don't tell me I'm crazy for saying that people are defending high GPU prices. And this post is getting upvoted by many people as well. It's literally THE popular opinion here that GPU prices should be as exploitative as possible for the sake of shareholders, and at the expense of consumers. And yet I'm the crazy one for saying that GPU prices are unreasonable. smh

I honestly feel like I'm living in crazy world.

The bigger problem is this argument is consistently derailing many threads that are supposed to be about something else. Some posts make good points, others are just pointless rants, but *none* of them belong in the architecture forum or in an Intel-specific thread.

At this point it's not going away, so we'll probably just have to create a new dedicated thread in the Graphics & Semiconductor Industry forum and make sure the discussion stays contained there... I'm not sure how best to describe the scope of that thread or whether all the other moderators agree (or what rules, if any, to apply), so I'm not going to do it right now, but hopefully tomorrow or Monday.

It'd be great if we could stop overfocusing on this until then...

At this point it's not going away, so we'll probably just have to create a new dedicated thread in the Graphics & Semiconductor Industry forum and make sure the discussion stays contained there... I'm not sure how best to describe the scope of that thread or whether all the other moderators agree (or what rules, if any, to apply), so I'm not going to do it right now, but hopefully tomorrow or Monday.

It'd be great if we could stop overfocusing on this until then...

I have very explicitly described in detail WHY this should be happening.

I must have missed that. A dedicated thread on the economics of consumer GPU pricing is a good idea.

Man from Atlantis

Veteran

Link is 2 months old, but I haven't seen this discussed here.

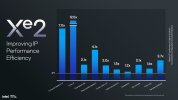

Intel Xe2 GPUs Official: 50% Performance Uplift, New Ray Tracing Cores, Coming To Lunar Lake First & Battlemage Discrete Cards Later

Intel's Xe2 is official and will be coming to Lunar Lake CPUs and the next-gen Arc discrete graphics lineup codenamed "Battlemage".

Please be good, please be good, please be good, please be good, please be good...

Link is 2 months old, but I haven't seen this discussed here.

Intel Xe2 GPUs Official: 50% Performance Uplift, New Ray Tracing Cores, Coming To Lunar Lake First & Battlemage Discrete Cards Later

Intel's Xe2 is official and will be coming to Lunar Lake CPUs and the next-gen Arc discrete graphics lineup codenamed "Battlemage".

wccftech.com

Ultimately, what I think Intel is missing are compelling features to be standardized. When do you think was the last time Intel made as good a case for a feature as the others did with RT or Work Graphs?

Even though it doesn't really help the GPU, I think there's a big opportunity for offloading XeSS to the NPU. Would be a marketing win at the very least.

Question is, to what extent NVIDIA allows the modern hooks they have into game companies and their engines to be used by Intel. In and of itself it shouldn't be too hard to develop XeSS for "ray reconstruction" ... but depending on contracts and patents, it might be impossible.

Please be good, please be good, please be good, please be good, please be good...

Given the large IP and node improvements (25-30% for N3B vs. N5, which Meteor Lake's GPU tile is on), Xe2 should be fairly competent, especially at lower power levels. And given how underwhelming Strix Point's GPU performance is, it should be competitive.

They have added ExecuteIndirect support in hardware, which, if I understand correctly, will help with dynamic parallelism in OpenCL parlance.

Hopefully they add OpenCL 2.0 support to this and Xe GPUs.

Given the large IP and node improvements (25-30% for N3B vs. N5, which Meteor Lake's GPU tile is on), Xe2 should be fairly competent, especially at lower power levels. And given how underwhelming Strix Point's GPU performance is, it should be competitive.

24% more performance at 45% lower power consumption on the same node is underwhelming?
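For scale: taking those two figures at face value, and assuming they describe the same operating point (the post doesn't say whether they do), the implied performance-per-watt gain works out as:

$$\frac{(\text{perf}/\text{W})_{\text{new}}}{(\text{perf}/\text{W})_{\text{old}}} = \frac{1 + 0.24}{1 - 0.45} = \frac{1.24}{0.55} \approx 2.25$$

If instead they are two separate data points (more performance at equal power, or equal performance at lower power), they don't combine this way: they would read as roughly 1.24× at equal power or 1/0.55 ≈ 1.82× at equal performance.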

Frenetic Pony

Veteran

Please be good, please be good, please be good, please be good, please be good...

Supposedly equivalent to a less power-efficient RDNA 3.5 (slightly lower benchmarks than that AI 370 chip at higher power). But hey, that's still a major improvement. I'd consider buying one if they manage to release even a mid-range card this year, but as it is, next year will see a lot of competition.

Even though it doesn't really help the GPU, I think there's a big opportunity for offloading XeSS to the NPU. Would be a marketing win at the very least.

Have they actually demonstrated an XeSS implementation on NPUs for this to be true?

Question is, to what extent NVIDIA allows the modern hooks they have into game companies and their engines to be used by Intel. In and of itself it shouldn't be too hard to develop XeSS for "ray reconstruction" ... but depending on contracts and patents, it might be impossible.

Why would Intel try to reverse engineer any of Nvidia's interfaces when it means playing by their rules? Attempting graphical feature 'replacements' per game will quickly lead to an unmaintainable driver/software stack...

Intel may not remain in the discrete graphics hardware space for much longer if they don't show some semblance of leadership in major API feature implementations. Just as there are both slow and fast paths in the driver/hardware, performance is ultimately a function of API features too...

They have added ExecuteIndirect support in hardware, which, if I understand correctly, will help with dynamic parallelism in OpenCL parlance.

ExecuteIndirect really isn't related to dynamic parallelism. ExecuteIndirect is conceptually about the device/GPU self-generating draw/dispatch commands without host/CPU intervention. Dynamic/nested parallelism is about chaining together different device/GPU kernel dispatches, which is conceptually closer to how Work Graphs operate, where nodes (representing shader programs) can launch other nodes...

Hopefully they add OpenCL 2.0 support to this and Xe GPUs.

Intel dropped dynamic parallelism because they thought that the feature was overly complex to implement and maintain ...
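To make the ExecuteIndirect vs. dynamic parallelism/Work Graphs distinction concrete, here is a toy Python model. It is not a real graphics API: `dispatch`, the kernels, and the node functions are all hypothetical stand-ins, and a "dispatch" just calls a Python function once per thread group. In the ExecuteIndirect-style path the CPU records a fixed sequence of commands up front and only the size of the second dispatch comes from a buffer written by the first; in the work-graph-style path, nodes emit records that launch other nodes until the queue drains.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class DispatchArgs:
    # Thread-group counts, loosely mirroring the shape of D3D12's
    # D3D12_DISPATCH_ARGUMENTS (ThreadGroupCountX/Y/Z). Toy model only.
    x: int = 0
    y: int = 1
    z: int = 1


def dispatch(kernel, groups, *buffers):
    """Run `kernel` once per thread group (group indices flattened to 1D)."""
    for gid in range(groups.x * groups.y * groups.z):
        kernel(gid, *buffers)


# --- ExecuteIndirect-style: the GPU writes the arguments of a later command ---
def cull_kernel(gid, objects, visible, indirect_args):
    if objects[gid] % 2 == 0:  # hypothetical stand-in for a visibility test
        visible.append(objects[gid])
        indirect_args.x += 1   # one thread group per surviving object


def shade_kernel(gid, visible, out):
    out.append(visible[gid] * 10)


def execute_indirect_frame(objects):
    visible, out = [], []
    args = DispatchArgs(x=0)
    # The command sequence (cull, then shade) is fixed up front; only the
    # shade dispatch's size comes from the buffer the cull pass wrote,
    # with no read-back to the CPU in between.
    dispatch(cull_kernel, DispatchArgs(x=len(objects)), objects, visible, args)
    dispatch(shade_kernel, args, visible, out)
    return out


# --- Work-graph style: nodes emit records that launch other nodes ---
def split_node(payload, results):
    return [("leaf", item) for item in payload]  # fan out one record per item


def leaf_node(payload, results):
    results.append(payload * 10)
    return []  # leaf node: launches nothing further


def run_work_graph(entry_records, nodes):
    # The scheduler drains a queue of (node_name, payload) records. Records
    # produced by a node are enqueued, so the amount and shape of the work
    # is decided while the graph runs rather than recorded up front.
    queue = deque(entry_records)
    results = []
    while queue:
        name, payload = queue.popleft()
        queue.extend(nodes[name](payload, results))
    return results


print(execute_indirect_frame([1, 2, 3, 4]))  # [20, 40]
print(run_work_graph([("split", [1, 2, 3])],
                     {"split": split_node, "leaf": leaf_node}))  # [10, 20, 30]
```

The point of the contrast is where the decisions live: with ExecuteIndirect the GPU only fills in arguments for commands the CPU already recorded, whereas in the work-graph model a running node decides what runs next.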
