that was like a rip from nvidia

But in first benchmarks Kepler looks quite capable

http://videocardz.com/31010/geforce-gtx-680-benchmark-leaks-out-leaves-hd-7970-behind

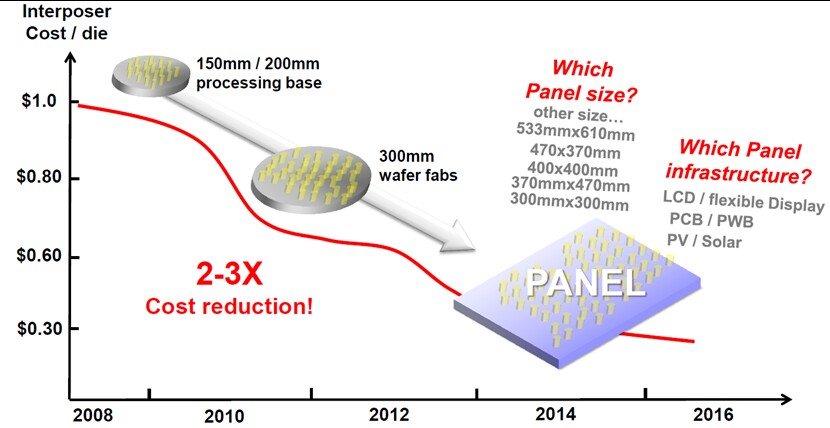

This graph is based on a 300mm interposer

Even though it is fairly cheap, it gets really cheap once you go to glass (if you can for your process).

Remember also that you can use both sides of an interposer, so a chase to DRAM could be as short as 90um, which is shorter than some L2 chases and a huge power saving (10x or more).

Is cost still an issue? Hmmm, it could be. SemiMD and Ultratech say a 300mm 65nm interposer wafer costs $10,000, which is several times the cost of a 28nm high-k/metal gate wafer! The high cost is apparently because economies of scale haven't kicked in and few interposer suppliers exist. It's not surprising that Xilinx is targeting the 2.5D FPGA towards cost-insensitive applications, such as ASIC prototyping, military equipment, and high-end computing and communications.

The next question to ask is: will cost go down when economies of scale kick in? Yole Developpement, an analyst firm, claims interposers built with depreciated 300mm equipment could cost as low as $615 per wafer. They mention this is below the $700 per wafer (1 cent per sq. mm) required for large scale adoption. Another analysis on interposer cost from eSilicon reached similar conclusions.
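As a rough sanity check on the per-area figures quoted above, here is a minimal Python sketch. It assumes the gross area of a round 300mm wafer, with no edge exclusion or yield loss; the function name is only for illustration.

```python
import math

def cost_per_mm2(wafer_cost_usd, wafer_diameter_mm=300.0):
    """Cost per square millimetre of gross wafer area.

    Assumption: the whole circular wafer is usable (no edge loss, no yield).
    """
    area_mm2 = math.pi * (wafer_diameter_mm / 2) ** 2
    return wafer_cost_usd / area_mm2

# Wafer prices as quoted in the post above.
quoted_prices = [
    ("SemiMD/Ultratech 65nm interposer wafer", 10_000),
    ("Adoption threshold cited by Yole", 700),
    ("Yole estimate, depreciated equipment", 615),
]

for label, price in quoted_prices:
    cents = cost_per_mm2(price) * 100
    print(f"{label}: {cents:.2f} cents per mm^2")
```

On those assumptions the $700 wafer works out to roughly 1 cent per sq. mm, which matches the figure in the quote, and the $10,000 wafer is about 14 times that.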

Where did you find that picture? Flexible LCD displays?

I can hardly believe those are silicon interposers meant for 3D stacking.

I've seen one reference to the price here.

Silicon interposers don't allow 3D stacking (you could in theory do the bottom mount thing, but it would be easier to just flip chip mount the ICs together without an interposer in between ... and you can't get a deeper stack than 2 either way). Mostly it just allows a higher I/O density than traditional MCM substrates.

Was just reading a fairly new review on 60GB SSD drives. At about $100 (retail), 60GB "Boot Drive" SSDs have some really great performance (here, here and here), with random 4KB reads and writes up to over 300MB/s, 128KB sequential reads over 500MB/s, and a laughably low seek time (e.g. 0.05ms, which is over 200x faster than a HDD and about 3000x faster than an optical drive). Oh, and they clock in at about ~1W of power draw. But still, even at volume pricing they are too expensive for a console unless the SSD was a major part of the platform. And even then you are still going to need another chunk of change for a HDD (unless you go with an Arcade/Pro model where the Arcade has an SSD and the Pro an SSD and HDD). It is really too bad they are so expensive, but it kind of puts into perspective that a huge amount of RAM, even if there is a secondary pool for such, is a much cheaper solution. Which is too bad, because an SSD is the best single upgrade I have seen for a PC in a decade imo. I guess you could argue for going with a smaller SSD, but the chips aren't the only cost; there is the memory interface as well.
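To put the seek-time ratios above into numbers, here is a small Python sketch. It only back-calculates from the 0.05ms figure and the 200x / 3000x ratios in the post; the "one 4KB read per seek, transfer time ignored" model is my simplifying assumption, not a measurement.

```python
SSD_SEEK_MS = 0.05  # figure quoted in the post

def implied_seek_ms(times_slower_than_ssd):
    """Seek time implied by 'the SSD is N times faster'."""
    return SSD_SEEK_MS * times_slower_than_ssd

def seek_bound_4k_mb_s(seek_ms):
    """Random 4KB throughput if every read costs one full seek.

    Assumption: transfer time is ignored, so this is an upper bound
    for a purely seek-limited device.
    """
    reads_per_second = 1000.0 / seek_ms
    return reads_per_second * 4096 / 1e6

for name, ratio in [("HDD", 200), ("Optical", 3000)]:
    seek = implied_seek_ms(ratio)
    print(f"{name}: ~{seek:.0f} ms per seek, "
          f"~{seek_bound_4k_mb_s(seek):.3f} MB/s random 4KB")
```

So the ratios imply roughly 10ms per seek for the HDD and 150ms for the optical drive, which is why scattered small reads hurt so much on those devices.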

Memory configuration, both at the chip level (caches) and for the system, is going to define next gen as much as the GPU/CPU "cores" imo. I think the most palatable and cheap solution for system memory is going to be optical for game distribution and storage (cheap, large storage, media use), a large HDD for installs and to blunt the transfer and seek times of the optical drive, and a large amount of RAM so games are a "load once" and then background stream & cache the game. RAM is going to be faster than any SSD, and while no solution is ideal, the cost of the optical drive/HDD is already budgeted, so the only big investment is RAM. RAM, compared to the cost of some sort of SSD storage, is much cheaper, and once you get the content into the RAM it outperforms everything else. I wonder if large chunks of game data can be aligned for optimal reading (e.g. large sequential blocks of data to be streamed in chunks). There would have to be some good tools for such, because data all over the place is going to kill performance and make filling 8GB of RAM painful. I guess it is lucky Blu-ray can be quite large, as it allows for such space-inefficient but speed-friendly "packing" of content, where you may have 2-4GB blocks for gaming segments that have a lot of repeated data from other segments but you keep them as such to maximize transfer.
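A rough way to see why the sequential "packing" matters when filling 8GB of RAM is sketched below. The throughput and seek numbers are placeholder assumptions of mine (about 9MB/s for a 2x Blu-ray drive, 100MB/s for a HDD, and the seek times implied by the ratios quoted earlier), not figures for any actual next-gen hardware.

```python
def load_time_s(size_mb, throughput_mb_s, seeks=0, seek_ms=0.0):
    """Transfer time plus total seek penalty, in seconds."""
    return size_mb / throughput_mb_s + seeks * seek_ms / 1000.0

RAM_FILL_MB = 8 * 1024  # the 8GB of RAM discussed above

# (name, assumed MB/s, assumed seek in ms) -- all hypothetical values
devices = [
    ("Optical (2x BD)", 9.0, 150.0),
    ("HDD", 100.0, 10.0),
]

for name, mb_s, seek_ms in devices:
    packed = load_time_s(RAM_FILL_MB, mb_s)  # one big sequential block
    scattered = load_time_s(RAM_FILL_MB, mb_s, seeks=10_000, seek_ms=seek_ms)
    print(f"{name}: packed {packed:.0f}s, scattered (10k seeks) {scattered:.0f}s")
```

With these made-up numbers the optical drive needs about 15 minutes even in the best case, and 10,000 seeks more than doubles that, which is the argument for duplicating data into big contiguous blocks and for putting a HDD in between.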

I cannot really think of a "cheaper" solution that hits as many of the problem points. It will be interesting how this one plays out.

I'm not following you, what do you mean by doesn't allow 3D stacking? You don't use an interposer for 3D stacking but you use it between 3D stacks.

For 3D stacks of more than 2 chips with high I/O density you need TSVs through the ICs, and if you have TSVs through the ICs there is no need for an interposer. You can just build a stack of ICs connected directly to each other (potentially with organic substrates to bridge power/low-density I/O across a level of the stack).

At 28 nm, and with fewer cache memory transistors for the processors, I don't see why the configuration would be any hotter than the first models of PS3 and Xbox 360.

The pessimism of a lot of contributions to this thread puzzles me. They assume the next Xbox and PS4 will have less silicon budget, less TDP and run cooler than the first PS3 and Xbox 360 models.

XB360 and PS3 cost their companies a lot of money for being hot. More heat means more cooling or more failures. Add in the uncertainty about how well 2013/2014 fabrication tech will scale with manufacturing advancements, and the choice to maintain PS360's silicon budget and heat becomes something of a risk. So why aim for that instead of a simpler system with a shorter lifespan, after which future fabrication technologies will be better understood and a good follow-up console can be planned (if there is one; maybe a five-year console is considered all that's needed before we go streaming a la OnLive)?

Some people are expecting a 100-150 Watt 28nm GPU when even the PS3's RSX was probably around ~70 Watts and Cell ~50 Watts at 90nm.

That is because the system had an overall power usage of 200 Watts under load. Assuming 80% efficiency of the PSU, that comes down to 160 Watts. And that's lowballing it, I think. I'd argue that 40 Watts for "misc" is a bit much. The HDD is a 2.5" laptop drive, which probably uses less than 2.5 Watts, the chipset isn't too huge either, and the BD-ROM doesn't make up the rest. Something between 140 and 150 Watts overall for CELL, RSX and RAM is probably correct, though.
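The arithmetic above, spelled out as a quick Python sketch. The 200 Watts and 80% figures are the ones from the post; the 10 to 20 Watts "misc" range is just what the 140 to 150 Watts estimate implies, and the function names are made up for illustration.

```python
def dc_power_w(wall_power_w, psu_efficiency):
    """Power actually delivered to the components after PSU losses."""
    return wall_power_w * psu_efficiency

def silicon_budget_w(wall_power_w, psu_efficiency, misc_w):
    """What is left for CPU, GPU and RAM once 'misc' parts are subtracted."""
    return dc_power_w(wall_power_w, psu_efficiency) - misc_w

WALL_W = 200.0    # measured under load, per the post
PSU_EFF = 0.80    # assumed efficiency, per the post

print(f"After PSU losses: {dc_power_w(WALL_W, PSU_EFF):.0f} W")
for misc in (40.0, 20.0, 10.0):
    left = silicon_budget_w(WALL_W, PSU_EFF, misc)
    print(f"misc = {misc:.0f} W -> CELL + RSX + RAM = {left:.0f} W")
```

A 40 Watt "misc" share leaves 120 Watts, which lines up with the ~70 + ~50 Watt guess for RSX and Cell; the smaller "misc" share argued for here leaves 140 to 150 Watts.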

NVIDIA GeForce GT 640M 1GB DDR3

(384 CUDA cores, 625/1800MHz core/memory clocks, 128-bit memory bus)

8 geometry units?

AMD achieves quite a punch with 2 in GCN.

We have no precedent to assume that they want to retain the same power budget as current consoles did initially.

8 billion poly walls are in high demand.