GhostofWar

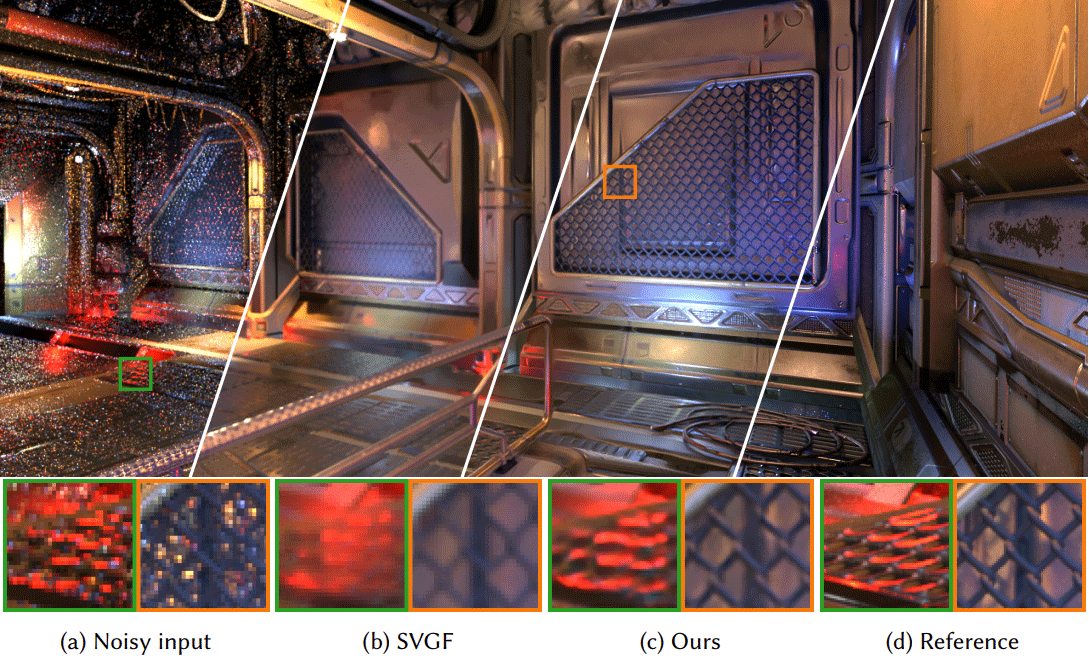

Interesting. Looking at the example, is there any reason why this seems to make the GI (global illumination) look so much more vibrant? Could the accumulation errors mentioned really make that much of a difference? The 4th slice in that first image almost looks like it's on a higher detail setting, with more ray bounces or something.

NVIDIA announces DLSS 3.5 with Ray Reconstruction, launches this fall - VideoCardz.com

NVIDIA DLSS 3.5 announced: A new upscaling technology has emerged, which is essentially an enhancement to NVIDIA's existing DLSS 3. While there haven't been any updates regarding AMD FSR3, and XeSS going open-source, NVIDIA is determined to make headlines with the... (videocardz.com)

I wonder if the image quality improvement will be large enough that you could, say, drop from Quality to Balanced mode and get image quality similar to the older DLSS, but with a bit of a performance bump instead.