FIFA 23: Good. 4K60 maxed out. 190W power consumption.

NBA 2K23: Good. 4K 60fps with 16x MSAA antialiasing (CMAA off), everything maxed out. If you turn CMAA on with 16x AA, the whole screen during the presentation looks as if it were covered by a depth-of-field effect, and you can't see anything. Once the game starts it looks fine, although with 16x AA and CMAA on there is sometimes some odd ghosting. CMAA doesn't appear to be compatible with 16x MSAA. It works perfectly with 2x, 4x and 8x MSAA, though.

Need for Speed Hot Pursuit. Perfect. 4K 60fps all maxed out.

Grim Dawn (x64, DX11): great performance at max settings in 4K. The main menu looks strange, but everything is perfectly legible. The diagonal line of corrupted pixels that seems typical on Intel shows up in the menu, but it disappears in game.

Grim Dawn DX9 renderer. It looks good at 4K with maxed-out settings, although, being DX9, it looks nothing like the DX11 version and lacks many effects (that's not the GPU's fault). Performance is not good, though. 1440p improves things, but it's still not perfect. 1080p is fine. Fully playable in any case.

Garfield Kart Racing. Native 4K works fine. It's a badly optimized game: at 4K it did not run well on the GTX 1080 and you had to set the graphics to medium. With the Intel card you can set everything to max at 4K and it runs super smooth, with 100W of consumption.

Shadows Awakening. 4K max settings. Perfect performance. 185W consumption.

Tell Me Why. Spectacular. 4K max settings. Smooth as silk.

Alien Isolation. 4K max settings. Runs like a dream, this was impossible on the GTX 1080 at 4K. 85W consumption.

Diablo 3. Runs well but it's not perfect. It's smooth but it's kind of twitchy. 4K, 75W consumption.

Sonic All Stars Racing Transformed. Solid but not perfect 4K performance. 60fps. Very low energy consumption.

Star Wars Battlefront II. 4K 60fps, super smooth in DirectX 11. Awesome-looking game. If you set the game to run in DirectX 12, it renders nothing but a blue background and you can't do anything: you see the icons of other players and a landscape, as if you were floating on a cloud. A rare case, because DX12 is usually where the A770 stands out, but here it's the other way around. DX11 wins.

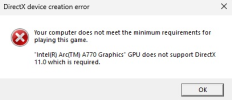

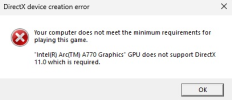

Mass Effect Andromeda. Meh. Very good 4K 60fps performance at max settings, but there's a problem: this little screen appears:

Apparently this also happened with nVidia cards, which they fixed, and that's how I found a solution here:

https://answers.ea.com/t5/Mass-Effe...load-DirectX-error-on-Windows-10/td-p/6021461

In general, most games are running fine for me. Old games can be hit or miss, though, because some of them run at 30-40fps at 4K for no apparent reason. It seems the GPU prefers being fed large chunks of data.

techgage.com