You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Intel ARC GPUs, Xe Architecture for dGPUs [2022-]

- Thread starter BRiT

- Start date

from the looks of it, it sounds like it has half the power of the Arc A770, which makes me think that's a mobile GPU instead of a full-fledged desktop GPU. If not, it would be a bit of a boomer

from the looks of it, it sounds like it has half the power of the Arc A770, which makes me think that's a mobile GPU instead of a full-fledged desktop GPU. If not, it would be a bit of a boomer

Nope, it's as fast as the fastest A750 there with only 192VE instead of 448VE. Maybe lower clock speed as well, base speed is reported as 1.8 Ghz. Unknown turbo if there is though. It runs on a desktop board and desktop CPU and driver string reports desktop chip, there is no doubt it's a desktop card. Mobile Battlemage is reportedly cancelled.

“Meteor Lake gets a formidable iGPU. Besides going wider and faster, Meteor Lake’s iGPU architecture shares a lot in common with Arc A770’s. Intel is signaling that they’re serious about competing in the iGPU space”

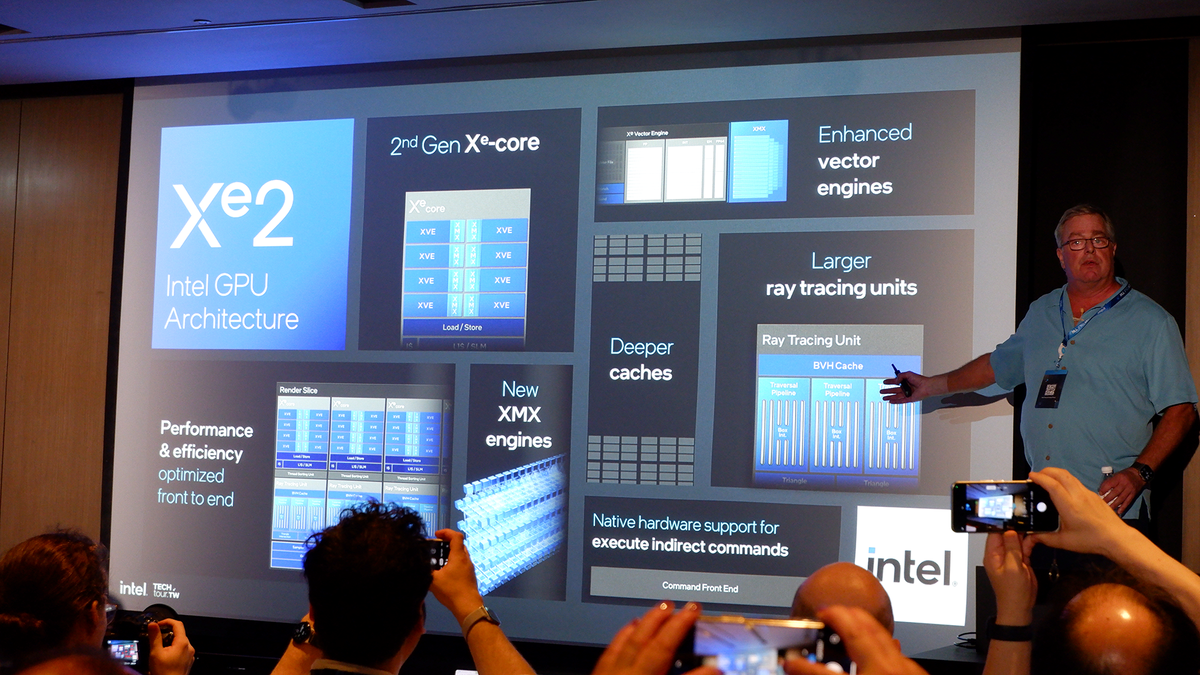

It looks like Battlemage reorganizes the shader core to 8 x 16-wide execution units instead of the previous 16 x 8-wide. That should save some transistors on scheduling hardware for the same peak throughput. L2 cache is also doubled from Meteor Lake's 4MB to 8MB.

Interestingly AMD is claiming that Strix Point is ~36% faster than Meteor Lake while Intel is claiming Lunar Lake is ~50% faster than Meteor Lake. Both are 1024 shader units wide which makes for a nice head-to-head comparison. Fun times ahead.

www.techpowerup.com

www.techpowerup.com

Interestingly AMD is claiming that Strix Point is ~36% faster than Meteor Lake while Intel is claiming Lunar Lake is ~50% faster than Meteor Lake. Both are 1024 shader units wide which makes for a nice head-to-head comparison. Fun times ahead.

Intel Lunar Lake Technical Deep Dive - So many Revolutions in One Chip

Intel today unveiled its ambitious Lunar Lake microarchitecture, which takes the fight to the likes of the Apple M3, the Snapdragon X Elite, and more, bringing high end AI PC experiences within an ultraportable footprint, with smartphone-like battery life and availability. We have all the...

Supporting native 64-bit r/w atomics for textures was severely long overdue since Arc emulating that feature on UE5 titles w/ virtualized geometry was a source of major performance overhead. With advanced GPU-driven rendering on the verge of breaking out, it's good to see better support for the ExecuteIndirect API especially given that games like Starfield served as a painful reminder to them of how lacking they were in that area ...

Supporting native 64-bit r/w atomics for textures was severely long overdue since Arc emulating that feature on UE5 titles w/ virtualized geometry was a source of major performance overhead. With advanced GPU-driven rendering on the verge of breaking out, it's good to see better support for the ExecuteIndirect API especially given that games like Starfield served as a painful reminder to them of how lacking they were in that area ...

It’s kinda crazy that Alchemist was designed without 64-bit atomics as much as Epic has been transparent about its importance to their renderer.

To be fair they started out with overly complex hardware designs (robust ROVs/geometry shaders/tiled resources, ASTC!, multiple wave/threadgroup sizes per-instruction granularity!, 64-bit floats, backwards ISA) so it's not super hard to hit slow paths on their HW/driver ...It’s kinda crazy that Alchemist was designed without 64-bit atomics as much as Epic has been transparent about its importance to their renderer.

They do good quality research (that visibility buffer paper was gold) but they have idiosyncratic ideas (much like those older console systems) when it comes to graphics technology implementation ...

https://www.anandtech.com/show/2143...4-intel-powering-up-intel-18a-wafer-next-week

Panther Lake is Intel's first client platform using its Intel 18A node. Aside from once again affirming that things are on track for a 2026 launch, Pat Gelsinger, Intel's CEO, also confirmed that they will be powering on the first 18A wafer for Panther Lake as early as next week.

Panther Lake is Intel's first client platform using its Intel 18A node. Aside from once again affirming that things are on track for a 2026 launch, Pat Gelsinger, Intel's CEO, also confirmed that they will be powering on the first 18A wafer for Panther Lake as early as next week.

Intel's Sierra Forest are super efficient, their E-Cores only need less than 2W of power per core.

Should be 2025. I think '2026' was a typo.https://www.anandtech.com/show/2143...4-intel-powering-up-intel-18a-wafer-next-week

Panther Lake is Intel's first client platform using its Intel 18A node. Aside from once again affirming that things are on track for a 2026 launch, Pat Gelsinger, Intel's CEO, also confirmed that they will be powering on the first 18A wafer for Panther Lake as early as next week.

Should be 2025. I think '2026' was a typo.

Yeah he said 2025 but I think volume availability is expected to be in Q1'26. Even Meteor Lake "powered on" in Q1'22, and even though it launched in Dec'23, the ramp was slow and realistic availability was Q1'24.

Regardless this is the Xe architecture thread so unless there's info on which GPU arch is in Panther Lake, neither that nor Sierra forest belong here.

Intel has made 'a tremendous amount of fixes for compatibility' in its Battlemage GPU architecture to ensure games run as they should

There's more to a good graphics card than DirectX compatibility, says Intel's Tom Petersen.

"We've made a tremendous amount of fixes for compatibility all across the architecture. So by its nature, we are more, let's call it, compliant with the dominant expectation."

There's hardware support for commonly used commands, such as execute indirect, which causes headaches and slows performance on Alchemist. Another command, Fast Clear, is now supported in the Xe2 hardware, rather than having to be emulated in software as it was on Alchemist.

There's also been a shift from SIMD8 to SIMD16 for the Vector Engine—the core block of Intel's GPU—which should help improve efficiency. Alongside further improvements for bandwidth and, importantly, utilisation.

The dominant expectation Petersen talks about is a sort-of graphics programming zeitgeist—it's whatever the done thing. As Petersen later explains, the done thing in graphics is whatever "the dominant architecture" does, i.e. Nvidia.

That’s an interesting admission. I wonder what specifically about Nvidia’s architecture they found it necessary to match. Should we expect SIMD32 from Celestial?

I wonder if that means they're going to get rid of "cross-shader stage" optimizations too despite the current monolithic PSO model in modern APIs being very accommodating to them and implement specialized RT shader stages in their RT pipeline instead of doing callable shaders everywhere ...

Intel has made 'a tremendous amount of fixes for compatibility' in its Battlemage GPU architecture to ensure games run as they should

There's more to a good graphics card than DirectX compatibility, says Intel's Tom Petersen.www.pcgamer.com

That’s an interesting admission. I wonder what specifically about Nvidia’s architecture they found it necessary to match. Should we expect SIMD32 from Celestial?

They support SIMD32 warps so it's possible. Nvidia though is SIMD16 in h/w so it can go either way really.Should we expect SIMD32 from Celestial?

They support SIMD32 warps so it's possible. Nvidia though is SIMD16 in h/w so it can go either way really.

Oh yeah I forgot Nvidia is SIMD16. Battlemage looks a bit like Turing.

Similar threads

- Replies

- 90

- Views

- 15K

- Locked

- Replies

- 1K

- Views

- 216K

- Replies

- 70

- Views

- 19K

- Sticky

- Replies

- 1K

- Views

- 358K