Season pass is now £5 - yes - FIVE English Pounds.

https://store.playstation.com/#!/en...pass/cid=EP9000-CUSA00003_00-DRIVECLUBSEASONP

Imagine drawing a line right down the centre of your TV; the left section will be for your left eye, the right section for your right, so you look straight ahead. If each eye had the complete screen, your eyes would have to go cross-eyed to see a screen that close to your face.

That's not how 3D works. You need to see the same object with both eyes (although at a different angle) for it to have depth. If you divide the TV screen straight through the middle, you don't have any overlapping information, thus no 3D. See the image that was posted right after your post and note that most of the objects/scene are present in both pictures but from a slightly different angle:

ThePissartist said: From what I understand, both the left and right lens only see half of the screen each; that's why we have 960x1080/eye. The complete screen refreshes at 120Hz, and each individual refresh updates both the left and the right eye (i.e., both "screens"), so it's not doing one and then the other like a TV does.

If we have two separate screens, each at 960x1080, we only need 60Hz per lens for a 60fps game. Internally, however, the engine would still be rendering the equivalent of 120fps, as each 1/60th-second frame would be rendered from two slightly different viewpoints at the same time (and not alternating every 1/120th). That's my guess.

Phil means 120 camera projections and frame draws per second, 60 per second for each eye.

No, it's more like a 1280x1080 buffer at 60Hz, with left-aligned and right-aligned 960x1080 slices taken from it.

The engine is unlikely to be rendering at 120fps unless the game is native 120Hz, so most often it'll be frame interpolation that brings the framerate up to the standard of the display.

I would say that two simultaneous 960x1080 refreshes at 60Hz are not equivalent to 120Hz; they're actually equivalent to 1920x1080 at 60Hz, even if the system is essentially showing 120 separate frames per second (i.e., 60/eye). Otherwise Goldeneye on the N64 would be considered to be rendering 120fps (30x4 players). Which of course, it isn't.
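To put rough numbers on that, here's a quick Python sketch. It's my own illustration, not anything from an actual SDK, and it assumes both eye views are drawn from the same game state each frame. `view_draws` and the variable names are made up for the example.

```python
from fractions import Fraction


def view_draws(fps, eyes=("left", "right")):
    """List (timestamp, eye) pairs for one second of rendering.

    Assumption: both eyes are drawn from the same game state each frame,
    so they share a timestamp instead of alternating every 1/(2*fps).
    """
    return [(Fraction(i, fps), eye) for i in range(fps) for eye in eyes]


draws = view_draws(60)

# Camera projections (view draws) per second: two per frame.
total_draws = len(draws)

# Distinct game states actually shown per second: this is the frame rate.
distinct_times = len({t for t, _ in draws})

# Two 960x1080 views per frame cover the same pixel count as one 1920x1080 frame.
pixels_per_frame = 2 * 960 * 1080

print(total_draws, distinct_times, pixels_per_frame == 1920 * 1080)
```

So there are 120 view draws per second, but only 60 distinct moments in time, which is why it reads as 1920x1080 at 60Hz rather than 120fps.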

You're right in that they're definitely not alternating the views.

That's probably closer to the truth considering the actual windows displayed.

Edit: actually I'm not sure about that...

What do you mean?

So you start out with a 1280x1080 rendered display buffer and take two slices from it. For the left-eye slice, you start at pixel column 41 and take 960 pixel columns to the right, i.e. pixel columns 41 to 1000. For the right-eye slice, you start at pixel column 1240 and take 960 pixel columns to the left, i.e. pixel columns 1240 to 281. This is all done in the breakout box, which has received the 1280x1080 rendered buffer from the PS4. Why the offset/overscan? That's used by the interpolator for head trajectory offset every other frame.
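The column arithmetic above can be checked with a few lines of Python. This is only a sketch of the slicing as described in this post, not documented breakout-box behaviour. `slice_columns` is a made-up name, and the 40-column `margin` is inferred from the column numbers quoted (left slice starting at 41, right slice ending at 1240).

```python
def slice_columns(buffer_width=1280, slice_width=960, margin=40):
    """Return 1-based, inclusive column ranges for the two eye slices.

    Assumption: `margin` columns are left unused at each edge of the buffer,
    which is what the quoted start columns (41 and 1240) imply.
    """
    left = (margin + 1, margin + slice_width)
    right_end = buffer_width - margin
    right = (right_end - slice_width + 1, right_end)
    # Columns that end up in both slices.
    overlap = (max(left[0], right[0]), min(left[1], right[1]))
    return left, right, overlap


left, right, overlap = slice_columns()
overlap_width = overlap[1] - overlap[0] + 1

print("left eye columns: ", left)
print("right eye columns:", right)
print("shared columns:   ", overlap, "width", overlap_width)
```

With the default numbers, the left slice covers columns 41 to 1000, the right slice covers 281 to 1240, and columns 281 to 1000 (720 columns) appear in both.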

Yes, 281-1000 are present on both the left and right eye, but I didn't mention it to avoid confusion.

I'm confused, it sounds like you're suggesting that both the left and the right eyes are reusing the same pixel columns? i.e., 281 - 1000 are present on both, with only 1-280 reserved for the left and 1001-1240 for the right? I can understand the need to save some pixel columns to help with the interpolated frames, but surely it wouldn't be with how you've described it... Maybe I'm not understanding you correctly.

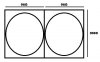

The top diagram is from the screen perspective and the bottom diagram from the render buffer perspective.

I created a couple of quick images in Paint (don't judge me), one being how I understand the VR headsets work and the other being how I interpret what you're saying. Feel free to correct me if I'm being stupid here.

View attachment 1017

Yes, I know all the proportions are wrong, but I think this shows what's happening. Your left eye is looking at the left circle and your right is looking at the right circle. Your brain then merges those two images to give the perception of 3D.

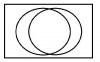

What you're describing seems more like this:

View attachment 1018

Where the left and right eyes are sharing some pixel columns. Similar to how a 3D TV works.

Is this what you're saying?