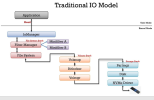

Authorization (auth-z) and authentication (auth-n) are one such requirement, permissions checking of the file itself after handling authN+Z is another, tracking of last file access metadata which can't be bypassed except at an entire volume level, management of the NTFS file caching hierarchy is yet another, and every single part of that is a cakewalk compared to disentangling file-level fragmentation that absolutely exists and must be drilled back into the underlying non-linear sectors of the physical storage.

I honestly still don't get why the end result of this isn't supposed to be cachable? I mean the real mapping from a file handle's address space straight to the tuple of disk, sectors and offsets within?

Neither authorization nor authentication looks like it would need to be done repeatedly.

Metadata constraints should be possible to relax safely with an exclusive lock in place without further side effects, just update on open and close.

Bypassing the NTFS cache hierarchy appears trivial after a flush + locking the file so it can't be changed or moved.

Resolving the file-level fragmentation in a single run for the entire file rather than on-demand doesn't exactly look impossible either, the only prerequisite being that the file record is frozen so that the regular invalidation can't happen.
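
To make the "resolve once, then cache" idea concrete, here is a minimal sketch of such a cached mapping. It assumes the file is frozen and that the run list has already been obtained somehow (on Windows, something like `FSCTL_GET_RETRIEVAL_POINTERS` reports comparable runs). `Extent`, `ExtentCache` and `resolve` are hypothetical names for illustration, not part of any real API.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    """One contiguous run of a fragmented file (hypothetical structure)."""
    file_offset: int  # byte offset within the file where this run starts
    disk_offset: int  # byte offset on the volume where this run starts
    length: int       # length of the run in bytes


class ExtentCache:
    """Mapping resolved once for the whole file, valid while the file is frozen."""

    def __init__(self, extents):
        self.extents = sorted(extents, key=lambda e: e.file_offset)
        self.starts = [e.file_offset for e in self.extents]

    def resolve(self, offset, length):
        """Translate a file byte range into (disk_offset, length) pieces."""
        end = offset + length
        # Index of the last extent starting at or before `offset`.
        i = bisect_right(self.starts, offset) - 1
        if i < 0:
            raise ValueError("offset lies before the first mapped extent")
        pieces = []
        while offset < end:
            if i >= len(self.extents):
                raise ValueError("range runs past the end of the mapping")
            e = self.extents[i]
            if not (e.file_offset <= offset < e.file_offset + e.length):
                raise ValueError("range hits an unmapped hole")
            skip = offset - e.file_offset          # bytes to skip inside this run
            take = min(e.length - skip, end - offset)
            pieces.append((e.disk_offset + skip, take))
            offset += take
            i += 1
        return pieces
```

A read that straddles two fragments then turns into two raw reads without touching the file system again; the lookup itself is a binary search over an array that comfortably stays in cache.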

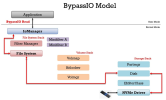

All the truly nasty parts are in the volume stack (and the PartMgr), which is being bypassed entirely. All under the prerequisite "you did not configure anything exotic". BitLocker counting as exotic in this case is somewhat ironic. RAID (other than RAID 1) etc. is impossible to support as well, naturally. This also effectively prevents any funny details in this stack from changing stuff on the fly.

Even though "bypassed" is maybe not the correct term - the aggregated offsets introduced by the skipped stack have to be fed back once to the file system stack in order to be applied there.

That leaves you with just a handful of NTFS features (compression, EFS) which may render the individual file incompatible with raw access. Except that, with the filter manager bypassed, those were already ruled out.

Now, what are we left with? The naked file system driver, with a file in a frozen state, and the entire additional addressing introduced by the lower stack temporarily applied straight to the cached mapping?

Sure, the GPU still can't directly request storage access. But it doesn't need to, does it? Unless I completely misunderstood, and the NVMe protocol does not allow the CPU to post a read request to the NVMe device pointing to the GPU's address space, due to some security feature I couldn't find documented?

Scheduling for the GPU is still (mostly) CPU driven, so why wouldn't you just issue the read from there? Everything expensive is already eliminated from the stack. A solitary syscall remains, but maybe not even that depending on where it's dispatched from. Best case scenario is now closer to <250 cycles in user space, and <1000 cycles in kernel space assuming hot caches (extrapolated from what a comparable, trimmed down stack achieves under Linux).

The expectation is not that you would get to eliminate the CPU from the render feedback -> read -> dispatch loop yet! Even from a security aspect that's just straight out horrifying, and just begs for exploits to happen. The GPU speaking NVMe is a pipe dream for now. Maybe Nvidia has enough firmware space left to implement that for their embedded RISC-V core, but that still won't end up supporting shared access between GPU and CPU. As you correctly said: the stack on the OS side is quite fragile during regular operation.

You do need to get the synchronization of queued transfers to dependent dispatches on the GPU right though.

Unless I'm missing a feature, there are details to consider when you have a non-trivial PCIe topology due to store-n-forward behavior of involved PCIe switches. The CPU might have been able to fetch the completion event from the NVMe device prior to the data having reached the GPU, and even get to do the actual dispatch with some messages still being stalled.
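
A toy timing model of that race, purely as an illustration. It assumes the NVMe device emits the data write and the completion at the same instant, that each switch adds a fixed store-and-forward delay, and that any stall is a single lump of extra latency on one route. `Path`, `dispatch_is_safe` and all numbers are hypothetical and not measured from any hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """A route through the PCIe fabric (hypothetical, simplified)."""
    hops: int               # number of switches on the route
    per_hop_ns: float       # assumed store-and-forward delay per switch
    stall_ns: float = 0.0   # assumed extra stall on this route, e.g. congestion

    def latency_ns(self):
        return self.hops * self.per_hop_ns + self.stall_ns


def dispatch_is_safe(data_path, completion_path, cpu_dispatch_ns):
    """True if the data reaches GPU memory no later than the dependent dispatch.

    data_path:        NVMe -> GPU route taken by the payload
    completion_path:  NVMe -> CPU route taken by the completion event
    cpu_dispatch_ns:  assumed time for the CPU to react and get the dispatch
                      to the GPU once it has seen the completion
    """
    data_arrival = data_path.latency_ns()
    dispatch_arrival = completion_path.latency_ns() + cpu_dispatch_ns
    return data_arrival <= dispatch_arrival


# Payload stalls behind two switches, completion takes a short route to the CPU.
stalled = Path(hops=2, per_hop_ns=150, stall_ns=2000)
short = Path(hops=1, per_hop_ns=150)
print(dispatch_is_safe(stalled, short, cpu_dispatch_ns=1000))
```

With a syscall-free dispatch path in the low hundreds of cycles, the CPU side is fast enough that a single stalled message on the payload route is all it takes for the dispatch to win the race.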

PCIe switches where you don't know how they will behave in your system are a nightmare to work with when orchestrating a protocol with more than 2 peers. That's actually where I suspect "standard features" won't cut it, and why AMD requires you to use a fully known PCIe topology only, with guarantees outside the specification for all involved peers.

How did AMD solve that? My best guess would be that they foremost tweaked the spanning tree to always include the CPU's PCIe root complex (i.e. a direct route between GPU and NVMe is forbidden, even if they happen to hang on the same switch!), so that the CPU is guaranteed to observe an absolute order of events.

Requiring transfer to main system memory is the "backward compatible" method; it only must happen if the GPU cannot directly pull storage assets physically off the NVMe drive into the dGPU memory pool directly.

There are several alternatives in between.

- GPU speaking NVMe is the least likely to happen. You don't just pull, you have to schedule commands and implement a full protocol over PCIe. Also NVMe isn't designed for multi-master access with a single completion queue.

- In between you have the CPU issuing NVMe control commands, and the GPU's memory being the direct target of read commands. Like I said above, bus topology can get you into trouble with order of events. Also as you've stated repeatedly, plenty of work had to go into making the storage stack efficient enough to make this even worthwhile.

- That still leaves you with a bounce via main memory as the default route. DMA write from NVMe to main memory followed by DMA read initiated by GPU from main memory. Memory bandwidth successfully burned, but at least no CPU time wasted and no conceptual issues. Drivers/APIs required some tweaks to get there and be able to share a single buffer, in any case. Also still dependent on file system optimizations.

- The truly "backwards" method of the CPU doing the transfer, which is where we had gotten to after GPUs made their memory host-visible. At this point file system performance didn't even matter that much any more... And yet this is still the route to go if the CPU needs to touch the data for any reason (SATA, CPU-based BitLocker, software RAID, file system compression etc.)

- And lastly the ancient way of having the GPU's driver stack forcibly allocating and requiring you to copy to the staging buffer (while still performing a DMA read), with the double-buffering of a caching file system, giving you the worst of all worlds.
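
The alternatives above can be read as a fallback chain. A rough sketch of how a loader might pick among them, assuming it can query a handful of capability flags; `TransferPath`, `SystemCaps` and `choose_path` are made-up names, and the ordering is just my reading of the list, not how any real runtime decides.

```python
from dataclasses import dataclass
from enum import Enum


class TransferPath(Enum):
    GPU_SPEAKS_NVME = "GPU drives the NVMe queues itself"
    P2P_CPU_ISSUED = "CPU issues NVMe commands, GPU memory is the read target"
    BOUNCE_VIA_MAIN_MEMORY = "NVMe DMA to main memory, GPU DMA read from there"
    CPU_COPY = "CPU copies into host-visible GPU memory"
    STAGING_BUFFER = "copy into a driver-owned staging buffer"


@dataclass(frozen=True)
class SystemCaps:
    """Hypothetical capability flags, all assumptions for this sketch."""
    gpu_speaks_nvme: bool = False          # the pipe dream
    topology_known: bool = False           # PCIe topology with ordering guarantees
    cpu_must_touch_data: bool = False      # SATA, BitLocker, software RAID, compression
    shared_dma_buffer: bool = True         # driver/API can share one buffer for both DMAs
    gpu_memory_host_visible: bool = True


def choose_path(caps):
    """Pick the most direct route the system can actually support."""
    if caps.cpu_must_touch_data:
        # The CPU has to see the bytes anyway, so the direct routes are out.
        if caps.gpu_memory_host_visible:
            return TransferPath.CPU_COPY
        return TransferPath.STAGING_BUFFER
    if caps.gpu_speaks_nvme:
        return TransferPath.GPU_SPEAKS_NVME
    if caps.topology_known:
        return TransferPath.P2P_CPU_ISSUED
    if caps.shared_dma_buffer:
        return TransferPath.BOUNCE_VIA_MAIN_MEMORY
    if caps.gpu_memory_host_visible:
        return TransferPath.CPU_COPY
    return TransferPath.STAGING_BUFFER
```

With the defaults this lands on the bounce via main memory, which matches it being the default route; only a fully known topology unlocks the direct target.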