This doesn't make any sense, though. There are no changes in 1.2 compared to 1.1 that would lead to such gains on an NVMe SSD.

From the video:

"But the most interesting one is a performance improvement that comes by way of moving the copy after GPU decompression onto the compute queue. This will provide a fairly big performance boost to applications that support GPU decompression.

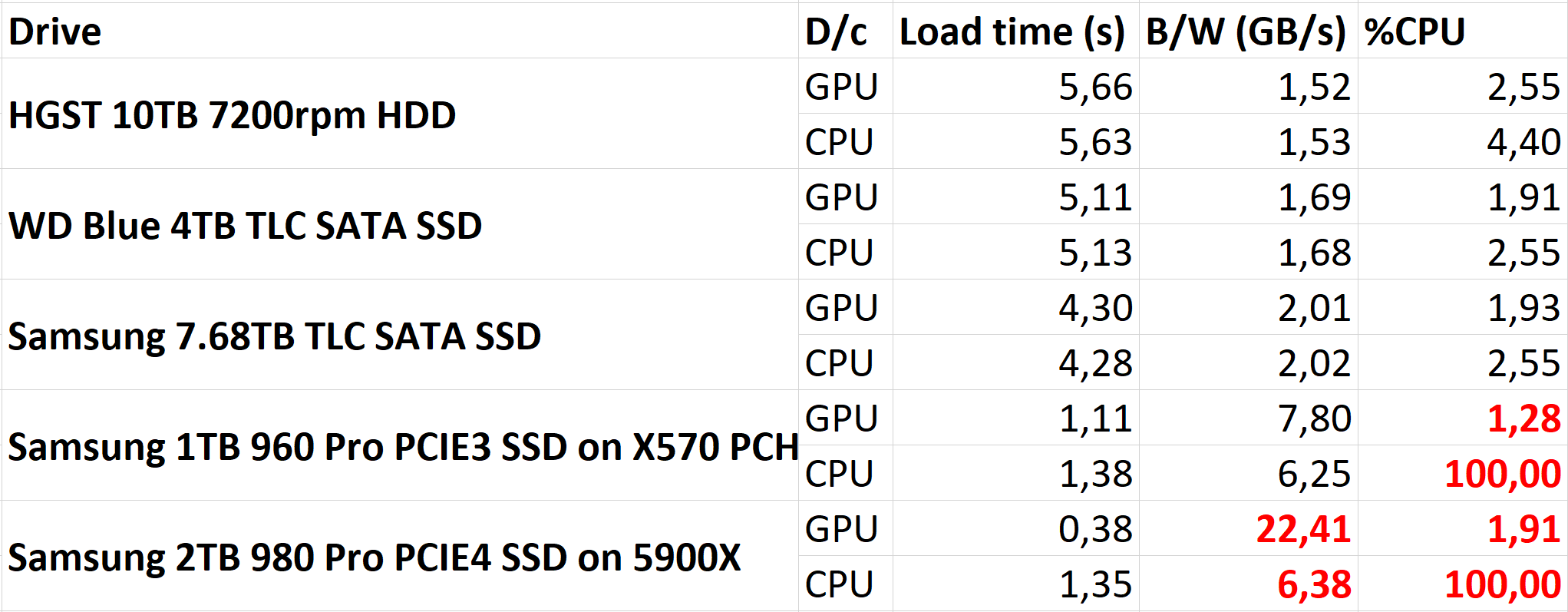

As can be seen from the video, the Gen5 SSD in particular benefits greatly from this change. In DirectStorage 1.1, it was being held back and its performance was virtually identical to the Gen4 drive. Now, the Gen5 SSD is able to flex its muscles a bit more. There are still more improvements coming to DirectStorage. We know that Direct Memory Access support is on the roadmap, according to Microsoft, which should increase performance even further."