I read this more as saying that you can use fixed-function (FF) h/w for this, including already existing h/w if needed, but that you're likely to get better results on a GPU anyway.

"Existing IP" refers to ready-to-license building blocks. Unfortunately, it does not refer to FF H/W that already exists, or that is even already integrated.

It goes via system RAM. But there is basically no CPU impact from that.

Actually that's only the fallback solution.

Unless I'm entirely mistaken, AMD has already demonstrated that they can stream directly from NVMe into the GPU's BAR, for a specific combination of their own chipset, CPU generation, GPU and qualified NVMe devices that work with AMD's own NVMe driver (not the MS one), but still with standard NTFS.

It's not as tricky as people tend to make it out to be, at least for a vendor that controls all of the involved components. It just requires clean tracking of resources across multiple driver stacks. The file system overhead some are so afraid of is not that bad when you can simply build lookup tables in RAM as a one-time cost per application. Even alignment issues and the like don't make much of a difference for typical asset sizes; that's just something the GPU driver needs to mask.
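The "lookup tables in RAM" idea can be modelled roughly like this: resolve a file's extents once, then turn every asset read into whole-block device reads plus a head offset and byte count that the consumer masks. This is a hypothetical sketch; `Extent`, `ExtentMap`, `translate` and the 4 KiB `BLOCK_SIZE` are illustrative assumptions, not any vendor's driver API, and how the extents are obtained from NTFS is left out.

```python
from bisect import bisect_right
from dataclasses import dataclass

BLOCK_SIZE = 4096  # assumed logical block size of the NVMe namespace (hypothetical)

@dataclass(frozen=True)
class Extent:
    file_offset: int  # byte offset in the file where this extent begins
    lba: int          # first device block holding that data
    length: int       # extent length in bytes (a multiple of BLOCK_SIZE)

class ExtentMap:
    """File-offset -> device-block lookup table, built once per application (hypothetical)."""

    def __init__(self, extents):
        self.extents = sorted(extents, key=lambda e: e.file_offset)
        self.starts = [e.file_offset for e in self.extents]

    def translate(self, offset, size):
        """Split the byte range [offset, offset + size) into block-aligned device reads.

        Returns (lba, block_count, skip_head, useful_bytes) tuples. The device only
        reads whole blocks; skip_head/useful_bytes describe which of the transferred
        bytes the consumer (here: the GPU driver) has to mask out or keep.
        """
        reads, end = [], offset + size
        while offset < end:
            i = bisect_right(self.starts, offset) - 1
            if i < 0 or offset >= self.starts[i] + self.extents[i].length:
                raise ValueError(f"offset {offset} is not backed by any extent")
            ext = self.extents[i]
            chunk_end = min(end, ext.file_offset + ext.length)
            rel = offset - ext.file_offset                 # position inside this extent
            first_block = rel // BLOCK_SIZE
            last_block = (chunk_end - ext.file_offset - 1) // BLOCK_SIZE
            reads.append((ext.lba + first_block,
                          last_block - first_block + 1,
                          rel - first_block * BLOCK_SIZE,  # unwanted bytes at the head
                          chunk_end - offset))             # bytes actually requested
            offset = chunk_end
        return reads
```

For a file fragmented into two 8 KiB extents, a 6000-byte asset at offset 4000 becomes two device reads: three blocks are transferred in total, and only the head offset and byte counts tell the GPU side which bytes belong to the asset. The per-request cost is a binary search in RAM, which is why the file system overhead stays small once the table exists.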

I'm not expecting it from Intel any time soon, though; their driver stack looks... fractured. And NVIDIA will likely struggle until this eventually gets properly standardized.

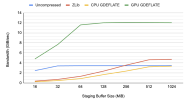

Going through system RAM does have a huge impact on the CPU after all. With PCIe 4.0 x4 on the storage side, roughly 8 GB/s are written into RAM by the SSD and the same 8 GB/s have to be read back out for the upload to the GPU, so that's still 16 GB/s of memory bandwidth (half-duplex!) burnt. Accounting for some inefficiencies, that's almost one DDR4 memory channel's worth of bandwidth lost. It's one of those nasty details you won't see in a synthetic benchmark (due to the lack of easily accessible load statistics!), but which will bite you later on.
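The 16 GB/s figure comes from a simple back-of-envelope calculation. The sketch below assumes DDR4-3200 and an arbitrary 70% sustained efficiency for mixed read/write traffic; both are assumptions for illustration, not measurements.

```python
# Back-of-envelope: memory traffic caused by bouncing SSD reads through system RAM.
# All figures are nominal peaks; EFFICIENCY is an assumed, not measured, factor.

def pcie_bytes_per_s(gt_per_lane: float, lanes: int) -> float:
    """One-direction payload bandwidth of a PCIe 3.0-5.0 link (128b/130b coding, no protocol overhead)."""
    return gt_per_lane * lanes * (128 / 130) / 8

def ddr_channel_bytes_per_s(mt_per_s: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one 64-bit DDR4 channel."""
    return mt_per_s * bus_bytes

ssd_link = pcie_bytes_per_s(16e9, 4)            # PCIe 4.0 x4: ~7.9 GB/s per direction
# Each byte is written into RAM by the SSD's DMA, then read again for the upload to the GPU.
# DRAM is half-duplex, so both directions come out of the same channel bandwidth budget.
ram_traffic = 2 * ssd_link                      # ~15.8 GB/s
channel_peak = ddr_channel_bytes_per_s(3200e6)  # DDR4-3200: 25.6 GB/s
EFFICIENCY = 0.7                                # assumption: sustained mixed read/write vs. peak
channel_usable = channel_peak * EFFICIENCY

print(f"SSD link           {ssd_link / 1e9:5.2f} GB/s")
print(f"RAM traffic        {ram_traffic / 1e9:5.2f} GB/s")
print(f"DDR4-3200 channel  {channel_peak / 1e9:5.2f} GB/s peak, {channel_usable / 1e9:5.2f} GB/s usable")
print(f"share of one channel: {ram_traffic / channel_usable:.0%}")
```

Against the nominal peak alone the bounce path already eats more than half a channel; with any realistic efficiency factor it approaches a full channel, which matches the estimate above.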

I.e., use GDeflate inside BCPack, and Kraken inside BCPack as well?

BC itself doesn't exactly have a place in the storage formats any more. It doesn't produce a bitstream suitable for further packing.

You would be using one of the image formats that are suited for deflate compression, or whose own compression already ends in a deflate stage (there are many!). That requires some additional post-processing on the GPU side to reverse the additional transformations (PNG-style prediction filters, for example) which enabled the image to achieve a decent compression rate in the first place.
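As an illustration of the kind of reversible transformation meant here, the sketch below applies PNG's "Sub" prediction filter before deflate (Python's `zlib`) and undoes it after inflating. It is a minimal stand-in that assumes tightly packed 8-bit rows; it is not GDeflate and not any particular engine's asset format.

```python
import zlib

def sub_filter(row: bytes, bpp: int) -> bytes:
    """PNG-style 'Sub' predictor: each byte minus the same channel of the pixel to its left (mod 256)."""
    return row[:bpp] + bytes((row[i] - row[i - bpp]) & 0xFF for i in range(bpp, len(row)))

def unsub_filter(row: bytes, bpp: int) -> bytes:
    """Inverse predictor: a per-channel running sum along the row (mod 256)."""
    out = bytearray(row)
    for i in range(bpp, len(out)):
        out[i] = (out[i] + out[i - bpp]) & 0xFF
    return bytes(out)

def encode_image(rows, bpp):
    """Filter each row, then deflate the result (PNG minus its per-row filter selection)."""
    return zlib.compress(b"".join(sub_filter(r, bpp) for r in rows), 9)

def decode_image(blob, stride, bpp):
    """Inflate, then undo the predictor row by row: the extra post-processing step."""
    raw = zlib.decompress(blob)
    return [unsub_filter(raw[i:i + stride], bpp) for i in range(0, len(raw), stride)]

if __name__ == "__main__":
    W, H, BPP = 256, 64, 3
    # Smooth synthetic RGB image: neighbouring pixels differ by small amounts (mod 256).
    rows = [bytes(v for x in range(W)
                  for v in ((x + y) & 0xFF, (3 * x) & 0xFF, (x * y // 16) & 0xFF))
            for y in range(H)]
    packed = encode_image(rows, BPP)
    assert decode_image(packed, W * BPP, BPP) == rows
    print(f"raw {W * H * BPP} B, deflate only {len(zlib.compress(b''.join(rows), 9))} B, "
          f"Sub + deflate {len(packed)} B")
```

The inverse filter is a prefix sum under addition mod 256, which is associative, so on the GPU each row (and each channel within it) can be reconstructed with a standard parallel scan rather than the serial loop shown here.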

What we are seeing showcased right now, GDeflate applied directly to RGB bitmaps or vertex buffers, still represents a very early stage of development. Expect compression rates to get much better as people figure this out.

Chaining a BC encoder on the GPU after the decompression would be the more viable way to get the VRAM footprint back down to where it originally was when you used BC-family compression throughout the entire pipeline.
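To make "chain a BC encoder after decompression" concrete, here is a deliberately naive BC1 encoder. A real implementation would run as a compute shader with roughly one thread per 4x4 block; this Python version only models the per-block math. The min/max (bounding-box) endpoint choice is an assumption made for brevity; production encoders search endpoints far more carefully, and BC7 would be the choice for higher quality.

```python
import struct

def to565(r, g, b):
    """Quantize 8-bit RGB to a packed RGB565 value."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from565(c):
    """Expand RGB565 back to 8-bit RGB by bit replication."""
    r, g, b = (c >> 11) & 31, (c >> 5) & 63, c & 31
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def encode_bc1_block(texels):
    """Encode 16 RGB texels (4x4, row-major) into one 8-byte BC1 block.

    Endpoints are the per-channel min/max of the block (a bounding box). This is
    fast but noticeably worse than the endpoint search real encoders perform.
    """
    lo = tuple(min(t[c] for t in texels) for c in range(3))
    hi = tuple(max(t[c] for t in texels) for c in range(3))
    # hi >= lo channel-wise, so c0 >= c1; c0 > c1 selects BC1's four-color mode.
    c0, c1 = to565(*hi), to565(*lo)
    if c0 == c1:
        return struct.pack("<HHI", c0, c1, 0)  # flat block: every texel uses color0
    e0, e1 = from565(c0), from565(c1)
    palette = [e0, e1,
               tuple((2 * a + b) // 3 for a, b in zip(e0, e1)),   # 2/3 e0 + 1/3 e1
               tuple((a + 2 * b) // 3 for a, b in zip(e0, e1))]   # 1/3 e0 + 2/3 e1
    indices = 0
    for i, t in enumerate(texels):
        # Pick the palette entry with the smallest squared RGB error.
        best = min(range(4), key=lambda k: sum((t[c] - palette[k][c]) ** 2 for c in range(3)))
        indices |= best << (2 * i)  # texel 0 lives in the lowest two bits
    return struct.pack("<HHI", c0, c1, indices)

def encode_bc1(pixels, width, height):
    """Encode an RGB image (list of rows of (r, g, b) tuples); width/height must be multiples of 4."""
    out = bytearray()
    for by in range(0, height, 4):
        for bx in range(0, width, 4):
            out += encode_bc1_block([pixels[by + y][bx + x] for y in range(4) for x in range(4)])
    return bytes(out)
```

The VRAM argument is plain arithmetic: BC1 stores each 4x4 block in 8 bytes (4 bits per texel), so a 4096x4096 texture takes 8 MiB as BC1 (16 MiB as BC7) instead of 64 MiB as uncompressed RGBA8.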

CPU decompression can certainly be implemented (and really should be, in the case of streaming) without locking the rendering thread(s).

Without locking, yes. But don't forget about the size of the working set for the read-only lookup tables. It's easy to run decompression in parallel (from the same parameter set), but it's likely to thrash L2/L3 for other workloads.
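To put a rough number on the lookup-table working set, here is a minimal table-driven canonical Huffman decoder of the deflate kind. Code assignment follows RFC 1951; the single flat table, the 4-byte entry size and the names are simplifying assumptions. Real deflate decoders also read codes bit-reversed from an LSB-first stream, and libraries such as zlib use smaller multi-level tables precisely to keep this footprint down.

```python
def canonical_codes(lengths):
    """Assign canonical Huffman codes from per-symbol code lengths (RFC 1951, section 3.2.2)."""
    max_len = max(lengths)
    bl_count = [0] * (max_len + 1)
    for n in lengths:
        if n:
            bl_count[n] += 1
    next_code, code = [0] * (max_len + 1), 0
    for bits in range(1, max_len + 1):
        code = (code + bl_count[bits - 1]) << 1
        next_code[bits] = code
    codes = {}
    for sym, n in enumerate(lengths):
        if n:
            codes[sym] = (next_code[n], n)
            next_code[n] += 1
    return codes

def build_lookup_table(lengths):
    """Single-level decode table indexed by the next max_len bits (MSB-first).

    A code of length n fills 2**(max_len - n) consecutive slots, so the table
    always has 2**max_len entries, no matter how few symbols are actually used.
    """
    max_len = max(lengths)
    table = [None] * (1 << max_len)
    for sym, (code, n) in canonical_codes(lengths).items():
        first = code << (max_len - n)
        for slot in range(first, first + (1 << (max_len - n))):
            table[slot] = (sym, n)
    return table, max_len

def huffman_decode(bits, table, max_len, count):
    """Decode `count` symbols from a '0'/'1' string with one table lookup per symbol."""
    padded = bits + "0" * max_len  # lets the final peek read past the end safely
    out, pos = [], 0
    for _ in range(count):
        sym, n = table[int(padded[pos:pos + max_len], 2)]
        out.append(sym)
        pos += n
    return out

# Deflate allows codes up to 15 bits: a flat table would need 2**15 entries.
# Assuming 4-byte entries, that is 128 KiB for one table, before the distance table.
FLAT_DEFLATE_TABLE_BYTES = (1 << 15) * 4
```

Every decoding thread hits these tables randomly for every symbol. Even when all threads share one parameter set, the tables stay hot in L2/L3 at the expense of whatever else the CPU is running.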

There is actually one elephant in the room:

What do you do about all the target systems that won't get an update with official DirectStorage support? Windows 10 is going to stick around for a long, long time.

The way it looks, there are some constraints you simply can't work around without it, specifically the reduction of GPU uploads from 4 memory transfers down to 2, or even to 0 (AMD only).

But you also face the need to maintain a common format for your assets. With MS currently tending to recommend the IHVs' proprietary implementations of GDeflate, that looks like something you can't even consider targeting for a very long time. For now, the "software fallback" isn't available to a significant portion of your target audience either.

So for the foreseeable future you will have to rely on shipping your own GPU kernels for GDeflate support (and whatever future extensions you need) as a fallback solution.

Using CPU decompression for the fallback path would be a huge mistake. After all, at that point you have already dropped at least the previously used texture compression schemes, which now causes a vastly higher load (CPU and caches for the decompression, memory and PCIe bandwidth for the now doubled data rates).