> The tweet could have done with expressing. These posts are showing noise from no texture mip filtering, and the sampling for mips uses dithering. This is now introducing noise to textures where textures to date have had none. As pointed out in some comments, we are taking scenes that are already noisy from low sampling and now adding noise to the textures too. There won't be a stable pixel on the screen!
>
> The question is how to get around this. Can mip levels be introduced to the encoding?

This is something I have already expressed concerns about earlier. That tweet is not entirely right btw, it does use mip-maps but it just point samples them instead of linear/tri-linear. It does use a sampling pattern that converges to cubic interpolation with DLSS, which in theory is better than linear, so that is nice. But DLSS does not get rid of all noise, especially with magnification. If your question is whether you can introduce linear sampling like with normal texture sampling, then the answer is yes, but you have to decode multiple texel values before you interpolate because you can't interpolate the parameters before they go into the neural network. So that will increase the cost significantly and probably make it unusable. But IMHO the noise makes it unusable also. I think the original paper downplays the noise you need to get rid of. Yes, it works in these simple scenes, but especially in engines like UE where artists can make custom materials you get situations with material aliasing which will add even more noise.
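To make the "decode before you interpolate" point concrete, here is a minimal sketch in Python. The two-unit ReLU network is a made-up stand-in for a neural texture decoder: `decode`, `texel_a`, `texel_b` and all weights are assumptions for illustration, not anything taken from the paper. It only shows that interpolating the latent inputs and interpolating the decoded outputs stop agreeing once a nonlinearity sits in between.

```python
def relu(x):
    return max(0.0, x)

def decode(latent):
    """Hypothetical tiny MLP (2 inputs, 2 hidden ReLU units, 1 output).

    With these hand-picked weights the network computes |a - b|.
    """
    a, b = latent
    h0 = relu(a - b)
    h1 = relu(b - a)
    return h0 + h1

def lerp(x, y, t):
    return x + (y - x) * t

def lerp_vec(u, v, t):
    return tuple(lerp(x, y, t) for x, y in zip(u, v))

# Two neighbouring latent texels (made-up values) and a sample halfway between.
texel_a = (1.0, 0.0)
texel_b = (0.0, 1.0)
t = 0.5

# One network evaluation on interpolated latents.
filter_then_decode = decode(lerp_vec(texel_a, texel_b, t))

# Two network evaluations, interpolation done on the decoded values.
decode_then_filter = lerp(decode(texel_a), decode(texel_b), t)

print(filter_then_decode, decode_then_filter)
```

With this toy decoder the first path returns 0.0 and the second 1.0. Doing it the second way means one decode per tap, so 4 decodes for a bilinear footprint and 8 for a trilinear one.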

DirectStorage GPU Decompression, RTX IO, Smart Access Storage

- Thread starter DavidGraham

Ext3h

Regular

> does use mip-maps but it just point samples them instead of linear/tri-linear

It's sampling the latent feature mip-maps at two different levels, 4 points each.

That does permit the network to effectively do tri-linear / bicubic filtering, but it's not using the mip-maps in the way they're intended, which is to provide a cutoff in frequency space as close to the required frequency as possible. Instead it's only using them as a reference point to ensure that features 2-3 octaves lower don't get completely missed. It's also only using them as network inputs, so the chance that the trained network happens to actually do proper filtering is low.

> Then the answer is yes but you have to decode multiple texel values before you interpolate because you can't interpolate the parameters before they go into the neural network. So that will increase the cost significantly and probably make it unusable.

All good if you can properly batch that. The cost is not in the arithmetic (not the tensor evaluation, let alone the activation function), nor in the register pressure from the hidden layers. It's primarily in streaming the tensor between layers.

So if you can reuse the tensor before it's gone from the cache, the overhead is acceptable.

It would not help though. If you look closely, the noise dominates badly in the upper 1-2 octaves. Only at the point where the signal is close to being supported by the "latent feature" mip-map does it turn stable enough that a reasonably low sample count could produce an improvement.

The high frequency features appear to be incorrect in both frequency and phase, only the amplitude is somewhat correct. Only the features up to one octave above the support points from the mip-map appear to have been reconstructed reasonably well.

Ext3h

Regular

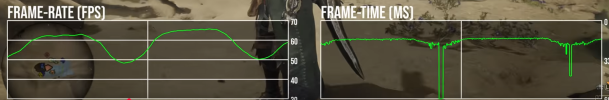

Monster Hunter Wilds came, used DirectStorage aggressively for all asset streaming - and to no one's surprise it introduced frame time spikes during asset streaming on NVidia's entire GPU lineup, up to and including the 5090.

Don't get hung up on the video mixing up two distinct issues though - you got minor frame rate drops from the decompression overhead on all GPUs, but NVidia GPUs get hit by a pretty unique spike pattern where the GPU ends up non-responsive for 1-2 frames at a time:

For this one part, it actually doesn't look like MH:Wilds did anything wrong. The asset streaming was implemented fully asynchronously, with assets remaining disabled until loaded, including a soft fade-in to avoid popping artifacts. There are still a couple of delayed frames though whenever the copy engines get to work on a whole batch of textures in a row.

And it's not the decompression kernel that's hurting - at least not on more potent GPUs. Not plausibly, when the decompression is running at 90GB/s+ on pretty much every GPU.

That communication stall you get from fully saturating the PCIe bus was clearly underestimated.

DavidGraham

Veteran

> used DirectStorage aggressively for all asset streaming - and to no one's surprise it introduced frame time spikes during asset streaming on NVidia's entire GPU lineup, up to and including the 5090

Not just NVIDIA, also AMD and Intel GPUs as shown in the video.

Ext3h

Regular

> I really hope there is a solution here

It's just a scheduling problem, so there are plenty of solutions:

- NVidia could use the designated PCIe protocol features for ensuring that command streams have priority over data streams. Even though that's IMHO close to impossible to happen for the Blackwell family.

- NVidia could force the Copy Engine to back-off every couple of consecutive MB transferred - either by driver or firmware.

- The game engine can use a semaphore to throttle asset streaming such that it may only transfer a set amount per frame rendered. You can easily know the PCIe link speed and effective transfer rate, and can therefore also target a set transfer rate limit. The only catch here is the interface of the DirectStorage API, which hides the command buffers as an implementation detail, and therefore you can't control the scheduling as a developer...
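The third option could look roughly like the sketch below, written in Python for brevity. Everything in it is hypothetical: `FrameBudgetThrottle`, `budget_per_frame`, the 24 GB/s effective link rate and the 25% share are assumptions picked for illustration. It also only gates when the engine hands uploads over, so it presumes a pipeline where the engine owns that step, which (as noted above) DirectStorage does not expose at the command buffer level.

```python
from collections import deque

def budget_per_frame(link_bytes_per_s, fps, share):
    """Bytes per frame if streaming may use `share` of the link at `fps`."""
    return int(link_bytes_per_s * share / fps)

class FrameBudgetThrottle:
    """Releases queued uploads in FIFO order, up to `budget_bytes` per frame.

    A single upload larger than the whole budget is released alone in its
    own frame so that it cannot block the queue forever.
    """

    def __init__(self, budget_bytes):
        self.budget_bytes = budget_bytes
        self.pending = deque()

    def request(self, name, size_bytes):
        self.pending.append((name, size_bytes))

    def begin_frame(self):
        """Return the names of the uploads allowed to start this frame."""
        remaining = self.budget_bytes
        released = []
        while self.pending:
            name, size = self.pending[0]
            if size > remaining and released:
                break  # does not fit any more, retry next frame
            self.pending.popleft()
            released.append(name)
            remaining -= size
            if remaining <= 0:
                break
        return released

MB = 1_000_000
# Assumed numbers: 24 GB/s effective rate, 60 fps, 25% of the link for streaming.
throttle = FrameBudgetThrottle(budget_per_frame(24e9, 60, 0.25))
for name, size in [("a", 60 * MB), ("b", 60 * MB), ("c", 30 * MB), ("d", 200 * MB)]:
    throttle.request(name, size)

frames = [throttle.begin_frame() for _ in range(4)]
print(frames)
```

With those numbers the budget is 100 MB per frame, and the four requests are spread over three frames instead of being submitted back to back.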

> Not just NVIDIA, also AMD and Intel GPUs as shown in the video.

Not quite... all the GPUs see an increase in frame times of up to 10ms from the concurrently running decompression, with less impact on faster GPUs. But those hard stutters where you get 1-2 frames with almost triple-digit frame times? That's unique to NVidia GPUs. And also backed by a lot of user reports on Steam and other forums.

I can confirm firsthand that even with the High Resolution texture pack, this issue doesn't show on AMD GPUs at all. Textures pop in, and framerate goes down slightly, but no matter how fast you turn, back-to-back asset streaming doesn't result in a noticeable stutter.

DegustatoR

Legend

> I can confirm firsthand that even with the High Resolution texture pack, this issue doesn't show on AMD GPUs at all. Textures pop in, and framerate goes down slightly, but no matter how fast you turn, back-to-back asset streaming doesn't result in a noticeable stutter.

Does this GPU have 8GBs of VRAM?

DavidGraham

Veteran

> But those hard stutters where you got 1-2 frames with almost triple-digit frame times? That's unique to NVidia-GPUs. And also backed by a lot of user reports on Steam and other forums

Anecdotal evidence doesn't amount to much, the game is buggy as hell and inexperienced players are experiencing a butt load of issues they don't know the culprit behind. Here is the 9800X3D with 4090 running rock solid 90fps at 1440p ultra wide with max textures and DLAA. No stutters whatsoever.

> So if I'm understanding this correctly, DirectStorage is hogging the PCIe bus causing stalls?

Why would DirectStorage hog the PCIe bus? Isn't the entire point of it to be to send compressed textures into VRAM to be decompressed? Wouldn't that utilize less bandwidth?

Looking at Spider-Man 2.. PCIe Rx and Tx never exceed 8GB/s. There's plenty of bandwidth there.

IMO the issue is simply the decompression happening on the GPU cores stalling the engine.

Ext3h

Regular

> Why would DirectStorage hog the PCIe bus? Isn't the entire point of it to be to send compressed textures into VRAM to be decompressed? Wouldn't that utilize less bandwidth?

DirectStorage does two things. What you refer to is the GDeflate decompression that's happening on the GPU.

The other half is shifting the uploads from the 3D/Compute queues to the copy queue as a hidden implementation detail. That's not just semantic sugar to keep them out of the other queues; the copy queue is actually backed by an ASIC that is designed to perform a transfer at exactly the full available PCIe bandwidth. Transfers scheduled on the other two engine types instead end up executing as a low thread count kernel, which coincidentally also leaves a lot of "scheduling bubbles" in the whole involved memory system on the GPU, which in turn prevents the issue entirely. Anything that introduces bubbles saves your ass.

It's not that hard to try it for yourself in a small synthetic benchmark. Just go and schedule 1GB+ of uploads to the copy engine in parallel to your regular 3D load, and see what it does in terms of perceived execution latency. Don't even bother with monitoring GPU utilization during that experiment, you will most likely encounter a blind spot there too.
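For a feel of the numbers before running the real experiment, here is a back-of-the-envelope model in Python. It is not a GPU benchmark and not a description of how the hardware arbitrates. It simply assumes that anything arriving while a copy chunk is in flight has to wait for that chunk to finish, and that the link sustains 25 GB/s. Both are assumptions, as are the names `worst_case_wait_ms` and `LINK_BYTES_PER_S`.

```python
def worst_case_wait_ms(chunk_bytes, link_bytes_per_s):
    """Time to drain one uninterrupted chunk, in milliseconds.

    Under the assumption that nothing can overtake a chunk in flight,
    this is the longest a newly arriving command could be held up.
    """
    return chunk_bytes / link_bytes_per_s * 1000.0

LINK_BYTES_PER_S = 25e9  # assumed effective PCIe rate

# One uninterrupted 1 GB upload versus the same data split into 4 MB
# pieces with a gap after each piece.
unchunked_ms = worst_case_wait_ms(1e9, LINK_BYTES_PER_S)
chunked_ms = worst_case_wait_ms(4e6, LINK_BYTES_PER_S)

print(f"1 GB in one go : up to {unchunked_ms:.2f} ms")
print(f"4 MB chunks    : up to {chunked_ms:.2f} ms")
```

Under these assumptions the uninterrupted copy can hold things up for about 40 ms, more than two frames at 60 fps, while 4 MB pieces keep the worst case around 0.16 ms.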

> Looking at Spider-Man 2.. PCIe Rx and Tx never exceed 8GB/s. There's plenty of bandwidth there.

Unexpected information, but not surprising. Indicates that asset streaming was deliberately throttled. One of the legit workarounds.

But also possibly constrained by level/engine design. You only hit PCIe limits for an extended duration if a whole bulk of assets are already resident in RAM. If streaming is constrained by the disk instead, all is fine...

> Does this GPU have 8GBs of VRAM?

Tested on a 7900XT with 20GB of VRAM. Additional VRAM size didn't really change much about the frequency of (usually too late) asset streaming.

> IMO the issue is simply the decompression happening on the GPU cores stalling the engine.

Quite unlikely. Even the DirectStorage demo never resulted in effectively more than one concurrent decompression kernel being utilized; that scheduling detail is locked away in the implementation of DirectStorage. And the API design of DirectStorage doesn't really support that assumption either - even though you do have the option to await a GPU side fence, that one spans the entire duration from disk activity to decompression. Visibly delayed assets pretty much rule out that this has happened.

If you rather refer to shader utilization introducing a stall - yes, that's happening. But that only introduces a gradual slowdown. Not a full stall for >100ms.

> DirectStorage does two things. What you refer to is the GDeflate decompression that's happening on the GPU.

I get what you're saying. Thanks for the explanation!

DegustatoR

Legend

> Tested on a 7900XT with 20GB of VRAM. Additional VRAM size didn't really change much about the frequency of (usually too late) asset streaming.

My point is that you may see VRAM related hitching - which is why you don't see it on cards with more VRAM. Comparing an 8GB 4060 to a 20GB 7900XT isn't telling me much.

If your theory is correct then why do we only see these hitches on a 4060? A faster GPU should handle decompression faster which to me suggests that the PCIE transfer would be even less limited by the decompression part and should thus result in an even higher bandwidth saturation.

Also the internet said that AMD cards aren't affected by the GPU decompression and yet here the pattern of it is exactly the same on both Nvidia and AMD GPUs.

Ext3h

Regular

> If your theory is correct then why do we only see these hitches on a 4060?

We don't. In the linked video he simply switched texture settings to "medium" prior to testing all the other cards. All of the following tests in that video merely demonstrate the (expected) overhead of decompression, but not those hard spikes. But there are plenty of unique reports from users who suffer the same on 4090/5090 cards as well. (Yes, also plenty where it works. But this type of bug is quite situational, and there are all sorts of possible interference that can let you avoid the expected worst case.)

> the PCIE transfer would be even less limited by the decompression part

The only limit between those two goes in the opposite direction. PCIe transfer can stall decompression, but not the other way around. Two different engines, only asynchronously and loosely coupled by a fence, with command buffers eagerly submitted. Work starts as soon as the CPU lifts the fence to indicate IO completion. And it's not just one or two tasks in that queue, but dozens. So there are plenty of chances for the IO (just view the disk IO as yet another sequential queue, tied in with raw events), transfer and decompression queues to shift against each other. Realistically, all it needs is one asset that depends on a real disk read, followed by a bunch of assets that all happen to be cached in RAM already, and suddenly you get an abnormal burst of transfers coming in.

There is zero chance of moderation with the current DirectStorage API once a load has been requested.
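The "one disk read followed by cached assets" case can be sketched as a toy queue model in Python. The model is an assumption, not DirectStorage's actual scheduler: reads complete strictly in order, each transfer starts as soon as its read is done and the link is free, and all timings are made up. `transfer_start_times` and `longest_busy_run_ms` are hypothetical helper names.

```python
def transfer_start_times(disk_ms, transfer_ms):
    """Start time of each transfer in a strictly in-order pipeline.

    Asset i can start once all reads up to and including its own have
    finished and the link is no longer busy with the previous transfer.
    """
    starts = []
    io_done = 0.0
    link_free = 0.0
    for read, xfer in zip(disk_ms, transfer_ms):
        io_done += read  # reads are served one after another
        start = max(io_done, link_free)
        starts.append(start)
        link_free = start + xfer
    return starts

def longest_busy_run_ms(starts, transfer_ms):
    """Longest stretch during which the link is busy without any gap."""
    longest = run = 0.0
    prev_end = None
    for start, xfer in zip(starts, transfer_ms):
        run = run + xfer if prev_end is not None and start <= prev_end else xfer
        longest = max(longest, run)
        prev_end = start + xfer
    return longest

xfer = [2.0] * 5  # each transfer takes 2 ms (made-up number)

# Case 1: first asset waits 20 ms on disk, the rest are cached in RAM.
burst_starts = transfer_start_times([20.0, 0.0, 0.0, 0.0, 0.0], xfer)
burst_run = longest_busy_run_ms(burst_starts, xfer)

# Case 2: every asset waits 20 ms on disk, so the disk paces the link.
paced_starts = transfer_start_times([20.0] * 5, xfer)
paced_run = longest_busy_run_ms(paced_starts, xfer)

print(burst_starts, burst_run)
print(paced_starts, paced_run)
```

In the cached case all five transfers run back to back for 10 ms straight. In the disk-bound case each 2 ms transfer is followed by an 18 ms gap, which matches the earlier remark that all is fine when streaming is constrained by the disk.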

DegustatoR

Legend

> We don't. In the linked video he simply switched texture settings to "medium" prior to testing all the other cards.

@Dictator Hey Alex, did you try testing the other cards besides 4060 with "high" settings to see if they have the same hitches as 4060 does on that texture quality?

If some monitoring software reports a peak of 8GB/s on the PCIe bus, on what timescale is that referring to? For instance if it checks every 200ms how much data has been transferred, couldn't it theoretically be trying to push 7GB in 50ms and 1GB in the following 150ms and you'd still see 8GB/s reported as the max?
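The effect being asked about is easy to show with a small Python sketch. The trace is invented: 50 ms at 26 GB/s followed by 150 ms at 2 GB/s, chosen so that a 200 ms polling window averages out to exactly 8 GB/s. `reported_rate_gb_s` is a hypothetical helper, not how any particular monitoring tool works.

```python
def reported_rate_gb_s(bytes_per_ms, window_ms):
    """Average rate in GB/s for each polling window of `window_ms`."""
    rates = []
    for i in range(0, len(bytes_per_ms), window_ms):
        window = bytes_per_ms[i:i + window_ms]
        rates.append(sum(window) / len(window) * 1000 / 1e9)
    return rates

# Made-up trace with one entry per millisecond:
# 50 ms burst at 26 GB/s, then 150 ms at 2 GB/s.
trace = [26_000_000] * 50 + [2_000_000] * 150

coarse = reported_rate_gb_s(trace, 200)  # one sample per 200 ms
fine = reported_rate_gb_s(trace, 10)     # one sample per 10 ms

print("200 ms window:", coarse)
print("10 ms window, peak:", max(fine))
```

The 200 ms window reports a flat 8 GB/s, while the 10 ms window shows the 26 GB/s burst. So a reported peak only bounds the average over the tool's sampling interval, not the instantaneous rate.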

> @Dictator Hey Alex, did you try testing the other cards besides 4060 with "high" settings to see if they have the same hitches as 4060 does on that texture quality?

The DF video shows he tested a 4070 and had the same issues, just not quite as pronounced.

DegustatoR

Legend

> The DF video shows he tested a 4070 and had the same issues, just not quite as pronounced.

No, it didn't. There's seemingly just one hitch at the first turn of the camera which isn't happening again after that. Which could be a sign of data loading and staying in VRAM - while on an 8GB 4060 it has to unload and load again on each camera turn.

> No, it didn't. There's seemingly just one hitch at the first turn of the camera which isn't happening again after that. Which could be a sign of data loading and staying in VRAM - while on an 8GB 4060 it has to unload and load again on each camera turn.

To be fair... Alex isn't even turning the camera quickly in either example... if he was turning it quicker.. it would stutter on the 4070 as well.
