One assumes they can copy-paste from the "Using AF" example in the online manual... If it's one additional step, how hard is it to take that step? This is getting ridiculous. Even if it's a bit convoluted to set AF flags...

Digital Foundry Article Technical Discussion Archive [2015]

- Thread starter Deleted member 11852

- Tags: digital foundry

- Status: Not open for further replies.

If it's one additional step, how hard is it to take that step?

It's not hard, but it means that you need a "PS4 person" in your team, and no multiplatform studio seems to have one. Why bother, if it runs ok anyway?

Need a PS4 person? Multiplatform studios don't have people experienced with PS4 development?

They miss AF, and they use D3D registers. I would say they probably have experienced people, but not people who are dedicated to the PS4 version.

http://www.eurogamer.net/articles/d...am-knight-on-ps4-is-a-technical-tour-de-force

Batman is great on PS4, but the PC port is bad.

Edit: It's not the same team; the PC version was outsourced. I hope it will be better after the PC patch.

I'll probably get the PS4 version now... then double dip later for the PC version once all the

Deleted member 11852

Digital Foundry: It gets worse - Batman: Arkham Knight on PC lacks console visual features

And here we go again: "lurches to as low as 38fps .... reaching a nadir of 410ms"

1000 / 410 ≈ 2.44 fps; that's the real "minimum fps".
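The conversion above is just the reciprocal of a frame's duration. A minimal Python sketch; the function names are illustrative only, not anything Digital Foundry publishes:

```python
def frame_time_to_fps(frame_time_ms: float) -> float:
    """Rate implied by a single frame's duration (1000 ms per second)."""
    return 1000.0 / frame_time_ms


def fps_to_frame_time(fps: float) -> float:
    """Average frame duration in ms implied by a frame rate."""
    return 1000.0 / fps


# The worst single frame quoted in the article vs. the quoted low point.
print(round(frame_time_to_fps(410), 2))  # 2.44
print(round(fps_to_frame_time(38), 1))   # 26.3
```

So the 38fps figure and the 410ms frame describe different things: one is an average over some window, the other a single update.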

And somebody here told me that they don't measure average fps and call it the "minimum". Now it's a fact: they do.

All rates have to be measured over a period of time, that period being arbitrary. Digital Foundry use a trailing average (the only reasonable way of doing it, though they should state in each article's methodology what period it covers).
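A trailing average can be sketched as below. The window length is the arbitrary period mentioned above; 1000 ms is an assumption, since the articles don't say what DF actually uses, and counting completed frames per window is just one plausible definition, not DF's confirmed method.

```python
from collections import deque
from itertools import accumulate


def trailing_fps(frame_times_ms, window_ms=1000):
    """Frame rate at each frame, averaged over a trailing time window.

    Counts frames that completed in (t - window_ms, t], where t is the current
    frame's completion time, and divides by the window length. Until a full
    window has elapsed, it divides by the elapsed time instead.
    """
    rates = []
    window = deque()  # completion timestamps (ms) of frames inside the window
    for t in accumulate(frame_times_ms):
        window.append(t)
        # Drop frames that completed at or before the start of the window.
        while window[0] <= t - window_ms:
            window.popleft()
        rates.append(len(window) * 1000 / min(t, window_ms))
    return rates


# Hypothetical capture: steady 20 ms frames with one 410 ms stall in the middle.
frames = [20] * 100 + [410] + [20] * 100
print(min(trailing_fps(frames)))  # 31.0
```

The 1 s average bottoms out at 31fps, while the stalled frame on its own implies about 2.4fps; shrinking `window_ms` makes the dip deeper but the readout jumpier.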

I've argued before that update intervals (which you can express in Hz or ms; one is just the reciprocal of the other) are interesting to look at, but not everyone agrees. As a single takeaway figure for a minimum frame rate, the single longest update interval is useless though.

Frame time graphs are where it's at. The frame-time-graph revolution that hit PC reviewing a couple of years back is something that DF should look to get in on.
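Alongside the graphs themselves, PC frame-time reviews commonly condense a capture into figures such as a high-percentile frame time or the total time spent beyond a threshold. A minimal Python sketch of those two summaries; the 99th percentile, the 50 ms threshold and the nearest-rank percentile definition are assumptions for illustration.

```python
import math


def percentile_frame_time(frame_times_ms, pct=99.0):
    """Nearest-rank percentile: pct% of frames took this long or less."""
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def time_beyond(frame_times_ms, threshold_ms=50.0):
    """Total ms by which frames overshoot threshold_ms (0 if none do)."""
    return sum(ft - threshold_ms for ft in frame_times_ms if ft > threshold_ms)


frames = [20] * 99 + [410]           # hypothetical 100-frame capture
print(percentile_frame_time(frames))  # 20
print(time_beyond(frames))            # 360.0
```

Note that the 99th percentile misses the single 410 ms frame entirely while the time-beyond figure catches it: each summary still throws something away, which is an argument for showing the graph itself.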

Their performance analysis videos effectively give you that in real time. Interestingly, the frame times (while unreasonably high for a 780Ti) didn't look all that uneven in the recent Batman article. Presumably that wasn't giving us the whole story; otherwise, with a 30fps lock applied, the game should be pretty smooth on that level of hardware, and I hear that it isn't.
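Why a 30fps lock should even out frame times that are merely high, and why it can't hide frames that miss the deadline, can be sketched with a deliberately simplified model: a 60 Hz display with vsync, each frame held for at least two refreshes, and a late frame held until the next refresh after it finishes. Buffering depth and CPU/GPU overlap are ignored, so this is an illustration under those assumptions, not how Arkham Knight or any particular game actually paces frames.

```python
import math

VBLANK_MS = 1000 / 60  # one refresh interval on a 60 Hz display


def displayed_frame_times(render_times_ms, min_vblanks=2):
    """Simplified 30 fps cap on 60 Hz vsync: how long each frame stays on screen.

    A frame is shown for at least min_vblanks refreshes (2 -> 33.3 ms); a frame
    that takes longer to render is held until the refresh after it completes.
    """
    shown = []
    for rt in render_times_ms:
        vblanks = max(min_vblanks, math.ceil(rt / VBLANK_MS))
        shown.append(vblanks * VBLANK_MS)
    return shown


print([round(ms, 1) for ms in displayed_frame_times([25, 30, 34, 20])])
# [33.3, 33.3, 50.0, 33.3]
```

Render times anywhere under 33.3 ms all come out identical on screen, but a single 34 ms frame turns into a 50 ms hold.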

hesido

It does give it to you in real time, but it doesn't let you assess the whole video at a glance. Gamersyde began putting up a nice performance graph that keeps the whole frame rate history on screen as it draws, so by the end of the video you get the complete picture.

All rates have to be measured over a period of time, that period being arbitrary.

I agree, which means the "fps" measure is useless nowadays. It was useful when frame times didn't vary so wildly.

the single longest update interval is useless though

It's not, and it's obviously not useless if it's 410ms: if you set your moving average to 500ms, it will give you a minimum of 3fps (which everybody would understand is a problem).

The big question here is how the perception of frame pacing works. I would argue that the difference between the longest and shortest frame time within a sane visual reaction interval (120-150ms for the average human) would provide the answer. But I have no hard evidence to prove it. And some people would argue that long frame times are worse (which I think is complete BS, unless they're above 50ms). Perhaps somebody could conduct an experiment with a focus group (caveat: it cannot be done with any modern game, because these have uneven frame pacing by definition; some old fast shooter like Quake 3 is probably the best bet).
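The proposed measure (the spread between the longest and shortest frame time inside a window roughly one reaction time long) is straightforward to compute from a capture. A Python sketch; the 150 ms window, and treating a frame as inside the window when it completes inside it, are assumptions, and whether this tracks perceived smoothness is exactly the untested hypothesis described above.

```python
from collections import deque
from itertools import accumulate


def local_spreads(frame_times_ms, window_ms=150):
    """Longest minus shortest frame time among frames completing in each trailing window."""
    spreads = []
    window = deque()  # (completion timestamp, frame time) pairs inside the window
    for t, ft in zip(accumulate(frame_times_ms), frame_times_ms):
        window.append((t, ft))
        # Drop frames that completed at or before the window's start.
        while window[0][0] <= t - window_ms:
            window.popleft()
        durations = [d for _, d in window]
        spreads.append(max(durations) - min(durations))
    return spreads


even = [16] * 30
microstutter = [16, 33] * 15
stall = [20] * 20 + [410] + [20] * 5
print(max(local_spreads(even)))          # 0
print(max(local_spreads(microstutter)))  # 17
print(max(local_spreads(stall)))         # 390
```

One quirk of windowing by completion time: a frame longer than the window sits alone in it at first, so the stall only registers once the next frame completes.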

I agree, which means the "fps" measure is useless nowadays. It was useful when frame times didn't vary so wildly.

Yeah, we need a better approach. Unfortunately, that means an approach that's more difficult for the average gamer to get their head around. At the very least, more emphasis on how averages are calculated would be useful and would help people understand what the figures are showing (and missing).

It's not, and it's obviously not useless if it's 410ms: if you set your moving average to 500ms, it will give you a minimum of 3fps (which everybody would understand is a problem).

I said it was useless as a single takeaway figure, as far more important information is completely discarded.

I actually think a shorter rolling average for frame rates would be good, if you're going to have them, as there's a better chance it will represent volatile frame rates. Make the window too short, however, and you lose the ability to make out what you're seeing on a real-time frame rate meter.

The big question here is how the perception of frame pacing works. I would argue that the difference between the longest and shortest frame time within a sane visual reaction interval (120-150ms for the average human) would provide the answer. But I have no hard evidence to prove it. And some people would argue that long frame times are worse (which I think is complete BS, unless they're above 50ms). Perhaps somebody could conduct an experiment with a focus group (caveat: it cannot be done with any modern game, because these have uneven frame pacing by definition; some old fast shooter like Quake 3 is probably the best bet).

I think this is a good question. Reaction times (which can be affected by multiples of frame time) are one issue that some people are acutely attuned to. "Smoothness" would be another. Consistency would be yet another (also badly affected by uneven frame times, which make time pass in a non-linear fashion, and this is a fucker).

Regular interval glitches are a weird one, as they have a minimal impact on "frame rate" and a relatively small representation on frame time graphs, but once I notice them I can't stop looking for them and then I always see them and then I get ANGST.
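One way to turn regular glitches into numbers rather than something you have to spot: flag frames over a threshold and look at the spacing between them, since evenly spaced gaps point to a periodic cause. A Python sketch; the 25 ms threshold and the synthetic capture are assumptions.

```python
from itertools import accumulate


def find_hitches(frame_times_ms, threshold_ms=25.0):
    """Completion timestamps of frames slower than threshold_ms, plus the gaps between them."""
    hitches = [t for t, ft in zip(accumulate(frame_times_ms), frame_times_ms)
               if ft > threshold_ms]
    gaps = [later - earlier for earlier, later in zip(hitches, hitches[1:])]
    return hitches, gaps


# Hypothetical capture: 16 ms frames with a 50 ms hitch every 60th frame.
frames = ([16] * 59 + [50]) * 5
avg_fps = 1000 * len(frames) / sum(frames)
hitches, gaps = find_hitches(frames)
print(round(avg_fps, 1))  # 60.4
print(gaps)               # [994, 994, 994, 994]
```

The average stays above 60fps even though there's a 50 ms stall roughly once a second; the constant gap is what gives the pattern away.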

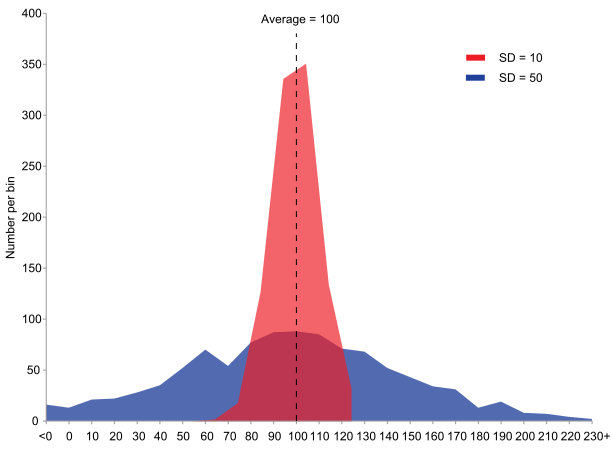

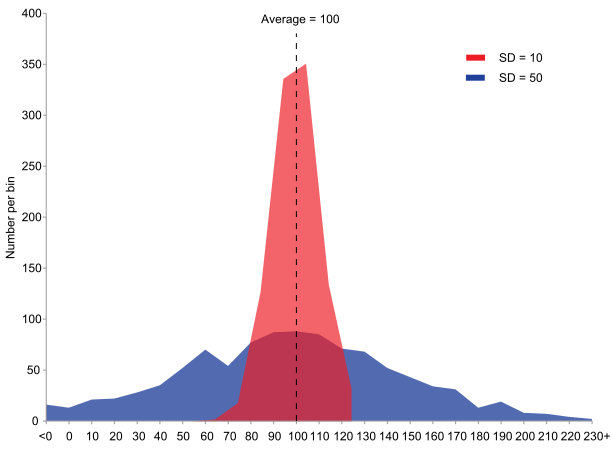

Standard Deviation of frame-time values would be a good indication of big frame-time variance. For instance, two samples can have the same average but very different dispersion of values, and it's easy to understand like this:
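To put numbers on the same-average, different-dispersion point, Python's standard `statistics` module is enough (the frame times here are made up for illustration):

```python
from statistics import mean, pstdev

steady = [30, 30, 30, 30, 30, 30]  # ms per frame
uneven = [20, 40, 20, 40, 20, 40]  # same total time, alternating

print(f"{mean(steady):.1f} {pstdev(steady):.1f}")  # 30.0 0.0
print(f"{mean(uneven):.1f} {pstdev(uneven):.1f}")  # 30.0 10.0
```

Both report 33.3fps on average; only the dispersion separates them.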

Standard Deviation of frame-time values would be a good indication of big frame-time variance

It's kind of useless, because the frame-time distribution is not normal. I would say that using a Fourier transform (FT) to move it into the frequency domain could be beneficial (the more the signal is dominated by lower frequencies, the better the frame pacing), but not regular random-variable stuff.
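A rough sketch of the frequency-domain idea: subtract the mean frame time, take a discrete Fourier transform over frame index, and look at where the energy sits. This is a plain O(n²) DFT to stay dependency-free; measuring frequency in cycles per frame (bin k of n is k/n) is an assumption, as one could instead resample against wall-clock time.

```python
import cmath


def frame_time_spectrum(frame_times_ms):
    """Magnitude of the DFT of frame-time deviations from the mean.

    Bin k corresponds to k / n cycles per frame, from 0 up to 0.5 (alternate frames).
    Removing the mean zeroes bin 0, so perfectly even pacing gives an all-zero spectrum.
    """
    n = len(frame_times_ms)
    avg = sum(frame_times_ms) / n
    dev = [ft - avg for ft in frame_times_ms]
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(d * cmath.exp(-2j * cmath.pi * k * m / n) for m, d in enumerate(dev))
        spectrum.append(abs(s) / n)
    return spectrum


even = [25] * 16
microstutter = [20, 40] * 8  # same 30 ms mean as a steady 30 ms
print(max(frame_time_spectrum(even)))  # 0.0
spec = frame_time_spectrum(microstutter)
print(spec.index(max(spec)), round(max(spec), 6))  # 8 10.0
```

All of the alternating pattern's energy lands in the highest bin (0.5 cycles per frame), in line with the idea that high-frequency content means worse pacing. A one-off stall, by contrast, spreads evenly across every non-zero bin, so the spectrum's overall shape, not just its peak, would need reading.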

This is getting beyond me, but are you saying there would be a way to represent various types of frame rate variation as different magnitudes of frequency on a frequency graph?

If so, the shape of such a graph could be very useful in at-a-glance indicating the kind of experience the game presented.

Deleted member 11852

They are taking an age to do the Batman face-off, what gives?

Maybe they have... TWO FACES!
