Nvidia isn't a Grapes of Wrath landowner and PC gamers aren't the Joads.

I agree with @Dictator that in terms of realistic pricing asks of Nvidia, expecting the 4070 to be, say, $400 is naive. AMD will not be offering a competitive product to the 4070, 16GB or not, at that price point. $500? Sure, that's reasonable in terms of value, would not significantly impact Nvidia's margins, and would likely receive a very positive reception from consumers. Most reviewers are reasonable.

But your post is a rather defensive reply to someone simply trying to rebut the argument - given by Nvidia - that they must price their consumer products as they currently are due to market conditions outside of their control. What is it exactly that you're objecting so strongly to here - the use of the word 'greed'?

In the context of essentials for life, nothing we argue about here matters - no shit. The vast majority of consumer products are not essential to survival. And yet, we argue about their supposed value all the time. Something can be considered a 'rip-off' outside of the context of baby formula. Nvidia arguing they have no choice but to price their products as they are is probably based on some degree of truth, but it's also PR. Every company asked during this inflationary period has responded that it had to raise prices to where they are now - while also making record profits.

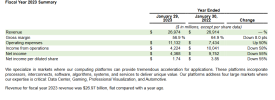

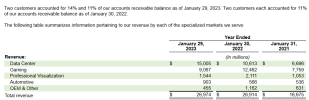

Noting that, and in this case noting the exceptional gross margins of Nvidia in this supposed time of economic turmoil, is absolutely fair game. What would make the criticism fairer, of course, would be if Nvidia broke out their margins separately for consumer and datacenter sales, so we would have an idea of how much of the pie TSMC is taking. But they don't want to do that.