TLOU Remastered on PS4 is essentially the PS3 game.

TLOU Part I on PS5 has been completely redone.

Digital Foundry Article Technical Discussion [2023]

- Thread starter: BRiT

- Status: Not open for further replies.

What? no. Visually it looks quite similar, yes, even if all assets are new ones, higher resolution on PS4, but the architecture is too different. They had to redo basically everything: from single threaded engine to multithreaded, from using CELL + GPU to use only GPU, from 2 memory pools to unified memory etc. I'd say there are more architectural changes from PS3 -> PS4 than PS4 -> PS5.

By that I mean it's the exact same game. It's a remaster. It's not like this one where they redid all the assets. It's an enhanced port, not a remake. Obviously, they had to adapt to the PS4's architecture, but this is no different from God of War III and God of War III Remastered on PS4 or any other remaster we saw during the 8th generation.

From an asset quality standpoint, kind of - Joel and Ellie's in-game models were substantially improved, but nothing drastic. But it sounds like quite a lot had to change:

Porting the Last of Us Was Hell

Overstander

Newcomer

The fact alone that, like Cerny stated, all of the I/O shenanigans are hidden from the dev if they work on PS5. It is all abstracted. They handle their data as if it is uncompressed. They don't even need to think about decompression because it is all done automatically by the I/O stack. The PS5's CPU "sees" only uncompressed raw data to work with.

In a way, I can't help but be impressed.

Combined with these devs' inexperience with the PC platform, the PS5's deeper systems are a far cry from the PS4, which was relatively simplistic in how it did things. This paradoxically forced devs to work harder and get far more creative to eke more cycles out of the machine. Whereas the PS5 probably allows them to do things relatively fast and without worrying about optimization in a lot of ways.

Even though Horizon had early PC issues, I doubt they were anything close to what TLOU Part I is going through now.

Deleted member 2197

Guest

Can we please have some moderation regarding OT discussions that obviously belong in other forum sections?

So I still believe that DirectStorage has had no real test - even Forspoken was not a real test (in that not much data was requested). The real test will be how it fares against PS5 with the hopefully soon-coming port of R&C Rift Apart. Even though that game did not fully utilize the PS5's bandwidth (visible when people run the game off Gen 4 SSDs with actually less bandwidth than Sony officially asks for), it would be the best test we could have for now.

I also simply don't believe any figures that tell me the GPU usage would be only small, so no real burden parallel to rendering the game. I happily repeat that I simply DON'T BELIEVE that a cobbled-together software solution is on par with the PS5's very well thought out hardware array. Period. Don't harass me with further questioning - I don't believe it and you are going to accept that - are we clear?! If you continue, you wander to the blocklist until we have a final good test at hand. Oh and btw - when I say "DirectStorage is not on par with PS5" I mean mainly the RTX 20xx cards and their AMD counterparts. Those are the cards that everyone tests against PS5. RTX 30xx or even 40xx cards will most likely have enough GPU resources to handle decompression while rendering / raytracing a game. But I stand by what I have often proclaimed - that RTX 20xx cards will see no light against later-gen PS5 exclusives.

That I happily post here to be quoted later.

So what you're saying is that when heavily used for in game streaming, the PS5's decompression unit will result in a lower load on its CPU and GPU than on the equivalent PC components even when DS1.1 is being used.

On that we agree.

And based on the above post it seems you also agree that a PC with some level of higher CPU and GPU performance than the PS5 can achieve higher data throughput than the PS5 while still performing as well or better in the other aspects of the game.

And I hope you can also agree that GPU based decompression should also significantly reduce the additional CPU requirement for the PC in the above scenario.

I guess the only area of potential disagreement then is what level of additional hardware on the PC side is required to match the PS5 under the different scenarios of GPU and CPU decompression. And since we have no games upon which to draw a conclusion on the former at this time, it seems premature to make assumptions about this.

Overstander

Newcomer

Like I already said - I believe the RTX 20xx cards will not cut it against PS5 in a heavy streaming scenario. Forspoken was not a good test. I wonder what they are streaming anyway, given how barren the world of Forspoken looks.

The PS5 doesn't need to be on par with any RTX 40xx card or the higher-tier 30xx cards.

If the PS5 stays ahead of a PC with an equivalent CPU and an RTX 2080 / Ti, the Cerny I/O will have already proven itself as the superior way of doing I/O. And a lot of people will eat crow for saying that "an RTX 2070" will be enough to play at the same or better settings than PS5 ...

I would not be surprised if latency ramifications on PC would sour the milk even with high-end hardware in terms of streaming.

Let's hope they finally port R&C Rift Apart so we have an exact enough comparison between PS5 I/O and DS.

Although given that the game does not represent a major Sony brand and is already an older PS5 game, I wonder what happened to the port.

It was part of the Nvidia leak, which has proven to be true until now.

Maybe they ran into hurdles with that one ... I/O hurdles perhaps?!

Deleted member 11852

Guest

Whoosh?

Like a seasoned PC developer already, releasing launch day garbage.

Inuhanyou

Veteran

As interesting as it is scary. Current human society is not advanced enough to handle what AI will bring.

That's a lot of 'if's' 'maybe's' and 'we'll see in the future's'.

But since this is a technical forum I'll focus on the one point you raised which leaned in that direction:

"I would not be suprised if latency ramifications on PC would sour the milk even with high end hardware in terms of streaming."

What exactly do you mean by this? What latency? From where? And "sour the milk" how exactly? Let's hear the scenario with some more technical detail to support your theory.

Overstander

Newcomer

What I would likely mean by that - of course the adding up of copying data back and forth between the oh so many different memory systems. Way more complicated than the PS5's solution. Way more. But you can say what you want - in the end it stands: I don't believe that Jensen flipping a switch will enable just every GPU to overtake the PS5's storage solution. I have already explained many times why I think so.

I will not go into a more detailed explanation. I will here and there state the same but no explanation will be given. You believe in DS - I don't. Simple. I can live with that, can you?

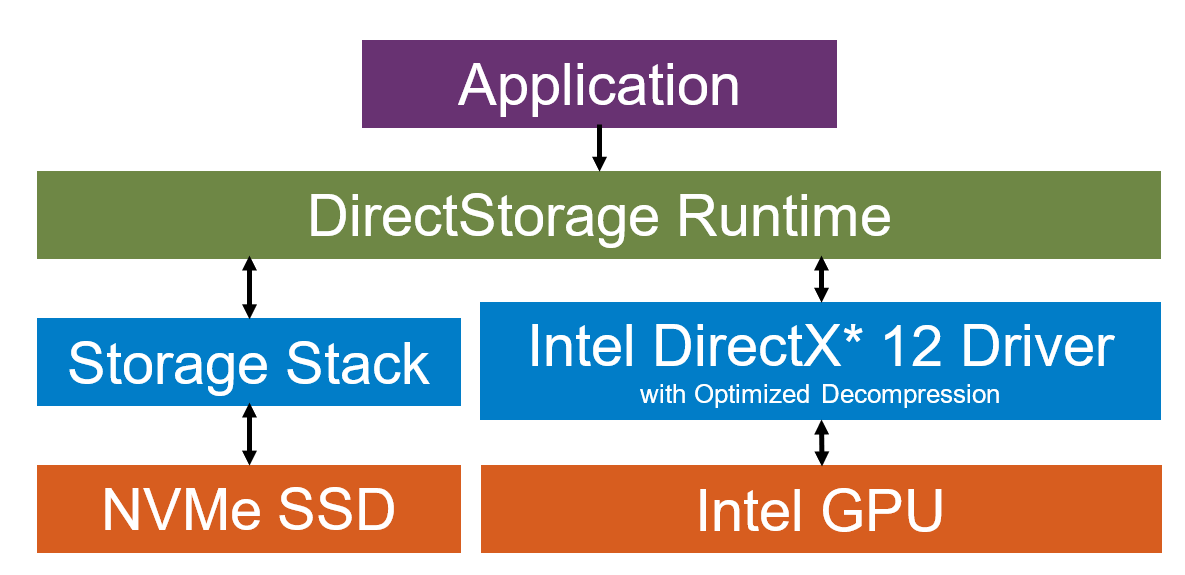

“Loading all the assets of a game level with DirectStorage is simple enough, but emerging graphics workloads load assets constantly, treating high-speed storage as a massive read-only, last-level cache. Streaming technology enables a scene containing hundreds of gigabytes of assets to be supported by 1/1000th as much physical memory. To explore this usage, and understand techniques to optimize performance, Intel built Expanse. It’s a simple demo of a virtual texturing system that now supports GPU decompression.”

“The profiling results for a high-end platform containing an Intel® Core™ i9-12900 CPU and Intel® Arc™ A770 GPU show that Expanse (in benchmark mode) can average hundreds of tiles uploaded per frame, and sometimes thousands of requests for a single submit. With compressed assets, it is possible for the uncompressed bandwidth to exceed the theoretical performance of the disk, or the PCIe interface.”
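To put rough numbers on the two claims in the Intel quote, here is a minimal sketch. The drive speed, compression ratio and total asset size are illustrative assumptions, not figures from Intel's demo; the only non-assumed arithmetic is the PCIe line rate (16 GT/s per lane for Gen 4, with 128b/130b encoding). The function names are made up for this example.

```python
def pcie_link_gbps(lanes: int, gt_per_s: float) -> float:
    """Theoretical one-way bandwidth of a PCIe Gen 3+ link, in GB/s."""
    # 128b/130b encoding: 128 payload bits for every 130 bits on the wire.
    return lanes * gt_per_s * (128 / 130) / 8


def uncompressed_gbps(compressed_gbps: float, ratio: float) -> float:
    """Data rate after decompression, given the compressed read rate."""
    if ratio < 1.0:
        raise ValueError("compression ratio must be >= 1")
    return compressed_gbps * ratio


def resident_gb(total_assets_gb: float, resident_fraction: float) -> float:
    """Physical memory needed if only a fraction of the assets is resident."""
    return total_assets_gb * resident_fraction


# Hypothetical inputs, chosen only to illustrate the arithmetic.
ASSUMED_DRIVE_GBPS = 7.0    # compressed read rate of an NVMe drive
ASSUMED_RATIO = 2.0         # average compression ratio of the assets
ASSUMED_ASSETS_GB = 500.0   # "hundreds of gigabytes" of scene assets

link = pcie_link_gbps(lanes=4, gt_per_s=16.0)  # Gen 4 x4 link to the drive
delivered = uncompressed_gbps(ASSUMED_DRIVE_GBPS, ASSUMED_RATIO)

print(f"PCIe 4.0 x4 theoretical: {link:.2f} GB/s")
print(f"Uncompressed data delivered: {delivered:.2f} GB/s")
print(f"Resident set at 1/1000th: {resident_gb(ASSUMED_ASSETS_GB, 1 / 1000):.2f} GB")
```

With these assumed inputs the decompressed rate ends up above what the drive's link can physically carry, which is the point of the quote: the data crosses the bus compressed and only expands on the GPU side.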

If you think PC can’t compete with PS5 I/O then you live in fantasy land. I admire the patience of pjbliverpool and other posters because this conversation is pure bullshit. It’s all based on fairy tales, wishful thinking and ignoring facts and math.

Overstander

Newcomer

I corrected that for you haha

I admire ... pjbliverpool

What I would likely mean by that - of course the adding up of copying data back and forth between the oh so many different memory systems. Way more complicated than the PS5's solution. Way more.

"Oh so many different memory systems".... I assume by that you mean it's 3 memory systems (SSD, RAM, VRAM) vs the PS5's 2 memory systems (SSD, unified RAM). We obviously have quite different definitions of "way more". You know, I'm starting to get the feeling you don't really understand the systems you're trying to speak authoritatively about.

Additionally, streaming data does not need to be copied "back and forth" between the memory pools. If you require a texture for example, on the PC you copy it from the SSD into the system RAM, and then from the system RAM into the VRAM. On the PS5 you copy it from the SSD to the shared memory directly. So that's one extra copy. But by far (i.e. a couple orders of magnitude at least) the highest latency operation in that sequence is the copy from SSD since that is a far higher latency device than DRAM. Therefore the additional latency added by the copy from RAM to VRAM is almost entirely irrelevant to the full end to end operation.

So with the above in mind, I'm sure you can understand that if you were to pre-cache data in system memory and then call it from there into VRAM as needed - essentially using RAM as an additional cache between VRAM and SSD, you can actually get data into VRAM on PC much, much faster than you can on the PS5, both in terms of raw bandwidth, and latency.
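The argument above can be sketched as a toy latency model. Every number here is an assumption picked for illustration (per-request latencies, bandwidths, tile size), not a measurement of a PS5 or any PC, and decompression cost is left out entirely. `Hop`, `hop_time_us` and `path_time_us` are hypothetical names for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    name: str
    latency_us: float      # fixed per-request latency in microseconds (assumed)
    bandwidth_gbps: float  # sustained throughput in GB/s (assumed)


def hop_time_us(hop: Hop, size_mb: float) -> float:
    """Fixed latency plus transfer time for one copy, in microseconds."""
    transfer_s = size_mb / (hop.bandwidth_gbps * 1000.0)  # 1 GB = 1000 MB
    return hop.latency_us + transfer_s * 1e6


def path_time_us(hops: list[Hop], size_mb: float) -> float:
    """Total time for a request that crosses each hop in sequence."""
    return sum(hop_time_us(h, size_mb) for h in hops)


# Illustrative figures only.
SSD = Hop("SSD read", latency_us=100.0, bandwidth_gbps=5.5)
PCIE = Hop("RAM -> VRAM over PCIe", latency_us=5.0, bandwidth_gbps=25.0)

UNIFIED_PATH = [SSD]        # SSD -> unified memory
PC_PATH = [SSD, PCIE]       # SSD -> system RAM -> VRAM
PC_CACHED_PATH = [PCIE]     # data already pre-cached in system RAM

TILE_MB = 0.064             # one 64 KB texture tile (assumed request size)

for label, path in (
    ("unified", UNIFIED_PATH),
    ("pc, from ssd", PC_PATH),
    ("pc, from ram cache", PC_CACHED_PATH),
):
    print(f"{label}: {path_time_us(path, TILE_MB):.1f} us")
```

Under these assumptions the extra RAM to VRAM copy adds only a few percent to a request served from the SSD, while a request served from the RAM cache skips the SSD hop altogether. Different inputs will shift the ratios, so treat the output as a way to reason about the shape of the argument rather than as a benchmark.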

But you can say what you want - in the end it stands: I don't believe that Jensen flipping a switch will enable just every GPU to overtake the PS5's storage solution. I have already explained many times why I think so.

I will not go into a more detailed explanation. I will here and there state the same but no explanation will be given. You believe in DS - I don't. Simple. I can live with that, can you?

But this is a technical forum where we discuss technical details. What you want to believe isn't really relevant; details are. Jensen isn't "flipping a switch" here. DirectStorage isn't even an Nvidia technology, it's a Microsoft technology developed in collaboration with Nvidia, Intel and AMD. They have literally had to invent a new GPU-decompressible compression scheme to make it work, as well as developing an entirely new storage API to replace the existing decades-old one. It's a pretty major technical achievement which has already been implemented in a real game (without GPU decompression), with corresponding benchmarks showing the reduced CPU load. And while there are no games using GPU decompression yet, there are real-world benchmarks that anyone can download and run which show very clear data throughput speedups with corresponding CPU overhead reductions. So simply "not believing in it" isn't really a rational option.

Overstander

Newcomer

All tests so far are not valid ones, since we have had no real stress test from a game that demands continuous streaming at the high GB/s numbers the PS5 is capable of.

We need to wait until Rift Apart lands on PC.

Or, now that we have in the TLOU PC port a PS5 exclusive that ignores (for now) your Holy Grail DirectStorage and therefore fails to even come close to PS5 in terms of streaming, we could have the phenomenal chance that they include DS support with a patch. Then we would see what kind of difference it can make. If that scenario actually happens, then the ignorant PC port was actually worth something, if for nothing else than at least supplying us with an additional (and by then still the best) data point.

Btw - is it known already how much data is actually streamed at all times on PC? Did someone measure this?

Would be interesting to know.

Silent_Buddha

Legend

As I said, I understand not using DirectStorage 2 with GPU decompression. That’s much harder to implement but the guys at Square managed to get 1.1 in Forspoken and it came out like 2 months before their game shipped. Surely, Naughty Dog could have used it for this game considering the awful load times.

Something to keep in mind: the Square-Enix team that was working on that had much earlier access to DirectStorage due to MS using them to help develop and test it. Basically the Forspoken team had more than a year of lead time over pretty much any other developer WRT implementing it into their game. Thus its implementation was already in a relatively mature state in their engine even before DS was officially released.

Regards,

SB

I'm kind of annoyed at the lack of GDC talks about some of these spectacular PS5 exclusives which are stressing so much bandwidth every second. I'd love to read about how they're only possible on PS5 and nothing else.

In fact, I'm surprised Rift Apart is being ported to PC... because that seems to imply that the developers believe PC is capable of it! Which it's clear that it's not!

/s

Wasn't aware of that. Thanks for the information.

GhostofWar

Regular

Didn't Digital Foundry manage to do something like tape over a pin on an SSD to hamstring its performance to below 2.5GB/s, and it didn't change Rift Apart loading or performance at all? I can't for the life of me find the video, and I'm thinking it may have been a small talking point in a DF Direct. I remember watching Rich talking about it and how a Patreon member brought it to his attention. Someone with some younger grey matter might be able to remember where exactly this was from.
