Why would data be struggling to get to where it needs to be on a properly equipped PC and application? Like I'm seriously asking you to explain the bottleneck here in terms of interfaces, bandwidth and processing capability vs workload because all I'm hearing are wild claims about PS5 superiority without any technical details to back them up.

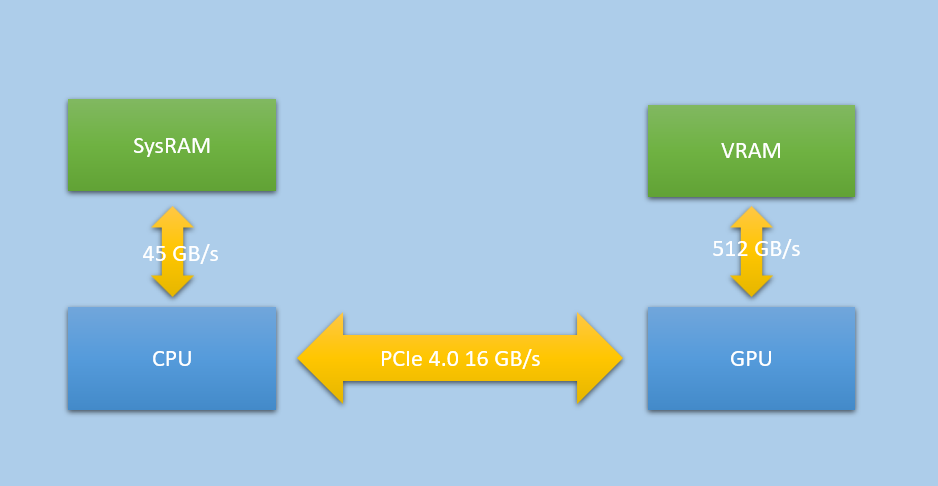

Obviously a PS5 is going to outperform an ill-equipped PC in this respect (and as we've seen, if the application isn't properly utilising a correctly equipped PC, the result will be the same), but let's take a PCIe 4.0 NVMe equipped system utilising DirectStorage 1.1 with GPU decompression as a baseline, along with a CPU and GPU that are at least a match for those in the console. And as a preview, I will say that a little more CPU power on the PC side should be required for a similar result, but I'll leave you to explain the detail of why...

Why would DirectStorage be unable to keep up? As with my above point, please explain this in terms of interfaces, bandwidth and processing capability vs workload. We already have benchmarks showing decompression throughput far in excess of the known limits of the hardware decompressor in the PS5, so what is it that you think will not be able to keep up, and why?

Perhaps it's the GPU's ability to keep up with the decompression workload at the same time as the rendering? Which raises the question: how much data are you expecting to be streamed in parallel with actual gameplay?

Even a modest GPU of around PS5 capability can decompress enough data to fill the entire VRAM of a standard 8GB GPU in less than 1 second. And you're never going to completely refresh your VRAM like that mid-gameplay. If I recall, the Matrix Awakens demo was streaming less than 150MB/s, and I think @HolySmoke presented some details for Rift Apart here before which show even that has modest streaming requirements on average.
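To put rough numbers on that, here is a quick back-of-envelope sketch. The 8 GB/s decompression rate is my assumption: it is simply the minimum rate implied by "fill 8GB in under a second", not a measured benchmark figure. The 150MB/s streaming rate is the Matrix Awakens number recalled above, and the function names are just illustrative.

```python
# Back-of-envelope: how much of the GPU's decompression throughput
# does in-game streaming actually need?

def seconds_to_fill(vram_gb: float, decompress_gb_per_s: float) -> float:
    """Time to decompress enough data to fill VRAM once."""
    return vram_gb / decompress_gb_per_s


def streaming_share(stream_mb_per_s: float, decompress_gb_per_s: float) -> float:
    """Fraction of decompression throughput consumed by streaming (1 GB = 1000 MB)."""
    return (stream_mb_per_s / 1000.0) / decompress_gb_per_s


VRAM_GB = 8.0                      # "standard 8GB GPU" from the post
ASSUMED_DECOMPRESS_GB_PER_S = 8.0  # ASSUMPTION: lower bound implied by "8GB in under 1 second"
STREAM_MB_PER_S = 150.0            # recalled Matrix Awakens streaming rate (upper bound)

fill_time = seconds_to_fill(VRAM_GB, ASSUMED_DECOMPRESS_GB_PER_S)
share = streaming_share(STREAM_MB_PER_S, ASSUMED_DECOMPRESS_GB_PER_S)

print(f"Time to fill {VRAM_GB:.0f} GB of VRAM: {fill_time:.2f} s")
print(f"Share of decompression throughput used by streaming: {share:.2%}")
```

Under those assumptions, gameplay streaming at that rate needs under 2% of the decompression throughput, which is the gap I'm asking people to account for. Plug in your own figures if you think mine are off.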

Of course there will be full scene changes involving load screens or animations (including ultra-short load animations like the rift transitions in Rift Apart), where a relatively large amount of data will be loaded from disk in a very short period. But you aren't actually rendering much of anything on the GPU at those points, so the entire GPU's resources can be dedicated to decompression, much in the same way that the hardware block on the PS5 is used.

For normal, much more modest streaming requirements, async compute is used, targeting the spare compute resources on these GPUs, as a GPU is very rarely 100% compute limited.