Up front I must admit I've hesitated to comment here because of the potential quagmire, but if there's any forum I trust to navigate that carefully it's this one, so I'll give it a shot. Also please note the usual disclaimer that I'm speaking primarily as a lifelong PC gamer but providing a bit of perspective from a few sides of the equation.

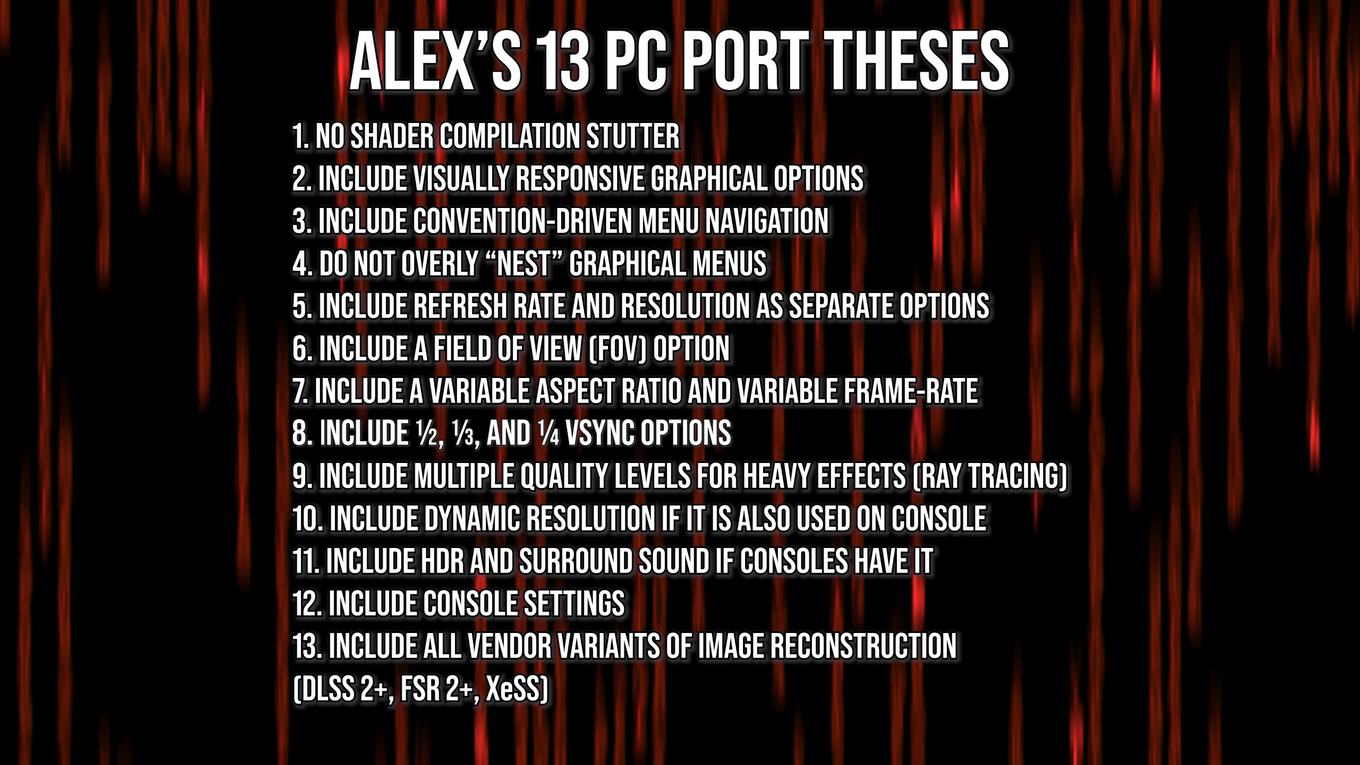

I won't touch most of these points as they are good, fairly obvious and uncontentious stuff. Different priorities for different folks (ex. some of these matter a lot if you are a tech reviewer but aren't exactly a big deal if you are going to set the settings once or twice and then play through the whole game) but for the most part when these don't happen it's for lack of time/resources. In the odd case there's some design reason why certain options aren't provided or are limited (ex. FOV/aspect ratio in competitive games). Some of them can be deceptively complicated or far more tricky in some games than others.

There are a few I do want to comment on a bit further though, if I may.

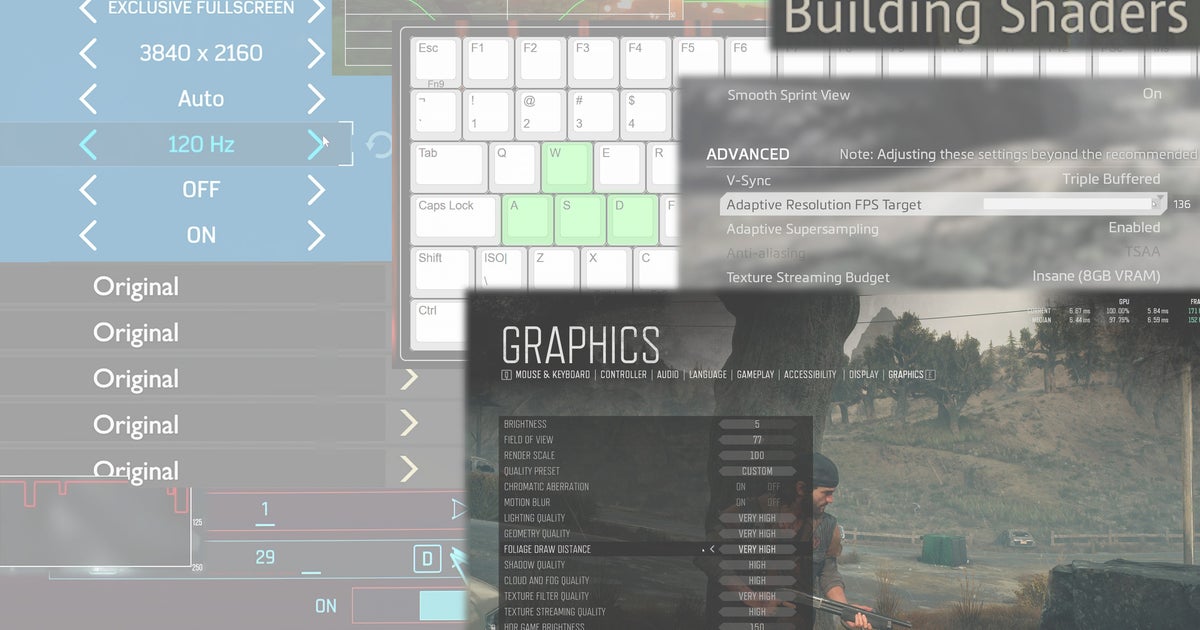

5) I'm gonna take a slightly contentious stance here, but one based on interactions with core Windows OS/WDDM stuff while I was at Intel. Games should not have "mode changes" at all. This is a job for the operating system, not a game. "Exclusive fullscreen" has been a lie since Windows 10 and is built on a bunch of hackery that tends to produce finicky and unexpected behavior. "Display output" stuff belongs in the operating system and no user-space application should be able to mess with it. If you want to change output resolution or display refresh rate when running a game, that should be a feature of the OS, not of each and every game. That said, I would also argue that changing the actual display output should not really be necessary in modern games, and is mostly a legacy thing. Smart (or even dumb...) upscaling and variable refresh can handle all the same cases without requiring the monitor to do some heavyweight mode switch or making anything incompatible with alt-tab, non-hacky overlays and so on.
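To make the "upscale instead of mode switch" idea a bit more concrete, here's a minimal sketch. It's Python purely for readability, and `internal_render_size` plus the rounding rule are my own made-up illustration, not how any particular engine does it. The display stays at whatever mode the OS has set; the game only picks a smaller internal render target and upscales to the output size.

```python
def internal_render_size(output_w, output_h, scale):
    """Pick an internal render-target size for a fixed display output.

    output_w/output_h: the desktop resolution the OS already has set
                       (the game never changes it).
    scale: per-axis render scale in (0, 1], e.g. 0.5 renders a quarter
           of the pixels and relies on an upscaler to fill the output.
    Hypothetical helper for illustration only.
    """
    if not 0.0 < scale <= 1.0:
        raise ValueError("scale must be in (0, 1]")
    # Round to whole pixels and never go below 1x1.
    w = max(1, round(output_w * scale))
    h = max(1, round(output_h * scale))
    return (w, h)


if __name__ == "__main__":
    # 4K desktop, rendering internally at half resolution per axis.
    print(internal_render_size(3840, 2160, 0.5))
```

The point is just that "resolution" becomes a per-game render setting, while the actual scanout mode (and alt-tab, overlays, etc.) is left alone.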

12) I don't disagree with the ask and in some cases it makes sense, but I do want to say that's not universally true for two reasons. First, in some games the specific way that things are done just doesn't really apply in the same way on PCs. Decisions on the console settings aren't just about tradeoffs for a given performance budget, but also about things like "this path is cheaper/more expensive on console because of this detail that isn't possible on PC." Async compute is a common case where things can be scheduled pretty carefully to overlap specific passes on console, whereas that isn't really possible on PC. Raytracing, mesh shaders and other "newish" things also have different paths and quirks on the different platforms that can have a major impact on tradeoffs. Thus even in cases where it's possible to have "settings with similar output" on PC as console, it sometimes doesn't make sense. I know the argument here will be "sure, but the more settings the better and we can just tweak it further ourselves!", but sometimes certain concepts or tradeoffs just don't apply in the same way on PC, and other ones don't apply on console. Thus I agree it's a nice thing to have where possible but I don't think we should ding games for not having some console setting *specifically*. We should evaluate the games based on how well they cover the scalability spectrum with the settings they do provide, as holding them to some arbitrary standard about "comparable console settings" starts to feel a bit more like we're primarily concerned about PC/console comparisons and fanboying than the experience on PC itself.

13) On one level, sure. If you're going to provide one it's great to provide all. Certainly there should be *a* modern AA/upsampling solution available on all GPUs, even future ones that don't yet exist. That said, the amount of resources the three companies throw at implementing these into games is vastly different as you might imagine, and some of them have been around longer than others, so it's not really surprising to see the results. Still a good aspirational point of course given the constraints. THAT SAID, I have a related rant here so buckle up...

These vendor-specific libraries should be AT MOST considered to be a stopgap "solution" until standard, cross-vendor implementations are possible to whatever level specific games require. Don't be fooled by the IHVs here, this is absolutely no different than GameWorks, tessellation hackery, MSAA hackery and other vendor-specific lock-in in the past. These algorithms are not some "separate little post effect", they are key parts of the rendering engine with tendrils and constraints that heavily impact everything. It is neither sustainable nor desirable for large backend chunks of game renderers to delegate over to an IHV-specific graphics driver for reconstruction.

Obviously FSR2 gets a bit of a pass here because it is effectively example code, not driver magic. For that reason though, we shouldn't require game developers to implement "specifically FSR2". If they want to use that as a starting point where it makes sense, great. If they implement their own thing or use TSR or whatever, that's great too. Let's compare on quality and performance, not on buzzwords.

Whether we really ultimately need ML for this is - IMO - hugely up for debate. But even if you strongly believe that we do, then we need to get to a solution where you can implement something similar to DLSS/XeSS in standards-compliant ML APIs and it produces identical pixels across the different implementations. Everyone in the industry should be pushing the IHVs to do this and not just accepting their arguments about why they can't/don't want to. If they refuse to, we absolutely should refuse to use their closed-source implementations in the long run and developers should not be criticized for taking a hard line on this.
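For what "identical pixels across the different implementations" would mean in practice, here's a rough sketch of the kind of check I have in mind. It's Python for brevity; `frame_digest`, `implementations_agree` and the vendor labels are hypothetical names for illustration, and it assumes each implementation hands back its final frame as raw bytes in the same pixel format.

```python
import hashlib


def frame_digest(pixels):
    """Hash a raw frame buffer (bytes) so frames can be compared cheaply."""
    return hashlib.sha256(pixels).hexdigest()


def implementations_agree(frames):
    """True only if every implementation produced bit-identical pixels.

    frames: dict mapping a label (e.g. "vendor_a") to that
            implementation's output frame as raw bytes.
    """
    digests = {frame_digest(p) for p in frames.values()}
    return len(digests) <= 1


if __name__ == "__main__":
    frame = bytes([10, 20, 30, 255] * 4)   # tiny fake RGBA frame
    off_by_one = bytearray(frame)
    off_by_one[0] ^= 1                     # flip one bit in one channel
    print(implementations_agree({"vendor_a": frame, "vendor_b": bytes(frame)}))
    print(implementations_agree({"vendor_a": frame, "vendor_b": bytes(off_by_one)}))
```

That's a deliberately strict bar: a single differing bit counts as a mismatch, which is the whole point of asking for a standards-compliant implementation rather than "looks about the same".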

Since DF often makes good points about retro games and wanting to be able to preserve experiences into the future I will make one final point on that front: stuff like DLSS and XeSS is absolutely the sort of shit that will break and not work 10-20 years from now, potentially leaving us with games that are missing AA/upsampling entirely in the future. It's not reasonable or desirable for games to delegate such a core part of the renderer to external software.

Enough ranting, but I had to get that out.