I don't know of any point at which any of this was proprietary or closed source. Again, I'm legitimately curious... where are you guys getting these ideas?

Are features like Temporal Super Resolution (TSR) and Chaos physics considered "like the rest of the engine"? There could be other features too, but at one point I understood they were proprietary, though they may have been open sourced since.


Digital Foundry Article Technical Discussion [2023]

- Thread starter: BRiT

- Status: Not open for further replies.

Oh, you're right. Probably just an animation loop that fooled me.

I think it's just the randomness of the movement. If you look at 22:00, the RR shot is moving while the other isn't.

There's nothing unreasonable about this. It's been made pretty clear that if you've got the hooks in to implement FSR2, there's genuinely no good reason not to also offer the clearly superior DLSS2. Given Nvidia's dominance in the market and the fact that they're a large AAA dev, it's genuinely quite unreasonable NOT to do so, in my opinion. If you actually care about your customers and aren't trying to follow your perceived guidelines from the hardware vendor that wrote you a big check, of course...

In case you didn't get the memo, the entire tech enthusiast community is showing a clear case of playing favourites, since most of them are willing to witch-hunt/bully developers into supporting a certain vendor's non-standard proprietary technology...

This isn't some huge effort at all. I'm not generally a fan of gamer witch-hunts, especially from the PC community, but I don't think they're wrong in this instance.

Not an unreasonable take, yea. It's just one of those things that's very hard to say without really having insider knowledge.

Of course it doesn't. But poor GPU utilisation, as indicated by abnormally low power draw, does.

It may be the case that a game can be properly optimised for only one of the major IHVs at a time. And if a game chooses to optimise for AMD, then that is absolutely fine. But let's not pretend this is a well-optimised game on Nvidia GPUs when the power draw figures clearly show otherwise.

The power draw figures I've seen as standard in Starfield are not dissimilar to what I see in Ratchet & Clank when I'm VRAM limited and losing a good chunk of performance as a result. Obviously VRAM limitations aren't the issue with Starfield, but the GPU is underutilised in a similar way.

I honestly can't think of an example where there was any large outrage over a game running much better on Nvidia hardware. Seriously, I can't. Maybe my memory is simply failing me, that's entirely possible, but can you think of examples yourself?

When NVIDIA equivalent GPUs run 30% better, yes, people do. Don't try to paint this as a double standard when there isn't one.


Deleted member 2197

Guest

It's good to know that features like TSR and Chaos Physics are open source. Some discussion on the Unreal forums and a past comment you made led me to believe certain aspects of UE5 were not truly open source like Blender or Godot:

I don't know of any point at which any of this was proprietary or closed source. Again, I'm legitimately curious... where are you guys getting these ideas?

The reason Unreal Engine is not Open Source is because its source code is not available for anyone to use. It’s simply available as a reference for developers, and like Open Source, it allows the community to contribute code back to Unreal Engine. Even if you do use the UE source and make your own program out of it, Epic Games still has all the rights to it.

When this is the case, where you can find the source code for a program but can’t use it however you’d like, it’s called source available.

There is a huge misconception that if a program’s source code is available, that it is Open Source. This is not the case. As @LogierJan said, Open Source is a licensing model.

...

Plugins have nothing to do with the source code subject. They are a way to extend a program and add functionality into it that wasn’t there before. Most modern-day programs support this, including Photoshop, Unity, and Google Docs, even though none of those programs are Open Source or even source available. Unreal Engine supports this concept as well.

This is the promise of Temporal Super Resolution by AMD.

It's not, this is an Unreal algorithm/solution. AMD's thing is called "FidelityFX Super Resolution" (FSR) if I recall correctly.

https://forum.beyond3d.com/threads/...ability-2022-04-05.61740/page-35#post-2205905

You don’t recall the outrage over the so-called underground sea of tessellation in Crysis 2 that was apparently ordered by NVIDIA to gimp AMD GPUs? Because that example is even more infamous than Starfield.

I honestly can't think of an example where there was any large outrage over a game running much better on Nvidia hardware. Seriously, I can't. Maybe my memory is simply failing me, that's entirely possible, but can you think of examples yourself?

I think from the perspective of just a PC gamer, this is true, somewhat - I really don’t know how many players are on Pascal, for instance. But from a developer's viewpoint, the most modern feature set with the largest group is DX12 Ultimate, and if you don’t need the most modern functionality, DX12 is actually the largest group.

Of course a developer has every right to ensure the playability of their game on old hardware, but they also can't escape the responsibility of catering to the majority of their target hardware. Doing one thing doesn't negate the other.

Nvidia moves so fast that it’s not a target developers should aim for. I literally bought a 3070 and it’s wholly obsolete if we go by some posters here. I don’t have frame gen, and I’m very lucky to have RR. I have 8GB of memory, so my card is basically useless across the board.

If I had a 4090 I'd understand the emotions a little more, because you pay a pretty penny for it, but a 3070 during the height of COVID was not cheap either! And my card is obsolete: all it took was some fancy RT hardware, frame gen, and PS5 games taking way more than 8GB of VRAM.

Am I happy that UE5 and Starfield don’t require these things? Yes! It may perform like shit on a 4090 relative to what you’re used to, but I can play this game at fairly decent performance with lowered settings.

That really frames the discussion because when I see people upset about the majority being catered to, I only see the 40XX cards being mentioned.

At least DLSS is coming. That would support cards down to the 20XX series.

Can you think of an example that isn't from more than a decade ago? I know you'll accuse me of shifting the goalposts, but seriously, that was two generations of console hardware ago, and actually might still have some merit.

You don’t recall the outrage over the so-called underground sea of tessellation in Crysis 2 that was apparently ordered by NVIDIA to gimp AMD GPUs? Because that example is even more infamous than Starfield.

When NVIDIA equivalent GPUs run 30% better, yes, people do. Don't try to paint this as a double standard when there isn't one.

I think people convince themselves that certain GPUs are 'equivalents' and then abstract away differences in the hardware and then get surprised when there's an outlier.

Ah, this is really just people splitting hairs over licensing terminology. You can call it "source available" if you want. Conversely, though, the classic "open source" GPL/LGPL licenses are restrictive in other directions, so they are quite different from licenses like Apache or BSD. Obviously licenses matter for using the stuff, but in all of these cases the full source code is available. In Unreal's case there is of course a royalty that kicks in after (IIRC) $1 mil in revenue, which is what the person here is noting vs. non-commercial engines like Godot.

It's good to know that features like TSR and Chaos Physics are open source. Some discussion on the Unreal forums and a past comment you made led me to believe certain aspects of UE5 were not truly open source like Blender or Godot:

In terms of trying to keep secrets or something, I'll just quote from the page I linked earlier https://www.unrealengine.com/en-US/ue-on-github:

Q: "Can I study and learn from Unreal Engine code, and then utilize that knowledge in writing my own game or competing engine?"

A: "Yes, as long as you don't copy any of the code. Code is copyrighted, but knowledge is free!"


How often does it happen that AMD or NVIDIA outperform their counterparts by over 30% in non-RT workloads? You won't hear many complaints other than "It favors AMD (or NVIDIA)" when the difference is within 10-15%, or sometimes even 20%. Recent examples are AC Odyssey and COD Warzone, where AMD GPUs perform far better than NVIDIA ones. The 7900 XTX beats the 4080 by a whopping 38% in Starfield according to HU, and this came after the controversy that AMD is/was supposedly blocking DLSS implementation in the game. The outrage is very easy to understand when put into context. There's no double standard at play.

Can you think of an example that isn't from more than a decade ago? I know you'll accuse me of shifting the goalposts, but seriously, that was two generations of console hardware ago, and actually might still have some merit.

Nobody "convinces" themselves of anything. We look at the available data, and 95% of the time a 7900 XTX will perform within 10% of a 4080. This goes for multiple GPUs and has been a thing for a long time: 3080/6800 XT, 3090/6900 XT, 670/7950, R9 290X/780 Ti, etc. That the 7900 XTX crushes the 4080 by almost 38% in a game where DLSS is conspicuously missing will raise eyebrows.

I think people convince themselves that certain GPUs are 'equivalents' and then abstract away differences in the hardware and then get surprised when there's an outlier.

Nobody "convinces" themselves of anything. We look at the available data and 95% of the time, a 7900 XTX will perform within 10% of a 4080. This goes for multiple GPUs and has been a thing for a long time, 3080/6800 XT, 3090/6900 XT, 670/7950, R9 290X/780 Ti, etc. That the 7900 XTX crushes the 4080 by almost 38% in a game where DLSS is conspicuously missing will raise eyebrows.

Yup. You've abstracted away differences in the hardware and expect similar results from somewhat disparate shader workloads.

Expect is not the word I would use. Everyone will rightfully wonder where the disparity stems from. So far, no one seems to really know.

Yup. You've abstracted away differences in the hardware and expect similar results from somewhat disparate shader workloads.

Flappy Pannus

Veteran

I honestly can't think of an example where there was any large outrage over a game running much better on Nvidia hardware. Seriously, I can't. Maybe my memory is simply failing me, that's entirely possible, but can you think of examples yourself?

It stands to reason that the vendor with significantly higher market share is going to have the larger userbase, so their ire is going to be louder when they notice this disparity just due to the numbers - but combine that with one of the biggest PC game launches in years, and it's naturally going to get more attention. The FSR-exclusive stuff was also likely occurring well before Starfield; it's just that this was such a large release that it finally got a spotlight shone on it (not saying the performance discrepancy is due to anything contractual by AMD, though; I think it's more a case of a game heavily optimized for the Xbox/RDNA architecture, which is fine if so).

That being said, is there an example of a game running 30% better on Nvidia compared to the equivalent Radeon when we're not talking about RT? Honest question, I don't know.


@trinibwoy where do you think Jakub said that the higher ups were not excited about path tracing?

Jakub commented that he presented the first iteration of path tracing to some directors at the company and the response was "no way" due to the poor rendering of character faces.

30:10 in the video.

Jakub commented that he presented the first iteration of path tracing to some directors at the company and the response was "no way" due to the poor rendering of character faces.

30:10 in the video.

That is not what he said. He's talking about presenting the addition of ray reconstruction (2nd iteration) to some directors and how much better the faces look. Their, "No way!" response is basically a "I can't believe it" response.

That is not what he said. He's talking about presenting the addition of ray reconstruction (2nd iteration) to some directors and how much better the faces look. Their, "No way!" response is basically a "I can't believe it" response.

Lol that definitely didn't come across as a positive "No Way". I read it completely differently as the reaction to the "first implementation" of path tracing.

For quite a long time, nearly any game on UE4.

It stands to reason that the vendor with significantly higher market share is going to have the larger userbase, so their ire is going to be louder when they notice this disparity just due to the numbers - but combine that with one of the biggest PC game launches in years, and it's naturally going to get more attention. The FSR-exclusive stuff was also likely occurring well before Starfield; it's just that this was such a large release that it finally got a spotlight shone on it (not saying the performance discrepancy is due to anything contractual by AMD, though; I think it's more a case of a game heavily optimized for the Xbox/RDNA architecture, which is fine if so).

That being said, is there an example of a game running 30% better on Nvidia compared to the equivalent Radeon when we're not talking about RT? Honest question, I don't know.

Was the disparity ever that large in UE4 titles?

For quite a long time, nearly any game on UE4.

Flappy Pannus

Veteran

For quite a long time, nearly any game on UE4.

I get you're not big on specifics, but try it sometime.

Yup. You've abstracted away differences in the hardware and expect similar results from somewhat disparate shader workloads.

It's a question of what is a reasonable expectation. I think it's certainly possible (perhaps even desirable), given different architectures, for games to perform differently on GPUs in the same class; obviously, if they were identical, you would just flip a coin to decide your next purchase. What makes this an outlier now, though, is the degree of the difference with the rendering load being equal. Being an 'outlier' doesn't imply fraudulent/artificial, mind you, at least not in my eyes - there could very well be some significant architectural advantages of RDNA that just haven't been utilized well due to market conditions without AMD's assistance, sure.

However, it is also perfectly reasonable for gamers to see this particular level of performance discrepancy, after years of comparable data points between these GPU families, and wonder 'uh...wtf?!'. If we had more examples of this kind of chasm, then sure - you could say "Eh, different architectures, deal with it, duh" - but we just don't. If there are, please point me towards those games.

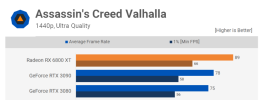

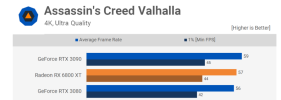

For example, when it was released, AMD had a clear edge in AC: Valhalla. I routinely saw people wonder what was up with that title on Nvidia GPUs, and some Nvidia fanboys complained when it was included in benchmarks, as it would 'unfairly' skew results toward Radeon (which was silly; it was the latest release in a huge franchise). Eventually, driver updates from Nvidia negated much of that early lead. This 'large' AMD advantage at launch, though? Well:

The 6800XT had a 15% advantage at 1440p over the 3080, and was all but identical at 4k. That was what defined an "AMD optimized" title in years past. 30-40%? This is decidedly new territory in rasterized titles, it's understandable for people to see that and be surprised.
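For anyone comparing the percentages thrown around in this thread (15%, "within 10%", 38%), "card A is X% faster than card B" is normally the ratio of average frame rates minus one. A minimal sketch of that arithmetic follows; the helper name `percent_lead` and the frame rates are hypothetical, chosen only to illustrate the calculation, and are not benchmark results.

```python
def percent_lead(fps_a: float, fps_b: float) -> float:
    """Return how much faster A is than B, in percent (negative means A trails)."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical frame rates, used only to demonstrate the arithmetic.
print(round(percent_lead(69.0, 50.0), 1))  # 38.0  -> A is 38% faster than B
print(round(percent_lead(57.5, 50.0), 1))  # 15.0  -> a 15% lead
print(round(percent_lead(50.0, 69.0), 1))  # -27.5 -> the same gap seen from B's side
```

Note that the figure depends on which card is the baseline: a 38% lead for one card corresponds to the other being roughly 27.5% slower, so it is worth checking which direction a review is quoting.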
