arandomguy

Veteran

Would AMD really use FP32 for the compute shader? I thought they talked about FP16...

It's just guesswork on my part based on HUB's data.

The 6800 XT I think has something like 35%? more FP16 throughput than the RTX 3080 on paper, but I don't know offhand how real tests of the two compare in that respect.

At least with my rough estimation, as I haven't gone through the data finely and human pattern recognition of course can be pretty faulty, the strongest commonality to me does seem to be FP32 relative to everything else. Just to be more precise, I don't mean total FP32 throughput alone, but how much FP32 is available to the GPU relative to where it performs for gaming.

The other issue is that this could just be correlation, with something else on the GPUs that scales with FP32 resources accounting for the difference. There is also the limited sample size we have here.

arandomguy

Veteran

Just a follow-up, so I decided to parse some numbers. Ideally I would have liked actual overhead numbers (as I used in an earlier post), but I'm making do with perf gain instead. The performance gain number is how much higher the average FSR 2.0 Quality fps is than native. FP32 numbers and FP16 ratios are both pulled from TPU's database. I used "vs" because the cards being compared were the closest to the same performance tier for this game based on the numbers used, and they had the same settings.

RX 570 vs. 1650 Super at 1440p medium -

RX 570 - 10% perf gain. 1650 Super - 6%.

RX 570 - 5.1 tflops, 1:1 FP16. GTX 1650 Super - 4.4 tflops, 2:1 FP16.

The 1650 Super however has a faster baseline and therefore less total frame time per frame to work with for speedups.

Vega 64 vs 1070ti at 1440p ultra -

Vega 64 - 25%, 1070ti - 20%

Vega 64 - 12.7 tflops, 2:1 FP16. GTX 1070 Ti - 8.2 tflops, 1:64 FP16.

Both had essentially the same perf, so frame time per frame would be very close.

5700 XT vs. RTX 2060 at 1440p ultra, except RTX 2060 used low textures

5700 XT - 21%, RTX 2060 - 18%

5700 XT - 9.75 tflops, 2:1 FP16. RTX 2060 - 6.5 tflops, 2:1 FP16.

6800 XT vs. RTX 3080 at 4K Ultra

6800 XT - 28%, RTX 3080 - 38%

6800 XT - 20.7 tflops, 2:1 FP16. RTX 3080 - 29.8 tflops, 1:1 FP16.

6800 XT was actually faster at native but ended up slower with FSR 2.0 scaling regardless of quality.
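As a rough check on the four pairs above, here is a minimal Python sketch that only asks whether the card with more FP32 tflops in each pair is also the one with the larger FSR 2.0 Quality gain. The tuple layout and the helper name are my own; the figures are the ones listed above. It does not control for baseline frame rate or settings, and four pairs is far too small a sample to prove anything.

```python
# Each entry: (card A, FP32 tflops, FSR 2.0 Quality gain %, card B, FP32 tflops, gain %)
# Figures are the ones quoted in the post above.
pairs = [
    ("RX 570", 5.1, 10, "GTX 1650 Super", 4.4, 6),
    ("Vega 64", 12.7, 25, "GTX 1070 Ti", 8.2, 20),
    ("RX 5700 XT", 9.75, 21, "RTX 2060", 6.5, 18),
    ("RX 6800 XT", 20.7, 28, "RTX 3080", 29.8, 38),
]


def fp32_leader_gains_more(pair):
    """True when the card with more FP32 tflops also shows the larger perf gain."""
    _, tflops_a, gain_a, _, tflops_b, gain_b = pair
    return (tflops_a > tflops_b) == (gain_a > gain_b)


agree = sum(fp32_leader_gains_more(p) for p in pairs)
print(f"{agree} of {len(pairs)} pairs: the card with more FP32 also gained more")
```

The direction matching in a pair says nothing about how strongly gain scales with FP32, only that the ordering is the same.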

I'm going to throw this possibility out there as well. I wonder if AMD anticipated that performance comparisons against DLSS would likely be done on Ampere, and so put some priority on optimizing for Ampere?

As another aside, especially with XeSS also coming up and some speculation on how it's going to approach things, I wonder if we need to test scaling image quality to see whether the final result is consistent across different architectures and/or GPUs.

If I had a 2080 I would love to double check that.

Unfortunately, it seems only one GPU, the RTX 2080, was tested at both 4K and 1440p, so it is the only sample with which we can compare the scaling overhead against native.

RTX 2080 avg Frame times in milliseconds -

1440p Native - 13 (77 fps)

FSR 2.0 Quality 4K - 18.5

DLSS Quality 4K - 16.7

This means the avg frame time cost for FSR 2.0 is 5.5ms, DLSS is 3.7ms.

RTX 2080 1% Frame times in milliseconds -

1440p Native - 18.2 (55 fps)

FSR 2.0 Quality 4K - 23.3

DLSS Quality 4K - 21.7

This means the 1% frame time cost for FSR 2.0 is 5.1ms, DLSS is 3.5ms.

So at least for the RTX 2080 in this test case the overhead for FSR 2.0 Quality 4K is about 50% higher than DLSS Quality 4K, which means DLSS is 50% faster or FSR 2.0 is 33% slower depending on perspective.
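The arithmetic behind those overhead figures can be sketched in a few lines of Python. The function names are mine; the inputs are the RTX 2080 average frame times above. It assumes the 1440p native frame time is a fair stand-in for the internal render cost of Quality mode at 4K, which is only an approximation.

```python
def frame_time_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


def overhead_ms(upscaled_ms, internal_native_ms):
    """Extra frame time of the upscaled frame over native at the internal resolution."""
    return upscaled_ms - internal_native_ms


native_1440p_ms = 13.0  # about 77 fps
fsr2_cost = overhead_ms(18.5, native_1440p_ms)
dlss_cost = overhead_ms(16.7, native_1440p_ms)

fsr2_vs_dlss = fsr2_cost / dlss_cost - 1.0  # how much higher the FSR 2.0 overhead is
dlss_vs_fsr2 = 1.0 - dlss_cost / fsr2_cost  # how much lower the DLSS overhead is

print(f"FSR 2.0 overhead: {fsr2_cost:.1f} ms, DLSS overhead: {dlss_cost:.1f} ms")
print(f"FSR 2.0 overhead is {fsr2_vs_dlss:.0%} higher; DLSS overhead is {dlss_vs_fsr2:.0%} lower")
```

The two percentages describe the same gap from either side, which is where the "50% vs. 33%" wording comes from.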

However, my rough gauge of the overall data gives me the impression that FSR 2.0 might be most dependent on the available amount of FP32 resources relative to everything else. This would mean that Turing, and by extension the RTX 2080, might be "weaker" (well, we don't really have a baseline point) with respect to FSR 2.0 performance. It would also mean, with some irony, that FSR 2.0 performs better on Ampere than on RDNA2, which the data for the 6800 XT and RTX 3080 in this test would corroborate.

After my vid is done. Here, I am not sure why I am measuring FSR 2.0 as usually being more expensive on AMD than NV, but that is what is being measured. But one cannot forget it was not completely consistent: the 580, by my measurement, executed FSR faster than the 1060 at 1080p, apparently.

Edit: If I have time (which I do not), I would love to profile the cost of FSR 2.0 more, and more accurately. Even using native res internal is not the best as a base, as you are missing out on the UI costs and anything else that changes when output res is higher (mips, geometry LODs, etc.). And even just toggling FSR 2.0 is completely opaque as to what it is doing. It could also be changing the costs of other things as well and not just adding in FSR 2.0 reconstruction.

arandomguy

Veteran

It's difficult to guess what the bottleneck is because scaling between GPUs is all over the place. Pascal processes the FSR portion of the frame faster than GCN despite the FP32 and FP16 deficit.

What's giving you that impression?

Vega 64, for instance, has more perf gain with FSR2 than the 1070 Ti. The 1070 Ti's relative perf against the Vega 64 increases as resolution is lowered. We don't have a 1706x960 test, but at 1080p the Vega 64 gets 65 fps and the 1070 Ti 68 fps, whereas at 1440p FSR2 Quality the Vega 64 gets 60 fps and the 1070 Ti 59 fps. This at least to me suggests the Vega 64 is spending less time on FSR2 on average.

arandomguy

Veteran

I parsed TPU's numbers with their RTX 3060 (https://www.techpowerup.com/review/amd-fidelity-fx-fsr-20/2.html) using the first 2 comparison FPS numbers -

RTX 3060 frame times -

1440p - 14.1ms

DLSS Q 4k - 18.2ms

FSR2 Q 4k - 19.2ms

FSR1 Q 4k - 16.7ms

Overhead vs. 1440p native: DLSS Q 4k - 4.1ms. FSR2 Q 4k - 5.1ms. FSR1 Q 4k - 2.6ms.

Are you seeing it being more expensive on AMD vs. NV in general or is there specific architecture to architecture variances? At least based on my very early conjecture while Ampere seems faster than RDNA2, is that extending to all architectures? At least in the HUB test it doesn't seem like Turing is faster than RDNA1, nor is Pascal faster than GCN.

I agree the overhead time isn't strictly just FSR 2.0 (or DLSS) processing itself, as not everything else is identical. I guess the better term for what this refers to would be functional overhead or functional cost. If, hypothetically, one upscaler needs higher inputs of certain factors than another, that would still mean it has a higher cost from a functional standpoint. The interesting follow-up on this line would be to know what the other differences between the upscaling methods are, aside from the actual scaling process itself.

The other advantage of having that data is that it better isolates against the issue of low vs. high fps, if we're assuming the upscalers have a relatively fixed cost. If that's true, then gains from the scalers are higher at lower fps, but the performance difference between the scalers also shrinks, while the reverse would be true at higher fps.
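To make the fixed-cost point concrete, here is a small Python sketch. The two costs are the RTX 3060 overheads worked out above; the internal frame times and the 2x factor for native rendering at output resolution are hypothetical values I picked for illustration, not measurements.

```python
# Functional overheads in ms, taken from the RTX 3060 figures above.
DLSS_COST_MS = 4.1
FSR2_COST_MS = 5.1

# Hypothetical: assume native rendering at output resolution takes twice as
# long as rendering at the internal resolution. Not a measured figure.
NATIVE_OUTPUT_SCALE = 2.0


def fps(frame_ms):
    """Convert milliseconds per frame to frames per second."""
    return 1000.0 / frame_ms


def gain_over_native(internal_ms, cost_ms):
    """Fractional fps gain of upscaling vs. native rendering at output resolution."""
    native_fps = fps(internal_ms * NATIVE_OUTPUT_SCALE)
    return fps(internal_ms + cost_ms) / native_fps - 1.0


def scaler_gap(internal_ms):
    """Fractional fps lead of the cheaper scaler over the costlier one."""
    return fps(internal_ms + DLSS_COST_MS) / fps(internal_ms + FSR2_COST_MS) - 1.0


internal_times_ms = [5.0, 10.0, 20.0, 40.0]  # hypothetical internal-res frame times
gains = [gain_over_native(t, FSR2_COST_MS) for t in internal_times_ms]
gaps = [scaler_gap(t) for t in internal_times_ms]

for t, g, d in zip(internal_times_ms, gains, gaps):
    print(f"{t:5.1f} ms internal: FSR2 gain {g:+.1%}, DLSS lead {d:.1%}")
```

With a fixed cost, the longer the frame (lower fps), the larger the gain over native and the smaller the gap between the two scalers, which is why raw fps comparisons at different performance levels are hard to read.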

These are wrong numbers since the 1440p scene here is very likely CPU bound. You need a GPU utilization counter to make sure the scene is not CPU bound, which is available on the next page - https://www.techpowerup.com/review/amd-fidelity-fx-fsr-20/3.html

GPU utilization here is 97% at 1440p, which indicates a GPU-bound scene.

DLSS Quality on 3080 takes 1.01 ms for 4K reconstruction vs No AA 1440p

FSR 2.0 Quality on 3080 takes 1.66 ms for 4K reconstruction vs No AA 1440p

You can search for DLSS integration guide where execution times are highlighted for different RTX models, the calculations above align with DLSS execution time numbers in this guide.

Alex tested a 1060 vs a 580. Despite the 580 having almost 50% more FP32 compute and 800% more FP16 compute, a 1060 runs the algorithm faster.

Turn down texture quality and see how it does. I am also curious how DLSS performs versus FSR 2.0 with a power limited GPU such as a laptop 2060; maybe the tensor cores are able to make a bigger difference in performance there.

On my 3090 with a 225W instead of 375W power limit DLSS performs much better:

Quality: +16%

Performance: +20%

Tried it on my 80W RTX2060 in the Notebook but it looks like 6GB VRAM is not enough in this game...

DegustatoR

Legend

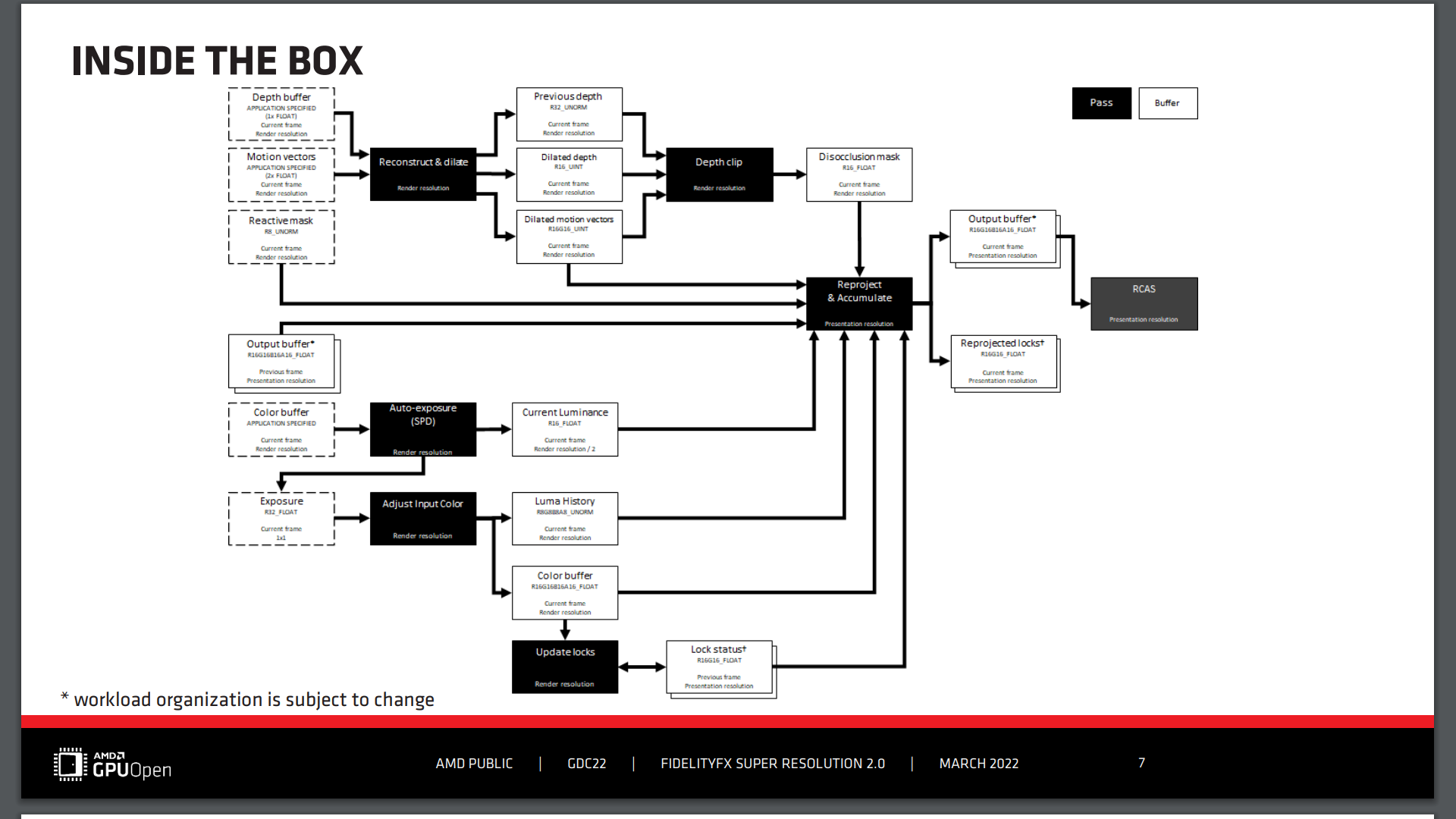

Those are data formats, but is there any indication of what math they are using to process all these buffers? Unless I'm not seeing it.

Deleted member 2197

Guest

Agreed, and it will be welcome to see how FSR 2.0 scales outside the Deathloop universe.

More importantly I'd wish to see more than just one game which was truly optimized for the first show to the public. How does it perform in Horizon, CP2077, God of War...

AMD’s FSR 2.0 debut, while limited, has upscaled our GPU hopes | Ars Technica

At this point, the real question is exactly how well FSR will scale outside of Deathloop's specific traits: its first-person perspective; its small, tight cities; and its high-contrast, saturated-color art style. Will FSR 2.0's rendering wizardry look as good while driving a virtual car or when seen in third-person perspective, across a foliage-lined landscape, or pumped full of more organic colors and constructions? Or is this where the FSR 2.0 model will begin coughing and stuttering as it tries to intelligently process wooden bridges, prairies full of swaying grass, or level-of-detail pop-in of elements on the horizon?

Did the opposite test - how much less power DLSS needs to achieve the same performance:

350W - FSR Performance in 4K: 100FPS

280W - DLSS Performance in 4K: 100FPS

Shame that I don't have a better notebook. It would be really interesting to see how both upscaling methods behave in a tight power budget scenario.
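For what it's worth, the 350W vs. 280W result at the same 100 FPS can be turned into energy per frame with a couple of lines of Python. The function name is mine, and it treats the power limit as if it were the actual board power draw, which is an assumption; real draw may sit below the limit.

```python
def joules_per_frame(power_w, fps):
    """Energy spent per rendered frame, assuming power_w is the real average draw."""
    return power_w / fps


fsr_j = joules_per_frame(350, 100)   # FSR Performance in 4K
dlss_j = joules_per_frame(280, 100)  # DLSS Performance in 4K
saving = 1.0 - dlss_j / fsr_j

print(f"FSR: {fsr_j:.1f} J/frame, DLSS: {dlss_j:.1f} J/frame, saving {saving:.0%}")
```

At equal frame rate the saving is simply the ratio of the two power limits, so this is one data point from one game on one card.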

Just turn down texture quality or disable Raytracing. There are plenty of ways to test that.

Smart test.

Did a few comparisons between DLSS and FSR 2.0 with overlapping geometry:

https://imgsli.com/MTA3OTc5/0/1

https://imgsli.com/MTA3OTY1

FSR 2.0 performance is breaking down in such scenarios...

This one lacks description. Which one is FSR / DLSS? Thanks.
