GDeflate in DirectStorage could be one example of that.

That’s a good example.

Now that opens the door for MS to ship an ML-based upscaler one day.



Wow, I am really surprised DirectSR is coming with an included implementation. Is there any other feature of DirectX that includes a GPU implementation in the runtime? It will be even easier for developers to include upscaling in their games now.

Haha, so it's just FSR2 built into DX, which is essentially useless.


But the biggest question of how a game would "enumerate" other options remains unanswered.

Will these options now be part of the driver, in which case AMD users won't be able to use XeSS?

It also opens the door for IHVs to improve upscaling quality of older games that are no longer being updated by the developer.

Newer versions of DLSS don't necessarily improve upscaling quality in older games, and they also provide several options for a game to choose from. XeSS is similar, I guess. Would all of this be lost with a driver-side implementation?


The way it is done now is IMO more convenient for the user than shipping just one version for all games with the driver.

And I honestly don't care about developers who can't manage to add an SDK of 5 DLLs to their projects.

But if that were the case, then the idea of the driver providing improvements with updates goes out the window.

It doesn’t have to be just one version in the driver. Drivers already ship tons of custom application profiles.

I still don't see any "pros".

We’ve covered all of this before. The pros of a common SDK + driver-side implementations far outweigh the cons.

No, it just means that the user side of this is way more important to me than the developers who can't find a week of time to integrate these SDKs.

Well, that just means you don’t have a full appreciation of what it takes to develop and ship software. /shrug

Also of note in this discussion:

AMD lists the ability to integrate FSR 3.1 via an API, which "unlocks" "upgradability" via DLLs, as a benefit of FSR 3.1.

If DirectSR prevents this, then it goes against this slide as well.

So if I understand that correctly, you can decouple FSR 3 Frame Generation from FSR upscaling and use DLSS + FG on RTX 2000 and RTX 3000 cards, and XeSS + FG on Intel and whatever other GPUs? That's excellent news if true.

Yep. Tying FSR FG to FSR SR was one of the weird things about FSR3.

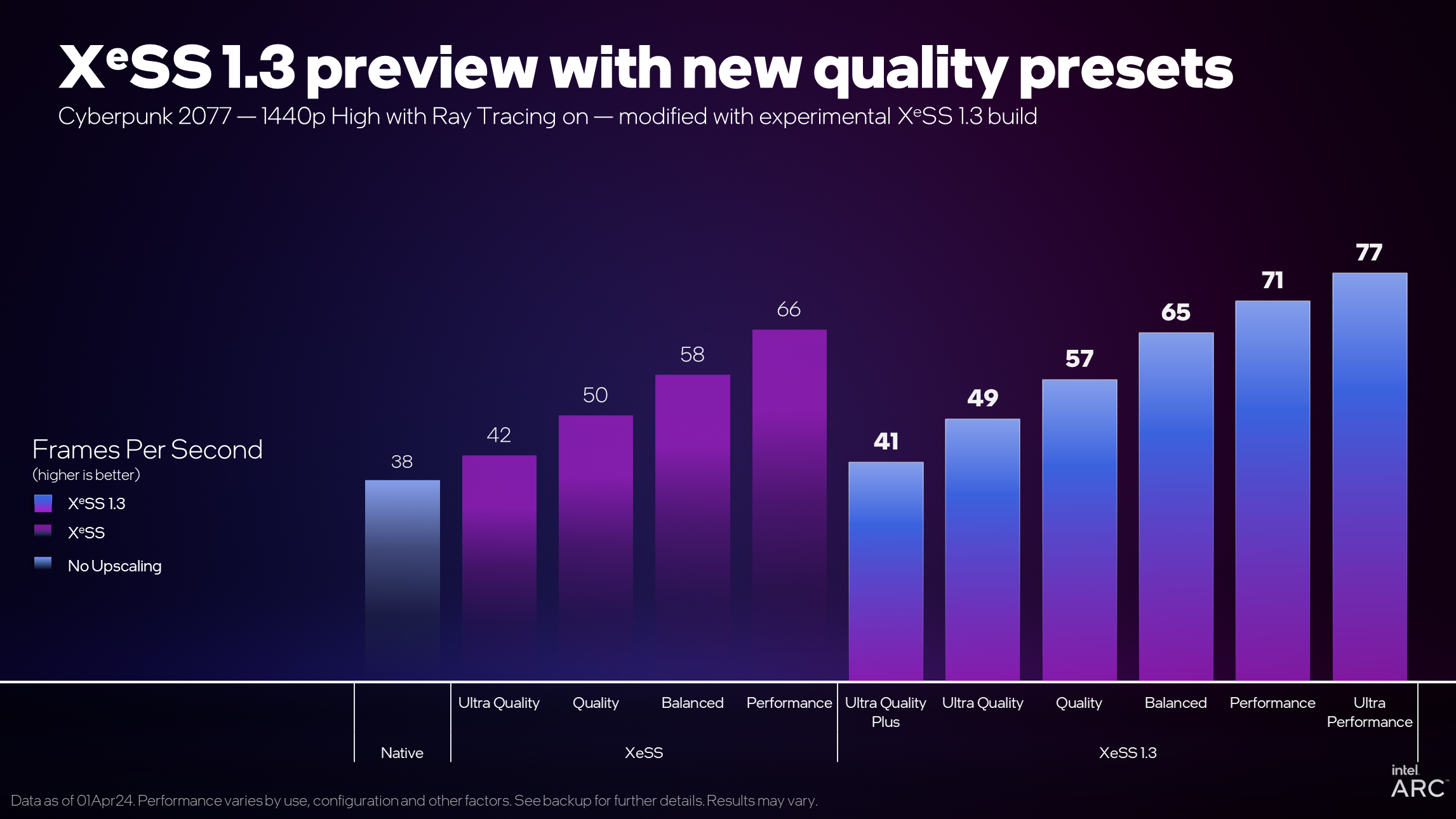

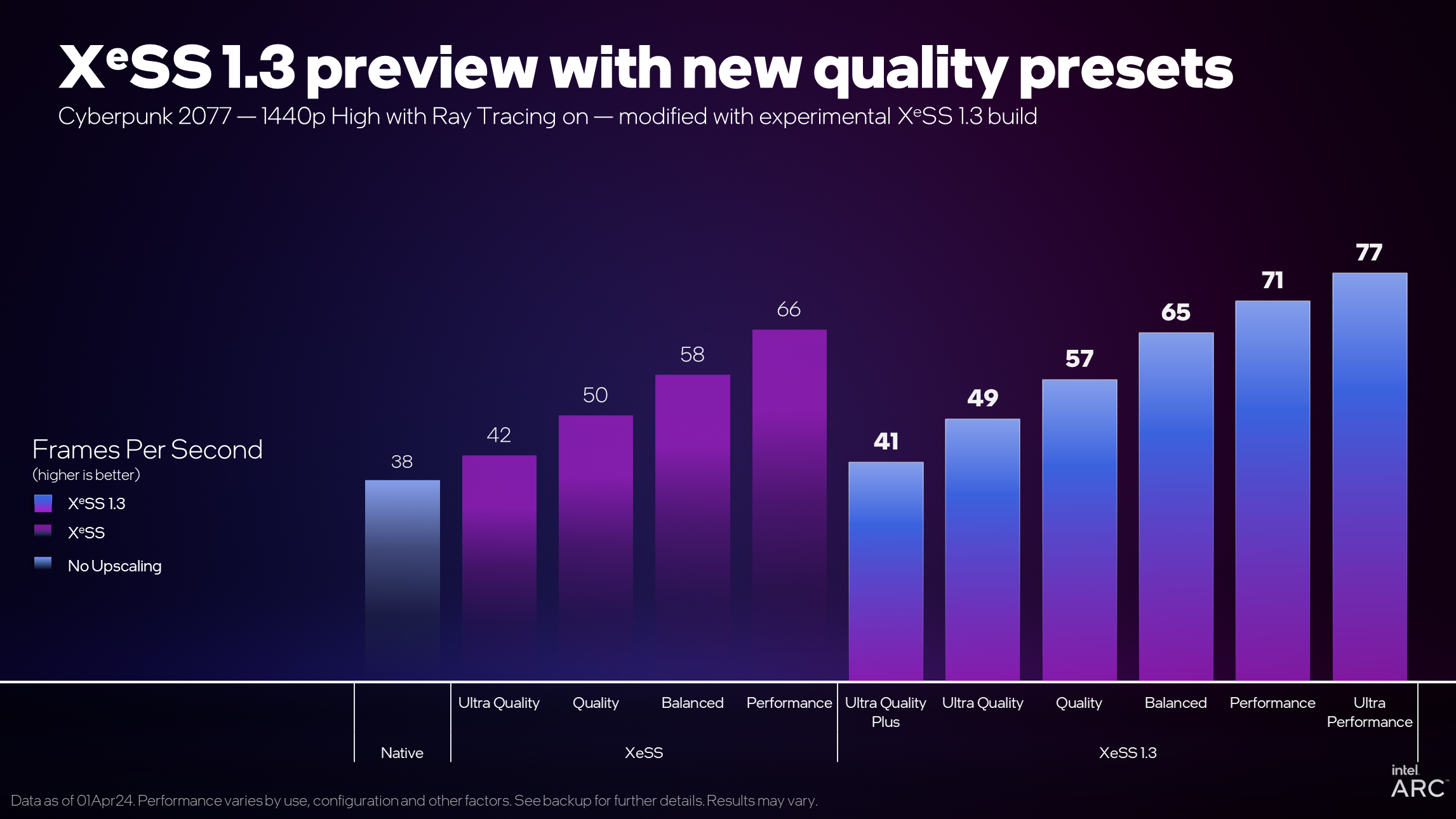

| Preset | Resolution scaling (per axis) in previous XeSS versions | Resolution scaling (per axis) in XeSS 1.3 |
|---|---|---|
| Native Anti-Aliasing | N/A | 1.0x (Native resolution) |
| Ultra Quality Plus | N/A | 1.3x |
| Ultra Quality | 1.3x | 1.5x |
| Quality | 1.5x | 1.7x |
| Balanced | 1.7x | 2.0x |
| Performance | 2.0x | 2.3x |
| Ultra Performance | N/A | 3.0x |
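To make the per-axis factors concrete, here is a minimal Python sketch that converts each preset's factor into an internal render resolution for a 3840x2160 output. The factors are copied from the table; rounding to the nearest pixel is an assumption, since the XeSS SDK may round or align render sizes differently.

```python
# Per-axis scaling factors from the table (output dimension / render dimension).
XESS_PRE_1_3 = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}
XESS_1_3 = {
    "Native Anti-Aliasing": 1.0,
    "Ultra Quality Plus": 1.3,
    "Ultra Quality": 1.5,
    "Quality": 1.7,
    "Balanced": 2.0,
    "Performance": 2.3,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, factor: float) -> tuple[int, int]:
    """Internal render size for a given output size.

    Assumption: both axes are divided by the same factor and rounded to the
    nearest pixel; the real SDK may round or align differently.
    """
    return round(out_w / factor), round(out_h / factor)


for label, presets in (("Pre-1.3 XeSS", XESS_PRE_1_3), ("XeSS 1.3", XESS_1_3)):
    print(label)
    for preset, factor in presets.items():
        w, h = render_resolution(3840, 2160, factor)
        print(f"  {preset:<22} {factor:.1f}x -> {w}x{h}")
```

For example, 1.3 Performance at 2.3x renders about 1670x939 before upscaling to 3840x2160.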

New scaling factors for old ones too, apparently with the idea that new and old offer similar quality, with the new ones performing better.

It just means they rearranged their scaling factors.

Yes, but with the improved quality of 1.3, at least I got the impression that old and new preset X offer similar quality, with the new one being faster due to its lower rendering resolution.

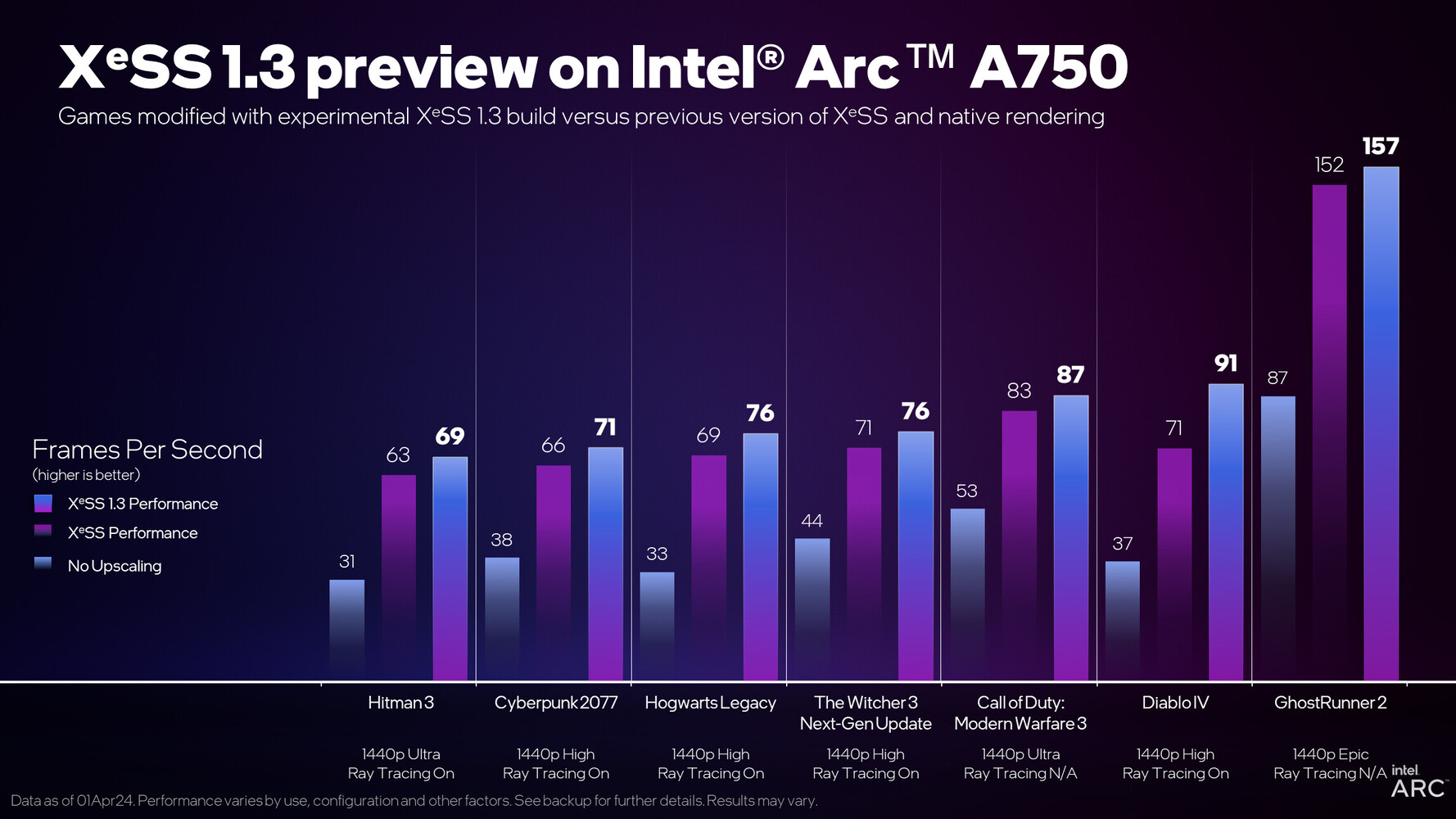

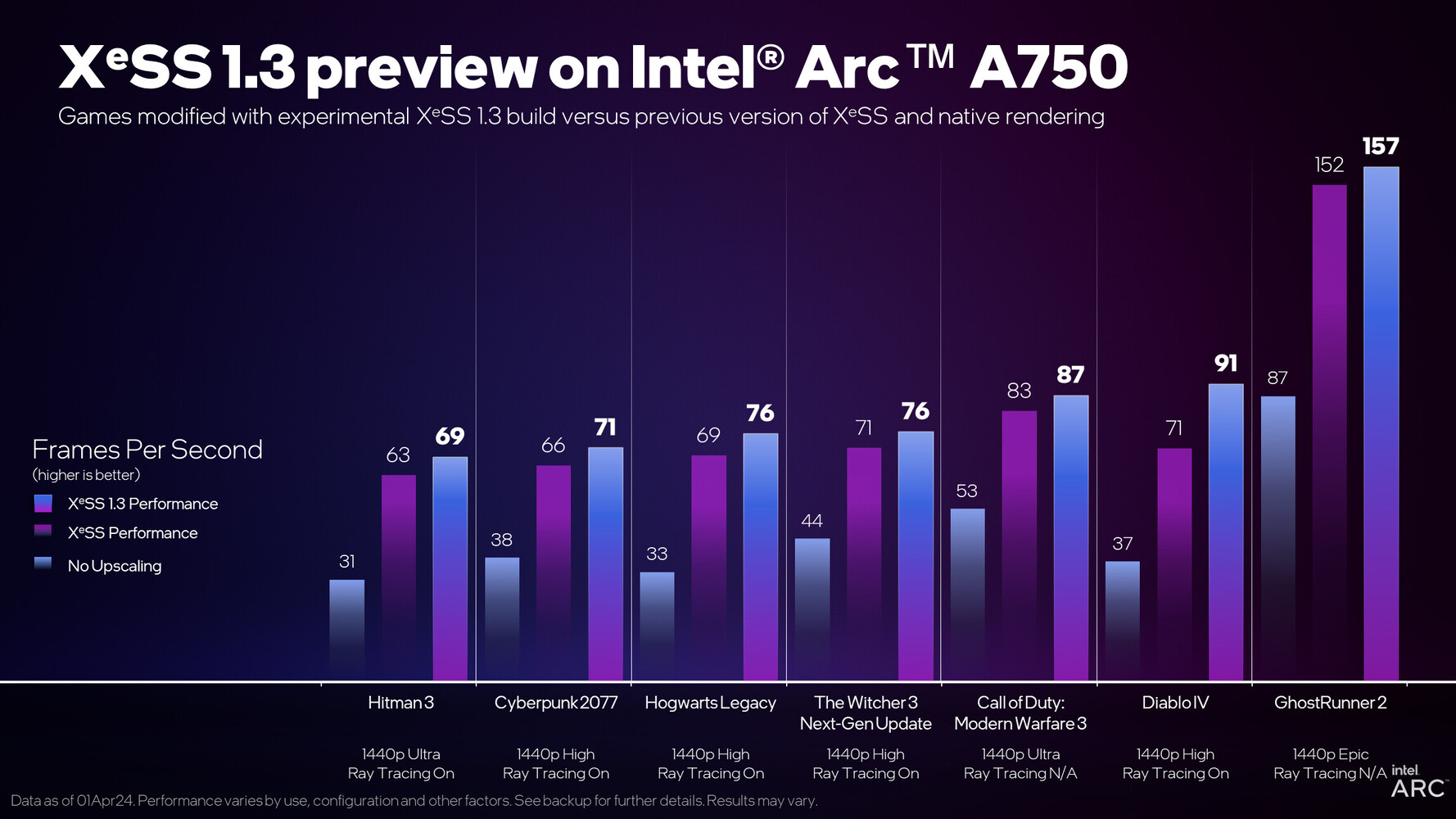

Old XeSS 1.2 Performance had a scaling factor of 2x, meaning 1080p to 2160p, but the new XeSS 1.3 Performance goes to 2.3x, meaning ~940p to 2160p. Because it renders at a lower resolution, it delivers slightly higher performance, which they show in their marketing slide.
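Because the factor applies to each axis, the number of rendered pixels falls with the square of the factor. A quick Python sketch of that arithmetic for the two Performance presets; it ignores the upscaler's own cost and any other fixed per-frame work, so frame rate should not be expected to scale by the same ratio.

```python
def rendered_pixel_fraction(factor: float) -> float:
    """Fraction of output pixels actually rendered when both axes are divided by `factor`."""
    return 1.0 / (factor * factor)


old_perf = rendered_pixel_fraction(2.0)  # XeSS 1.2 Performance: 0.25 of output pixels
new_perf = rendered_pixel_fraction(2.3)  # XeSS 1.3 Performance: ~0.189 of output pixels
reduction = 1.0 - new_perf / old_perf    # ~0.244, i.e. roughly 24% fewer pixels shaded

print(f"old: {old_perf:.3f}, new: {new_perf:.3f}, reduction: {reduction:.1%}")
```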

The old Quality preset uses 1.5x scaling, meaning 1440p to 2160p. Its equivalent among the new presets is Ultra Quality (also 1.5x); both deliver similar performance in Intel's testing (~50 fps).
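The old-to-new preset correspondence follows mechanically from matching factors. A minimal Python sketch, using the factors from the table, that pairs each pre-1.3 preset with the XeSS 1.3 preset sharing its factor. The dictionaries and the `equivalent_presets` function are illustrative only, not part of any XeSS SDK API. Matching factors means matching render resolution; given the quality changes in 1.3, it does not by itself guarantee matching image quality.

```python
XESS_PRE_1_3 = {"Ultra Quality": 1.3, "Quality": 1.5, "Balanced": 1.7, "Performance": 2.0}
XESS_1_3 = {
    "Native Anti-Aliasing": 1.0,
    "Ultra Quality Plus": 1.3,
    "Ultra Quality": 1.5,
    "Quality": 1.7,
    "Balanced": 2.0,
    "Performance": 2.3,
    "Ultra Performance": 3.0,
}


def equivalent_presets(old: dict[str, float], new: dict[str, float]) -> dict[str, str]:
    """Map each old preset name to the new preset with the same scaling factor, if one exists."""
    # Key on the rounded factor so tiny float differences can't break the lookup.
    by_factor = {round(factor, 2): name for name, factor in new.items()}
    return {
        name: by_factor[round(factor, 2)]
        for name, factor in old.items()
        if round(factor, 2) in by_factor
    }


mapping = equivalent_presets(XESS_PRE_1_3, XESS_1_3)
for old_name, new_name in mapping.items():
    # e.g. "Quality (1.5x) -> Ultra Quality"
    print(f"{old_name} ({XESS_PRE_1_3[old_name]}x) -> {new_name}")
```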

I’ve worked on many FSR (2+3) integrations at this point, and properly integrating it (or XeSS or DLSS for that matter) takes a bit longer than that. Initial integration to get up and running can be quick, depending on engine architecture, but the polish to get to shipping quality can be time consuming.

DirectSR isn’t a panacea, but it can save time, complexity, and maintenance effort for developers who have other priorities. That’s who it’s aimed at primarily, and widening upscaling tech adoption in general is a good idea.

On the other hand, it further solidifies interpolation, which is a dead end for VR.

Elucidate on this further; I'm not sure I follow, but I'm curious...