Scaling is all over the place.

They're testing older GPUs with it

davis.anthony

Veteran

I think the question for me is: is FSR 2.0 the best we're going to get from solutions that don't use ML?

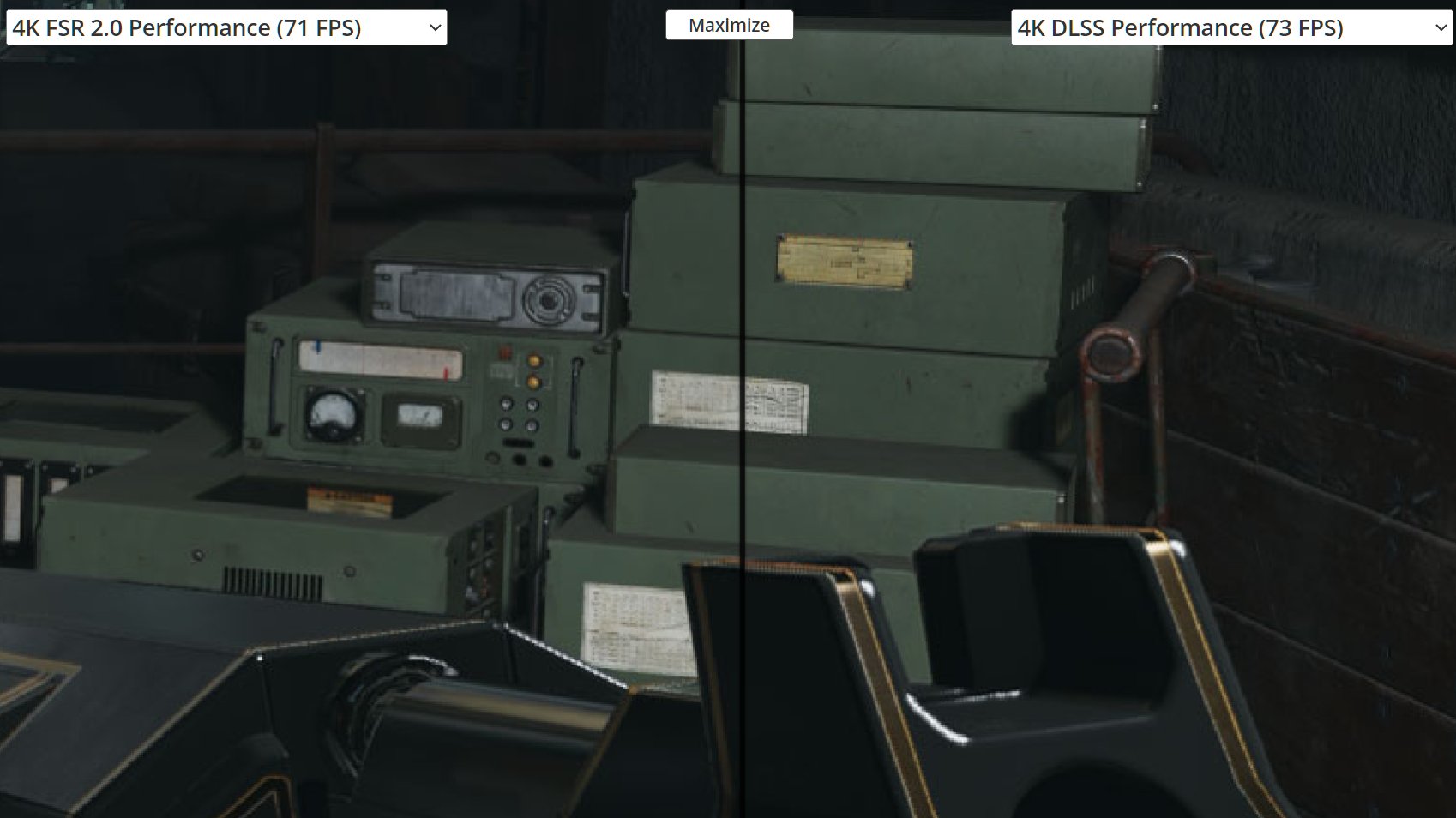

DLSS still has a noticeable advantage in details like hair and transparent textures like chain-link fences.

I think the question for me is: is FSR 2.0 the best we're going to get from solutions that don't use ML?

Probably. It will be close to TSR, TAAU and other custom solutions, but freely available to developers who want to use it (or don't want to build their own).

DavidGraham

Veteran

They're testing older GPUs with it

Scaling is all over the place.

On the RTX 2080, DLSS Quality still gives 10% more fps compared to FSR 2 Quality, and with higher quality of upscaling. DLSS Performance gives you 15% more fps compared to FSR 2 Performance at a much much higher upscaling quality.

For those still in doubt, this is the difference that ML makes compared to hand crafted algorithms.

arandomguy

Regular

I wish they had performance numbers at the native scaling resolution for comparison.

For example having 1440p Native to compare against 4k Quality. Or mainly 720p for the 1440p only GPUs.

I'll see if I have time later to manually parse their numbers and try to assemble a chart with comparisons.
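For reference, here is a rough sketch of which native resolution lines up with each upscaling mode. It assumes the per-axis scale factors AMD documents for FSR 2.0 (1.5x, 1.7x, 2.0x, 3.0x); the function and dictionary names are made up for illustration and are not part of any SDK.

```python
# Per-axis scale factors documented for FSR 2.0 quality modes.
# The names below are illustrative only, not an actual API.
SCALE_FACTORS = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(output_width, output_height, mode):
    """Return the internal (pre-upscale) resolution for an output size and mode."""
    factor = SCALE_FACTORS[mode]
    return round(output_width / factor), round(output_height / factor)


if __name__ == "__main__":
    # 4K Quality renders internally at 1440p.
    print(render_resolution(3840, 2160, "Quality"))      # (2560, 1440)
    # 1440p Performance renders internally at 720p.
    print(render_resolution(2560, 1440, "Performance"))  # (1280, 720)
```

So the fair baselines would be 1440p native against 4K Quality, and 720p native against 1440p Performance.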

I wish they had performance numbers at the native scaling resolution for comparison.

For example having 1440p Native to compare against 4k Quality.

I'll see if I have time later to manually parse their numbers and try to assemble a chart with comparisons.

More importantly, I'd like to see more than just one game, and one that was clearly optimized for the first public showing. How does it perform in Horizon, CP2077, God of War...

For image quality comparisons with these solutions I'd prefer to see them compared to a more "reference" image than "native." I'd like to see 1440p FSR 2.0 Quality, 1440p DLSS Quality compared to 1440p TAA super-sampled. Not sure how many games that support FSR 2.0, DLSS have an option to increase render-scale to 200%. Not sure the easiest way to get super-sampled output other than that. DSR on Nvidia seems kind of inconsistent. Super-sampled comparisons would be the best way to check things like colour accuracy.

davis.anthony

Veteran

For image quality comparisons with these solutions I'd prefer to see them compared to a more "reference" image than "native." I'd like to see 1440p FSR 2.0 Quality, 1440p DLSS Quality compared to 1440p TAA super-sampled. Not sure how many games that support FSR 2.0, DLSS have an option to increase render-scale to 200%. Not sure the easiest way to get super-sampled output other than that. DSR on Nvidia seems kind of inconsistent. Super-sampled comparisons would be the best way to check things like colour accuracy.

You can use NvInspector to force SSAA in games.

On the RTX 2080, DLSS Quality still gives 10% more fps compared to FSR 2 Quality, and with higher quality of upscaling. DLSS Performance gives you 15% more fps compared to FSR 2 Performance at a much much higher upscaling quality.

For those still in doubt, this is the difference that ML makes compared to hand crafted algorithms.

Or is it because DLSS uses dedicated cores while FSR 2 is stealing processing power from the main GPU cores? I'm probably wrong tho, as I basically understand nothing about this

Edit: btw what if Nvidia modifies FSR 2 to run on tensor cores? Is that even feasible, given how specialized tensor cores are?

You can use NvInspector to force SSAA in games.

Cool. I think FSR 2.0 vs DLSS vs native vs super-sampled is probably the best. Super-sampled will show you what your target is. Even if SSAA is running at 5 fps, just getting some frames to compare would be the "ground truth" or reference image for quality.
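A minimal sketch of what that comparison could look like for a single still frame, assuming you have a 200% render-scale capture (2x per axis) and an upscaled capture as grayscale pixel grids. The helper names are hypothetical, and a plain box filter plus PSNR is just one simple choice of reference and metric, not what any reviewer actually uses.

```python
import math


def box_downsample_2x(image):
    """Average each 2x2 block of a grayscale image given as a list of rows."""
    out = []
    for y in range(0, len(image) - 1, 2):
        row = []
        for x in range(0, len(image[y]) - 1, 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4.0)
        out.append(row)
    return out


def psnr(reference, candidate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    squared_error = 0.0
    count = 0
    for ref_row, cand_row in zip(reference, candidate):
        for r, c in zip(ref_row, cand_row):
            squared_error += (r - c) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)


if __name__ == "__main__":
    # Tiny made-up 4x4 "super-sampled" frame and a 2x2 "upscaler output".
    supersampled = [[10, 12, 200, 202],
                    [14, 16, 204, 206],
                    [50, 50, 90, 90],
                    [50, 50, 90, 90]]
    upscaled = [[13.0, 203.0], [52.0, 88.0]]
    reference = box_downsample_2x(supersampled)
    print(reference, psnr(reference, upscaled))
```

This only scores one still frame, so it says nothing about ghosting or shimmer in motion.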

On the RTX 2080, DLSS Quality still gives 10% more fps compared to FSR 2 Quality, and with higher quality of upscaling. DLSS Performance gives you 15% more fps compared to FSR 2 Performance at a much much higher upscaling quality.

For those still in doubt, this is the difference that ML makes compared to hand crafted algorithms.

I love how you just decide to ignore all of the other data including the frame-rates on Ampere GPUs and the 2060 data in that review where the difference with FSR in regards to performance is <5% to just pull out this one result with the 2080 and claim victory for AI as a whole.

It's a great laugh, not gonna lie.

btw what if Nvidia modifies FSR 2 to run on tensor cores?

We are already lucky that they don't modify it to run on a single CPU thread

davis.anthony

Veteran

On the RTX 2080, DLSS Quality still gives 10% more fps compared to FSR 2 Quality, and with higher quality of upscaling. DLSS Performance gives you 15% more fps compared to FSR 2 Performance at a much much higher upscaling quality.

For those still in doubt, this is the difference that ML makes compared to hand crafted algorithms.

You also get this extra bonus with DLSS too.

The infamous DLSS ghosting? I wonder which version of DLSS 2.3 was used

We are already lucky that they don't modify it to run on a single CPU thread

You're gonna give me PhysX nightmares

Reynaldo

Regular

You also get this extra bonus with DLSS too.

Maybe put the whole thing into context?

Cool. I think FSR 2.0 vs DLSS vs native vs super-sampled is probably the best. Super-sampled will show you what your target is. Even if SSAA is running at 5 fps, just getting some frames to compare would be the "ground truth" or reference image for quality.

Super-sampled isn’t the ideal target for motion artifacts though. In my experience 4xDSR doesn’t fully squash shader and temporal aliasing. Maybe 16xSSAA would do the trick.

DavidGraham

Veteran

I love how you just decide to ignore all of the other data including the frame-rates on Ampere GPUs and the 2060 data in that review where the difference with FSR in regards to performance is <5% to just pull out this one result with the 2080 and claim victory for AI as a whole.

RTX 2060 @1440p:

DLSS Q is 2% faster than FSR Q

DLSS P is 5% faster than FSR P

RTX 2080 @4K:

DLSS Q is 10% faster than FSR Q

DLSS P is 12% faster than FSR P

RTX 3080 @4K:

DLSS Q is 6% faster than FSR Q

DLSS P is 3% faster than FSR P
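For anyone checking these percentages against the review's charts, a small sketch of the arithmetic. The fps values in the example are hypothetical placeholders, not numbers from the review, and the function names are made up. Frame time is included because an upscaler's cost is roughly a fixed number of milliseconds per frame, so the same cost shows up as a bigger fps percentage at higher frame rates.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, in percent of B's frame rate."""
    return (fps_a / fps_b - 1.0) * 100.0


def frame_time_ms(fps):
    """Time spent per frame in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Hypothetical example values, NOT taken from the review.
    dlss_fps, fsr_fps = 66.0, 60.0
    print(round(percent_faster(dlss_fps, fsr_fps), 1), "% faster")
    # The same gap expressed as a per-frame cost difference.
    delta_ms = frame_time_ms(fsr_fps) - frame_time_ms(dlss_fps)
    print(round(delta_ms, 2), "ms per frame")
```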

FSR 2 performance looks like this:

Riddlewire

Regular

It works well (according to AMD) with dynamic resolution scaling.

Could other dynamic image alterations have a negative impact on the final upscaled image from FSR?

Variable Rate Shading, for instance. I know VRS should only be applied to insignificant portions of the frame, but if you're getting frame-to-frame differences in VRS application, could that cause a problem for FSR? Perhaps a flickering in a fairly static surface.
