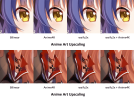

The image on the right is not clearly better. The amount of detail is the same. So what's the advantage?

Yes, you can upscale the vector image and it stays unchanged.

But that's not our problem. We try to reconstruct missing information, including adding detail. This 'missing' information is often present in older frames, which were rendered with the pixel grid offset by a subpixel amount. That is how temporal reconstruction works: it gathers high-frequency detail over time by combining multiple low-res images.
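To make the temporal part concrete, here is a toy 1D sketch (all names are hypothetical). It assumes a static scene with a single hard edge at x = 2.3, point sampling, and a known per-frame subpixel jitter; real temporal upscalers also need motion vectors, history rejection and proper reconstruction filters, none of which is modeled here. One frame alone only tells us the edge lies somewhere between pixels 2 and 3, while four frames jittered by quarter pixels narrow it down to a quarter pixel.

```python
def scene(x: float) -> float:
    """Hypothetical continuous scene: dark left of x = 2.3, bright from there on."""
    return 1.0 if x >= 2.3 else 0.0


def render_frame(offset: float, width: int) -> list[float]:
    """Point-sample the scene at each pixel, with the whole grid shifted by a subpixel jitter."""
    return [scene(i + offset) for i in range(width)]


def accumulate(frames: list[list[float]], offsets: list[float]) -> list[tuple[float, float]]:
    """Merge jittered frames into (position, value) samples sorted by position.

    Assumes a static scene, so no motion compensation is needed.
    """
    samples = []
    for frame, off in zip(frames, offsets):
        samples.extend((i + off, v) for i, v in enumerate(frame))
    return sorted(samples)


def edge_bracket(samples):
    """Return the two adjacent sample positions between which the value first changes."""
    for (x0, v0), (x1, v1) in zip(samples, samples[1:]):
        if v0 != v1:
            return (x0, x1)
    return None


if __name__ == "__main__":
    offsets = [k / 4 for k in range(4)]  # 0, 0.25, 0.5, 0.75 pixel jitter
    frames = [render_frame(o, 8) for o in offsets]
    print(edge_bracket(accumulate(frames[:1], offsets[:1])))  # (2.0, 3.0): one frame
    print(edge_bracket(accumulate(frames, offsets)))          # (2.25, 2.5): four frames
```

Each extra jittered frame adds samples between the previous ones, which is where the extra detail comes from.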

Additionally, ML methods can 'invent' new details they have learned from other, similar images.

What you have in mind is likely purely spatial upscaling, such as FSR 1.0.

Here, there is no temporal data from previous frames, and no new detail is invented.

Instead, we try to turn pixelated gradients into a sharp boundary, exactly like the Photoshop filter you posted.

And that's the point I think you're ignoring or don't know, so listen:

To find the sharp curve, we analyse the image and calculate things like gradients.

It works by relating the current pixel to its neighbors. The gradient is then a vector centered at the pixel, pointing in the direction in which brightness increases (or in which some color changes, etc.).
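A minimal sketch of "relating a pixel to its neighbors", using the Sobel operator as one common choice (the post doesn't say which stencil is used). It assumes a grayscale image stored as `img[row][col]`; for color you would run it per channel or on luminance. In image coordinates y grows downward, so a positive `gy` points down the image.

```python
import math


def sobel_gradient(img, x, y):
    """Gradient (gx, gy) at interior pixel (x, y) of a grayscale image img[row][col].

    gx compares the right column of the 3x3 neighborhood with the left one,
    gy compares the bottom row with the top one (weights 1, 2, 1).
    Dividing by 8 makes a ramp rising by 1 per pixel give a gradient of 1.
    """
    gx = ((img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
          - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1])) / 8.0
    gy = ((img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
          - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1])) / 8.0
    return gx, gy


if __name__ == "__main__":
    # Hypothetical 5x5 test image: dark on the left, bright from column 3 on.
    edge = [[1.0 if x >= 3 else 0.0 for x in range(5)] for _ in range(5)]
    gx, gy = sobel_gradient(edge, 2, 2)
    print("gradient:", (gx, gy), "magnitude:", math.hypot(gx, gy))
```

At the pixel next to the edge the vector points to the right, toward the brighter side; one pixel further left, where all neighbors are equal, it is zero.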

This vector is quantized in position, but not in direction: the direction can be any angle, with no quantization beyond floating-point precision.
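A small check of the claim that only the position is quantized: on a hypothetical linear ramp whose brightness increases along an arbitrary angle, a central-difference gradient taken at one pixel recovers that angle through `atan2`, with no snapping to 0, 45 or 90 degrees. The ramp is chosen because central differences are exact for linear functions; on real edges the direction estimate is only approximate.

```python
import math


def central_gradient(img, x, y):
    """Central-difference gradient at interior pixel (x, y) of img[row][col]."""
    gx = (img[y][x + 1] - img[y][x - 1]) / 2.0
    gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return gx, gy


def gradient_angle_deg(img, x, y):
    """Direction of the gradient in degrees (y axis pointing down, as in image rows)."""
    gx, gy = central_gradient(img, x, y)
    return math.degrees(math.atan2(gy, gx))


def ramp(size, angle_deg):
    """Hypothetical test image whose brightness rises linearly along angle_deg."""
    a = math.radians(angle_deg)
    return [[math.cos(a) * x + math.sin(a) * y for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    for angle in (17.3, 30.0, 61.7):
        print(angle, "->", gradient_angle_deg(ramp(5, angle), 2, 2))
```

The pixel position is fixed at (2, 2), yet the recovered direction matches each input angle up to floating-point rounding.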

If we want a vector at an arbitrary position, we interpolate the surrounding vectors at that position. We may use a 3x3 region of pixels and a cubic filter, which uses the same math as various splines.
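A sketch of sampling a per-pixel vector field between pixel centers, assuming the gradient components are stored as two separate grids and interpolated independently. The post mentions a 3x3 region with a cubic filter; this sketch swaps in a Catmull-Rom cubic, which is a common choice but needs 4x4 taps per sample. Borders are handled by clamping, which is also an assumption.

```python
import math


def catmull_rom(p0, p1, p2, p3, t):
    """Cubic Catmull-Rom interpolation between p1 (t = 0) and p2 (t = 1)."""
    return 0.5 * (2.0 * p1
                  + (-p0 + p2) * t
                  + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t * t
                  + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t * t * t)


def sample_bicubic(grid, x, y):
    """Sample grid[row][col] at fractional (x, y) from a 4x4 neighborhood, clamped at borders."""
    h, w = len(grid), len(grid[0])
    ix, iy = math.floor(x), math.floor(y)

    def at(cx, cy):
        return grid[min(max(cy, 0), h - 1)][min(max(cx, 0), w - 1)]

    # Interpolate four rows horizontally, then the four results vertically.
    rows = [catmull_rom(*(at(ix + dx, iy + dy) for dx in (-1, 0, 1, 2)), x - ix)
            for dy in (-1, 0, 1, 2)]
    return catmull_rom(*rows, y - iy)


def sample_vector(gx_grid, gy_grid, x, y):
    """Interpolate a per-pixel vector field (stored as two grids) at an arbitrary position."""
    return sample_bicubic(gx_grid, x, y), sample_bicubic(gy_grid, x, y)


if __name__ == "__main__":
    gx_grid = [[2.0 * x + 3.0 * y for x in range(6)] for y in range(6)]
    gy_grid = [[1.0] * 6 for _ in range(6)]
    print(sample_vector(gx_grid, gy_grid, 2.25, 2.5))
```

Catmull-Rom reproduces linear functions exactly, so sampling the linear test field between pixels returns 2 * 2.25 + 3 * 2.5 = 12 (up to rounding), which makes a handy sanity check.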

Once we can do this, we can follow the gradient vector, for example until its magnitude becomes zero. We end up at a subpixel position that isn't quantized.
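A sketch of this search on a hypothetical test image containing a soft bright line at 30 degrees. To keep the field conservative, so that plain gradient ascent is guaranteed to settle, it differentiates the Catmull-Rom-interpolated image numerically instead of interpolating precomputed gradient vectors; that substitution, the step size and the stopping tolerance are all assumptions. Because the target here is a bright line, "magnitude zero" lands on the line's center; for a plain step edge the usual target is the maximum of the gradient magnitude instead.

```python
import math


def catmull_rom(p0, p1, p2, p3, t):
    """Cubic Catmull-Rom interpolation between p1 (t = 0) and p2 (t = 1)."""
    return 0.5 * (2.0 * p1 + (-p0 + p2) * t
                  + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t * t
                  + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t * t * t)


def sample(img, x, y):
    """Bicubic (Catmull-Rom) sample of img[row][col] at a fractional position, clamped at borders."""
    h, w = len(img), len(img[0])
    ix, iy = math.floor(x), math.floor(y)

    def at(cx, cy):
        return img[min(max(cy, 0), h - 1)][min(max(cx, 0), w - 1)]

    rows = [catmull_rom(*(at(ix + dx, iy + dy) for dx in (-1, 0, 1, 2)), x - ix)
            for dy in (-1, 0, 1, 2)]
    return catmull_rom(*rows, y - iy)


def gradient_at(img, x, y, eps=1e-4):
    """Gradient of the interpolated image at an arbitrary point, by central differences."""
    gx = (sample(img, x + eps, y) - sample(img, x - eps, y)) / (2.0 * eps)
    gy = (sample(img, x, y + eps) - sample(img, x, y - eps)) / (2.0 * eps)
    return gx, gy


def climb_to_ridge(img, x, y, step=1.0, tol=1e-6, max_iter=500):
    """Follow the gradient uphill until its magnitude is ~0; returns a subpixel position."""
    for _ in range(max_iter):
        gx, gy = gradient_at(img, x, y)
        if math.hypot(gx, gy) < tol:
            break
        x, y = x + step * gx, y + step * gy
    return x, y


def ridge_image(size, angle_deg, offset, sigma):
    """Hypothetical test image: a soft bright line where cos(a)*x + sin(a)*y == offset."""
    a = math.radians(angle_deg)
    return [[math.exp(-((math.cos(a) * x + math.sin(a) * y - offset) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    img = ridge_image(12, 30.0, 6.3, 2.0)
    px, py = climb_to_ridge(img, 5.0, 5.0)
    a = math.radians(30.0)
    print("end point:", px, py)
    print("distance to the true line:", abs(math.cos(a) * px + math.sin(a) * py - 6.3))
```

Starting about half a pixel off the line at pixel (5, 5), the search ends within a fraction of a pixel of it, at a non-integer position.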

We have just found one point of our curve. We repeat the search from another nearby position and connect the points found to form a spline.
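A sketch of connecting the found points, assuming they already arrive ordered along the curve (ordering them is its own problem). It uses a uniform Catmull-Rom spline, which passes through every point, and pads the ends by repeating the first and last point; that is one simple choice, not the only one. The spline is sampled into a polyline here; each Catmull-Rom segment could also be converted to a cubic Bézier.

```python
def catmull_rom_point(p0, p1, p2, p3, t):
    """Point at parameter t in [0, 1] on the uniform Catmull-Rom segment from p1 to p2."""
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t * t
               + (-a + 3 * b - 3 * c + d) * t * t * t)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def spline_through(points, samples_per_segment=8):
    """Sample a Catmull-Rom spline passing through all points (assumed ordered along the curve)."""
    if len(points) < 2:
        return [tuple(p) for p in points]
    # Repeat the end points so the first and last segments have the neighbors they need.
    padded = [points[0]] + list(points) + [points[-1]]
    curve = []
    for i in range(1, len(padded) - 2):
        p0, p1, p2, p3 = padded[i - 1], padded[i], padded[i + 1], padded[i + 2]
        for s in range(samples_per_segment):
            curve.append(catmull_rom_point(p0, p1, p2, p3, s / samples_per_segment))
    curve.append(tuple(points[-1]))
    return curve


if __name__ == "__main__":
    # Hypothetical subpixel curve points, e.g. from repeated gradient searches.
    found = [(1.2, 3.7), (2.1, 3.1), (3.0, 2.8), (4.2, 2.9)]
    for p in spline_through(found, samples_per_segment=4):
        print(p)
```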

So this is how your idea works: this is how to convert quantized image data to vector data, which can be polygons or Bézier patches, filled with solid colors or gradients.

But what you should realize is this: we can use exactly the same methods and get exactly the same final result at the target resolution, without ever dealing with vector data.

I can do this directly on image data, have done so, and will keep doing so, simply because it's much faster and easier.
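A minimal sketch of getting a hard edge directly at the target resolution, with no polygon or spline step: upsample with a cubic filter and threshold at the mid level, so each output pixel is classified by which side of the subpixel iso-contour it falls on. This is a deliberately crude stand-in chosen for brevity; a real upscaler would keep some antialiasing rather than binarize. The test image is hypothetical: an edge at x = 3.3 source pixels, box-filtered so pixel 3 has a coverage of 0.7.

```python
import math


def catmull_rom(p0, p1, p2, p3, t):
    """Cubic Catmull-Rom interpolation between p1 (t = 0) and p2 (t = 1)."""
    return 0.5 * (2.0 * p1 + (-p0 + p2) * t
                  + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t * t
                  + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t * t * t)


def sample_bicubic(img, x, y):
    """Sample img[row][col] at fractional (x, y), pixel centers at integers, clamped at borders."""
    h, w = len(img), len(img[0])
    ix, iy = math.floor(x), math.floor(y)

    def at(cx, cy):
        return img[min(max(cy, 0), h - 1)][min(max(cx, 0), w - 1)]

    rows = [catmull_rom(*(at(ix + dx, iy + dy) for dx in (-1, 0, 1, 2)), x - ix)
            for dy in (-1, 0, 1, 2)]
    return catmull_rom(*rows, y - iy)


def upscale_hard_edges(img, factor, level=0.5):
    """Upscale by an integer factor, then snap each output pixel to 0 or 1 at `level`."""
    h, w = len(img), len(img[0])
    out = []
    for j in range(h * factor):
        y = (j + 0.5) / factor - 0.5  # output pixel center in source pixel-center coordinates
        row = []
        for i in range(w * factor):
            x = (i + 0.5) / factor - 0.5
            row.append(1.0 if sample_bicubic(img, x, y) >= level else 0.0)
        out.append(row)
    return out


if __name__ == "__main__":
    low = [[0.0, 0.0, 0.0, 0.7, 1.0, 1.0, 1.0, 1.0] for _ in range(4)]
    high = upscale_hard_edges(low, 4)
    print(high[0].index(1.0))  # 13
```

With a factor of 4, the first bright output pixel is index 13, consistent with the true edge at 3.3 × 4 = 13.2 target pixels.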

So if you understand this and agree, what's the point of using vector data anyway, when there is no need for it?

If you understand and don't agree, where am I wrong?

If you don't understand, reading papers gets you nowhere unless you're a developer yourself who can implement them. You cannot invent solutions by combining random papers that sound like good ideas based on gut feeling.