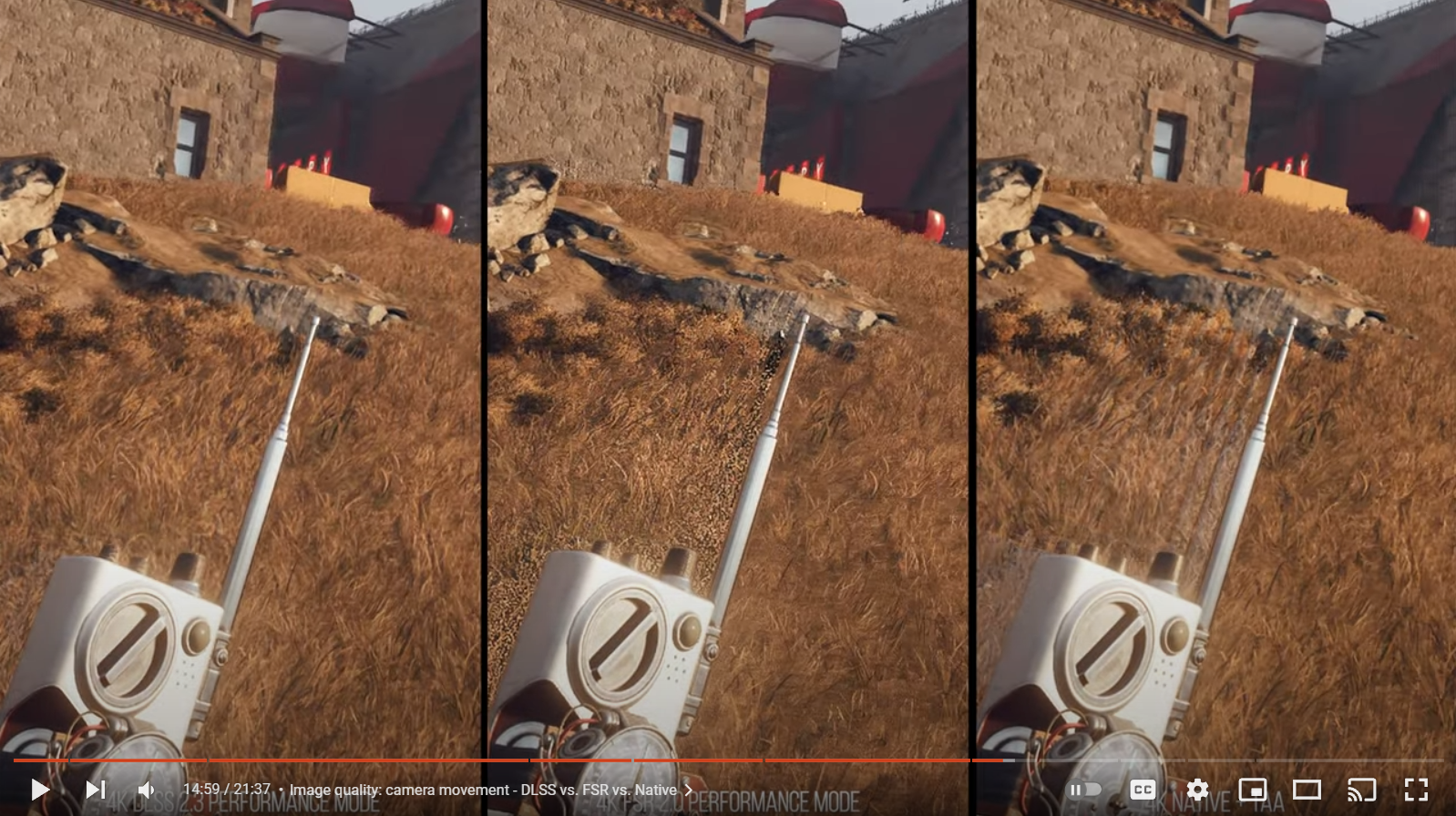

Dave ain't no nVidiot, he's just posting up facts. He ain't saying FSR 2.0 sucks, just that nVidia's solution is faster, which it is. (Gonna call me an nVidia fanboy?)

EDITED BITS: Does anyone else find it amusing that the unreadable text looks clearer through DLSS 2.0 even though it's still illegible?
