I don't know, Alex mentioned this on that Reddit post linked above.

Do you really think that's the case? What's the chance that MOST people actually MAKING games, and working on a daily basis with the people who created the engine, would not know about it? If it's not widely used, there are quite probably downsides to it.


Hardware Unboxed seemed a little more upbeat about it, but I think the big thing is that not every game runs on Unreal. Unreal's TAAU might be best in class, but that does nothing for games that don't use Unreal. And plenty don't. Take a look at May's NPD numbers: there are only 3 games that run on Unreal (MK11, Days Gone and Biomutant, unless I missed one) in the top 20.

Very underwhelming results (worse than Unreal Engine's TAA upsample); maybe quality will improve in the future.

I've wanted a tiny laptop with midrange graphics and a 120Hz+ screen forever. They make 13" gaming laptops, but they are... I don't know. You pay more for them, and the GPU can't really push the screen's resolution. I mean, some of those have 4K screens, and that's neat, but I would take a 10" laptop that was 720p or 900p and be fine with it, especially if I could get 2060-ish level performance and have DLSS.

Hmmmm, never thought of that tbh, but now that you mention it, imagine the benefits. Laptops not heating up to 90ºC anymore, while having somewhat decent image quality to play with and the possibility to enjoy games which were almost unplayable.

As for desktop computers, and from personal experience, the thing I care about most in a future GPU (which I am going to need in a year or two) is power efficiency at 1440p, along with a robust framerate. So I am torn between nVidia, AMD and Intel, and I have time to decide.

What I care about most is the electricity bill and the temperature of the components. That's why I have always preferred the most efficient hardware over brute-force hardware. In that sense, oddly enough, AMD has the best GPUs as of now, imho.

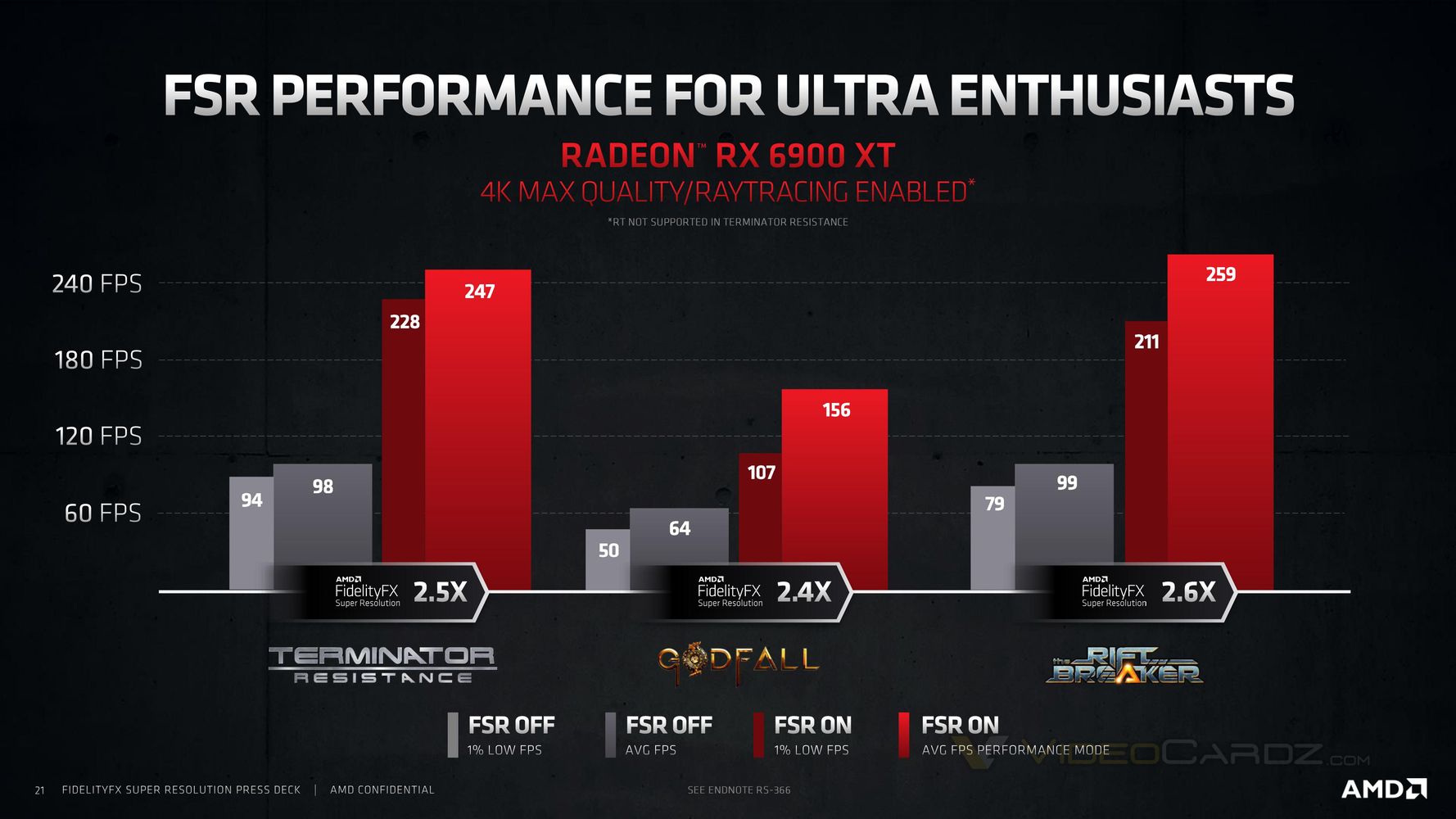

You ignore all but the Performance mode, the one mode AMD actually says you shouldn't even use.

For marketing Performance mode is good enough but not for gamers?!:

https://videocardz.com/newz/amd-launches-fidelityfx-super-resolution-upscaling-technology

Yep.

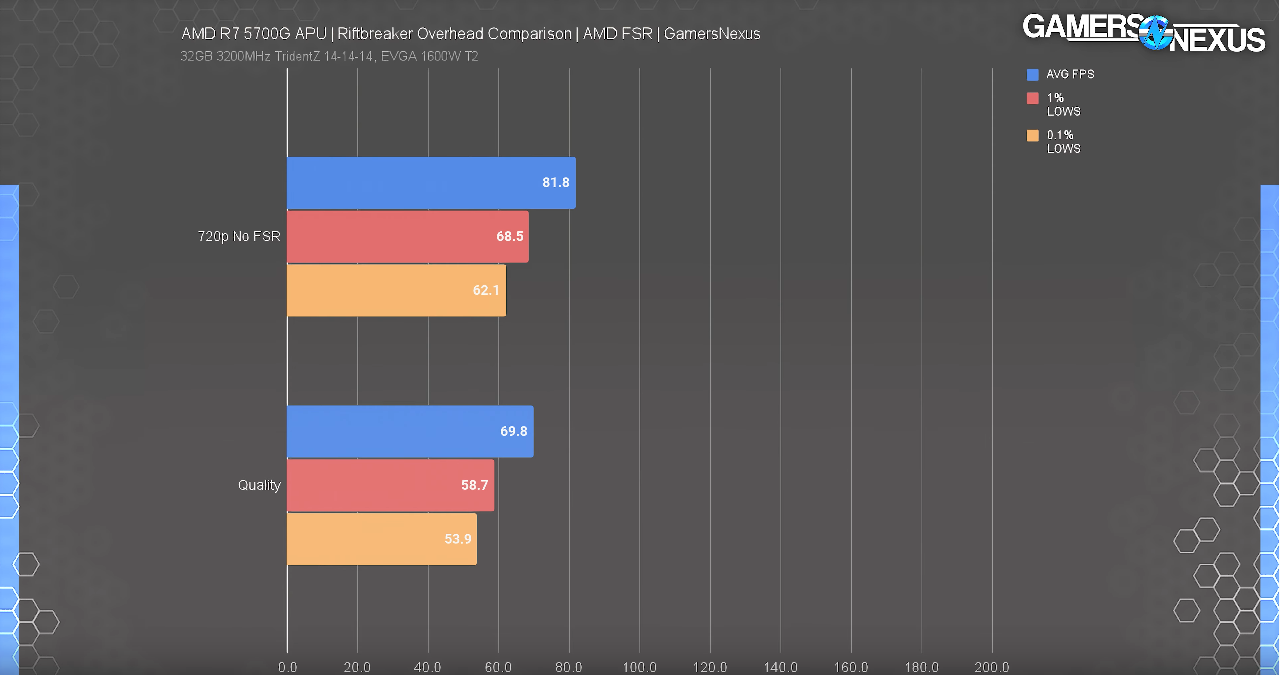

If this is showing what I think it is*, then that's exactly where I see the real benefit of FSR. It's not a competitor for high-end tech like DLSS 2.x running on equally high-end graphics hardware, but a means to boost fps for gamers with older hardware or even integrated graphics, at an acceptable loss of image quality. And looking at it from that perspective, it's really a worthwhile addition to the arsenal of tools.

From GamersNexus video:

Granted, that's on a 5700G, so it would be interesting to see the cost on proper desktop GPUs.

Watched HUB's video and yeah it's bad again. All they are saying there goes against every other review and image I've seen so far.

I think AMD should have marketed it as that more clearly, instead of leaving it open to interpretation whether this is their DLSS competitor, which in my opinion it clearly isn't.

*the quality results being 720p input -> 1080p output, i.e. what you can expect with a 5700G on your FHD display.
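The footnote's 720p → 1080p case corresponds to FSR's Quality mode. A small Python sketch for working out the input resolution of each mode, assuming AMD's launch per-axis scale factors for FSR 1.0 (Ultra Quality 1.3x, Quality 1.5x, Balanced 1.7x, Performance 2.0x); the function name is made up for illustration, and rounding to the nearest pixel is an assumption (games may round differently):

```python
# Per-axis scale factors for the four FSR 1.0 quality modes, as published by AMD
# at launch (assumed to be what the slides in this thread refer to).
FSR_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def fsr_input_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Return the render (input) resolution FSR upscales from for a given output."""
    factor = FSR_SCALE[mode]
    # Both axes are divided by the same factor; nearest-pixel rounding is an assumption.
    return round(out_w / factor), round(out_h / factor)


if __name__ == "__main__":
    # List the input resolution of every mode for a 1080p display.
    for mode in FSR_SCALE:
        w, h = fsr_input_resolution(1920, 1080, mode)
        print(f"{mode:>13}: {w}x{h} -> 1920x1080")
```

Since the factor is per axis, Quality mode renders only 1/1.5² ≈ 44% of the output pixels, which is where most of the fps gain comes from.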


You nailed it there, I think.

DegustatoR

Legend

It shows the cost of FSR (overhead) compared to native rendering at the resolution FSR upscales from. Here it's ~15%. But you do get better IQ with FSR, of course. And this should be tested on modern dGPUs too, to get a more complete picture.
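A "~15%" cost can mean either 15% fewer fps or 15% more frame time, and the two are not the same: a 15% fps drop is about 17.6% more time per frame. A minimal Python sketch that turns two measurements (native at FSR's input resolution vs. the same input plus FSR) into milliseconds per frame; the function name and the 100/85 fps figures are hypothetical, not taken from the graph:

```python
def fsr_overhead(native_input_fps: float, fsr_fps: float) -> tuple[float, float]:
    """Compare native rendering at FSR's input resolution with that input plus FSR.

    Returns (extra milliseconds per frame, fraction of fps lost).
    """
    native_ms = 1000.0 / native_input_fps  # frame time without the upscale pass
    fsr_ms = 1000.0 / fsr_fps              # frame time with the upscale pass
    extra_ms = fsr_ms - native_ms
    fps_loss = 1.0 - fsr_fps / native_input_fps
    return extra_ms, fps_loss


if __name__ == "__main__":
    # Hypothetical example: 720p native at 100 fps vs. 720p -> 1080p FSR at 85 fps.
    extra_ms, loss = fsr_overhead(100.0, 85.0)
    print(f"{extra_ms:.2f} ms extra per frame, {loss:.0%} fewer fps")
```

Working in milliseconds makes results comparable across GPUs: a fixed-cost pass shows up as roughly the same number of ms regardless of how fast the rest of the frame renders.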

The problem with that is that the loss of IQ from FSR is more severe at lower resolutions, meaning that the gains from FSR at the low end (on older h/w) are more limited. It is better than just running a game at a lower resolution, but not by much if the game has a TAAU option.

Also, how many games have issues running on even 10-series GPUs these days? It's usually games which use RT that have such performance problems, and these won't run on older / low-end GPUs anyway, making FSR's promise of wide compatibility a bit moot. Maybe this will change in the future as games become heavier even without RT.

snc

Veteran

UE is one of the most popular engines, and other engines have their own reconstruction techniques too (Insomniac's engine has one of the best), so yeah, the quality must improve for it to be of any use. For now it's like DLSS 1.0: a good path, but still work to do.

DegustatoR

Legend

Most people who actually care about gaming do. The fact that it's the 1060 which leads the Steam h/w survey proves this without any doubt.

1050 is also a 10-series GPU. And I am not even mentioning the 1030, for obvious reasons.

But on a 1050 or 1050 Ti, there are a lot of games that could use a few more fps.

Not everyone plays with a high-end card costing $300 and up.

Some of the newer laptops come with AI engines/SoCs. I would be interested to see whether there is sufficient power in those for an AI upscaler; even if it's minimal, it should outperform this first version of FSR.

One thing I wish Alex had mentioned was how games look compared to the native resolution they are being upscaled from, rather than native 4K. Is the improvement noticeable with FSR upscaling from 1612p versus native 1612p? What are the performance trade-offs of that, etc.?

I didn't go in expecting FSR to be as good as native 4K, but I was expecting comparisons of plain 1440p against FSR upscaled from 1440p, etc.

I wondered that. For example, how does 1440p/FSR look compared to 1440p with and without other forms of AA/upscalers? And how fast does it run?

If you use a 1440p base resolution + FSR, you get a higher output resolution. If you render native 1440p and output 1440p, then you can't compare the images without downsampling the 4K FSR output back to 1440p.

If you decide to measure 1440p ->4K FSR, vs 1440p outputting at 4K, then you've chosen bilinear upsampling for the native image, so in theory you could also choose TAA Upsampling for instance.
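For reference, the "bilinear" baseline here just means each output pixel interpolates the four nearest input pixels, with no sharpening or edge reconstruction. A minimal pure-Python sketch on a grayscale image, assuming pixel-centre alignment and clamp-to-edge (a real GPU path would use a texture sampler instead; the function name is illustrative):

```python
def bilinear_upscale(img: list[list[float]], out_w: int, out_h: int) -> list[list[float]]:
    """Upscale a grayscale image (a list of rows of floats) with bilinear filtering."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map the output pixel centre back into input pixel coordinates, clamped to the edge.
        sy = (y + 0.5) * in_h / out_h - 0.5
        sy = min(max(sy, 0.0), in_h - 1.0)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = (x + 0.5) * in_w / out_w - 0.5
            sx = min(max(sx, 0.0), in_w - 1.0)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Because it only blends existing samples, a bilinear-upscaled 1440p image is strictly blurrier than the source at 4K, which is why the choice of upscaler for the "native" side changes the outcome of such a comparison.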

I'm not sure a proper comparison can be done.

You'll lose the frame rate advantage of comparing 1440p vs 1440p FSR.

Technically, according to these graphs, to use Ultra Quality, both consoles will likely incur about a 1ms penalty for processing.
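To put "about 1 ms" in perspective: a fixed per-frame cost takes a larger share of the budget the higher the target frame rate. A small Python sketch of that arithmetic; the 1 ms value is the post's reading of the graphs, not a measurement, and both helper names are hypothetical:

```python
def fps_with_fixed_cost(base_fps: float, extra_ms: float) -> float:
    """Frame rate after adding a fixed per-frame cost, e.g. an upscaling pass."""
    return 1000.0 / (1000.0 / base_fps + extra_ms)


def render_budget_ms(target_fps: float, extra_ms: float) -> float:
    """Time left per frame for everything else once the fixed pass is reserved."""
    return 1000.0 / target_fps - extra_ms


if __name__ == "__main__":
    for fps in (30.0, 60.0, 120.0):
        print(
            f"{fps:.0f} fps target: {render_budget_ms(fps, 1.0):.2f} ms left to render, "
            f"or {fps_with_fixed_cost(fps, 1.0):.1f} fps if nothing else changes"
        )
```

At a 60 fps target, that leaves roughly 15.7 ms instead of 16.7 ms for rendering; at 30 fps the same 1 ms is only about 3% of the frame.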


Frenetic Pony

Veteran

Well, it looks fine, technically slots right into where it should (after TAA but before post-processing) and of course doesn't look as good as, say, Epic's new TAA upscaler. But there are probably other devs that will benefit from it; e.g. I can see it being used in Elden Ring.

The neat thing is, one could hypothetically use it with temporal upscaling as well. Apply it to current frame information before resolving with the history buffer. Even better upscaling, especially for new areas with no history to resolve from!
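The idea can be sketched in a few lines. This is a hypothetical per-pixel resolve, not how FSR or any shipping engine actually works: reprojection, motion vectors and history clamping are all left out, and `alpha` is an arbitrary blend weight chosen for illustration:

```python
from typing import Optional, Sequence


def resolve(
    history: Sequence[Optional[float]],
    current_upscaled: Sequence[float],
    alpha: float = 0.1,
) -> list[float]:
    """Hypothetical temporal resolve: blend a spatially upscaled current frame
    (e.g. the output of an FSR-like pass) into already-reprojected history.

    A history entry of None marks a pixel with no usable history (a newly
    revealed area); such pixels take the spatially upscaled value directly.
    """
    resolved = []
    for h, c in zip(history, current_upscaled):
        if h is None:
            resolved.append(c)  # nothing to accumulate yet, rely on the spatial upscale
        else:
            # Exponential moving average, the usual core of a TAA-style resolve.
            resolved.append((1.0 - alpha) * h + alpha * c)
    return resolved
```

The payoff is in the `None` branch: without a spatial upscale there, a disoccluded pixel would start from a plain low-resolution sample and take several frames to converge.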

But otherwise, it's an fps boost, just like DLSS is. It's odd that so many "tech" sites miss that (and missed it for DLSS too): extra performance is the entire point. How much image quality you're trading off for that performance boost matters, that's the equation, but it's still about the performance boost. From that perspective, well, it's fine.



Deleted member 2197

Guest

Wouldn't a better option for those with entry-level GPUs simply be lowering quality from Ultra to Very High, or from Very High to Medium? Many using cards in this category would prefer clean, blur- and artifact-free visuals... I don't think ingrained console-type choices regarding fps gains vs. visual quality hold much water for PC gamers (at least in my case, since I'm fine running at 30-40 fps as long as the visuals are fine).


But I get that by just lowering the resolution. FSR doesn't reconstruct details or create new information. Buying a used 1080p display for gaming is more useful than using FSR to upscale from 1080p -> 2160p.

Those are the slides I was most interested in! Thx for sharing them, especially the 1440p numbers.


The future seems very interesting for this tech. However, the main downside imho would be if this technology only worked in new games.

If it could be forced through the GPU drivers to support our favourite games of all time (Divinity: Original Sin 2, Skyrim, etc.), then I think there is no contest at all; it'd be the best upscaling technique to date, hands down.

Hardware Unboxed review. @London Geezer they measure the image quality at 1440p too, which is what I also wanted to watch.

edit: according to them Terminator Resistance looks better with FSR on at ultra quality 1440p than native 1440p.

edit2: They also compare FSR across different native resolutions, including 1080p and 1440p, and mention GPUs such as the RX 570 4GB, which they benchmarked with it as well. In addition, they compare FSR with typical upscaling techniques (it wins every time), with FidelityFX CAS (FSR wins again), and even with the best sharpening filters in Adobe Premiere; surprisingly, they also compare it to DLSS 1.0 (FSR wins again), etc. And they even compare it with DLSS 2.0 and say it's very difficult: it's not black or white, it's more complex than that.

They explain why DLSS 2.0 is not necessarily better than FSR as of now: it isn't in some areas, while it is in others.


digitalwanderer

Legend

I liked Gamers Nexus' video on it and tend to agree with their conclusions. Looks neat, but I need a couple of games that use it before I check it out.

Frenetic Pony

Veteran


Then buy a 1080p display. No one is forcing you to use it, same as with DLSS or any upscaler. Shrug
