Projections indicate that 2nm wafers will cost substantially more than 3nm wafers. Taiwan Semiconductor Manufacturing Company (TSMC) currently produces 3nm wafers at a cost of about $20,000 each. 2nm wafers are expected to cost roughly 50% more, at approximately $30,000 each. This price jump has substantial implications for customers such as Apple, which may struggle to maintain profitability on the high-end products built on the 2nm process.

...

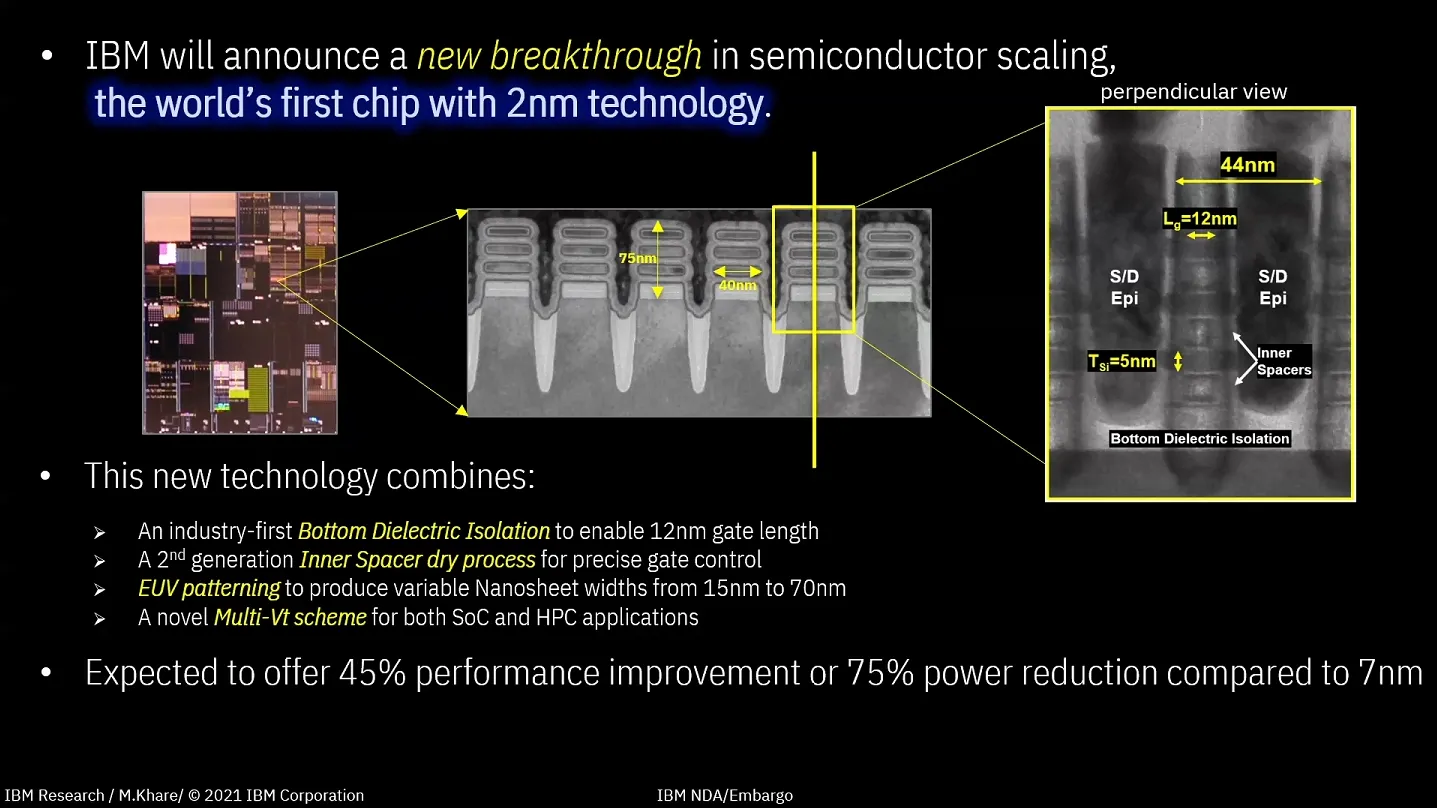

IBS estimates that a 2-nanometer fab capable of producing about 50,000 wafers per month would cost around $28 billion, compared with about $20 billion for a 3-nanometer fab. The increase is largely attributed to the additional extreme ultraviolet (EUV) lithography equipment needed to sustain 2-nanometer output of 50,000 wafers per month. As a result, the production cost of each wafer, and therefore of each chip, rises significantly. Apple, a key customer for leading-edge processes, is likely to be affected by these cost changes. At present, Apple is the only customer using TSMC's latest N3B (3nm) process in mass production, for the chips in its smartphones and PCs.
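One way to see how fab cost feeds into wafer cost is straight-line depreciation. The minimal sketch below takes the IBS capex figures and 50,000-wafer monthly capacity from the paragraph above; the five-year depreciation period and full utilization are hypothetical assumptions chosen for illustration, not IBS figures, and real wafer prices also include materials, labor, and margin.

```python
# Capex figures reported by IBS (from the text)
FAB_CAPEX_USD = {"3nm": 20e9, "2nm": 28e9}
MONTHLY_CAPACITY_WAFERS = 50_000

# Hypothetical assumptions for illustration only (not IBS figures)
DEPRECIATION_YEARS = 5
UTILIZATION = 1.0  # fraction of monthly capacity actually used


def depreciation_per_wafer(capex_usd: float) -> float:
    """Spread fab capex evenly over every wafer produced during the depreciation period."""
    wafers_over_life = MONTHLY_CAPACITY_WAFERS * UTILIZATION * 12 * DEPRECIATION_YEARS
    return capex_usd / wafers_over_life


# Relative increase in fab capex from 3nm to 2nm
capex_increase = FAB_CAPEX_USD["2nm"] / FAB_CAPEX_USD["3nm"] - 1
# Depreciation component of each wafer's cost, per node
per_wafer = {node: depreciation_per_wafer(c) for node, c in FAB_CAPEX_USD.items()}

print(f"Fab capex increase: {capex_increase:.0%}")
for node, cost in per_wafer.items():
    print(f"{node}: ~${cost:,.0f} of depreciation per wafer")
```

Under these assumptions the depreciation component grows by the same 40% as the capex. Any remaining gap up to the projected 50% wafer price increase would come from factors this sketch leaves out.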

...

IBS projects that when TSMC launches its 2nm process, between 2025 and 2026, each 12-inch (300 mm) wafer used by Apple will cost around $30,000, compared with an estimated $20,000 for a 3nm wafer. However, the technology news outlet Tom's Hardware has noted that IBS's chip cost estimates may be overstated: IBS puts the cost of each of Apple's 3nm chips at about $50, but a calculation factoring in an 85% yield rate puts the figure closer to $40.
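A per-chip cost follows from dividing the wafer cost by the number of good dies on the wafer. The minimal sketch below uses a common first-order dies-per-wafer approximation (wafer area divided by die area, minus an edge-loss term) and the article's wafer prices and 85% yield. The 100 mm² die area is a hypothetical value, not the size of any specific Apple chip, and applying the same 85% yield to 2nm is also an assumption, since early yields on a new node are usually lower.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate: wafer area / die area, minus dies lost at the round edge."""
    radius = wafer_diameter_mm / 2
    area_term = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(area_term - edge_loss)


def cost_per_good_die(wafer_cost_usd: float, wafer_diameter_mm: float,
                      die_area_mm2: float, yield_rate: float) -> float:
    """Wafer cost divided by the number of dies that pass after yield loss."""
    good_dies = math.floor(gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2) * yield_rate)
    return wafer_cost_usd / good_dies


WAFER_DIAMETER_MM = 300   # 12-inch wafer
DIE_AREA_MM2 = 100.0      # hypothetical die size, for illustration only
YIELD_RATE = 0.85         # yield figure cited by Tom's Hardware (assumed for both nodes)

for node, wafer_cost in [("3nm", 20_000), ("2nm", 30_000)]:
    cost = cost_per_good_die(wafer_cost, WAFER_DIAMETER_MM, DIE_AREA_MM2, YIELD_RATE)
    print(f"{node}: ${cost:.2f} per good die")
```

With die area and yield held fixed, the per-chip cost scales directly with the wafer price, so the 50% wafer increase carries straight through to each chip; a larger die or a lower 2nm yield would push the per-chip figure higher still.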

...

TSMC is at a critical point as competitors such as Samsung advance in the sector. Samsung plans to begin 2nm wafer production; its pricing is not yet competitive, but that is likely to change. Meanwhile, the rapid development of artificial intelligence (AI) is leading companies such as NVIDIA and AMD to consider 3nm and more advanced fabrication processes for their future products.

These advanced processes will enable more powerful, albeit more expensive, AI chips. NVIDIA, which currently manufactures its AI chips on a 5nm process, already faces significant production costs. In conclusion, the price gap between 2nm and 3nm wafers is set to reshape the semiconductor industry, forcing companies to plan carefully around cost management and the evolving demands of technologies such as AI.