arandomguy

Regular

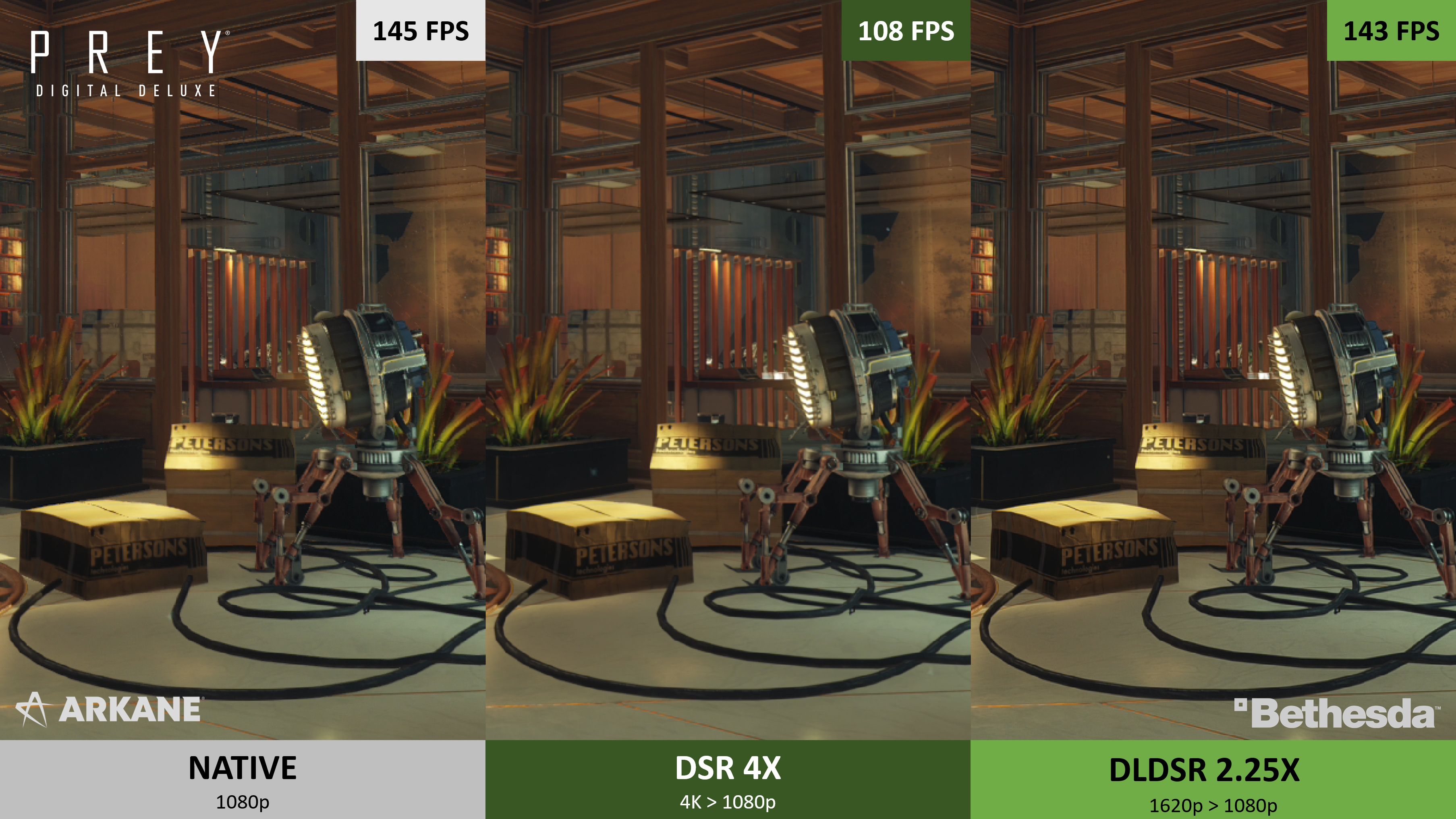

I haven't used DSR in quite a while, but from what I remember, the impression when it first launched was that anything other than factor-of-4 downscaling (e.g. 4K -> 1080p) was less than ideal in terms of the end results, especially without a lot of manual adjustment of the smoothing setting. This of course meant there was an inherent usability issue, in that the performance requirement jump to achieve 4x would be quite big. From the preview screenshot, it seems like this is focused on the in-between steps, with 1.78x and 2.25x scaling using DL.
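For anyone wondering what those factors mean in actual render resolution, here is a rough sketch. It assumes the DSR factor is a multiplier on total pixel count (so the per-axis scale is its square root), which is my understanding of how the factors are labeled. The function name is just made up for illustration, and I'm treating "1.78x" as a rounded 16/9.

```python
import math

def dsr_render_resolution(width, height, factor):
    """Render resolution for a DSR factor.

    Assumption: the factor multiplies the total pixel count,
    so each axis is scaled by sqrt(factor).
    """
    axis_scale = math.sqrt(factor)
    return round(width * axis_scale), round(height * axis_scale)

# Factors for a 1080p display; 16/9 stands in for the "1.78x" label.
for label, factor in (("1.78x", 16 / 9), ("2.25x", 2.25), ("4x", 4.0)):
    print(label, dsr_render_resolution(1920, 1080, factor))
```

Under that assumption, 4x on a 1080p display means rendering four times the pixels, while 2.25x is only a 1.5x increase per axis, which is why the in-between steps are so much cheaper.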

What would be interesting is to compare DLDSR on a lower-resolution display against DLSS on a higher-resolution display. For instance, 1080p DLDSR vs. 1440p DLSS/FSR/XeSS, or 1440p DLDSR vs. 4K DLSS/FSR/XeSS.
