DavidGraham

Veteran

> An interesting case is the new Hitman game: they dropped DX12 although it was faster for the previous game (on older GPUs it was twice as fast!). Likely the cost to maintain both was too high to be worth it for them.

FIFA 19 dropped it as well, as did several Xbox exclusives, such as Quantum Break, Sea of Thieves, Sunset Overdrive, etc.

> I wouldn't say DX12 is a complete waste. World of Warcraft added a DX12 renderer in its new expansion, and a patch after launch improved performance even further. The initial implementation was slower on Nvidia hardware for whatever reason, so they defaulted to the old DX11 renderer, but AMD and Intel defaulted to DX12. With the newest version all default to DX12.

WoW is a CPU-bound game; it's almost single-threaded at this point. And from the link you posted, it doesn't benefit one iota from DX12 by itself. Only when Blizzard activates multithreading does the game gain a significant boost to performance. But multithreading is only available in DX12, as Blizzard didn't bother bringing it to DX11.

In fact, when DX12 was first introduced to the game (July 2018), NVIDIA running DX11 was 20% faster than AMD running DX12.

https://www.computerbase.de/2018-07...agramm-wow-directx-11-vs-directx-12-1920-1080

https://www.extremetech.com/gaming/273923-benchmarking-world-of-warcrafts-directx-12-support

It took significant time to bring multithreading to DX12 (December 2018); once that happened, all GPUs saw improved fps. NVIDIA even touted a 25% uplift on a 2080 Ti at 1080p.

https://www.nvidia.com/en-us/geforce/news/world-of-warcraft-directx-12-performance-update/

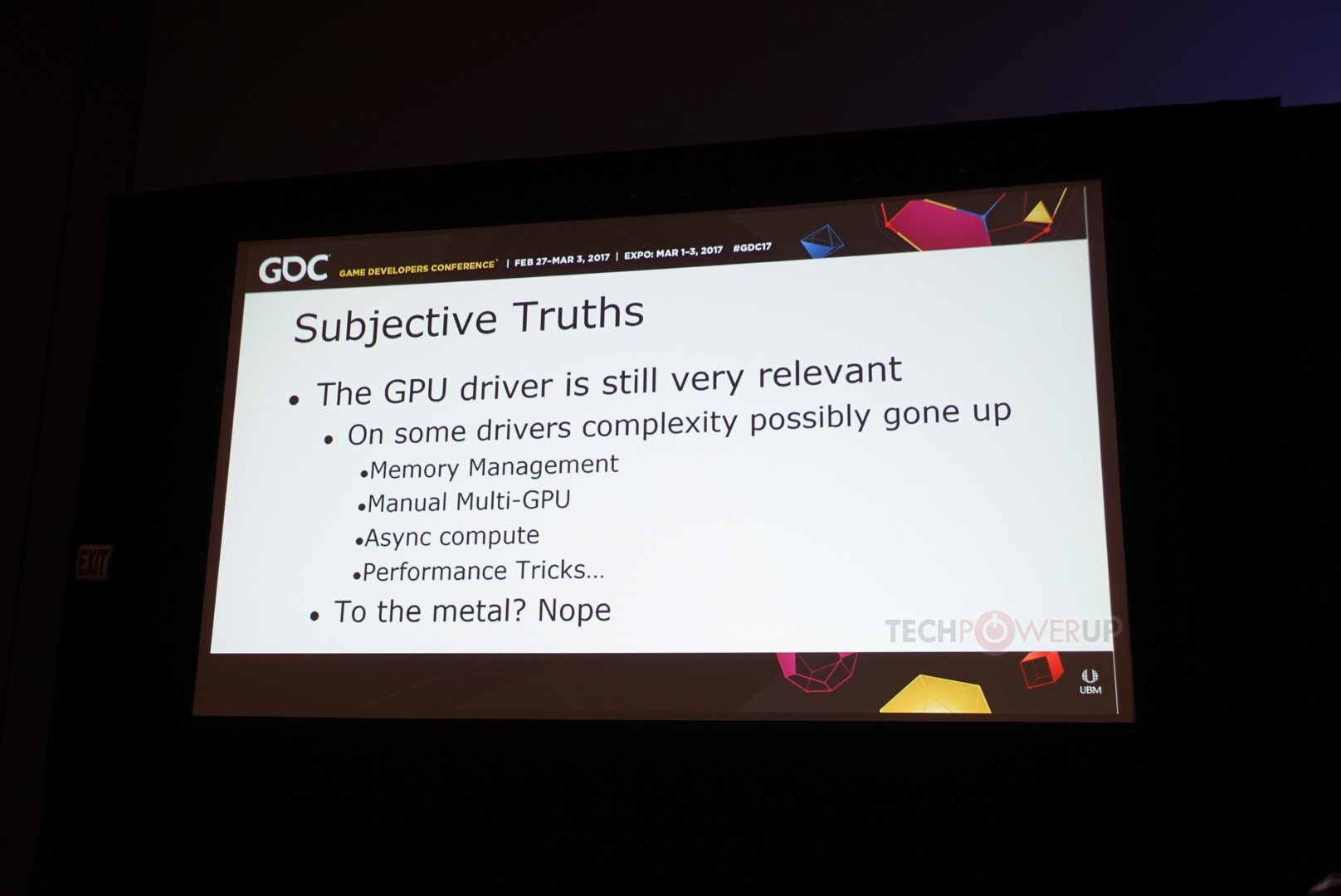

> I think the idea is that DX12/Vulkan was a break away from DX9-11 and whatever legacy items were in there; from that perspective there is benefit. A single API whose behaviours should be properly defined and supported, no weird hacks to get an intended result.

But the weird hacks continued: NVIDIA delivered consistent driver updates that improved DX12 and Vulkan performance; we've seen that in Hitman and Doom. These lower-level APIs still rely on the driver for a great deal of their work.