hesido

Regular

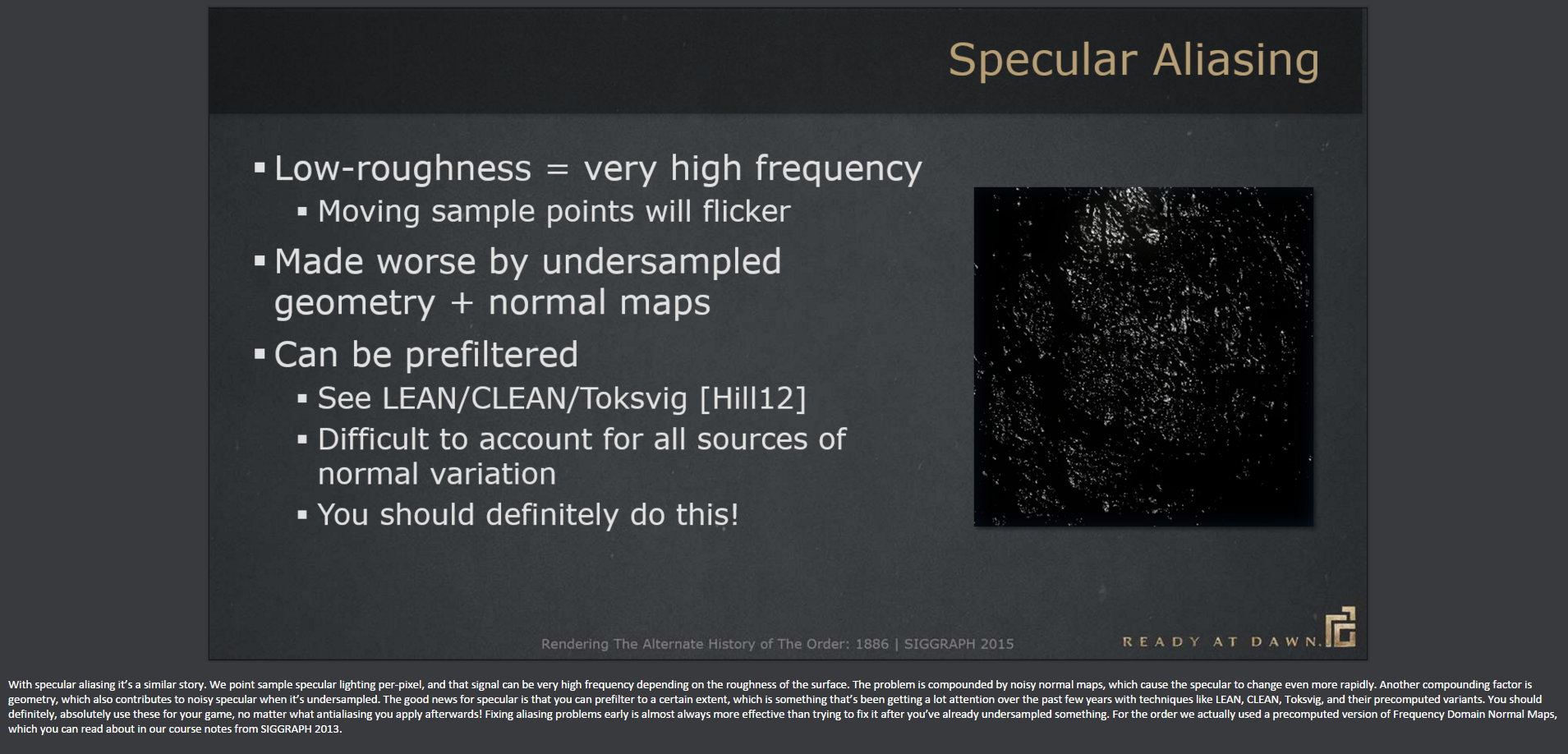

Shader aliasing is a subset of aliasing that we began to see when pixel shaders were introduced. Until then, aliasing was almost always seen at the edges of geometry (which MSAA does a very nice job of removing, and which might be called "standard" aliasing). Shader aliasing happens when the shader has high-frequency output (contrast that varies within less than a pixel or between neighbouring pixels) but is not sampled often enough per pixel. MSAA cannot deal with this, because it only takes extra coverage samples along geometry edges and still runs the shader once per pixel. The solution would be to either take more shader samples per pixel, or use a shader that does not produce high-frequency output.

Dumb question, but how does one tell Shader Aliasing from standard Aliasing?
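To make the undersampling point a bit more concrete, here is a rough Python sketch (not real shader code). The `shade` function is a made-up stand-in for a pixel shader that outputs hard black/white stripes at 1.25 cycles per pixel, and `render_pixel` is a hypothetical helper that averages a given number of evenly spaced samples across one pixel. Both names and the stripe frequency are assumptions chosen purely for illustration.

```python
import math

STRIPES_PER_PIXEL = 1.25  # assumed frequency: finer than one stripe pair per pixel


def shade(x):
    """Hypothetical 'pixel shader': hard-edged stripes along x (1.0 = white, 0.0 = black)."""
    phase = (x * STRIPES_PER_PIXEL) % 1.0
    return 1.0 if phase < 0.5 else 0.0


def render_pixel(pixel_index, samples):
    """Average `samples` evenly spaced shader evaluations across one pixel."""
    total = 0.0
    for i in range(samples):
        # Sample positions sit at the centres of equal sub-intervals of the pixel.
        x = pixel_index + (i + 0.5) / samples
        total += shade(x)
    return total / samples


if __name__ == "__main__":
    one_sample = [render_pixel(p, 1) for p in range(8)]
    many_samples = [render_pixel(p, 16) for p in range(8)]
    print("1 sample per pixel:  ", one_sample)
    print("16 samples per pixel:", many_samples)
```

With one sample per pixel, the stripes turn into a false, much coarser banding that is not in the original signal. With 16 samples per pixel, every pixel settles near mid-grey, which is roughly what the fine stripes should average out to. Geometry edges play no part here, which is why extra coverage samples alone would not help.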

Please correct me without hurting my feelings if I'm wrong.