The Substance apps (Painter and Designer) are designed to integrate pretty well into modern game asset pipelines (and also work reasonably well with CG animation / VFX stuff). I'm still not familiar enough with the software, but here are some things I've found remarkable.

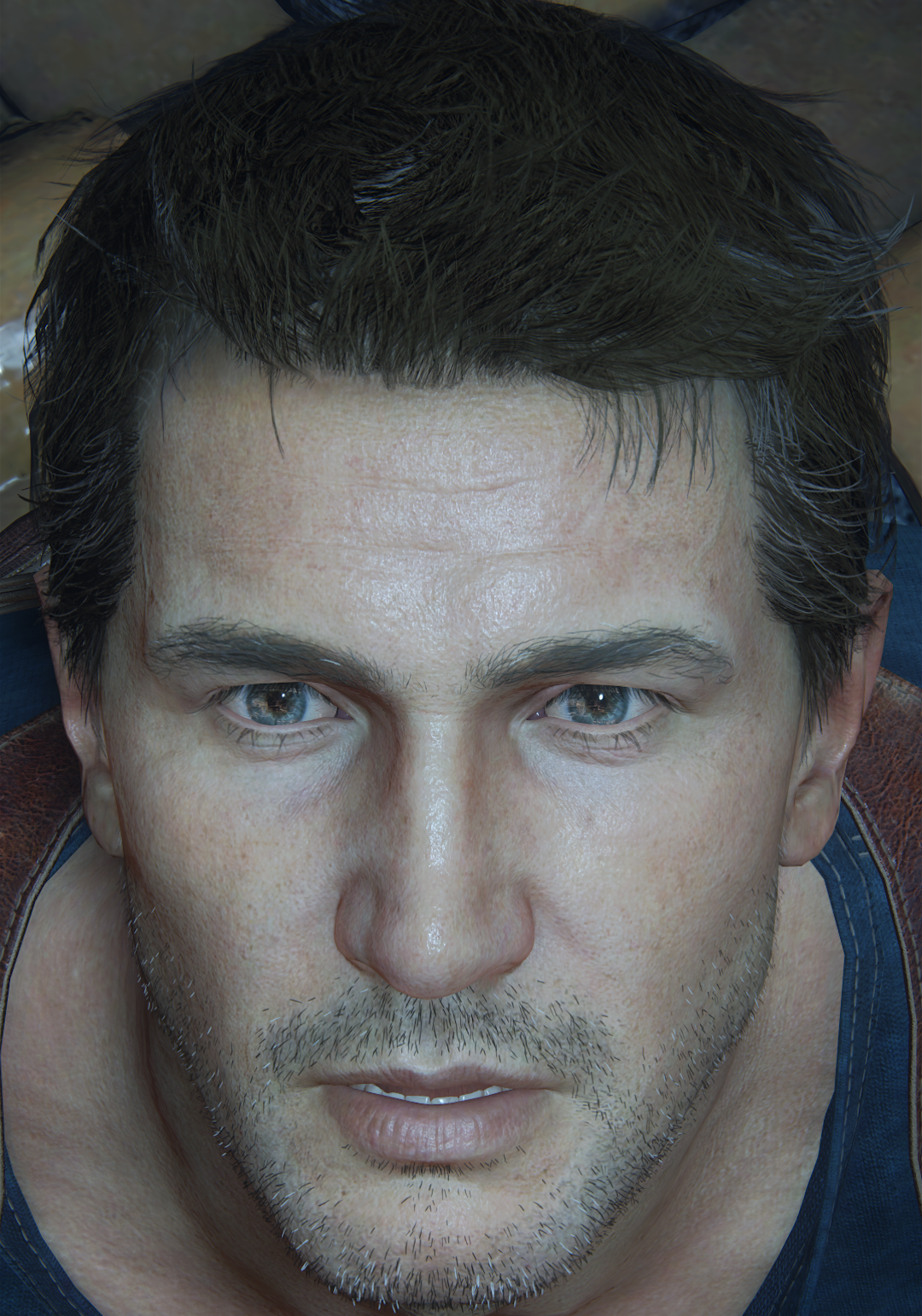

Most assets begin as a high poly model or ZBrush sculpt which defines the forms and shapes. Substance then generates a lot of different 'procedural' maps, which are based on this geometry; I'm not sure about all of them, but here are some examples:

- Edge map: areas of the model where the surface normal changes sharply - this can guide the intensity of wear and tear, as parts that stick out tend to get more abuse

- Cavity map: sort of an inverse to the edge map, marking the crevices and such (kinda similar to AO) - this can guide the accumulation of dirt, moss etc.

- I think there's a map that's based on the vertical orientation of the surfaces - this can guide stuff like the way corrosion from rain etc. flows downwards, or maybe how sunlight fades out the colors

- Normal maps are usually generated by the artist and not Substance, and they're probably also an important element of the Edge and Cavity maps

- Material ID maps, where the artist paints on the ZBrush sculpt in some bright, easy to separate colors which are usually a combination of the RGB components (so primary, secondary etc) - these can be associated with various PBR materials automatically
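To make the material ID idea a bit more concrete, here's a rough sketch in plain Python. It is not how Substance does it internally; the ID colors, the material names and the `split_id_map` helper are all made up for illustration. Each pixel is snapped to the nearest ID color, which gives one 0/1 mask per material.

```python
# Hypothetical ID colors and material names, chosen only for illustration.
ID_TO_MATERIAL = {
    (255, 0, 0): "worn_steel",
    (0, 255, 0): "leather",
    (0, 0, 255): "painted_wood",
}

def nearest_id(pixel, id_colors):
    """Return the ID color closest to `pixel` (squared RGB distance)."""
    return min(id_colors, key=lambda c: sum((a - b) ** 2 for a, b in zip(pixel, c)))

def split_id_map(id_map, id_to_material):
    """Turn an RGB ID map (rows of RGB tuples) into one 0/1 mask per material."""
    colors = list(id_to_material)
    masks = {
        name: [[0] * len(row) for row in id_map]
        for name in id_to_material.values()
    }
    for y, row in enumerate(id_map):
        for x, pixel in enumerate(row):
            material = id_to_material[nearest_id(pixel, colors)]
            masks[material][y][x] = 1
    return masks

# A tiny 2x2 "ID map"; two pixels are slightly off the pure colors,
# as they might be after filtering or compression.
id_map = [
    [(255, 0, 0), (250, 5, 3)],
    [(0, 255, 0), (2, 1, 240)],
]
masks = split_id_map(id_map, ID_TO_MATERIAL)
```

Snapping to the nearest color is one reason bright, well separated ID colors are handy: slightly off pixels still land on the right material.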

Edit: looking at their site, some of the map names they're listing are Curvature, Thickness, Position - I guess it's easy to see how these are really helpful in generating proper results.
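As a toy illustration of what a curvature-style map encodes, here's a sketch that splits a small height field into an 'edge' mask and a 'cavity' mask with a discrete Laplacian. This is a simplified stand-in I'm assuming for the sake of the example: the real maps are baked from the mesh itself, and `curvature_masks` is a hypothetical helper, not anything from Substance.

```python
def curvature_masks(height):
    """Split a 2D height field into (edge, cavity) masks via a discrete Laplacian.

    A pixel higher than its neighbors counts as convex (edge);
    a pixel lower than its neighbors counts as concave (cavity).
    """
    h, w = len(height), len(height[0])
    edge = [[0.0] * w for _ in range(h)]
    cavity = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clamp neighbor lookups at the border of the grid.
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, h - 1)][x]
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, w - 1)]
            convexity = 4 * height[y][x] - (up + down + left + right)
            edge[y][x] = max(convexity, 0.0)
            cavity[y][x] = max(-convexity, 0.0)
    return edge, cavity

# A single raised bump in the middle of a flat 3x3 patch.
bump = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]
edge, cavity = curvature_masks(bump)
```

The bump itself lights up in the edge mask, and the pixels directly next to it show up in the cavity mask, which matches the 'sort of an inverse' relationship between the two maps.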

Then there are a lot of different types of the 'classic' procedural noise maps which an artist can use to create various patterns with.

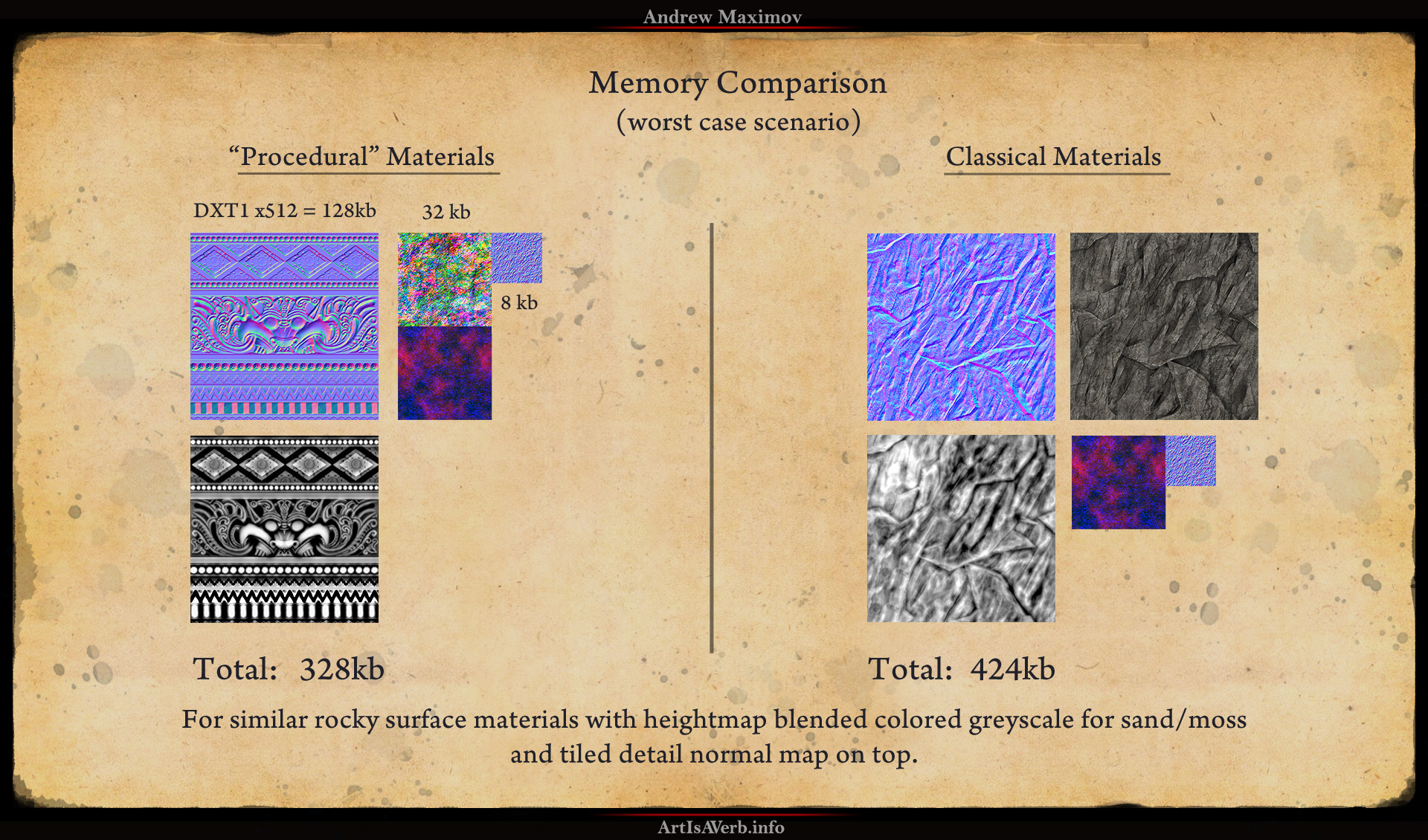

The actual workflow is that every generated map, procedural noise, and (usually) tiled bitmap texture is represented as a node in the shading network. Each node can be used as an actual texture or as a mask to blend between textures, and they can all be plugged into the various channels (diffuse, specular etc) of the PBR shaders.
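The 'mask to blend between textures' part boils down to a per-channel linear blend. Here's a minimal sketch for a single pixel; the channel values and the `blend_materials` helper are assumptions for illustration, not Substance's API.

```python
def lerp(a, b, t):
    """Linear interpolation: t=0 returns a, t=1 returns b."""
    return a * (1.0 - t) + b * t

def blend_materials(base, overlay, mask):
    """Blend two materials channel by channel; mask=0 keeps base, mask=1 shows overlay."""
    return {channel: lerp(base[channel], overlay[channel], mask) for channel in base}

# Hypothetical single-pixel channel values for two materials.
painted = {"diffuse": 0.8, "specular": 0.04, "roughness": 0.4}
bare_metal = {"diffuse": 0.5, "specular": 0.9, "roughness": 0.2}

# A mask value of 0.5 (e.g. from an edge map) gives a half-worn surface.
half_worn = blend_materials(painted, bare_metal, 0.5)
```

In a real network the mask would be a whole image (an edge map, a noise, or a product of several), and the same blend would run for every pixel.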

At the starting phase of a project, the artists will build a library of these shaders, stuff like various kinds of metal with various levels and types of wear and tear, or leather, or wood, and so on.

Then, these shader templates can be thrown onto all the objects coming out of the modeling/sculpting departments. The various maps generated from the model will create texture details that are driven by the object itself, instead of generic templates - so they look a lot more correct and believable. The material ID masks also make it easier to deal with more complex objects, which increases productivity a lot.
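Here's a toy sketch of why one template gives different results on different objects. The `worn_edges_template` function, its parameters and the two tiny edge maps are all hypothetical; the point is only that the template stays the same while the wear follows each object's own edge map.

```python
import random

def worn_edges_template(edge_map, noise_seed=0, wear=0.5):
    """Hypothetical template: wear mask = edge intensity broken up by noise.

    The same template (same seed, same wear amount) produces a different
    mask for every object, because the edge map comes from the object.
    """
    rng = random.Random(noise_seed)
    return [
        [min(1.0, e * wear * (0.5 + rng.random())) for e in row]
        for row in edge_map
    ]

# Made-up 2x3 edge maps for two different objects.
crate_edges = [[1.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
barrel_edges = [[0.0, 0.6, 0.0], [0.0, 0.6, 0.0]]

crate_wear = worn_edges_template(crate_edges)
barrel_wear = worn_edges_template(barrel_edges)
```

Flat areas (edge value 0) get no wear at all, and edges get wear that is broken up by the noise so it doesn't look uniform.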

And of course it's always possible to have an artist do a manual pass on any procedural or generated maps, even the noises, to fine tune the final results for hero assets.

Substance will then generate the final set of maps using the templates, procedural maps and hand painted stuff; and because the shading network and all components are still all there, it's also very fast and easy to iterate on any or all of the components to polish or tweak the results.

Also, Substance has presets to generate output that fits the requirements of most modern offline renderers - which is the part we're most interested in.

I'd also like to add that none of the above is really that new; almost everyone working in 3D asset creation has used some of these principles. The big step forward was to combine all the approaches into a powerful app with great ease of use and automation.

So basically, Substance is a procedural texture creation tool; but a great part of that procedural stuff is heavily based on, and actually generated from, the individual models, so the resulting textures tend to have a much more unique and realistic look.