UC4: Best looking gameplay? *SPOILS*

- Thread starter RenegadeRocks

- Tags

- uncharted 4

- Status

- Not open for further replies.

I agree the setpieces are extremely complex and very interesting in Uncharted 4. Even if they are by and large scripted, I find them highly impressive as well; there is a lot of continuous and sometimes very rapid change in the environments in those kinds of scenes.

Unfortunately, there are no buzzwords for such incredible accomplishments... apparently, as long as you can't say GI, AO, SSR, it's not impressive.

The explosion of the grenade is reflected in the ground via SSR, similar to Killzone Shadow Fall.

The other extra sparks are not, though. But it is still a nice effect.

Some of those sparks do reflect, but not all, perhaps to save resources.

I remember Second Son, where some GPU particles had collision enabled, but just a few (most fell through the floor).

rockaman

Regular

> Some of those sparks do reflect, but not all, perhaps to save resources.
>
> I remember Second Son, where some GPU particles had collision enabled, but just a few (most fell through the floor).

Yes, interesting comparison too. It has been a really long time since I played Infamous SS... I have not finished it yet, but I do remember lots of SSR in that game too, as well as nice particles. I assume that with the smoke powers being so prominent, it makes a lot of sense to have them be directly interactive.

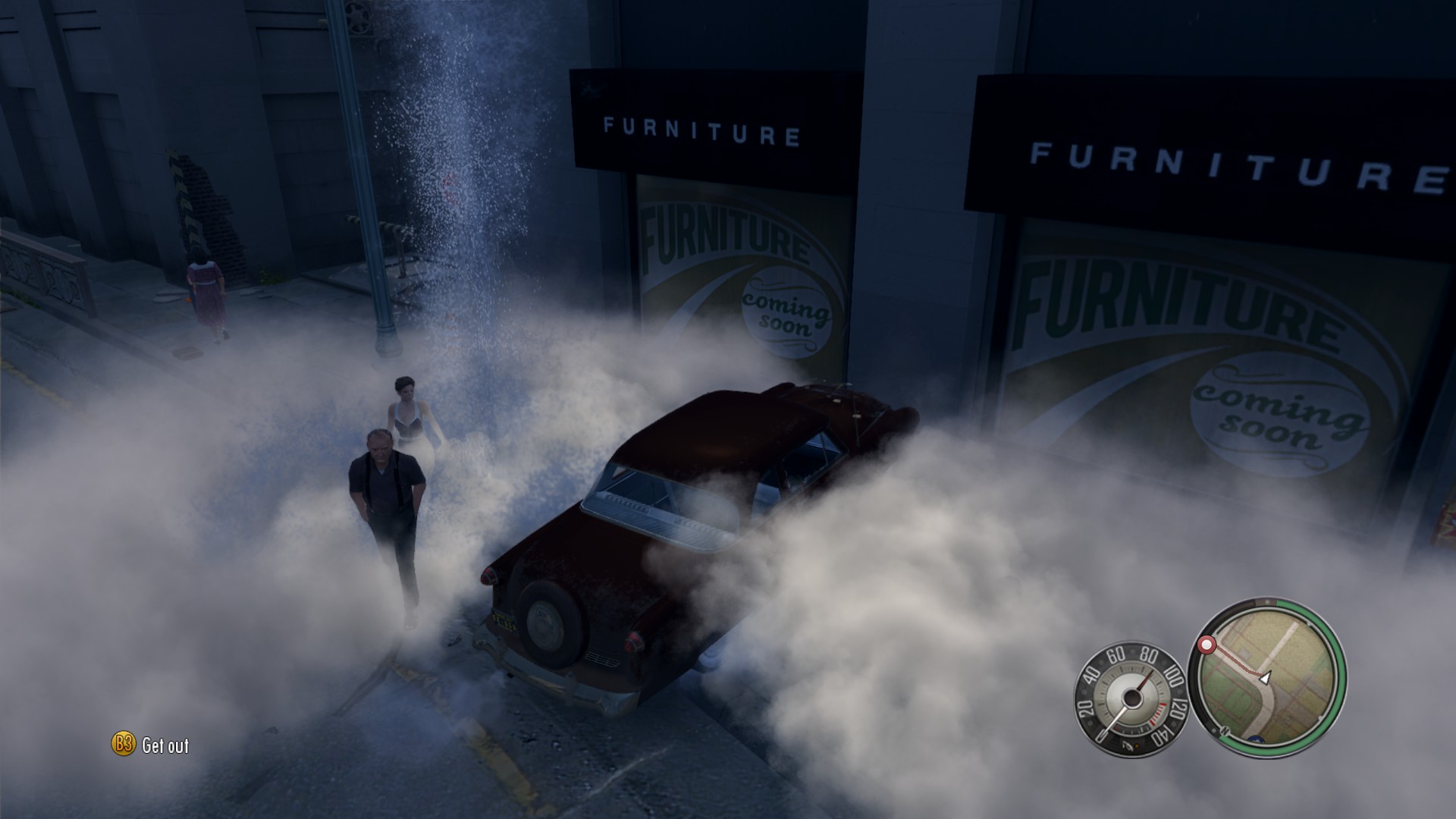

Probably a bit redundant citing Mafia 2 again, but if you have PhysX enabled, I believe all the particles, or at least the larger smoke ones, also interact with game geometry, and even characters. I should have some pictures of this.

It's probably hard to tell from just a screenshot, but the people walking and the car driving into the smoke actually push the particles away. It's pretty neat for a 2010 title, and it's also nice that it runs much better on current hardware ^^

> Some of those sparks do reflect, but not all, perhaps to save resources.
>
> I remember Second Son, where some GPU particles had collision enabled, but just a few (most fell through the floor).

Honestly, I don't see any reflection, but even if you are right, it's quite unimpressive visually, because it means so few particles are reflected that I can't even see them.

Moreover, I suppose those gifs are from the PC version?

> Someone correct me if I'm wrong, but I don't think realtime AA works on a per-object basis. I'd imagine AA performance is independent of scene complexity and more dependent on resolution, since it's done in screen-space.

It's complicated. Generally, temporal reprojection, resolve, and spatial stuff (like SMAA 1x) are themselves done in screen-space, but the information that these things have to work with and the quality of the results are heavily dependent on the scene makeup and, in some cases, on things that are done per-object.

For instance, if you want reprojection to be accurate in motion and work for moving objects, you need some way of creating and storing good motion data, which means per-object velocity calculations that get stored in screen-space for use by the TAA (and MB and whatnot). By contrast, the TAA in Halo Reach just rejects pixels where it thinks motion is occurring, so it doesn't need precise motion results; it just needs to know whether motion is occurring. But, the results are also not as high-quality, since the game has no explicit AA in motion (the classical tactic of hoping that the jag won't be as noticeable in motion, especially with motion blur; the obvious failure case being ultra-slow panning, where you have neither motion nor AA to cover for the raw render).
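To make the difference between the two approaches concrete, here's a minimal sketch in Python on a 1D scanline. Everything in it is an assumption for illustration: the function names, the integer per-pixel `velocity` buffer, the boolean `moving` mask and the `alpha` blend weight are made up, and this is not how Reach or any other shipping engine actually implements its TAA.

```python
def taa_with_velocity(current, history, velocity, alpha=0.1):
    """Blend each pixel with the history sample it came from last frame."""
    out = []
    for x, colour in enumerate(current):
        prev_x = x - velocity[x]  # follow the motion vector back one frame
        if 0 <= prev_x < len(history):
            out.append(alpha * colour + (1 - alpha) * history[prev_x])
        else:
            out.append(colour)  # history is off-screen, so use the raw render
    return out


def taa_with_rejection(current, history, moving, alpha=0.1):
    """Reuse history only where no motion is flagged."""
    out = []
    for x, colour in enumerate(current):
        if moving[x]:
            out.append(colour)  # no AA in motion: the raw render shows through
        else:
            out.append(alpha * colour + (1 - alpha) * history[x])
    return out
```

The first variant needs a trustworthy `velocity` value for every pixel, which is where the per-object work comes in. The second only needs a yes/no answer, but every pixel flagged as moving loses its accumulated history.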

Scene makeup also strongly affects results, for a few reasons. One, high-contrast regions of the screen with lots of high-frequency detail are where AA is needed the most, but they're also a challenging case for deciding whether a reprojected sample is valid or not. Two, depth discontinuities also create challenges for deciding whether a reprojected sample is valid (hence why you have cases like TO1886 where the TAA is mostly intended for shader aliasing and MSAA is being used to clean up high-frequency geometry), and they create disocclusion areas where TAA simply has nothing to work with.
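As a rough illustration of the "is this reprojected sample valid?" decision, here's a minimal sketch that rejects history across a depth discontinuity. The depth comparison, the `tolerance` value and both function names are assumptions picked for the example; real implementations combine several heuristics, and this isn't taken from any particular game.

```python
def history_is_valid(current_depth, history_depth, tolerance=0.05):
    """Accept history only if it sits at roughly the same depth as the current pixel."""
    return abs(current_depth - history_depth) <= tolerance * current_depth


def resolve_pixel(current_colour, current_depth, history_colour, history_depth, alpha=0.1):
    """Blend with history when it is usable, otherwise fall back to the raw render."""
    if history_colour is None:
        return current_colour  # disocclusion: there is no history at all
    if not history_is_valid(current_depth, history_depth):
        return current_colour  # history belongs to a different surface
    return alpha * current_colour + (1 - alpha) * history_colour
```

A pixel that was hidden behind something last frame ends up in one of the two fallback branches, which is the "nothing to work with" case.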

Both of the above paragraphs also obviously introduce motion itself as an element of scene complexity. Even camera movement (or perhaps most predominantly camera movement) is extremely important in terms of the kinds of crap you run into. And this matters for graphics in general, more or less. For instance, it's REALLY DIFFICULT to hold the camera in FFXIII stable when you're holding it sideways from a character walking in a straight line; this makes perfect sense given that fast parallax would cause the huge amount of nearby detail implemented as skybox to stand out more. The terrain is just rough enough in UC4's Madagascar that I was rarely able to move the jeep fast without moderate angular camera movement, which is great because it means that the noisy MB is breaking up middle-frequency shenanigans like LOD transitions in the grass (which at the contrasts and densities being used is a challenging thing to hide).

> Some of those sparks do reflect, but not all, perhaps to save resources.

They might be getting rendered in different ways. One of the challenges with SSR is that it relies on the depth buffer, and transparencies don't like depth buffers.

The typical quirk would be that if a transparency is in front of a wall in screen-space, naive SSR would reflect it as if it were a texture painted on the wall.
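Here's a toy version of that quirk, as a sketch only. It marches a reflected ray up a single screen column and treats the first depth-buffer crossing as the hit. The column layout, `draw_transparent`, `ssr_march` and all the numbers are assumptions invented for the example.

```python
def draw_transparent(colour_buffer, depth_buffer, row, colour, particle_depth):
    """Draw a transparency: depth-tested against the scene, but with no depth write."""
    if particle_depth < depth_buffer[row]:
        colour_buffer[row] = colour  # stands in for blending; depth_buffer is untouched


def ssr_march(start_row, start_depth, depth_step, depth_buffer, colour_buffer, max_steps=16):
    """Naive SSR: step the ray up the column until it passes behind the depth buffer."""
    row, ray_depth = start_row, start_depth
    for _ in range(max_steps):
        row -= 1
        ray_depth += depth_step
        if row < 0:
            return None  # ray left the screen, nothing to reflect
        if ray_depth >= depth_buffer[row]:
            return colour_buffer[row]  # whatever colour is stored at the hit pixel
    return None


# Rows 0-4 are a wall at depth 10; row 5 is the reflective floor at depth 3.
depth_buffer = [10.0] * 5 + [3.0]
colour_buffer = ["wall"] * 5 + ["floor"]

before = ssr_march(5, 3.0, 2.0, depth_buffer, colour_buffer)

# A spark floats at depth 6, well in front of the wall, and covers row 1 on screen.
draw_transparent(colour_buffer, depth_buffer, 1, "spark", 6.0)
after = ssr_march(5, 3.0, 2.0, depth_buffer, colour_buffer)
```

In this setup the ray only crosses the depth buffer at the wall (depth 10), yet `after` comes back as the spark's colour, because the spark exists in the colour buffer at that pixel and the depth buffer knows nothing about it.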

edit: Kind of like this:

> I remember Second Son, where some GPU particles had collision enabled, but just a few (most fell through the floor).

Oh sixth-gen games, why are you still so awesome...

rockaman

Regular

> Oh sixth-gen games, why are you still so awesome...

Still my favourite Halo game! Great PC version, and with OpenSauce 4.0 you can have nice SMAA and FOV adjustments.

I haven't uninstalled it for 8+ years now (just guessing, it might be longer than that XD) and still play it today ^^

Also really nice explanation of TAA details, reading now, brain hurting

> Great PC version

It's a great PC version in that its included functionality and moddability are decent enough, but visually it's a poor port. Unfortunately it's also been used as the basis of every version since, without fixes.

rockaman

Regular

> It's a great PC version in that its included functionality and moddability are decent enough, but visually it's a poor port. Unfortunately it's also been used as the basis of every version since, without fixes.

Ah, I didn't know that. I don't own the original Halo; it's something I want to buy when I get a 360 again.

I do remember though... it was very intense on GPUs at the time. I don't remember the first GPU I was playing it on, but it had a lot of trouble running it smoothly; even my ATI 9800 XT from a very long time ago didn't like it very much.

> They might be getting rendered in different ways. One of the challenges with SSR is that it relies on the depth buffer, and transparencies don't like depth buffers.
>
> The typical quirk would be that if a transparency is in front of a wall in screen-space, naive SSR would reflect it as if it were a texture painted on the wall.
>
> edit: Kind of like this:
>
> Oh sixth-gen games, why are you still so awesome...

These are not GPU particles. I know that every CPU particle in Crysis 1 could collide with both static and moving objects. But Second Son used screen-space GPU particles to save resources.

And if it is so easy to find reflections with GPU particles, please show me that in games like Uncharted 4. I have trouble finding them in many games.

rockaman

Regular

I don't believe Uncharted 4 employs SSR very much, if at all. I didn't notice it personally while playing at least, unless there were reflections that were SSR that I did not recognize.

It's definitely something that other games depend on more, or utilize more frequently, like DOOM, Infamous SS, DriveClub, etc. They probably spent more resources on other effects in UC4.

> I do remember though... it was very intense on GPUs at the time, I don't know the first GPU I was playing it on, but it had a lot of trouble running it smoothly, even my very long time ago ATI 9800 XT didn't like it very much

Some things spawn large numbers of huge full-res transparent layers, some things spawn very large reasonably-persistent decals, the game uses very large high-quality light sources, you can create a couple hundred simultaneous colliding particles with a single weapon... load varies, to say the least. It's spiky on original Xbox as well.

> These are not GPU particles.

I know.

> I know that every CPU particle in Crysis 1 could collide with both static and moving objects. But Second Son used screen-space GPU particles to save resources.

What's peculiar about these cases is that screen-space particle collision *should* allow for collision with any opaque surfaces, but this functionality is frequently stripped out by the time a game launches.

Halo Reach was supposed to be able to handle tens of thousands of particles with collisions that could either cause bounce or transform the particle into another phenomenon, but the shipping game didn't have particularly insane amounts of bouncing particles. Most stuff that spawns lots of sparks uses tons of particles that fall through the floor plus a couple of bouncing particles, making me wonder if they stripped the GPU bounce out and use CPU particles for a small number of collisions.

(The transformation still exists, and is used to excellent effect with rain; raindrops turn into splash sprites when they intersect opaque surfaces, and even create little normal-mapped splash waves when they land on flat environment surfaces.)
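For anyone wondering what "screen-space particle collision" amounts to, here's a minimal sketch on a single screen column: the particle's depth is compared against the depth buffer at the pixel it is about to move into, and a hit either bounces it or turns it into something else. The `Particle` fields, the `step` function and the `on_hit` modes are all assumptions for illustration, not Bungie's or Sucker Punch's implementation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Particle:
    row: int            # screen row, increasing downwards
    depth: float        # distance from the camera
    row_velocity: int   # rows moved per step
    kind: str = "raindrop"


def step(p, depth_buffer, on_hit="bounce"):
    """Advance one step, colliding against whatever opaque surface is in the depth buffer."""
    new_row = p.row + p.row_velocity
    if not 0 <= new_row < len(depth_buffer):
        return replace(p, row=new_row)  # off-screen: no depth data, so no collision
    if p.depth < depth_buffer[new_row]:
        return replace(p, row=new_row)  # still in front of the surface, keep moving
    if on_hit == "bounce":
        return replace(p, row_velocity=-p.row_velocity)
    return replace(p, row_velocity=0, kind="splash")  # transform into another effect
```

Because the test only reads the depth buffer, any opaque surface on screen can be collided with for free, and a particle that has left the screen simply keeps going in this sketch.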

> And if it is so easy to find reflections with GPU particles.

I didn't claim it was; I was speaking to particle systems in general. (The video I posted also isn't SSR, obviously.)

...Whether their movement is calculated on the GPU doesn't necessarily dictate whether they can be reflected, as rendering and physics are two different things. Although, implementation might influence where and how they can be used in the graphics pipeline. If they're in the color buffer prior to reflections and have depth information, they can be reflected by SSR. If they're in the color buffer prior to reflections and don't have depth information, they can be incorrectly reflected by SSR. If they're not in the color buffer prior to reflections, they won't be reflected by SSR.
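Those three cases boil down to a tiny decision table. Here it is as a sketch; the enum and function names are made up for the example.

```python
from enum import Enum


class SsrOutcome(Enum):
    REFLECTED = "reflected"
    REFLECTED_INCORRECTLY = "reflected incorrectly"
    NOT_REFLECTED = "not reflected"


def ssr_outcome(in_colour_buffer_before_reflections, has_depth):
    """What SSR does with an effect, given when it is drawn and whether it has depth."""
    if not in_colour_buffer_before_reflections:
        return SsrOutcome.NOT_REFLECTED
    if has_depth:
        return SsrOutcome.REFLECTED
    return SsrOutcome.REFLECTED_INCORRECTLY
```

Note that nothing in the table asks whether the particle's motion was simulated on the CPU or the GPU.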

> I don't believe Uncharted 4 employs SSR very much, if at all. I didn't notice it personally while playing at least, unless there were reflections that were SSR that I did not recognize.

It's definitely there, but a lot of reflective surfaces don't employ it, probably for both image stability and performance reasons.

Part of what makes it less noticeable is that it's less artifacty than most implementations. The box-projected cubemaps are usually pretty well-made and the capsule occlusion takes over for character reflections as people leave the screen.

rockaman

Regular

Capsule AO (ambient occlusion?), very interesting. I noticed in DriveClub particularly that you can move the camera to "remove" reflections in the car body in the photo mode a lot.

But I bet it is harder to reflect the sharper edges we find on cars than soft-bodied characters? Maybe; that's a bit of guesstimation on my part.

I once had an excellent picture for this but I think I've lost it now

> Capsule AO (ambient occlusion?)

I edited my post to read "capsule occlusion", as "AO" is perhaps a reductive description.

Human characters in the game, in addition to their actual model, have a simple representation made up of a bunch of spheroidish "capsules." It's simple enough that it can be used in shader calculations to occlude light via crude cone tracing. So, when the character is off-screen and the SSR no longer picks up their model, environmental cubemap specular is still being occluded in that direction by the blobby capsule representation of the character.
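To give a feel for how cheap that kind of test can be, here's a rough sketch. It treats a capsule as a short chain of spheres and estimates how much of a reflection cone each sphere blocks. Loud caveat: the overlap ramp, the coverage term, the sphere-chain shortcut and every name here are my own crude assumptions for illustration, not Naughty Dog's actual math.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _lerp(a, b, t):
    return tuple(a[i] + (b[i] - a[i]) * t for i in range(3))


def sphere_cone_occlusion(p, direction, cone_half_angle, centre, radius):
    """Crude fraction of a cone (apex p, unit-length axis direction) blocked by a sphere."""
    to_centre = _sub(centre, p)
    dist = math.sqrt(_dot(to_centre, to_centre))
    if dist <= radius:
        return 1.0  # shading point is inside the sphere
    sphere_angle = math.asin(radius / dist)  # angular radius of the sphere seen from p
    cos_sep = max(-1.0, min(1.0, _dot(to_centre, direction) / dist))
    separation = math.acos(cos_sep)          # angle between cone axis and sphere centre
    overlap = cone_half_angle + sphere_angle - separation
    if overlap <= 0.0:
        return 0.0  # cone and sphere do not touch
    ramp = min(1.0, overlap / (2.0 * min(cone_half_angle, sphere_angle)))
    coverage = min(1.0, (sphere_angle / cone_half_angle) ** 2)
    return ramp * coverage


def capsule_occlusion(p, direction, cone_half_angle, a, b, radius, samples=5):
    """Approximate a capsule (segment a-b plus radius) with spheres; samples must be >= 2."""
    return max(
        sphere_cone_occlusion(p, direction, cone_half_angle,
                              _lerp(a, b, i / (samples - 1)), radius)
        for i in range(samples)
    )


def occluded_specular(cubemap_specular, occlusion):
    """Darken the cubemap specular term by the estimated occlusion."""
    return cubemap_specular * (1.0 - occlusion)
```

None of this touches the screen buffers, which is why it keeps working after the character has left the frame and SSR has lost them.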

> But I bet it is harder to reflect the sharper edges we find on cars than soft-bodied characters? Maybe; that's a bit of guesstimation on my part.

Maybe. It would be possible to use capsules to approximate a car, but it's debatable whether there's much point in bothering, at least as far as reflections are concerned. Cameras in racing games are relatively SSR-friendly. They stay pretty level so the horizon isn't constantly bobbing in and out of view, and cars that need to occlude light or be reflected also tend to be on-screen.

The chase scene in Uncharted 4 is a step higher

And there is nothing like that in Doom

And there's nothing like any of the Doom arena battles in UC4. Please stop making apples-to-oranges comparisons. That's not just aimed at you.

rockaman

Regular

> Are you guys seriously comparing the hero character in UC4 to characters in a sports game that features like hundreds of players??

100s of players? Which NBA are you watching?

Basketball has 10 players in a very limited environment. Bench players always have much lower-LOD models, coaches also look like garbage, and audience members essentially look like HD PS2 characters.

It's apples and oranges, but let's not pretend that sports games have huge massive environments or something. They aren't pushing technical boundaries very much beyond the on-court character models (10 players for basketball, 12 for hockey).

Any modern action game easily puts as many high-quality character models in its much, much larger environments.

rockaman

Regular

> I'm talking about the assets. UC4 has like 6 talking leads and lots of henchmen, NBA has hundreds of players. Do the math.

They don't actually create all those faces individually from scratch. All NBA games use head-scanning technology. They also devote considerably more attention to star players and popular players, which is like 50 players max.

And if you're saying Uncharted doesn't have to create so many high-quality characters, well then NBA also does not need to create incredibly high-fidelity environments either. Almost all the graphics budget in NBA games can go to characters, whereas it can't in Uncharted. So the comparison is not as completely lopsided as you are suggesting.

It would kind of make sense that they have a lot more time to invest in their character model design, and it shows: the character models in NBA 2K16 and NBA Live look great. The comparison existing doesn't mean anyone said NBA Live is "completely outclassed" or anything.

You should be less offended that there is a comparison, that's all. Too much offense taken. Chill out.
