Also, why is everyone assuming that the total bandwidth figure of 200GB/s+ is automatically just marketing speak? Just because adding in the CPU happens to bring it to 200GB/s? If so, it wouldn't make sense for them to say MORE than 200GB/s... that would add up to precisely 200GB/s, no?

Just because DF and Anandtech assume it's the CPU's contribution doesn't make that a given. We DID have that VGLeaks article claiming the specs had been adjusted a month or so ago (same rumor as the XboxMini + BC info). And that bandwidth is clock-dependent, so if they upped the clocks at all, it'd point to a higher bandwidth figure.
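To make the clock-dependence point concrete: the leaked 102.4GB/s eSRAM figure at 800MHz works out to 128 bytes per clock, so if that interface width is fixed (an assumption inferred from the leaked numbers, not anything MS has confirmed), eSRAM bandwidth scales linearly with the clock. A quick Python sketch:

```python
# Peak bandwidth = bytes transferred per clock * clock rate.
# ASSUMPTION: the eSRAM interface width is fixed and is inferred from the
# leaked 102.4 GB/s @ 800 MHz figures (not an official spec).
LEAKED_ESRAM_GBPS = 102.4
LEAKED_GPU_MHZ = 800.0


def bytes_per_clock(bandwidth_gbps: float, clock_mhz: float) -> float:
    """Bytes moved per clock cycle for a given peak bandwidth and clock."""
    return bandwidth_gbps * 1e9 / (clock_mhz * 1e6)


def esram_bandwidth_gbps(clock_mhz: float) -> float:
    """Peak eSRAM bandwidth in GB/s if the interface width stays fixed."""
    width = bytes_per_clock(LEAKED_ESRAM_GBPS, LEAKED_GPU_MHZ)
    return width * clock_mhz * 1e6 / 1e9


if __name__ == "__main__":
    print("bytes/clock:", round(bytes_per_clock(LEAKED_ESRAM_GBPS, LEAKED_GPU_MHZ), 3))
    for mhz in (800, 900, 1000):
        print(mhz, "MHz ->", round(esram_bandwidth_gbps(mhz), 1), "GB/s")
```

So any clock bump shows up directly in the bandwidth number; there's no way to raise the GPU clock and keep the eSRAM figure at 102.4GB/s under that assumption.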

Like Rangers, I too find it interesting that sebbbi used the 200GB/s+ figure as his starting point when he could just as easily have qualified his estimates with a sentence like "if you assume the leaks are true and total bandwidth was 170GB/s...". Add to that the rumors of the consoles supposedly running hot and having relatively weak yields, the slight delays in shipping beta kits out, and the fact that MS disclosed neither the clocks nor the bandwidth for the eSRAM/GPU/CPU at the reveal or in the hardware panel. It seems to me the reason they bragged about the 8-core CPU, the custom DX 11.1 AMD GPU, the 8GB of RAM, etc. is that those are all qualitatively identical to the PS4 on the surface. But... so are the leaked clocks.

If their agenda was to paint X1 as identical to PS4 hardware-wise, they should likewise have trumpeted "an 800MHz GPU with 170GB/s of available bandwidth and a 1.6GHz CPU...", no? Unless, of course, they really did make last-minute changes to the clocks, as VGLeaks' source claimed.

Just for fun, how would having, say, 204.8GB/s of overall bandwidth change things? How would that affect flops, clocks, etc.?

It'd be something like this perhaps:

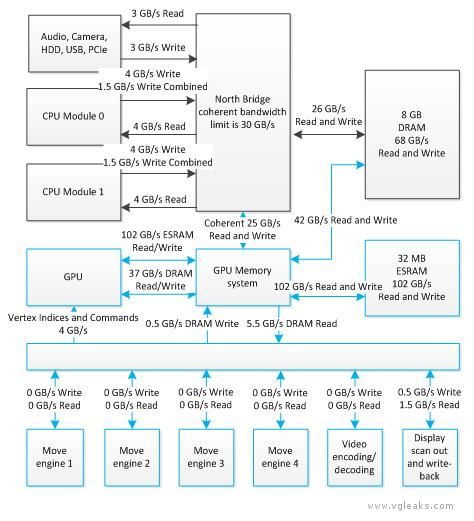

204.8GB/s total to the GPU ("more than 200GB/s of available bandwidth"), not including any CPU contribution

204.8-68.3=136.5GB/s for eSRAM
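For reference, the 68.3GB/s being subtracted is the DDR3 main-memory figure. Assuming the leaked configuration of DDR3-2133 on a 256-bit bus (both numbers come from the leaks, not from anything MS announced), it falls out like this:

```python
# ASSUMPTION: leaked DDR3 configuration of 2133 MT/s on a 256-bit bus.
DDR3_MTPS = 2133          # mega-transfers per second
BUS_BYTES = 256 // 8      # 256-bit bus = 32 bytes per transfer


def ddr3_bandwidth_gbps(mtps: float = DDR3_MTPS, bus_bytes: int = BUS_BYTES) -> float:
    """Peak DDR3 bandwidth in GB/s: transfers/s * bytes per transfer."""
    return mtps * 1e6 * bus_bytes / 1e9


def esram_share_gbps(total_gbps: float, ddr3_gbps: float) -> float:
    """Whatever is left of the quoted total after the DDR3 pool is removed."""
    return total_gbps - ddr3_gbps


if __name__ == "__main__":
    ddr3 = ddr3_bandwidth_gbps()
    print("DDR3:", round(ddr3, 1), "GB/s")
    print("eSRAM share of 204.8:", round(esram_share_gbps(204.8, ddr3), 1), "GB/s")
```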

(136.5/102.4) * 800MHz = 1.066GHz GPU ==> 1.64 TFLOPS

Assuming the CPU and GPU clocks stay locked together on the SoC (the leaked 2:1 ratio of 1.6GHz to 800MHz), that's a CPU at 2.13GHz.
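Here's the whole back-of-envelope chain as a Python sketch, so anyone can plug in a different total. The shader count (12 CUs x 64 = 768 ALUs, 2 FLOPs per ALU per clock via FMA) and the fixed 2:1 CPU:GPU clock ratio are assumptions taken from the leaked specs, not confirmed figures; the function name `scenario` is just illustrative.

```python
# Hypothetical back-of-envelope chain: total bandwidth -> eSRAM -> clocks -> flops.
# ASSUMPTIONS (leaks/speculation, not confirmed by MS):
#   - leaked baseline: 800 MHz GPU, 102.4 GB/s eSRAM, 1.6 GHz CPU
#   - 12 CUs x 64 lanes = 768 ALUs, 2 FLOPs per ALU per clock (FMA)
#   - eSRAM bandwidth scales linearly with GPU clock
#   - CPU clock stays locked at the leaked 2:1 ratio to the GPU clock
BASE_GPU_MHZ = 800.0
BASE_ESRAM_GBPS = 102.4
CPU_TO_GPU_RATIO = 1600.0 / 800.0
ALUS = 12 * 64
FLOPS_PER_ALU_PER_CLOCK = 2


def scenario(total_gbps: float, ddr3_gbps: float = 68.3) -> dict:
    """Derive eSRAM bandwidth, GPU clock, GPU TFLOPS and CPU clock from a total."""
    esram = total_gbps - ddr3_gbps
    gpu_mhz = esram / BASE_ESRAM_GBPS * BASE_GPU_MHZ
    tflops = ALUS * FLOPS_PER_ALU_PER_CLOCK * gpu_mhz * 1e6 / 1e12
    cpu_ghz = gpu_mhz * CPU_TO_GPU_RATIO / 1000.0
    return {"esram_gbps": esram, "gpu_mhz": gpu_mhz,
            "gpu_tflops": tflops, "cpu_ghz": cpu_ghz}


if __name__ == "__main__":
    for total in (170.7, 204.8):  # leaked baseline vs. the "more than 200GB/s" case
        r = scenario(total)
        print(f"{total} GB/s -> eSRAM {r['esram_gbps']:.1f} GB/s, "
              f"GPU {r['gpu_mhz']:.0f} MHz ({r['gpu_tflops']:.2f} TFLOPS), "
              f"CPU {r['cpu_ghz']:.2f} GHz")
```

Feeding it 170.7GB/s (68.3 + 102.4) gives back the leaked 800MHz/1.6GHz baseline, which is a decent sanity check that the chain is consistent before trusting the 204.8GB/s numbers.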

Before you shout at me that this CPU clock can't possibly work given the thermal limits in Jaguar's spec, I'll note that X1's CPU isn't actually a Jaguar (even though everyone is reporting it as one, based on assumption).