PS5 will be 2018, just to piss people off.

I think Sony will want to compete with the Marvel Cinematic Universe and release a new one every six months or so. Gotta keep up with the times!

PS4 Pro Speculation (PS4K NEO Kaio-Ken-Kutaragi-Kaz Neo-san)

- Thread starter mpg1

- Tags

- sony sony roach motel

- Status

- Not open for further replies.

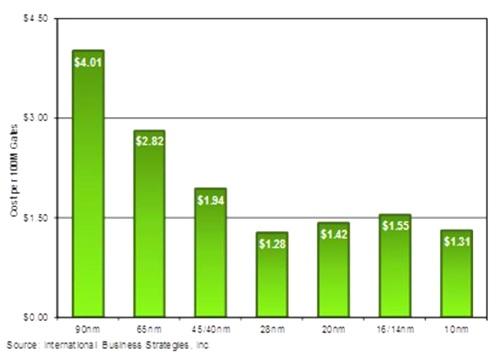

I wonder if it really is that important. The lower cost is questionable. A new node tends to be more expensive and, as discussed earlier, the dies are already so small that taking full advantage of the shrink is complicated. Also, the PS4 is already quite small. Does it really need to be smaller? PS4 Neo probably has around the same power draw, if not slightly less. I don't think these consoles really rely on shrinking the die until, and if, it becomes much cheaper to do so, and by then Sony might already have two more powerful SKUs out.

Not sure if it's still valid, but 10nm and 7nm are the nodes expected to bring back a nice drop in cost per transistor and to have the longevity of 28nm. They should see massive volume.

http://www.eetimes.com/author.asp?section_id=36&doc_id=1326864

Also, reducing power consumption saves additional costs in many other places. I suppose as 10nm and 7nm mature they would continue to drop in cost and improve in yield, just like 28nm did?

edit: fixed nodes that are different, unlike 14/16 which are "same same, but different, but still same"

I don't think you can pair 10nm and 7nm like you would 16/14nm. Generally 10nm is to 7nm what 28nm was to 20nm, or perhaps more ominously 20nm to 16/14nm in terms of total improvement.

I wasn't sure on this so I put out feelers on how others felt. Something caught my eye when I saw both CPU and GPU come in at much higher MHz. If we look at the Xbox today, with its oversized cooling solution, it's still significantly underclocked compared to the specs shown here. Which is why I felt that the spec sheet for Neo could not be taken at face value. My general feeling, and I think we can agree, is that it's unlikely to be a 28nm SoC.

It's an interesting question. Optimistically, and taking GF, AMD and Samsung claims at face value, you should be able to get about twice the performance for the same power by going wider, or about 35% faster for the same power just by upping the clock. I think that was mostly with reference to GPU, but I can't remember and I can't find the damn slides now!

Here's something from last year that alludes to something similar: http://www.dailytech.com/TSMC+Hypes...ggles+to+Hit+Volume+at+16+nm/article37298.htm

Anyway, it might be possible for Sony to get both the 30% clock jump for Jaguar and the double-width GPU (with a slight upclock) at something close to the launch PS4's power draw. Perhaps they could even share the expensive HS&F and power modules with the PS4 if that was the case ...?

Edit: Or Neo could be based on a 500 mm^2 28nm chip and the system could be pulling 250W
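For anyone who wants to see what those percentages mean in actual clocks, here is a rough sketch. The launch PS4 clocks (1.6 GHz CPU, 800 MHz GPU) are not stated in this thread and are assumed from the publicly known specs, and `scale_clock` is just a helper name made up for the example.

```python
# Launch PS4 clocks. These are NOT stated in the thread; they are
# assumed here from the publicly known launch specs.
PS4_CPU_GHZ = 1.6
PS4_GPU_MHZ = 800.0


def scale_clock(base_clock: float, gain_percent: float) -> float:
    """Return base_clock raised by gain_percent percent."""
    return base_clock * (1.0 + gain_percent / 100.0)


# The "30% clock jump for Jaguar" mentioned in the post.
cpu_plus_30 = scale_clock(PS4_CPU_GHZ, 30.0)
# The "about 35% faster for the same power" vendor claim, applied to the GPU.
gpu_plus_35 = scale_clock(PS4_GPU_MHZ, 35.0)

print(f"Jaguar at +30%: {cpu_plus_30:.2f} GHz")
print(f"GPU at +35%:    {gpu_plus_35:.0f} MHz")
```

Under those assumptions the CPU figure lands close to the 2.1 GHz quoted elsewhere in the thread, while the full 35% on the GPU would be well above a "slight upclock".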

1.75 GHz CPU / 853 MHz GPU (Xbox One)

vs

2.1 GHz CPU / 910 MHz GPU (Neo spec sheet)
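To put a number on that gap, a quick sketch of the relative uplift between the two sets of clocks. Reading the GHz figures as CPU clocks and the MHz figures as GPU clocks is my assumption, and `uplift_percent` is a hypothetical helper.

```python
def uplift_percent(old_clock: float, new_clock: float) -> float:
    """Return the relative increase from old_clock to new_clock, in percent."""
    return (new_clock / old_clock - 1.0) * 100.0


# Figures as listed in the post; the CPU/GPU labels are an assumption.
cpu_uplift = uplift_percent(1.75, 2.1)  # GHz
gpu_uplift = uplift_percent(853, 910)   # MHz

print(f"CPU clock uplift: +{cpu_uplift:.1f}%")
print(f"GPU clock uplift: +{gpu_uplift:.1f}%")
```

So the CPU difference is sizeable while the GPU clock difference is fairly small; most of the GPU gain would have to come from width rather than clock.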

Anyone want to calculate the power draw?

Rumors said that Polaris 10 will use ~110W, but that was already with 8GB of GDDR5 and higher clocks than PS4K will use. With the added CPU module and other console components, I think PS4K will have a similar TDP to the launch PS4: ~150W.
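A back-of-the-envelope version of that estimate, using only the two rumored figures from the post. Both numbers are rumors or guesses, not measurements, and `remaining_budget_w` is a made-up helper name.

```python
def remaining_budget_w(system_w: float, gpu_board_w: float) -> float:
    """Power left for everything except the GPU board, in watts."""
    if gpu_board_w > system_w:
        raise ValueError("GPU board power exceeds the system budget")
    return system_w - gpu_board_w


POLARIS10_BOARD_W = 110.0  # rumored; already includes 8GB of GDDR5
PS4K_SYSTEM_W = 150.0      # the poster's guess, similar to the launch PS4

rest_w = remaining_budget_w(PS4K_SYSTEM_W, POLARIS10_BOARD_W)
rest_share = rest_w / PS4K_SYSTEM_W

print(f"Left for CPU, drives, I/O and PSU losses: {rest_w:.0f} W "
      f"({rest_share:.0%} of the system budget)")
```

Since the rumored 110W was at higher clocks than PS4K would use, the real GPU share would presumably be lower and the remainder larger.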

Another thing to watch out for when using the current consoles as a starting point is that the Jaguar architecture was not a fully successful implementation in terms of power management and DVFS. Jaguar should have had turbo and better clocks than it did, as that was part of the architecture's announced feature set.

The later Puma chips finally had sufficient physical characterization or bug-fixing to get chips with turbo to 2.4 GHz, and more uniform base clocks of 1.8-2.0 GHz in the 15W power range. Those 28nm Cat cores might serve as a better reference on how much is really being done to update the CPU section.

As long as it outputs in 4K it should be fine, right?

It's like those 576p games that have a 720p or 1080p label.

AMD did shrink K10 to 32nm for Llano. They had yield issues on the CPU side and clock speeds were disappointing, rendering them uncompetitive.

These days, die shrinks aren't necessarily a magic wand that makes everything massively faster and cooler. The move from 32nm to 28nm at GF involved design choices that prioritised density increases (suiting GPUs) but hurt frequency at the top of the CPU spectrum.

The top-end Jaguar on desktop hits 2.05 GHz, and that sees the APU power bumped from 15W to 25W. The top-end Puma is 2.2 GHz, and that's desktop only, with a 25W TDP for the APU. Sony aren't doing badly with 2.1 GHz for 2 Jaguar modules. They're likely at the point where either power would start to skyrocket or yields would drop off. Jaguar simply isn't designed for high clocks, and Sony have a sizeable GPU in there needing power too.

I look at Intel's past gains from die shrinks on the PIII, into the rev-arch core and the P4, and I know about AMD's past die shrinks: the "poor yielding Athlon X2s on 65nm", which even had "magical bugs" preventing them from overclocking like the 90nm CPUs...

That architecture was blatantly made obsolete in favor of pushing out the first Phenoms, which shipped with bugs... something that could have been avoided if the focus had been on properly maturing 65nm to surpass 90nm, selling tons of chips, then shipping flawless quad-cores, then die shrinking... etc.

Llano smells a lot like the usual AMD shenanigans that cost them.

Who cares if overclockers were gonna snap them up... they could have mimicked an extended "tick tock boom" instead of superficially copying Intel (which is impossible, because Intel maxed out their performance before making replacements). Then again, AMD couldn't charge $999.95 for CPUs... some of the decision making just has not really been based on making strong transitions, and the same problem keeps magically appearing.

28nm Zambezi octa-core shrinks would have helped keep channels filled instead of letting them dry up and leaving Intel to collect most of the pie.

Jaguar can safely go to 2.4GHz.

Along with die shrinks there are usually additional process enhancements.

Jaguar+ can safely go to 4.2GHz.

Doubts are very justified at this point.

Remember that Sony needs to incentivise people to buy the new platform, while at the same time maintaining the original PS4 userbase.

If a game is 'too much better' on PS4K, and the PS4 version runs like crap, people might buy into the new system, but I would expect the backlash against Sony to be similar to what MS experienced at the beginning of this generation.

If a game runs great on PS4, without much of a difference from the Neo version, then what's the point of buying the bloody thing?

They will need to strike the right balance, right in the middle of these two outcomes, and I think it will be a tough job.

PS1 coexisted with PS2 for a ridiculous amount of time... we could argue that Sony and AMD stand to profit from 14nm-shrunk PS4 slims coexisting with the PS4Kaio Ken.

Remember that besides the "enhanced old games" there are still devs who would take up the challenge of making PS4Kaio Ken exclusives, which could be standard 1080p but with much higher image quality thanks to a combined fusion of all parts.

Uncharted 4 is already said to fill the 50GB Blu-ray with the single-player campaign alone, leaving the online multiplayer as a downloadable component, which is a smart move, as past Uncharted games didn't have huge online communities compared to the "usual suspects" and close rivals.

Again, a SATA-III 6Gb/s hardware controller chip built in, regardless (again) of diminishing returns on non-SSD drives, could provide an additional testing platform for early adopters who may already have 512GB and 1TB SSDs.

Doubt is casual adoption...and hardcores are unreliable consumers...but what if there's special exclusive titles?

Eventually the hardcore graphics whore is not gonna resist.

But Nvidia has less efficiency.

Lol, I like that, but wanted to say that we should remember the GeForce GTX 980, which back then had lower TDP and thermals than high-end GPUs usually did.

When Mark Cerny called it a "super-charged PC architecture" it's evident that PS4 is essentially a PC with fewer kinds of models and a closed architecture just like Mac. With x86, unlike Cell, they don't have to think about the long term ventures like depreciation of semiconductor factories by themselves. What they have to think about is how they can maximize profit and reach at the global level, some countries need cheaper, affordable models, other countries need pricier and shinier models, etc. The variations with different HDD size could justify the prices of more expensive models, why not try more substantial customization with the additional bonus of UHD BD & HDR support?

Maybe it was designed to be flexible as a hidden deck of cards to play.

PCs rely on brute force...not consoles.

Rumors said that Polaris 10 will use ~110W, but that was already with 8GB of GDDR5 and higher clocks than PS4K will use. With the added CPU module and other console components, I think PS4K will have a similar TDP to the launch PS4: ~150W.

AMD already presented their engineering PC with "unnamed midrange" parts consuming less than 90W in OVERALL power draw, in the video with identical (according to AMD) PC components apart from the GPU: 28nm GeForce vs 14nm FinFET Polaris.

Iirc they only showed Battlefront fps matching, while being careful not to talk too much about benchmarks.

PS4Kaio Ken is a full CPU and GPU die shrink, arch revision Zenkai clocks power up.

Even accounting for a full power transformation the CPU/GPU are more than likely to either consume less or nearly similar figures.

My estimate would be a conservative 40W less overall than current PS4.

Transponster

Newcomer

That was Polaris 11 (not 10). The GPU itself was consuming around 50W. It will probably end up in the Home NX, which is why it was ready so early...

Love_In_Rio

Veteran

First development kits could be at 28nm? But that would be strange. Is it possible to use a discrete R380X paired with an overclocked PS4 CPU?

That was Polaris 11 (not 10). The GPU itself was consuming around 50W. It will probably end up in the Home NX, which is why it was ready so early...

That would satisfy Nintendo's TDP goals on a derivative part. I'll be glad if all three are on 14nm FinFET, leaving the variation to architecture and design goals.

I still feel that, numerically speaking, the shrink from 28nm to 14nm (plus FinFET) is a dramatic change in power efficiency, output etc. I'm kinda looking at it like 90nm to 45nm (before the wider usage of half nodes).

Compare an old G80 (90nm) GeForce 8800 GTX to a G92b (55nm) GeForce GTS 250, noting that Nvidia was somewhat conservative on TDP, wattage etc. (due to the lack of stiff competition, which only appeared in 2008 with RV770): refinements, enhancements... p-states.

G80 at 575/1350MHz vs G92b at 738/1836MHz, and a much cooler, more efficient part.

First development kits could be at 28nm? But that would be strange. Is it possible to use a discrete R380X paired with an overclocked PS4 CPU?

I wouldn't be surprised if 14nm FinFET engineering-sample prototypes, which have to be returned, are available, especially if it's coming soon.

Prototype PS3s based on engineering sample 90nm Cell/RSX existed in 2005...these are different contracts but if they are ramping up soon...then a full to spec sample must be available.

Also I do understand the conservative stance on no major enhancements however what if there's a sales phenomenon?

What if 40 million Kaio Kens are sold in the same PS4 timeframe? Likewise for XboxUno.Cinco.

Eventually something has to give.

Transponster

Newcomer

Curious now as to which Polaris 10 GPU was running that Hitman demo at 1440p/60fps...

Which? There should be only two: the full one and the one with 4 CUs disabled (about 10% lower performance, at worst). It's more of a "how" question: at roughly high settings at best. The SW:BF 1080p P11 demo was done using "MED PRESET".

Polaris 11 was consuming like ~50W

More like ~40W, probably.

I wonder, will PS4K be the secret sauce Japan Studio needs to release The Last Guardian?

Also, that Hitman demo was a DX12 version, so it wouldn't apply directly to PlayStation. Nevertheless, the PS4 Neo GPU spec leaks fit neatly with the Polaris 10 GPU specs leaked here: http://videocardz.com/58639/amd-polaris-10-gpu-specifications-leaked

But it seems like the PS4 Neo will have a higher clock speed than whatever that card is...

So, assuming Vega is going to be used for the Fury series, the PS4 Neo GPU will be equivalent to an R9 480X, if not a 490??

Unless AMD isn't doing X versions this time around and Polaris 10 is simply the 480 and 490. In which case the PS4 Neo GPU would be equivalent to the 480.

From the sounds of it there are 40 and 36 CU versions of Polaris 10, so they'll need either very high clock speeds (like Pascal, i.e. 1400MHz territory) or massive efficiency improvements (or a combination of both) to be able to displace the 390 and 390X.

So with these it's possible PS4K will be equivalent to a 490 (perhaps a heavily underclocked version). If neither of the above holds true, though, then it's more likely to be a 480 (non-X) equivalent. My money's on the latter.
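For a rough feel of the clocks involved, here is a sketch that compares raw FP32 throughput only. It assumes the usual GCN layout of 64 shader lanes per CU with one FMA (2 FLOPs) per lane per clock, and it assumes reference specs for the R9 390 (40 CUs at 1000 MHz) and 390X (44 CUs at 1050 MHz); none of those figures come from this thread. It deliberately ignores the "efficiency improvements" side of the argument, so treat it as an upper bound on the clock needed, not a prediction.

```python
LANES_PER_CU = 64    # assumed: GCN has 64 shader ALUs per compute unit
FLOPS_PER_LANE = 2   # assumed: one fused multiply-add per clock = 2 FLOPs


def gcn_tflops(cus: int, clock_mhz: float) -> float:
    """Peak FP32 throughput in TFLOPS for a GCN-style GPU."""
    return cus * LANES_PER_CU * FLOPS_PER_LANE * clock_mhz * 1e6 / 1e12


def clock_to_match_mhz(target_tflops: float, cus: int) -> float:
    """Clock in MHz a GPU with `cus` compute units needs to hit target_tflops."""
    return target_tflops * 1e12 / (cus * LANES_PER_CU * FLOPS_PER_LANE) / 1e6


# Assumed reference specs, not taken from the thread.
r9_390 = gcn_tflops(40, 1000.0)
r9_390x = gcn_tflops(44, 1050.0)

for cus in (36, 40):
    print(f"{cus} CUs: {clock_to_match_mhz(r9_390, cus):.0f} MHz to match the 390, "
          f"{clock_to_match_mhz(r9_390x, cus):.0f} MHz to match the 390X")
```

On raw throughput alone, both CU counts would need clocks above the assumed 390/390X reference clocks to draw level, which is why the answer hinges on how high Polaris 10 actually clocks and how much per-CU efficiency improves.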
