This finally puts to rest a lot of partisan nonsense about how PS5 eschewed wide shader arrays because Cerny smart, how PS5 eschewed VRS because Cerny smart, that PS5 didn't support int4 and int8 because Cerny smart, that PS5 has a special Geometry Engine that's super custom unique (designed by Cerny, he smart).

Cerny is very smart, but PS5 is the way it is because that was the best Sony could do at the time. Now that they have access to tier 2 VRS, full Mesh Shader equivalence, AI acceleration instructions, etc., they've got it all. They probably have Sampler Feedback too. And now that the best way to push compute further is to go wider and, if anything, a little slower on balance, they are doing that too.

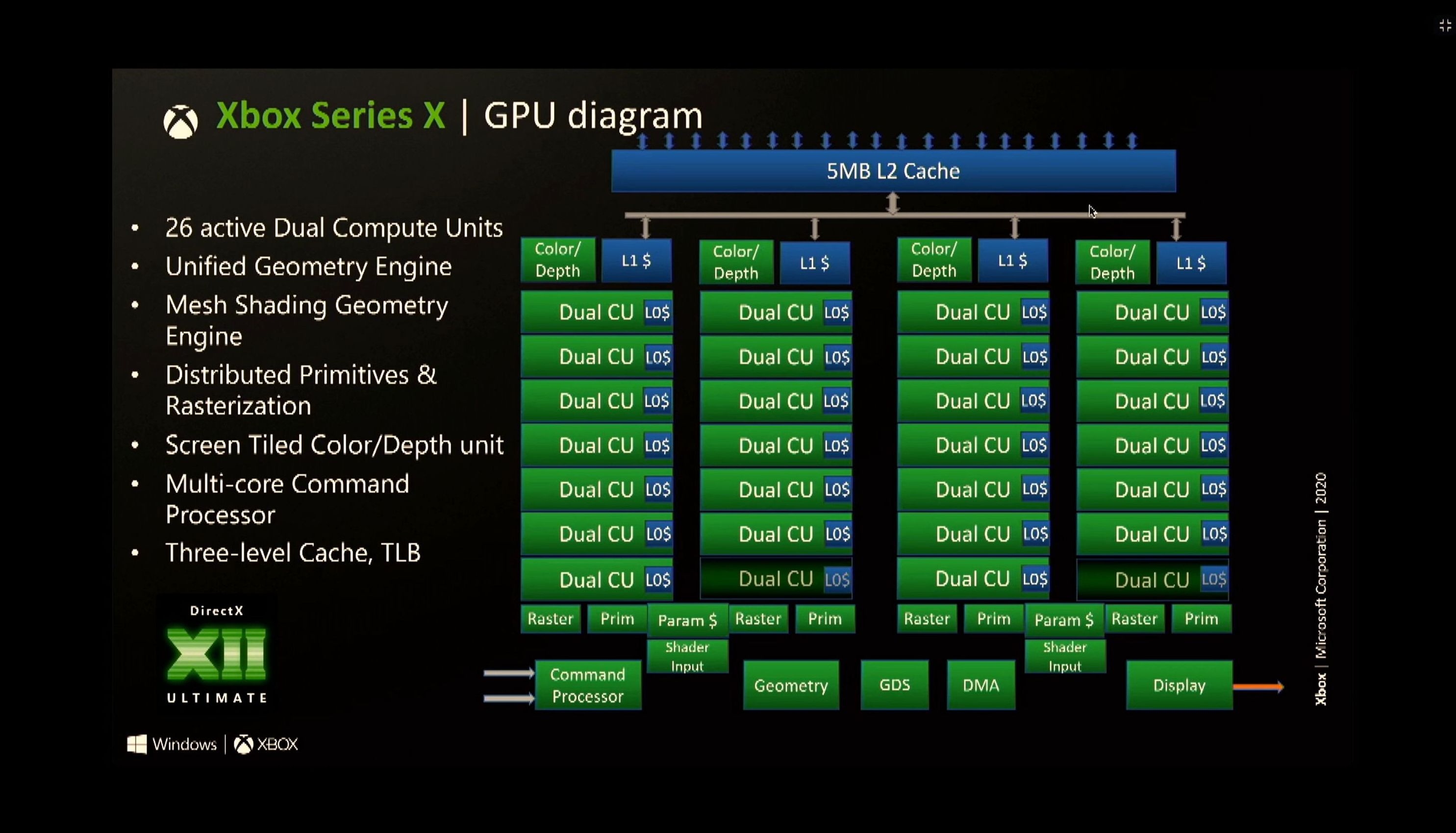

There's a reason that the PS5 Pro is looking similar to the Series X at a high level - it's because they're both derived from the same line of technology, and they both face the same pressures on die area and power, and they both have very smart people deciding what to do with what's available.

Bit of a bummer that PS5 Pro doesn't have any Infinity Cache, but understandable given that it eats up die area. Being 2 to 3x faster at RT in the absence of any IC is cool though, and makes me quietly optimistic for RDNA4 and any possible AMD-based handheld console.

@Dictator do you know how many ROPs the PS5 Pro has? Is it still 64?