They should be the exact same test. Look at the fps on your screen for the PS5: 27fps, just like in my video comparing the 4070S to the PS5. DF only uses two settings for Cyberpunk: RT Quality Mode or Performance Mode. This is RT Quality Mode.

Your video is of the 2160p 'resolution' quality mode and not the RT quality mode that I used for my comparison.

So yeah, completely different to what I did.

Digital Foundry Article Technical Discussion [2024]

- Thread starter Shifty Geezer

davis.anthony

Veteran

They're different settings which can clearly be seen in the video I used to get the settings.

Cyberpunk has two modes on consoles: ray tracing and performance. You're using the ray tracing mode settings, which are the same ones Rich used in all his videos for PC comparisons. Rich used the same settings for the 4070S. That's why the PS5 has the same frame rate in both videos. How can they be different settings when the PS5 is 27fps in both?

davis.anthony

Veteran

I'm not going backwards and forwards with you.

The proof they're different is in the video I used.

They aren’t, and I clearly explained it to you. There are two modes in Cyberpunk, and Rich is using the same mode in both video comparisons.

Your results don’t add up. Stop doubling down on being incorrect. The PS5 wouldn’t have the exact same fps in the same place with wildly different settings.

There are two modes, RT or Performance, and RT has a fixed resolution. You clearly didn’t use Performance Mode, so that leaves only one mode. What hidden third mode, with settings different from either, did you use?

davis.anthony

Veteran

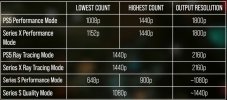

Sigh.....these are the settings confirmed by DF after they paid someone to revisit the game and find the equivalent settings, including matching the DRS scaling.

So no, RT mode isn't a fixed resolution; as you can clearly see in black and white, it says DRS.

My settings match what DF confirmed above after revisiting the game's settings.

Alex from DF even liked my comparison post, and I would like to think that if there was an issue with the settings I used he would have at least mentioned it, but he hasn't said anything so that should give an indication the settings I used are correct.

I'm not replying to you further.

Talking of which, as impressive as the game engine and the game are, I found the original game on PC to be more on the non-realistic side. It looked really good but unnatural. That's beside the point, though; it's impressive they managed to fit this on the Switch, especially taking into account how super demanding the game still is on PC. When I saw the video's thumbnail I double-checked, 'cos I couldn't believe they were talking about Kingdom Come: Deliverance.

Pretty astonishing work.

Also, and I might sound totally crazy for this, this version somehow manages to look... more realistic to me... than the main console or PC versions. At least in a sense. There's something about the low resolution and reduced texture detail, combined with the lower-framerate character animations, that gives it almost an FMV look. But even outside of characters, the sharpening and low resolution combined with the very natural lighting model seem to give environments a boost to realism as well, allowing my mind to fill in the difference more than the 'clearer' and smoother versions of the game do.

And here's the part that explains your much higher performance. This is the comment from the guy who matched the settings in the video you linked:

Hi, guys! This is Mohammed Rayan. I'm the one who worked on this for Digital Foundry. Just wanted to make a small correction to the 'Ray Tracing' mode's resolution there. 1440p is the internal (input) resolution which is then upscaled to 2160p via FSR 2. I should have mentioned "2160p FSR 2 (Quality mode)" there, not "1440p". Sorry about that.

So did you set your game to 1440p/DRS Quality or 4K/DRS Quality? Because the latter is correct and the former is what appears in the video but is incorrect.
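For reference, FSR 2's fixed quality presets use per-axis scale factors documented by AMD (Quality 1.5x, Balanced 1.7x, Performance 2x, Ultra Performance 3x), so "2160p FSR 2 (Quality mode)" and a 1440p internal resolution describe the same setup. A minimal Python sketch of that arithmetic, assuming a nominal, pre-DRS input resolution; `fsr2_input_resolution` and `pixel_count` are hypothetical helpers for illustration, not part of any AMD SDK:

```python
# Per-axis scale factors for FSR 2's fixed quality presets.
FSR2_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def fsr2_input_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Nominal internal render resolution that FSR 2 upscales to (out_w, out_h).

    Hypothetical helper. Assumes a fixed input: dynamic resolution scaling
    (DRS) can push the real input lower at runtime. Fractions are truncated.
    """
    ratio = FSR2_SCALE[mode]
    return int(out_w / ratio), int(out_h / ratio)


def pixel_count(res: tuple[int, int]) -> int:
    """Hypothetical helper: total pixels for a (width, height) pair."""
    w, h = res
    return w * h


if __name__ == "__main__":
    # "2160p FSR 2 (Quality mode)": 4K output, 1440p internal.
    four_k = fsr2_input_resolution(3840, 2160, "quality")
    # The other reading of the settings: 1440p output with the same preset.
    qhd = fsr2_input_resolution(2560, 1440, "quality")
    print(four_k)  # (2560, 1440)
    print(qhd)     # (1706, 960)
    # How many more pixels the 4K target renders internally.
    print(round(pixel_count(four_k) / pixel_count(qhd), 2))
```

Under these assumptions, picking 1440p instead of 4K as the output with FSR 2 Quality drops the internal resolution to roughly 960p, about 2.25x fewer pixels per frame, which is the kind of gap that would show up as much higher performance.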

DavidGraham

Veteran

0:00:00 Introduction and DF merchandise

0:02:26 News 01: Rise of the Ronin preview

0:11:40 News 02: Nvidia announces upcoming game enhancements

0:35:55 News 03: Intel unveils PresentMon 2.0

0:51:08 News 04: Stellar Blade demo released, quickly pulled

0:56:48 News 05: Peter Moore questions the future of consoles

1:12:11 Supporter Q1: Should console platforms let you impose frame-rate caps yourself?

1:19:39 Supporter Q2: What rendering tech will we see pushed after path tracing?

1:27:22 Supporter Q3: Should more developers expose debug menus in their games?

1:31:43 Supporter Q4: What storage tech do you work off of?

1:39:38 Supporter Q5: What would Digital Foundry have looked like in the 1990s?

RTX DI is very promising. In Cyberpunk 2077, the lack of shadows from local light sources was the biggest graphical weakness before they added path tracing. As mentioned in the video, shadows from local light sources are also missing in Avatar. Almost all games with local light sources share this problem. As in Cyberpunk 2077, the world of Star Wars Outlaws will also include environments with many light sources. RTX DI will improve this game greatly.

I like the worlds that Massive is building. Avatar, for example, is picturesque and evokes a mood like concept art. However, I would much rather have seen Massive do a world in the Dune or Blade Runner universe than Star Wars and Avatar.

As far as physics in games is concerned I find Control with PhysX very appealing.

DavidGraham

Veteran

New info from DF.

Spec Analysis: PlayStation 5 Pro - the most powerful console yet

Tech specs for PlayStation 5 Pro have leaked - Digital Foundry verifies them and delivers analysis and further information.

Hopefully the PS5 Pro has some Infinity Cache (maybe 32 or 64MB?), or that bandwidth might be a little tight to really take full advantage of the extra compute.

Interesting that VRS has been added to the Pro, so it's on par with the Series consoles now. That further confirms the PS5 is from a pre-RDNA 2 branch of RDNA.

Cache is usually the first thing tossed on consoles.

IMO, it's also another potential reason why MS may not release a mid-gen refresh: feature-wise, their hardware is already at parity.

*chonker*

solid @oliemack

Cooling will be very interesting on this console. I agree with Alex that all sorts of mini accelerators are where we need to go. The general 'compute' strategy will come back at a later time, but with power and price constraints coming in at a massive premium, we're going to have to go back to the older days of dedicated silicon to make the next breakthrough.

A very interesting video. One of the things they focused on is the lack of a CPU increase. How many games are CPU limited? I'm not sure, but I can't imagine Sony didn't review this data when deciding how to allocate their hardware budget. I'd argue that, compared to last gen, the games that are CPU limited are few and far between. Baldur's Gate 3 was referenced several times, but it comes across as the vocal minority? I mean, for every Baldur's Gate 3, you could probably name like 10 games that aren't CPU limited. They referenced a few upcoming games that are CPU limited, so maybe Dragon's Dogma or something else? That being said, if you look at the library of games on PS5 and compare the number of 60fps-capable games, it's surely much better than the PS4 gen, no?

All in all, it's a mid-gen upgrade. The specs are pretty alright. I'll probably buy 2 or so.

True, but looking at RDNA 3 cards, they seem to rely heavily on a phat L3 cache to keep bus width reasonable. If some kind of L3 was cheaper than a wider bus (and it seems to be on PC), they might have bitten the bullet.

If it had no Infinity Cache, and probably only 4MB of L2, the PS5 Pro would seem to be in a tight spot. RT is supposed to be pretty bandwidth heavy, and I don't know how you'd get a 2-4x RT improvement without something to mitigate the tight bandwidth. I'm guessing the PS5 Pro will have double the ROPs of PS5 / XSX, and that would seem to need a lot of bandwidth to support too. There's also CPU contention, I guess.

On another matter, I wonder what the arrangement of the GPU is. The PS5 and Series X consoles seem to use one redundant DCU per shader engine. If the PS5 Pro has two redundant DCUs, could that imply only two shader engines?
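The bus-width versus cache trade-off comes down to simple arithmetic: theoretical peak GDDR6 bandwidth is bus width times per-pin data rate. A minimal Python sketch using the publicly known PS5 and Series X memory configurations as inputs; `peak_bandwidth_gbs` is a hypothetical helper, and it gives theoretical peaks only, saying nothing about how much an on-die cache would relieve them:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (hypothetical helper).

    Every pin on the bus transfers data_rate_gbps gigabits per second;
    dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # PS5: 256-bit bus with 14 Gbps GDDR6.
    print(peak_bandwidth_gbs(256, 14))  # 448.0
    # Series X, 10 GB pool: 320-bit bus with 14 Gbps GDDR6.
    print(peak_bandwidth_gbs(320, 14))  # 560.0
```

At a fixed data rate, bandwidth scales linearly with bus width, and each extra 32 bits means another GDDR6 chip plus more board routing. That cost is what a large last-level cache like AMD's Infinity Cache is meant to offset.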

snc

Veteran

Nice, there is more memory available for games.

Spec Analysis: PlayStation 5 Pro - the most powerful console yet

Tech specs for PlayStation 5 Pro have leaked - Digital Foundry verifies them and delivers analysis and further information.

www.eurogamer.net

Yes, because the majority are cross-gen or cross-gen derived.

I can only imagine redundancy and final clock speeds are settled near the end. I suspect it will be 3 shader engines, but with only 2 shader engines this chip would be surprisingly close to the XSX.

That’s fair, but there haven’t been many “next-gen” games. Even then, I’d argue that the vast majority of them have a 60fps mode? I could be mistaken, but that is my recollection. There are like 2-5 games with CPU issues: Gotham Knights (a game design issue), Baldur's Gate 3, and maybe Dragon's Dogma, which isn't out yet. The rest of the games have a 60fps mode, with some even supporting 120fps. I don't know how a handful of games out of the 430+ PS5 games got spun into a narrative of CPU limitation. I mean, this gen devs have barely even used the CPU for anything interesting. The list I'm referencing: here
