It would be helpful if the same devs who championed low-level APIs years ago gave their take on how close DX12 comes to achieving that vision. I don’t think I’ve seen a single studio admit that DX12 is hard, yet the results haven’t been great.

Quite a few have expressed discontent about DX12, especially in the beginning. Stardock (the developer of the first ever DX12 game, Ashes of the Singularity, and one of those who championed lower level APIs) has posted a blog explaining why they abandoned DX12/Vulkan in favor of DX11 in one of their games, Star Control.

It basically boils down to the extra effort it takes to develop the DX12/Vulkan path: longer loading times and VRAM crashes are common in DX12 if you don't manage everything by hand. Performance uplift is also highly dependent on the type of game, and they only achieved a 20% uplift on the DX12 path of their latest games, which they say is not worth all the hassle of QA and bug testing.

In the end, they advise developers to pick DX12/Vulkan based on features, not performance: ray tracing, AI, utilizing 8 CPU cores or more, compute-shader based physics, etc.

Putting aside the theoretical power of Vulkan and DirectX 12 when compared to DirectX 11, how do they do in practice? The answer, not surprisingly, is: it depends.

www.gamedeveloper.com

Another developer, from Ubisoft, advised against going into DX12 expecting performance gains, since according to them even achieving performance parity with DX11 is hard.

“If you take the narrow view that you only care about raw performance you probably won’t be that satisfied with amount of resources and effort it takes to even get to performance parity with DX11,” explained Rodrigues. “I think you should look at it from a broader perspective and see it as a gateway to unlock access to new exposed features like async compute, multi GPU, shader model 6, etc.”

Tiago Rodrigues, 3D programmer at Ubisoft Montreal, hosted a talk on the Advanced Graphics Tech card at GDC last week, covering the company’s experiences porting over their AnvilNext engine to DirectX 12. The Advanced Graphics Tech prefix alone should have been the first hint that much of the low-

www.pcgamesn.com

Another veteran developer (Nixxes) states that developing for DX12 is hard, and that it can be worth it or not depending on your point of view. The gains from DX12 can easily be masked on high end CPUs running maxed out settings, and you end up with nothing. They also call Async Compute inconsistent and too hardware specific, which makes it cumbersome to use. They also state that the DX12 driver is still very much relevant to the scene, and with some drivers complexity goes up! VRAM management is also a pain in the ass.

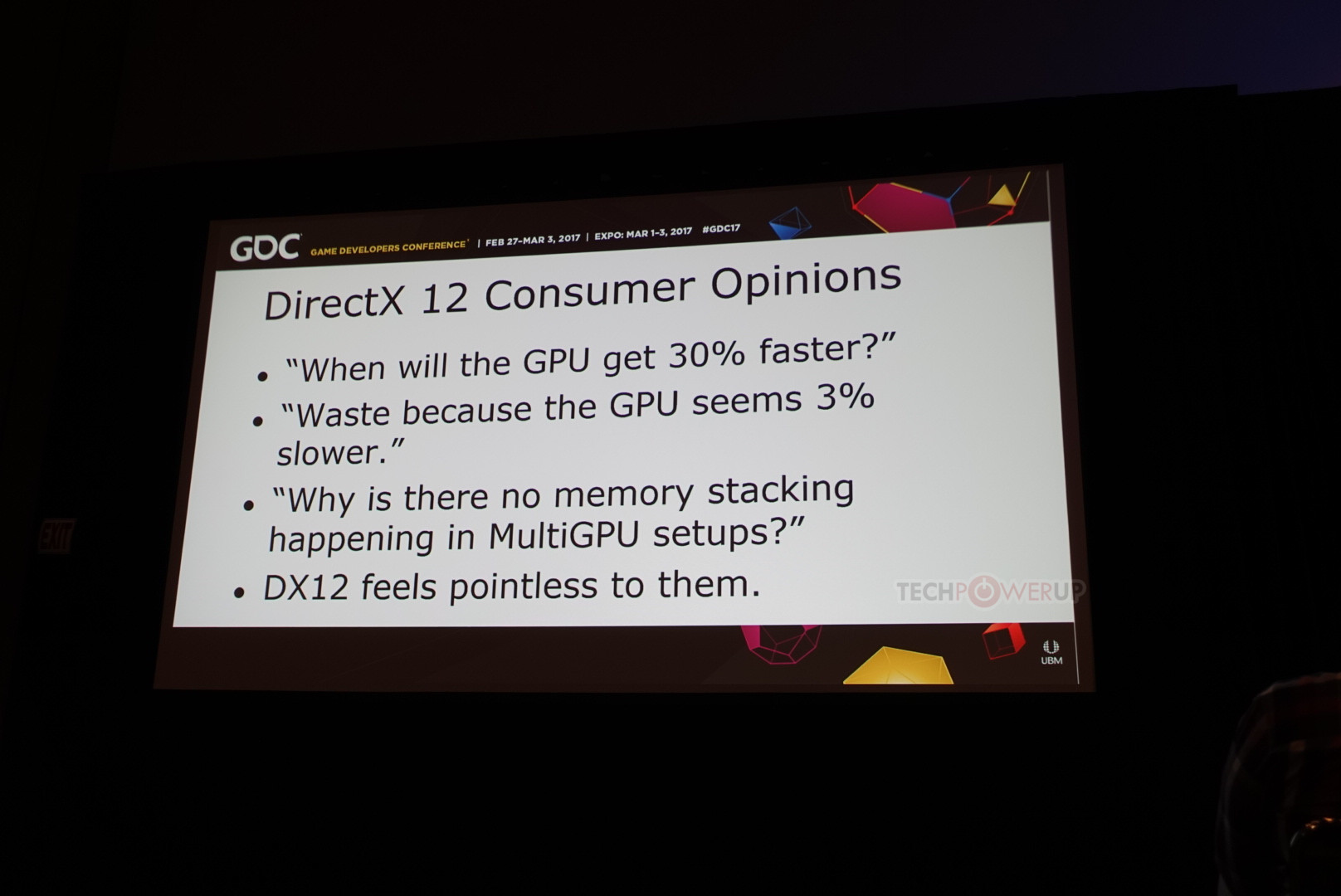

We are at the 2017 Game Developers Conference, and were invited to one of the many enlightening tech sessions, titled "Is DirectX 12 Worth it," by Jurjen Katsman, CEO of Nixxes, a company credited with several successful PC ports of console games (Rise of the Tomb Raider, Deus Ex Mankind...

www.techpowerup.com