Overstander

Newcomer

So I also reached the 10,000 character limit.

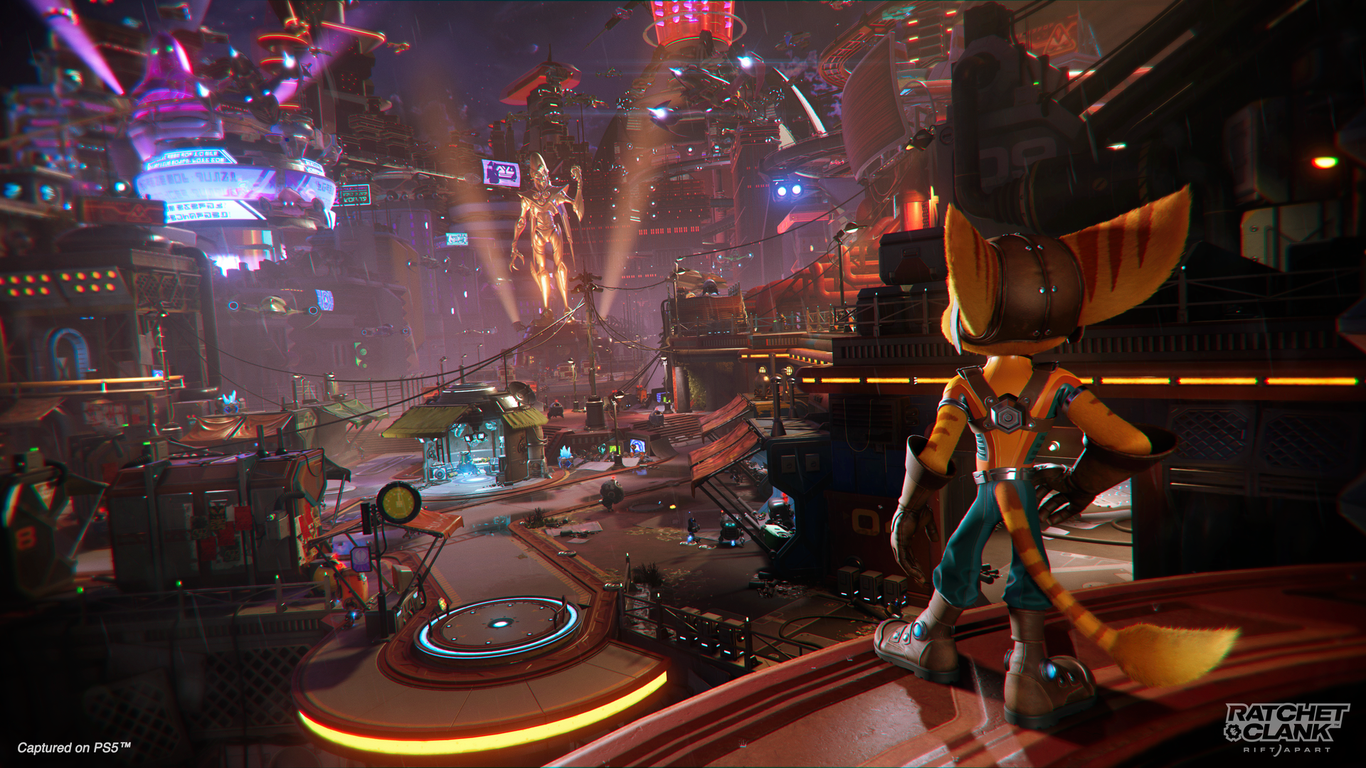

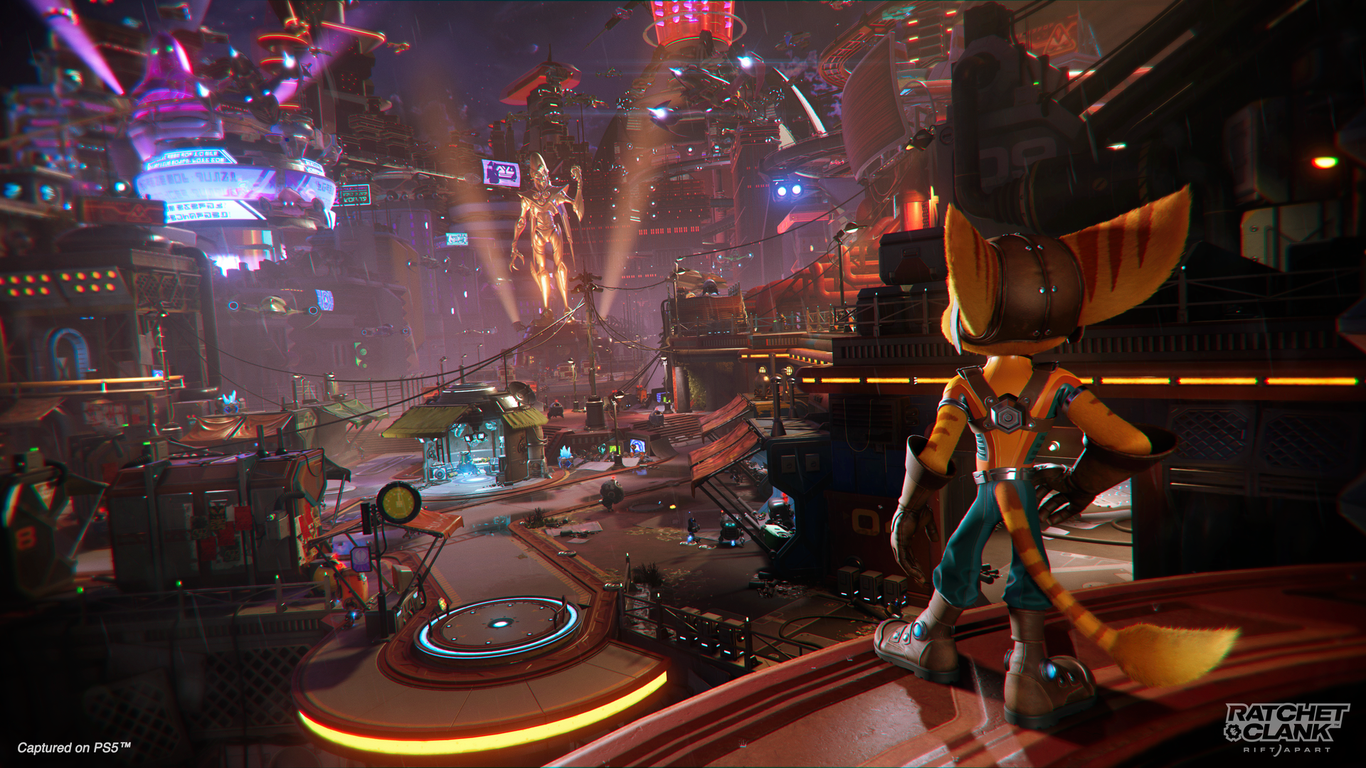

That's my point regarding the Jaguar - it is a netbook CPU. I think DF used one of those strange Xbox One APUs for desktop PCs a while ago with Cyberpunk and showed that it would not be a valid gaming CPU on PC.

But almost all games last generation were capable of running perfectly well on a dual-core CPU, which, despite having roughly 4x more performance per core than the console Jaguars, also has 4x fewer cores, so the overall performance is roughly equivalent. So it's not like PCs were throwing enormously greater amounts of CPU power at the problem to overcome this performance edge you speak of. Naturally a little more CPU power will always be needed on the PC side, though, to overcome the thicker APIs.

Only the fact that consoles have much more efficient APIs made it possible to use a netbook CPU in the last gen. Back then there was no multithreaded engine out there (in a AAA third-party game, anyway) until Assassin's Creed Odyssey, I think. If I recall correctly, that was the first time the usual-suspect CPUs with 4 or fewer cores failed to deliver the PS4 experience.

Also, the IPC of a Jaguar core is not good, because it was never intended to end up in a gaming machine. That's why many 2-core CPUs could outperform a PS4. It is like I said: back then the PC community already had much better HW when the gen started.

This time - not so much. Not for the majority of PC gamers.

What cost specifically? DirectStorage will remove the majority of the decompression load from the CPU. There is no CPU cost to this, it's a massive CPU saving. The GPU will be doing the work but by all reports the performance impact there will be negligible, or in Nvidia's words - barely measurable. It's not as if the spare GPU power isn't available to absorb this negligible impact.

As above, this is a false comparison, Richard was quite clear about that in his video and I'm sure would be disappointed to see it being twisted in this way.

I meant the cost in render time on GPUs. I simply doubt Nvidia's statement that RTX I/O is so lean on a GPU. Like I wrote above - I want to see something. At least a demo of some sort. That should not be so difficult. And to address the "a 2 TF sacrifice is enough to catch up with or even overtake the PS5's I/O capabilities" claim - I think that came out of another thread which was linked here a while ago. For one, I absolutely think that on RTX 20xx nobody has 2 TF to spare when it renders, let's say, a second-wave PS5 exclusive. I think trying to mimic the PS5's I/O throughput is much more stressful for a GPU than most people think. It is cute that Jen-Hsun Huang thinks that simply flipping a switch on an RTX 20xx / 30xx GPU is sufficient to mimic the PS5's I/O capabilities without adding all kinds of latencies to the CPU/GPU communication (which is already much higher than on consoles because of UMA instead of hUMA).

People seem to forget that the PS5's I/O is not only the Kraken unit. There is more HW there which is not present on PC.

Again, I doubt the Nvidia statement. If it were so much more efficient on the GPU ("barely measurable"), why did Sony or Cerny not take this approach when designing the PS5? It would have been way smarter to skip developing the whole custom I/O shenanigans, go with a slightly bigger GPU and do the decompression there. Smarter because, if a game for some reason did not rely much on decompression, they could use the GPU for better IQ and detail settings instead. Clearly there is something here that Nvidia is not telling us. And again - where are the demos?

RTX-IO, or more generally GPU-based decompression, is there to free up CPU cycles by moving that activity onto the GPU. Nvidia have stated that the impact of that on the GPU is "barely measurable", which stands to reason given that GPUs have orders of magnitude more compute power than CPUs. The RTX 3070, for example, has roughly double the compute performance of the PS5 (20TF vs 10TF). That doesn't translate directly into game performance, but if the GPU decompression is going to use that compute, then there is more than enough on tap with any Ampere to spare for this.
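To put the figures from this exchange side by side, here is a rough Python sketch. It takes the disputed "2 TF" decompression cost and the 20TF / 10TF numbers quoted above at face value; none of them are measurements, and the function and constant names are made up for illustration.

```python
def share_of_compute(cost_tf, total_tf):
    """Fraction of a GPU's peak compute consumed by a fixed TF cost."""
    if total_tf <= 0:
        raise ValueError("total_tf must be positive")
    return cost_tf / total_tf

DECOMPRESSION_COST_TF = 2.0  # assumption: the disputed "2 TF" figure
GPU_20TF = 20.0              # RTX 3070 figure as quoted in the thread
GPU_10TF = 10.0              # PS5-class figure as quoted in the thread

share_20tf = share_of_compute(DECOMPRESSION_COST_TF, GPU_20TF)
share_10tf = share_of_compute(DECOMPRESSION_COST_TF, GPU_10TF)

print(f"share of a 20 TF GPU: {share_20tf:.0%}")
print(f"share of a 10 TF GPU: {share_10tf:.0%}")
```

Under those assumptions the same fixed cost takes twice as large a share of a 10 TF GPU as of a 20 TF one. Whether the real cost is anywhere near 2 TF is exactly what a demo would have to show.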

Now putting aside the IO point and talking about raw performance, a 3070 is roughly equivalent to a 2080Ti. All benchmarks we've seen to date put the PS5 performance at between 2060s and 2080 level depending largely on the use of RT, with more RT pushing PS5 down that stack. RT will obviously increase as the generation goes on and we see more current gen exclusives, so that performance advantage is likely to solidify over time. Spiderman is just the latest in a long line of examples of this with the 3060 (2070 level) offering an equivalent or better experience to the PS5.

Logic simply suggests that any major custom HW development that costs many millions MUST be considered more efficient than using already existing (general-purpose) tech; otherwise the committees and engineers involved in the process would have pointed that out or even blocked further investigation into custom tech.

I don't want to come across as talking down to you, but forgive me when I say that does not make any sense at all. A game being 66GB does not tell you anything about floating-point calculations or texture/asset swaps. There might be, let's say, a high-quality tree model that weighs in at 500MB; copying that very model in and out of memory 10 times already causes 5GB of traffic, without the game being "sucked empty" lol. Also, why are you oblivious to the fact that any calculation of vectors, or basically any game data that goes through the GPU, adds up to several hundred GB of internal traffic within the GPU? L1 and L2 caches have hundreds if not thousands of GB/s of bandwidth.

That's been addressed many times here. The game is only about 66GB uncompressed. It's inconceivable that it's loading several GB/s worth of data with every camera move or else the game would be over in a handful of seconds.

https://www.beyond3d.com/content/reviews/55/7 <- This here suggests a mere 1087.74 GB/s Bandwidth for L1 alone on an old GTX 470...

Playing a game for one hour has probably caused 1 million GB worth of internal GPU traffic, for all we know...
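The back-of-the-envelope numbers above can be checked with a few lines of Python. This is only a sketch: it treats the 1087.74 GB/s L1 figure as a theoretical peak, uses 1 GB = 1000 MB, and the sustained utilization over an hour of play is unknown, so it is left as a parameter. The function names are made up for illustration.

```python
L1_PEAK_GB_PER_S = 1087.74  # GTX 470 L1 figure from the linked review
SECONDS_PER_HOUR = 3600

def copy_traffic_gb(asset_mb, copies):
    """Traffic in GB from moving one asset `copies` times (1 GB = 1000 MB)."""
    return asset_mb * copies / 1000

def hourly_traffic_gb(bandwidth_gb_per_s, utilization=1.0):
    """GB moved in one hour at a sustained fraction of peak bandwidth."""
    return bandwidth_gb_per_s * SECONDS_PER_HOUR * utilization

tree_traffic = copy_traffic_gb(500, 10)          # the 500MB tree, moved 10 times
peak_hour = hourly_traffic_gb(L1_PEAK_GB_PER_S)  # upper bound at 100% of peak
needed = 1_000_000 / peak_hour                   # utilization for 1 million GB

print(f"tree copies: {tree_traffic:.0f} GB")
print(f"one hour at peak L1 bandwidth: {peak_hour:,.0f} GB")
print(f"utilization needed for 1 million GB: {needed:.1%}")
```

At full peak the L1 figure alone works out to roughly 3.9 million GB per hour, so 1 million GB would need about a quarter of that peak sustained for the whole hour. That is internal cache traffic, not data read from storage.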

The game does load new areas very fast when a portal is used, but that's simply a loading speed challenge. Something that could well be a little slower on PC at the moment until DirectStorage GPU decompression is available, but if that equates to 2 second portal transitions vs the current 1 second then that's not exactly a deal breaker, and could very likely be mitigated with additional pre-caching into the PC's larger memory pool. Outside of those portal transitions there's no reason to believe the game wouldn't perform similarly to Spiderman insofar as a 3060 class GPU being capable of providing an equivalent experience. I would actually expect the game to be more forgiving on the CPU side due to the much slower world traversal, which likely requires more modest streaming and certainly far less frequent/significant BVH updates, which are the main cause of the high CPU requirements in Spiderman.

The game swaps out the entire memory contents of a level in a second, that's one thing. The other thing is that Rift Apart indeed makes use of Cerny's forecast new memory paradigm of keeping only about 1 sec of gameplay resident in memory.

Memory in high active use.

View attachment 6823

Rift Apart's method of memory usage is mentioned here:

Interview: How Insomniac made "Ratchet & Clank: Rift Apart" look so good (www.axios.com)

The veteran studio says the PS5's SSD helped in some unexpected ways.

Also here: "We spend as much memory as we can on just the things you can see in front of you at this moment. And the more we can do that and learn how to do that, the more stuff we can cram in there on, like a per pixel by pixel, basis."

So that will be it for right now - sorry to the other people who answered me - I will come back.

Those big posts are quite exhausting - especially for a non-native English speaker hrhrh