First post on this forum after quite a long time lurking.

So what I think about the Spider-Man port to PC is that, contrary to the overall opinion in this thread, PC is in trouble as a platform (not any high-end user, mind you, but PC as a platform for gamers). Consoles offer much better performance per watt than PCs; they are engineered that way.

You can always make things run more smoothly if you move from general-purpose hardware (PC) to hardware built specifically to run game code as efficiently as it can possibly get.

Console APIs in particular (and here Sony really shines) are much leaner and have every unnecessary layer removed.

That was and still is true for the PS4. The latest video from Richard Leadbetter shows this: the PS4 cannot be matched on PC with similar hardware. He runs the game at 900p and it still performs worse, while the base PS4 version renders at 1080p almost all of the time, with a hard-locked 30 fps. And that is with Richard Leadbetter using a CPU that runs circles around the PS4's Jaguar cores.

The performance edge that consoles have did not show as much last generation, mainly because of the extraordinary advantage PC CPUs had over the Jaguar cores. The consoles' performance and API edge over PC was, in a way, "sacrificed" by using a CPU that under normal (PC) circumstances would be totally unfit for gaming.

Only the lower-friction environment on consoles (hardware- and software-wise) made it possible to build a whole generation of still very good-looking games on a netbook-class CPU, at a time when people were using far more powerful PC CPUs.

This time, however, everything is different!

This time a very good CPU is used, but it is still a lean, frictionless console environment. Even more so on PS5, where the dedicated hardware decompression block frees up even more CPU time. That is something the PC simply cannot copy 1:1.

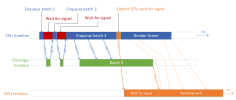

DirectStorage will help, but it will come at a cost.

I'll skip a few points now and address my take on the question of whether an RTX 3070 is sufficient to play future PS5 ports at the same (or even better) detail settings.

My answer is, of course, NO.

We all see what it takes, CPU- and GPU-wise, to run a mere PS4 game upgraded with a PS5 patch on PC at the same settings. Gone are the times when much weaker PC hardware (read: GTX 750) could bring something to the table.

A page or so earlier in this thread I saw the idea that people with RTX 30 series or even RTX 20 series GPUs would be fine simply because RTX I/O is here to save the day. It was acknowledged that RTX I/O on RTX 20 and 30 series cards does not even come with dedicated decompression hardware; Nvidia simply wants to use underutilised parts of the GPU. Someone stated that sacrificing a mere 2 TF would be enough to match the PS5's I/O throughput. Also wrong: RTX 30 cards from the 3070 upwards enjoy a rasterisation edge over the PS5, but it is not like they are in the next league. RTX 20 cards? Forget it. My claim is that when the first second-wave PS5 exclusive arrives, which should leverage the PS5's I/O block hard, RTX 20 cards are not going to cut it anymore. And there is a chance that an RTX 3070 could not match a PS5.

Heck, I want to see a Ratchet & Clank: Rift Apart port to PC right now. Given how much a PS4 game with a PS5 patch stresses PC hardware today, does anyone believe that Rift Apart would run very well on the same range of hardware? The game relies heavily on real-time decompression of several GB/s with every camera move: the "what is not in sight is not in memory" approach that Cerny predicted in his Road to PS5 talk. And Rift Apart does not even make the PS5 break a sweat; we know that from tests that swap in SSDs which are technically below Sony's recommended spec for an SSD upgrade.

And please do not read this post as a stab at PC in general. I game on one as well, but things need to be addressed in all fairness.

First, I think this PS5 (console) vs PC debate is better suited to a system wars section or something. Anyway, a 7870/i5 setup from 2013 still does fine at settings equal to the (base) console's, even today. The largest hurdle would be VRAM, if anything. The GTX 750 didn't age very well, but that's due to its first-generation Maxwell architecture.

The notion that an RTX 3070 is no match for the PS5 is kinda... out of this world. The non-Ti 3060 actually runs Spider-Man and practically everything else just as well as the PS5 versions, and mostly better whenever RT is involved. I shared a YouTube video here before: a 3700X/3060 combo outperforms the PS5 version of Spider-Man, at higher settings.

There's no indication anywhere that you need 'more hardware to make up for the evil, bad PC hardware'. In almost all titles, both PS4 and PS5, you can get by with roughly matching hardware.

I also doubt that NV was lying when they said the performance impact of GPU decompression will be minimal, to the point you won't notice it. Thing is, I think GPU decompression is the way forward; GPUs are extremely efficient at parallel work such as decompression. With today's drives capable of 7 GB/s and more before decompression, I see no reason for concern that GPUs would lose any meaningful performance doing on-GPU decompression. GPUs could easily scale way beyond what the consoles are doing in this regard.
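To put rough numbers on the bandwidth side, here's a back-of-the-envelope sketch in Python. The model is my own simplification, not how DirectStorage or RTX I/O actually schedule work: data is read compressed from the drive, so the delivered (decompressed) rate is raw read speed times compression ratio, capped by however fast the decompressor can output data. The 2:1 ratio and the decompressor output rates are hypothetical placeholders, not measured figures.

```python
def effective_throughput_gbps(raw_read_gbps: float,
                              compression_ratio: float,
                              decompressor_out_gbps: float) -> float:
    """Rough model of decompressed data delivered per second (GB/s).

    raw_read_gbps:         drive read speed for compressed data (GB/s)
    compression_ratio:     uncompressed size / compressed size (assumed, e.g. 2.0)
    decompressor_out_gbps: max output rate of the decompressor (hypothetical)

    Whichever stage is slower is the bottleneck.
    """
    if raw_read_gbps < 0 or decompressor_out_gbps < 0:
        raise ValueError("rates must be non-negative")
    if compression_ratio < 1.0:
        raise ValueError("compression_ratio is expected to be >= 1.0")
    drive_limited = raw_read_gbps * compression_ratio  # what the drive can feed
    return min(drive_limited, decompressor_out_gbps)    # capped by decompression


if __name__ == "__main__":
    # 7 GB/s drive (figure from the post) with a hypothetical 2:1 ratio.
    print(effective_throughput_gbps(7.0, 2.0, 20.0))  # 14.0 (drive is the limit)
    print(effective_throughput_gbps(7.0, 2.0, 10.0))  # 10.0 (decompressor is the limit)
```

The takeaway is only that as long as the decompressor can output faster than raw speed × ratio, the drive stays the bottleneck, which is the headroom argument for GPU decompression.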

Gone are the days (pre-PS4 generation) when you needed more brute-force hardware to compete with the consoles; these days you need roughly matching specs to get by. That's probably because consoles moved over to x86/AMD hardware, and because Windows, its APIs, and engines have made strides since. Heck, the latest DF video shared today shows the 750 Ti holding up very, very well. And that's for a remake/PS5 title.

The saying 'PC as a platform is in trouble' has been used in platform warring since forever, yet the platform never died. The wattage notion isn't true either; it depends on what you want. A laptop sporting a 5800H/3070M does outperform the PS5, yet draws fewer watts from the wall. Power efficiency, right? And laptops have become the most popular choice among gamers these days (with numbers growing). Also, a 5600X/3060 setup isn't drawing crazy numbers either.

I can turn this around and point to the consoles 'being in trouble'. We're clearly moving away from single platforms; everything is going multiplatform these days. See Sony extending to the PC and mobile markets, as well as to streaming and services. I think it will be a while, but the PC will probably exist longer as a platform, though perhaps with gaming on it declining. Still, gaming on the go/mobile will probably always exist, so PCs in laptop form will exist well beyond the desktop and the console box under the TV.

Though to be fair, I think PC and console will coexist for a long time to come. No need to be concerned, really.

Rift Apart will do well on PC if you're equipped with the right hardware: with a PCIe 4 NVMe drive (NVMe drives are already at 13 GB/s raw numbers today), a 3700X or better, and a 3060/6600 XT or better, you're probably looking at a better experience. Even with a fast PCIe 3 NVMe drive.