> If there was/is any 'additional headroom', it should be quite visible

How would it be visible, though, if the frame rate is clamped at 60 fps? The cap hides any headroom above it.

I mean, hypothetically, what if I clamped the frame rate to 55 fps? You'd see a perfectly straight line until the really bad dip, and when they both dip they're within 1 fps of each other, at 54 and 53 respectively. Does that mean their performance gap is < 3%?
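A quick way to see the point: take two machines whose uncapped frame rates differ, apply a cap, and compare the reported averages. The per-scene frame rates below are made up purely for illustration (they are not captures from either console), and `capped`/`gap_percent` are hypothetical helper names.

```python
# Hypothetical uncapped average frame rates for two consoles over four scenes.
# These numbers are invented for illustration; they are NOT measurements.
uncapped_a = [80.0, 75.0, 70.0, 54.0]
uncapped_b = [68.0, 64.0, 60.0, 53.0]

CAP_FPS = 55.0  # hypothetical frame-rate cap


def capped(fps_values, cap):
    """What a frame counter would report if the game never exceeds `cap`."""
    return [min(fps, cap) for fps in fps_values]


def gap_percent(a, b):
    """Relative advantage of a over b in average frame rate, in percent."""
    return 100.0 * (sum(a) / sum(b) - 1.0)


print(f"uncapped gap: {gap_percent(uncapped_a, uncapped_b):.1f}%")
print(f"capped gap:   {gap_percent(capped(uncapped_a, CAP_FPS), capped(uncapped_b, CAP_FPS)):.1f}%")
```

With the cap, only the last scene differs, so in this made-up data the two look about 0.5% apart even though the uncapped gap is about 14%.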

I'm not trying to say that XSX performs better. I'm just trying to keep the following arguments separate:

a) PS5 performs more or less like XSX in the game (true; realistically there's no difference imo, 5% is not enough to really matter)

b) PS5 performs more or less like XSX with the settings the developers have provided, within a 9% range (true; from an experience perspective you're unlikely to notice without a frame-rate counter)

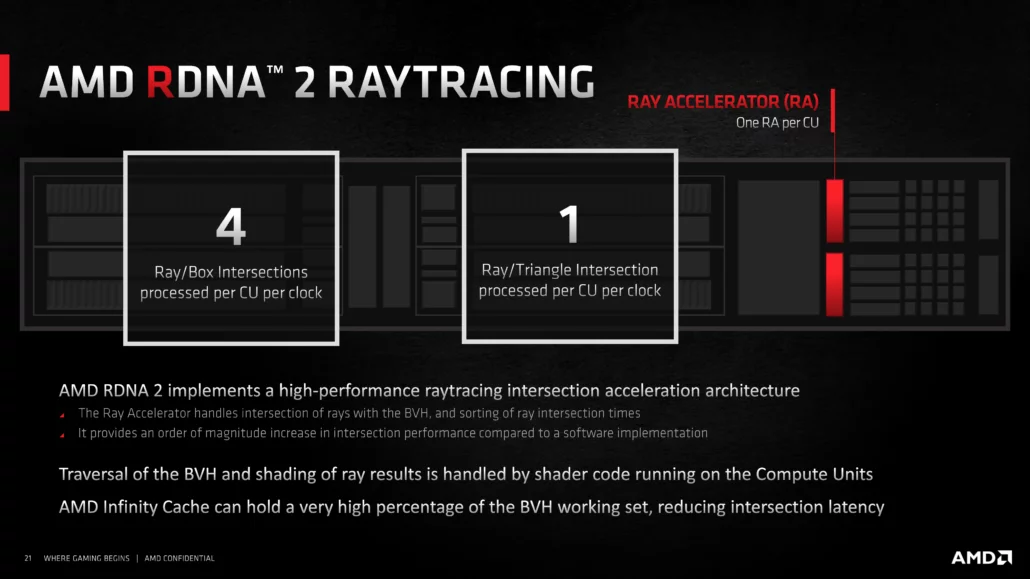

c) The additional RT units on XSX translate into only a 9% ray-tracing performance advantage over PS5 once PS5's higher clock speed is accounted for (false; these metrics don't show how the individual components contribute to the final output)

You can't prove (c), because at the very least you'd need to see the whole thing running uncapped to really know what's going on under the hood.
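For reference on what (c) is comparing, here is the on-paper arithmetic, assuming peak RT throughput scales with CU count × GPU clock (RDNA 2 has one ray accelerator per CU). The specs are the publicly stated peaks: XSX 52 CUs at 1.825 GHz, PS5 36 CUs at up to 2.23 GHz (a variable clock). This is a crude proxy that ignores bandwidth, caches and everything else in the frame; `relative_peak` is a hypothetical helper.

```python
# Publicly stated peak GPU specs. PS5's clock is variable; 2.23 GHz is its cap.
XSX_CUS, XSX_CLOCK_GHZ = 52, 1.825
PS5_CUS, PS5_CLOCK_GHZ = 36, 2.23


def relative_peak(cus_a, clock_a, cus_b, clock_b):
    """Ratio of CU-count x clock products: a crude proxy for peak RT throughput.

    Assumes one ray accelerator per CU and linear scaling with clock;
    ignores memory bandwidth, caches and the rest of the frame.
    """
    return (cus_a * clock_a) / (cus_b * clock_b)


ratio = relative_peak(XSX_CUS, XSX_CLOCK_GHZ, PS5_CUS, PS5_CLOCK_GHZ)
print(f"XSX on-paper RT advantage: {100 * (ratio - 1):.1f}%")
```

That gives roughly 18% on paper. A capped frame-rate graph can't tell you how much of that shows up in the RT portion of the frame.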

XSX has typically performed poorly in scenes with a lot of alpha (transparency effects), so how do you separate a dip caused by XSX struggling with alpha, where PS5 doesn't, from a dip caused by RT? That may be a scenario where PS5 is making up time on RT by being better at rasterization, since RT is a fixed cost. I'm not saying that's what is happening; I'm just saying you can't use this to describe how well the hardware performs on RT. Dips are not equivalent (see Hitman 3).
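To illustrate how a faster rasterizer can hide a slower RT pass, here is a toy frame-time split. The millisecond figures are invented, "A" and "B" are generic machines (not measurements of either console), and treating raster and RT cost as strictly additive is a simplifying assumption, since real frames can overlap work.

```python
from dataclasses import dataclass


@dataclass
class FrameBudget:
    """Hypothetical per-frame GPU cost split into raster and ray-tracing work.

    Treats the two as strictly additive, which is a simplification: real
    frames can overlap work (e.g. via async compute).
    """
    raster_ms: float
    rt_ms: float

    @property
    def total_ms(self) -> float:
        return self.raster_ms + self.rt_ms

    @property
    def fps(self) -> float:
        return 1000.0 / self.total_ms


# Invented numbers: A spends less time on RT, B spends less time on raster.
console_a = FrameBudget(raster_ms=15.0, rt_ms=3.0)
console_b = FrameBudget(raster_ms=13.5, rt_ms=4.5)

for name, budget in (("A", console_a), ("B", console_b)):
    print(f"{name}: {budget.total_ms:.1f} ms -> {budget.fps:.1f} fps (RT {budget.rt_ms:.1f} ms)")
```

Both land on 18.0 ms (about 55.6 fps), yet B's RT pass costs 50% more than A's. The frame counter alone can't tell you that.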

I mean, there really isn't enough RT work here to put the RT units to the test.