Flappy Pannus

Veteran

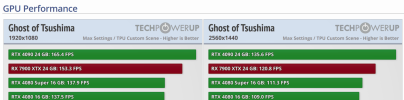

GPU-demanding as expected, but excellent consistency:

Edit: Eh, maybe spoke too soon. Looks like it has a memory leak issue.

Techspot said: While the game menus and hardware config options are flawless—Nixxes knows what they are doing—I really hate the fact that you can't skip cutscenes or dialogues. "Compiling shaders" and stuttering has been a problem for many PC releases, it's a total non-issue in Ghost of Tsushima. While there's a short 10-second shader compilation stage when you first load into the map, this takes only a few seconds and the results are cached, so subsequent game loads are much faster, unless you change graphics card or the GPU driver.

I'm sure there will be a cutscene skip mod coming in short order.

Techspot said: Our VRAM testing shows that Ghost of Tsushima is demanding but reasonable with its memory requirements. While it allocates around 10 GB, 8 GB is enough even for 4K at highest settings, which is also confirmed by our RTX 4060 Ti 8 GB vs 16 GB results—there's no performance difference between both cards, even when maxed out. Once you turn on Frame Generation, the VRAM usage increases by 2-3 GB, DLSS Frame Gen does use a few hundred megs more than FSR Frame Gen. For lower resolutions, the VRAM requirements are a bit on the high side, because even 1080p at lowest settings reaches around 5 GB, which could make things difficult for older 4 GB-class cards.

VRAM a little high as with most Nixxes releases, but not ridiculously so. 8GB cards will be fine, especially at the resolutions they will play at (once they fix that memory leak issue, that is).
