> Is that what a 5700XT/RX 6700 etc. is doing?

5700XT has 64 single ROPs @ 1800 MHz.

PS5 has 64 single ROPs @ 2230 MHz.

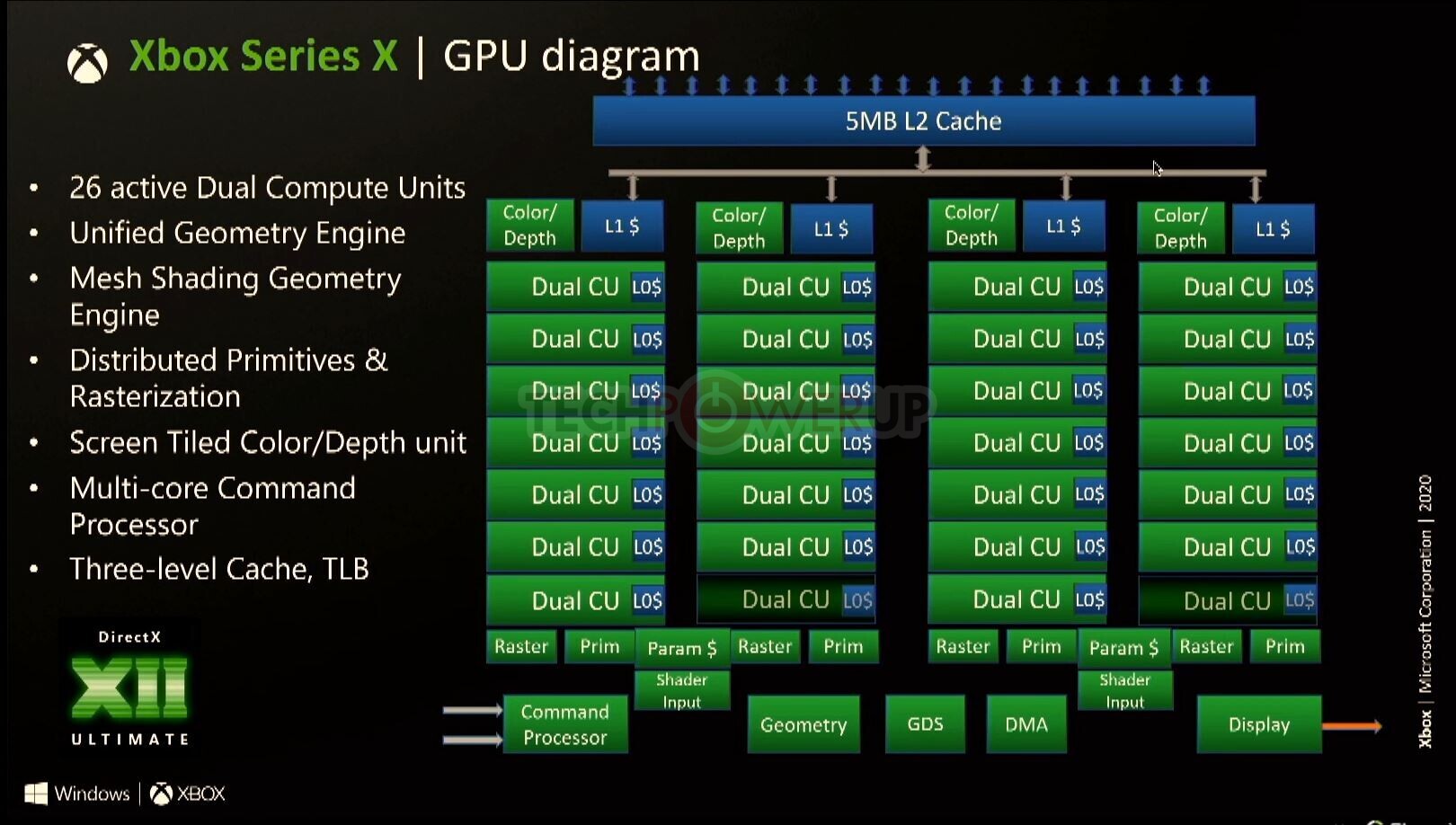

XSX has 32 double ROPs @ 1800 MHz.

6700XT has 64 double ROPs @ 2400 MHz.

The doubling only applies to certain formats, not all of them, so having more ROPs is still advantageous: 64 single ROPs are better than 32 double ROPs.
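To make the single-vs-double point concrete, here's a rough throughput sketch using the ROP counts and clocks listed above. It assumes a "double" ROP writes 2 pixels/clock for doubleable formats and 1 pixel/clock otherwise, and `doubled_fraction` (the share of pixels in a doubleable format) is a hypothetical knob. Which formats actually qualify isn't modeled.

```python
# Rough ROP fill-rate model using the configurations from the post.
# Assumption: a "double" ROP writes 2 pixels/clock for doubleable formats and
# 1 pixel/clock otherwise; which formats qualify is NOT modeled here.

def effective_pixels_per_clock(rops: int, double: bool, doubled_fraction: float) -> float:
    """Average pixels written per clock when `doubled_fraction` of pixels are doubleable."""
    if not 0.0 <= doubled_fraction <= 1.0:
        raise ValueError("doubled_fraction must be in [0, 1]")
    if not double:
        return float(rops)  # single-rate ROPs: the format doesn't matter
    # Clocks spent per pixel: doubled pixels cost 1/(2*rops), the rest 1/rops.
    clocks_per_pixel = doubled_fraction / (2 * rops) + (1 - doubled_fraction) / rops
    return 1.0 / clocks_per_pixel


def fill_rate_gpix(rops: int, double: bool, mhz: float, doubled_fraction: float) -> float:
    """Fill rate in gigapixels per second."""
    return effective_pixels_per_clock(rops, double, doubled_fraction) * mhz / 1000.0


GPUS = {
    # name: (ROP count, double-rate?, clock in MHz) as listed in the post
    "5700XT": (64, False, 1800),
    "PS5": (64, False, 2230),
    "XSX": (32, True, 1800),
    "6700XT": (64, True, 2400),
}

if __name__ == "__main__":
    for f in (0.0, 0.5, 1.0):
        row = ", ".join(
            f"{name} {fill_rate_gpix(r, d, mhz, f):.1f}" for name, (r, d, mhz) in GPUS.items()
        )
        print(f"doubled fraction {f:.1f}: {row} Gpix/s")
```

At equal clocks, 32 double ROPs only match 64 single ROPs when every pixel is in a doubleable format (`f = 1.0`); anything less and the 64 single ROPs come out ahead.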

Known Fixed Quantities

- When PS5 and XSX are using formats that can be doubled, PS5 still has up to a 24% advantage over XSX (pending clock speed variation).

- When they aren't using formats that can be doubled, PS5 has up to a 147% advantage (pending clock speed variation).

- If neither mesh shaders nor compute-based micro-geometry engines are being used, PS5 has up to a 24% triangle-generation advantage (pending clock speed variation), possibly more if it supports the NGG pipeline on the driver side (likely, IMO, based on Cerny's talks).

- If neither mesh shaders nor compute-based micro-geometry engines are being used, PS5 also has up to 24% more discard (culling) throughput than XSX (pending clock speed variation), possibly significantly more if PS5 supports the NGG pipeline on the driver side (likely, IMO, based on Cerny's talks).

- The CPUs are largely the same. DX12 is a heavier, clunkier API than GNM, and PS5 doesn't need to run games in a VM as Xbox does, so XSX's CPU clock-speed advantage may be a wash.

- In general, there are far fewer hiccups/issues on PS5, likely due to API differences.

- Memory pool splitting on the Series consoles

- I/O latency / bandwidth

- Kraken decompressor / Oodle

- Cache scrubbers

- VRS

- RT Units on XSX
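The percentages in the list above fall out of simple ratios. Here's a sketch of that arithmetic using the post's clocks. One assumption that isn't from the post: triangle setup and discard are treated as scaling with clock alone, i.e. both GPUs are assumed to handle the same number of primitives per clock. Also, the post's 1800 MHz for XSX is a round figure; Microsoft's published GPU clock is 1825 MHz, which narrows the gaps slightly.

```python
# Ratios behind the "Known Fixed Quantities" list, using the post's clocks.
# Assumption (not from the post): triangle setup and discard scale with clock
# only, i.e. both GPUs process the same number of primitives per clock.

PS5_MHZ = 2230  # peak; PS5 clocks are variable, hence "up to"
XSX_MHZ = 1800  # the post's round figure; the published clock is 1825 MHz


def advantage_pct(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


# Doubleable formats: 64 px/clk on both (PS5 64 x 1, XSX 32 x 2).
doubled = advantage_pct(64 * PS5_MHZ, 64 * XSX_MHZ)
# Other formats: PS5 64 px/clk vs XSX 32 px/clk.
not_doubled = advantage_pct(64 * PS5_MHZ, 32 * XSX_MHZ)
# Fixed-function geometry (same primitives/clock assumed): pure clock ratio.
geometry = advantage_pct(PS5_MHZ, XSX_MHZ)

print(f"ROP, doubleable formats: +{doubled:.1f}%")      # +23.9%
print(f"ROP, other formats:      +{not_doubled:.1f}%")  # +147.8%
print(f"Triangles/discard:       +{geometry:.1f}%")     # +23.9%
# Same ratios with XSX at its published 1825 MHz:
print(f"At 1825 MHz: +{advantage_pct(PS5_MHZ, 1825):.1f}% / +{advantage_pct(2 * PS5_MHZ, 1825):.1f}%")
```

All of these are peak numbers; PS5's variable clock is why every item carries the "pending clock speed variation" caveat.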

TL;DR:

If PS5 and XSX are performing about the same, then XSX is doing the catching up on the ALU side of things. Depending on the size of the workload, that can expose at most roughly a 20% TF advantage (CUs are better utilized in the compute pipeline than in the 3D pipeline).
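For reference, the paper TF gap can be sketched from public specs (not given in the post): 52 CUs @ 1825 MHz for XSX and 36 CUs @ up to 2230 MHz for PS5, with each RDNA CU doing 64 FP32 lanes x 2 FLOPs (fused multiply-add) per clock. At PS5's peak clock this gives roughly 18%; the gap grows when PS5 clocks down.

```python
# Peak FP32 throughput for an RDNA-style GPU:
# CUs x 64 lanes x 2 FLOPs per lane per clock (fused multiply-add) x clock.
# CU counts and clocks are public peak specs; PS5's clock is variable ("up to").


def peak_tflops(cus: int, mhz: float) -> float:
    return cus * 64 * 2 * mhz * 1e6 / 1e12


xsx = peak_tflops(52, 1825)  # ~12.15 TF
ps5 = peak_tflops(36, 2230)  # ~10.28 TF at peak clock
print(f"XSX {xsx:.2f} TF, PS5 {ps5:.2f} TF, XSX ALU advantage +{(xsx / ps5 - 1) * 100:.1f}%")
```

This is an upper bound on the ALU side only; as the post notes, how much of it shows up depends on how well the CUs are kept busy.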

With this in mind, you can see why XSX struggles with consistency: developers are free to, and still do, make heavy use of the 3D pipeline. Despite being up on ALU, XSX can simply run into more bottlenecks at the start or the end of the 3D pipeline that may not cap out PS5, so it has to rely on its compute advantage to make up that shortfall.
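A toy way to see this is to treat a frame as only as fast as its slowest stage. This sketch assumes perfectly overlapped stages with time = work / throughput; the throughputs are relative peak figures (geometry ~ clock, ROP ~ pixels/clock x clock, ALU ~ TF, XSX given its best case of doubleable formats), and the two workloads are made-up numbers for illustration only.

```python
# Toy bottleneck model: a frame runs as fast as its slowest stage.
# Assumptions (illustrative only): stages overlap perfectly and each stage's
# time is work / throughput. Throughputs are relative peak figures:
#   geometry ~ clock (GHz), ALU ~ TFLOPS, ROP ~ pixels/clock x clock (GHz).


def frame_time(work: dict[str, float], throughput: dict[str, float]) -> float:
    return max(work[stage] / throughput[stage] for stage in work)


PS5 = {"geometry": 2.23, "alu": 10.28, "rop": 64 * 2.23}
XSX = {"geometry": 1.825, "alu": 12.15, "rop": 64 * 1.825}  # doubleable format (XSX best case)

# Hypothetical workloads in arbitrary units
alu_heavy = {"geometry": 1.0, "alu": 10.0, "rop": 40.0}
rop_heavy = {"geometry": 1.0, "alu": 5.0, "rop": 120.0}

for name, work in (("ALU-heavy", alu_heavy), ("ROP-heavy", rop_heavy)):
    t_ps5, t_xsx = frame_time(work, PS5), frame_time(work, XSX)
    winner = "XSX" if t_xsx < t_ps5 else "PS5"
    print(f"{name}: PS5 {t_ps5:.3f}, XSX {t_xsx:.3f} -> {winner} faster")
```

In the ALU-heavy case XSX's extra compute wins; once the same frame leans on the back end of the 3D pipeline, PS5's clock advantage on fixed-function hardware decides it, even when XSX is in its best-case format.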

XSX has more RT units (52 vs. 36), and that quantity is fixed; however, the type of ray tracing determines how well those units are utilized. The more incoherent the algorithm, the lower the thread saturation, and it becomes a serialized race that depends on how fast data comes back from memory. Parallelism in RT is very low, so the advantage is not clear-cut.
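The "less saturation of threads" part can be illustrated with a toy divergence model. It assumes a wave of rays traverses the BVH in lockstep, so the wave stays busy until its slowest ray finishes and lanes whose ray is done sit idle. The wave width of 32 and the step counts are hypothetical. Memory latency, the other half of the argument, is not modeled.

```python
# Toy model of why incoherent rays under-use RT hardware.
# Assumption (illustrative): a wave of rays traverses the BVH in lockstep, so
# the wave stays busy until its slowest ray finishes; finished lanes sit idle.
# Memory latency is NOT modeled.

import random


def lane_utilization(steps_per_ray: list[int]) -> float:
    """Fraction of lane-steps doing useful traversal work in one wave."""
    busy = sum(steps_per_ray)
    total = len(steps_per_ray) * max(steps_per_ray)
    return busy / total


WAVE = 32  # hypothetical wave width
rng = random.Random(0)
coherent = [20 + rng.randint(-2, 2) for _ in range(WAVE)]  # similar paths through the BVH
incoherent = [rng.randint(5, 60) for _ in range(WAVE)]     # scattered rays, very different depths

print(f"coherent:   {lane_utilization(coherent):.0%} of lanes busy")
print(f"incoherent: {lane_utilization(incoherent):.0%} of lanes busy")
```

Having more RT units only helps if they're fed; with incoherent rays, a chunk of every wave is idle regardless of how many units there are.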
