The PS4 should be perfectly fine doing it too. 2011 GPUs should as well (Fermi?). It's a question of performance, and that's where hardware acceleration helps. If muscle deformation/DRS is enough then yeah, though I think AI/ML is able to offer much more than that.

Right, I thought it was Fermi. Still, that's over a decade ago too.

Yes. After that, I expect to see ML used extensively in animation, VFX, and post-processing.

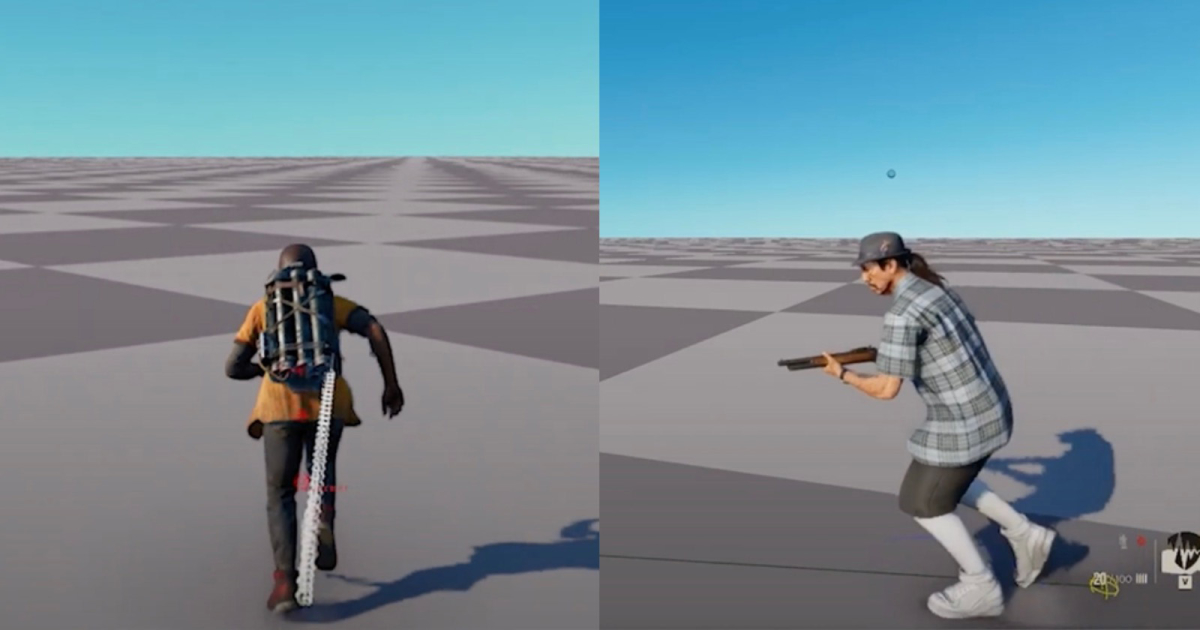

Ubisoft Explained AI-Driven Motion Matching Technique in Far Cry 6

The Choreograph tool helps make Ubisoft's games more immersive.