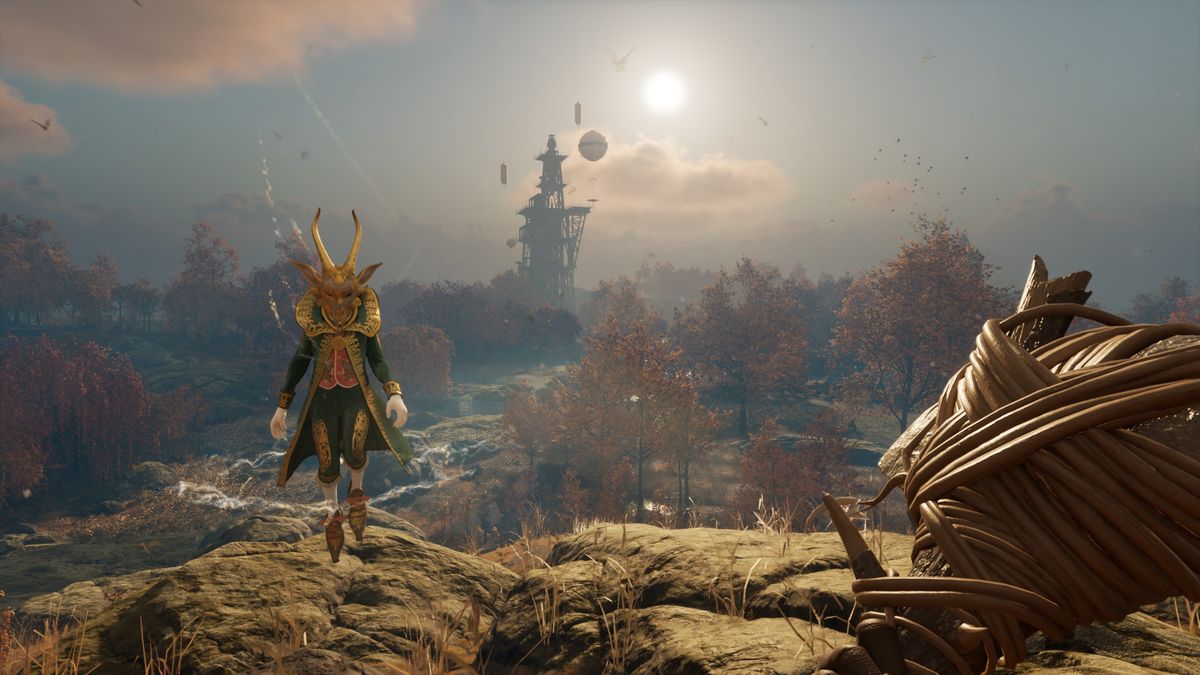

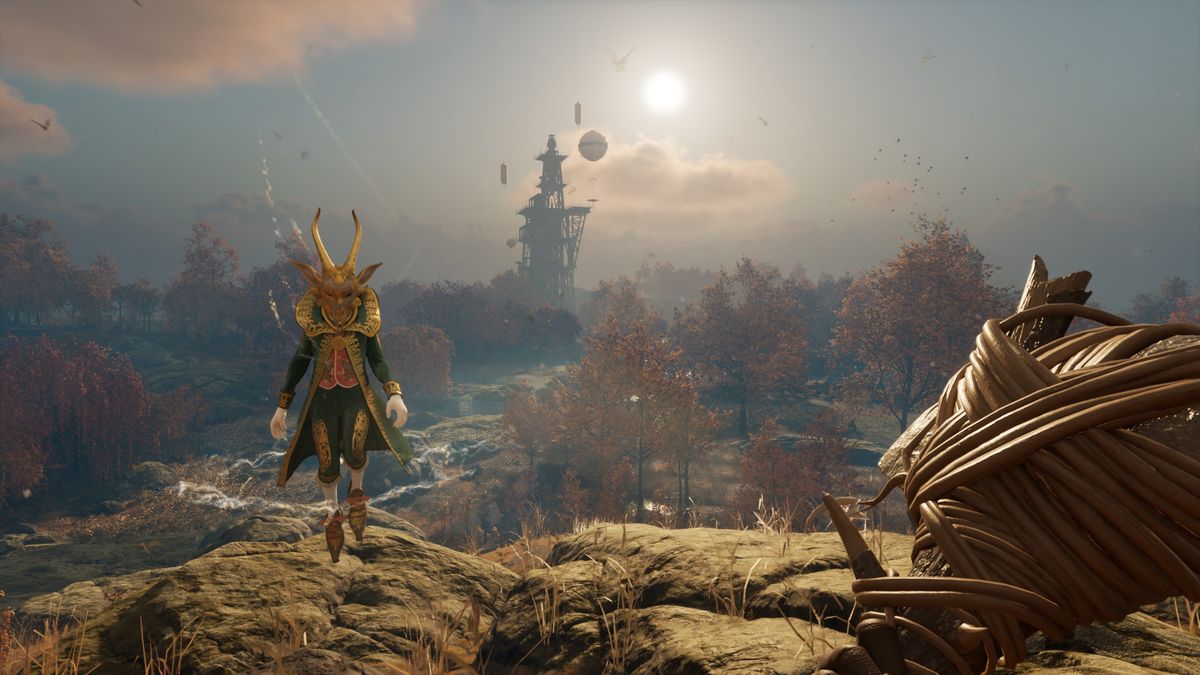

FSR 3 removed from Nightingale's launch plan with 'a significant number' of game crashes blamed on the AMD tech 'whether or not users had the setting turned on'

The bug affected everyone but Radeon owners are the ones left paying the price.

The only new major formats supported for GFX12 WMMA are FP8/BF8, but they also introduced the very same support for dot4 instructions, so it makes one really wonder whether they truly implemented a faster, special hardware path for matrix computations.

I mean, they've got that double-rate matrix multiplication pipe in RDNA4, and have had that huge wave matrix multiplication register in RDNA3 for a while; making use of those makes sense.
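To make the dot4-versus-matrix question concrete, here is a rough scalar model of what a "dot4" operation computes: four 8-bit products summed into a wider accumulator. Any matrix tile product can be decomposed into such dot4 steps, which is why identical format support on the dot4 path raises the question above. This is a sketch under stated assumptions: it uses signed int8 values instead of FP8/BF8 for simplicity, the function names are hypothetical, and it says nothing about the actual RDNA3/RDNA4 ISA encoding or throughput.

```python
def dot4_acc(a4, b4, acc):
    """Hypothetical model of a packed dot4-accumulate:
    acc + a[0]*b[0] + a[1]*b[1] + a[2]*b[2] + a[3]*b[3].
    Assumes signed 8-bit inputs; Python ints stand in for a 32-bit
    accumulator (overflow/saturation is not modelled)."""
    assert len(a4) == 4 and len(b4) == 4
    for x in list(a4) + list(b4):
        assert -128 <= x <= 127, "inputs must fit in int8"
    return acc + sum(x * y for x, y in zip(a4, b4))


def tile_matmul_via_dot4(A, B):
    """Compute C = A @ B for an M x K tile times a K x N tile (K a multiple
    of 4) using only dot4_acc steps, i.e. decomposing a matrix op into dot4s."""
    M, K = len(A), len(A[0])
    N = len(B[0])
    assert K % 4 == 0 and len(B) == K
    C = [[0] * N for _ in range(M)]
    for i in range(M):
        for j in range(N):
            acc = 0
            for k in range(0, K, 4):
                a4 = A[i][k:k + 4]                   # 4 consecutive elements of row i
                b4 = [B[k + t][j] for t in range(4)]  # 4 consecutive elements of column j
                acc = dot4_acc(a4, b4, acc)
            C[i][j] = acc
    return C
```

For a 16x16x16 tile this takes 16 * 16 * (16 / 4) = 1024 dot4 steps; the point of a dedicated matrix instruction would be issuing that whole tile at once at higher throughput, not computing anything a dot4 path cannot.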

AI is better than humans at figuring out things like cheap upscaling, given enough guidance. I suppose they could have just reverse-engineered what the AI is doing (for those wondering how), but then again, what the AI is doing is presumably based on low-bit-depth matrix multiplication anyway, since that's what it has access to and what it will be rewarded for optimizing.
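As a rough illustration of what "low-bit-depth matrix multiplication" means, here is a minimal sketch of symmetric per-tensor int8 quantization: the float inputs are scaled to integers in [-127, 127], multiplied with integer accumulation, and the result is scaled back. This is a generic textbook scheme chosen for illustration, not what any specific upscaler is known to use; the function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization of a 2D list.
    Returns (integer matrix, scale) with value ~= int * scale."""
    max_abs = max(abs(v) for row in values for v in row)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in values]
    return q, scale


def int_matmul(qa, qb):
    """Integer matrix multiply; Python ints stand in for int32 accumulators."""
    n, k, m = len(qa), len(qb), len(qb[0])
    return [[sum(qa[i][t] * qb[t][j] for t in range(k)) for j in range(m)]
            for i in range(n)]


def quantized_matmul(a, b):
    """Approximate a @ b: quantize both inputs, multiply as integers,
    then dequantize with the product of the two scales."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = int_matmul(qa, qb)
    return [[v * sa * sb for v in row] for row in acc]
```

The integer multiply is where the cheap hardware work happens; the rounding to 8 bits is why the result is only approximately equal to the float product.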

Either way, I'm looking forward to more competition in upscalers. XeSS is mostly good but occasionally has some ghosting problems.

This point of view can be an important aspect for those who play on a PC that already draws a lot of power. However, for those who play on an energy-efficient console, the potential performance increase on fixed hardware is the more important aspect.

Why can't it be both? Imagine how much more energy-efficient a console will be once frame generation is fully available across all platforms. And then, of course, developers may spend the recovered energy on additional details, assets, physics, or who knows what.

I appreciate your attitude.

It's literally two sides of the same coin: use all your power for brute force, or let upscaling do a lot of the heavy lifting, enabling even more interesting work to be done. The power budget is the power budget; might as well burn it all, right?

It is almost certain that they will keep developing this until they can take 30 FPS to 60 FPS.

I would hope so. In my opinion, that's a far more useful application of frame generation than 60 FPS -> 120 FPS. It could open up a huge amount of extra headroom for developers to play with, which will be important for next-gen consoles in particular.

I didn't watch the whole video, but I'm sure I didn't miss anything about methodology at the start; in case I did, sorry in advance. Anyway, did he try to match sharpening levels? I think at some point in the past their tests compared FSR with sharpening against DLSS without it, which might explain the texture differences in CP2077.

I'm sure (but would need to check to be 100% certain) that FSR defaults to sharpening on, but DLSS doesn't.
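To show why unmatched sharpening can skew a texture comparison, here is a minimal sketch of a generic 1D unsharp mask, `x + amount * (x - blur(x))`. It is not FSR's RCAS or DLSS's sharpening filter; the 3-tap box blur, the `amount` parameter, and the function names are all illustrative assumptions. The point is only that any sharpening pass raises local contrast at edges, which reads as extra "texture detail" even when the upscaler reconstructed nothing new.

```python
def box_blur_1d(row):
    """3-tap box blur with edge clamping (illustrative low-pass filter)."""
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        out.append((left + row[i] + right) / 3)
    return out


def unsharp_mask_1d(row, amount):
    """Generic unsharp mask: add back `amount` times the high-frequency
    detail (x - blur(x)), clamping the result to the [0, 1] range."""
    blurred = box_blur_1d(row)
    return [min(1.0, max(0.0, x + amount * (x - b))) for x, b in zip(row, blurred)]


def local_contrast(row):
    """Sum of absolute differences between neighbouring samples."""
    return sum(abs(a - b) for a, b in zip(row, row[1:]))


edge = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
sharpened = unsharp_mask_1d(edge, 0.5)
print(sharpened)  # overshoot on both sides of the edge
print(local_contrast(edge), local_contrast(sharpened))
```

With `amount = 0.5` the samples either side of the edge move to roughly 0.1 and 0.9, so measured local contrast rises from about 0.6 to about 1.0; comparing such output against an unsharpened image mixes the sharpening strength into the upscaler comparison.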