> "Overall performance" includes RT performance, though, so this isn't likely.
>
> Non-RT game performance I can see happening, maybe.

A bit slower in RT, a bit faster in rasterization.


- Status

- Not open for further replies.

Bondrewd

Veteran

> Yeah AMD starting to communicate on efficiency

?

They've said nothing about N3x parts so far, unfortunately for you.

> It should be easy to position N31 against 4080 12GB and kill it in value for money

Whoa champ, they can just sell N21 for that.

N31 is considerably higher perf and a cost bump on top of that.

Deleted member 2197

Guest

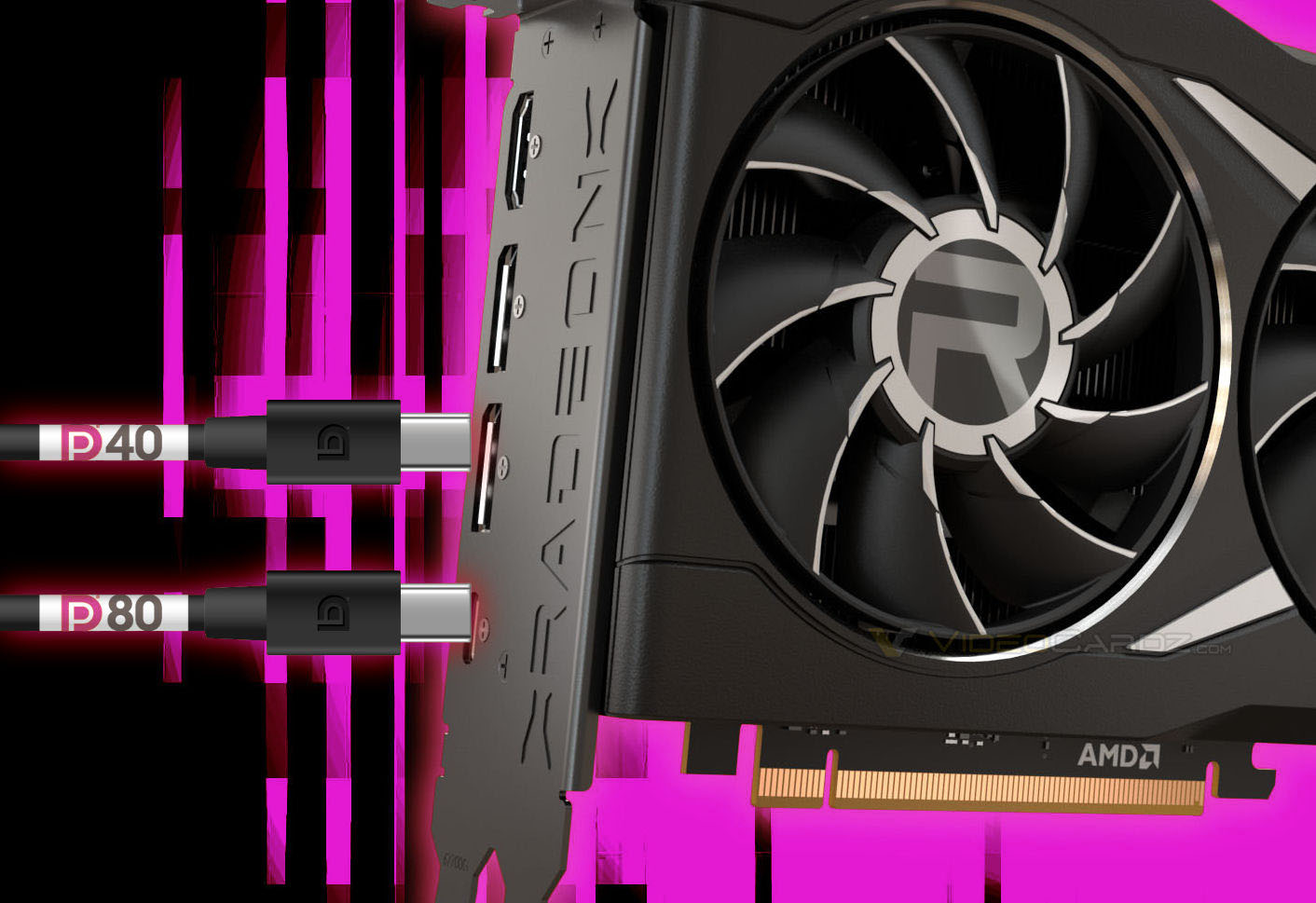

AMD Navi 31 GPU is rumored to support unannounced DisplayPort 2.1 interface - VideoCardz.com

AMD Navi 31 with DisplayPort 2.1? According to Kyle Bennett (HardOCP.com), next-gen AMD RDNA3 GPU could support DisplayPort 2.1. It came as a surprise to everyone that GeForce RTX 40 series did not support DisplayPort 2.0. This standard was formally...

DegustatoR

Legend

> Whoa champ, they can just sell N21 for that.

They really can't.

davis.anthony

Veteran

??? Doesn't AMD's RT scale linearly with compute?

Assuming they kept the same ratio of RT cores, it should be a ~2.4x increase. Add in the ~1.3x clock increase (Navi 31 @ 2.8 GHz) and we are slightly above 3x RT performance.

If AMD made any other changes to increase RT performance/efficiency, it could be +3x RT performance over Navi 21.
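As a rough sanity check of the estimate above, the two factors can simply be multiplied. This is a back-of-the-envelope sketch that assumes RT throughput scales linearly with both unit count and clock, which real games rarely do; the function name `rt_uplift` is made up for illustration.

```python
def rt_uplift(unit_ratio: float, clock_ratio: float) -> float:
    """Combined speedup under the assumption of perfectly linear scaling."""
    return unit_ratio * clock_ratio


# Figures taken from the post: ~2.4x RT units, ~1.3x clock.
estimate = rt_uplift(2.4, 1.3)
print(f"Estimated RT uplift over Navi 21: ~{estimate:.2f}x")  # ~3.12x
```

Any bottleneck elsewhere (memory bandwidth, power) would pull the real figure below this upper bound.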

There are games where my 3060 Ti is faster than a 6900 XT. AMD needs a good 4x+ increase in RT performance to stay level with Nvidia, which I can't see happening.

Tom's Hardware posted a rumour that the RX 7700 was as fast with RT as a 6900 XT.

That would make AMD's next-generation mid-range GPU on par with or slower than Nvidia's last-generation GPU when running RT.

AMD can somewhat get away with slower RT performance in the here and now, as it's still early days for RT in games. Give it another couple of years, though, and game adoption should be much better, with much better RT implementations, in which case AMD will no longer get away with being so far behind Nvidia in RT.

> There are games where my 3060 Ti is faster than a 6900 XT. AMD needs a good 4x+ increase in RT performance to stay level with Nvidia, which I can't see happening.
>
> Tom's Hardware posted a rumour that the RX 7700 was as fast with RT as a 6900 XT.
>
> That would make AMD's next-generation mid-range GPU on par with or slower than Nvidia's last-generation GPU when running RT.
>
> AMD can somewhat get away with slower RT performance in the here and now, as it's still early days for RT in games. Give it another couple of years, though, and game adoption should be much better, with much better RT implementations, in which case AMD will no longer get away with being so far behind Nvidia in RT.

Is Radeon really chasing GeForce? They are not in the same league. AMD has been sitting in its niche of 10-20% market share for a decade. This makes one think Radeon's design goals are likely quite different from GeForce's.

TopSpoiler

Regular

> They've said nothing about N3x parts so far, unfortunately for you.

Definitely they've said.

Contributing to this energy-conscious design, AMD RDNA™ 3 refines the AMD RDNA™ 2 adaptive power management technology to set workload-specific operating points, ensuring each component of the GPU uses only the power it requires for optimal performance. The new architecture also introduces a new generation of AMD Infinity Cache™, projected to offer even higher-density, lower-power caches to reduce the power needs of graphics memory, helping to cement AMD RDNA™ 3 and Radeon™ graphics as a true leader in efficiency.

We’re thrilled with the improvements we’re making with AMD RDNA™ 3 and its predecessors, and we believe there’s even more to be pulled from our architectures and advanced process technologies, delivering unmatched performance per watt across the stack as we continue our push for better gaming.

Advancing Performance-Per-Watt to Benefit Gamers

By: Sam Naffziger, Senior Vice President, Corporate Fellow, and Product Technology Architect The demand for immersive, realistic gaming experiences is constantly pushing the boundaries of technology, driving enhancements to support features like raytracing, variable rate shading, and advanced...

TESKATLIPOKA

Newcomer

> Is Radeon really chasing GeForce? They are not in the same league. AMD has been sitting in its niche of 10-20% market share for a decade. This makes one think Radeon's design goals are likely quite different from GeForce's.

AMD's main market is still CPUs. GPUs are not their top priority, yet RDNA2 was competitive if you ignore abysmal RT, which at launch was in only a few games.

I think raster performance will be pretty good with RDNA3.

RT performance is a big unknown, but considering Intel takes only a slightly bigger performance hit from RT than Ampere does, I don't see why AMD wouldn't significantly improve it to get closer to the competition.

Bondrewd

Veteran

> They really can't.

Of course they can.

> Definitely they've said.

Oh they did?

Neat.

They're gonna show some hella numbers in a few weeks.

> Is Radeon really chasing GeForce?

Not really.

> They are not in the same league

Yea, unlike NV, AMD has a shot at some real volume markets aka laptop APUs (RIP to those for now tho).

> AMD has been sitting in its niche of 10-20% market share for a decade

Ugh no it's only past like 2 years that they've dipped to 20 aka right where they stopped spending much wafers on GPU.

> This makes one think Radeon's design goals are likely quite different from GeForce's.

Make the best PPA stick is the same for every GPU vendor.

> Is Radeon really chasing GeForce? They are not in the same league. AMD has been sitting in its niche of 10-20% market share for a decade. This makes one think Radeon's design goals are likely quite different from GeForce's.

AMD has been sitting in that niche for so long because they couldn't afford the R&D to compete with both Intel and nVidia, and chose to prioritize CPUs. R&D investment, and especially GPU R&D investment, has risen very dramatically because of their success on the CPU side. AMD spent more (even after inflation) on R&D last quarter than they spent in the entire year of 2016.

Their new products are soon going to be the ones that benefit from this increased investment.

> AMD has been sitting in that niche for so long because they couldn't afford the R&D to compete with both Intel and nVidia, and chose to prioritize CPUs. R&D investment, and especially GPU R&D investment, has risen very dramatically because of their success on the CPU side. AMD spent more (even after inflation) on R&D last quarter than they spent in the entire year of 2016.
>
> Their new products are soon going to be the ones that benefit from this increased investment.

Let's hope that this chiplet design is the start of the turnaround. It's important to have multiple companies competing.

Frenetic Pony

Veteran

No idea why people listen to leakers, they're about as accurate as stormtroopers.

GPUs are limited by bottlenecks. For Nvidia that's power, for AMD likely bandwidth. Assuming even linear bottleneck scaling, AMD's top end will be better than Nvidia's in most every current title, RT or not, if the bottleneck is 24 Gbps. If it's 20 then they'll be slower.

The interesting thing will be pitting AMD's unknown RT improvements against Nvidia's ray reordering (SER), which needs dev support that it'll likely get on future titles. That'll be fun to see.
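To put the 24 vs 20 Gbps point in numbers: peak memory bandwidth is the per-pin data rate times the bus width, divided by 8 to get bytes. The sketch below assumes a 384-bit bus purely for illustration (the thread does not state the bus width), and `peak_bandwidth_gbs` is a hypothetical helper.

```python
def peak_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: Gbit/s per pin * pins / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


BUS_WIDTH_BITS = 384  # assumption for illustration, not a figure from the thread

slow = peak_bandwidth_gbs(20, BUS_WIDTH_BITS)
fast = peak_bandwidth_gbs(24, BUS_WIDTH_BITS)
print(f"20 Gbps: {slow:.0f} GB/s")            # 960 GB/s
print(f"24 Gbps: {fast:.0f} GB/s")            # 1152 GB/s
print(f"Difference: {fast / slow - 1:.0%}")   # 20%
```

Whatever the real bus width is, the ratio between the two speed bins stays at 1.2x, so under a purely bandwidth-bound assumption the faster memory is worth about 20%.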


Bondrewd

Veteran

> It's important to have multiple companies competing

Not really, no, the only thing this amounts to in client is NV margins tanking a bit.

> if the bottleneck is 24 Gbps. If it's 20 then they'll be slower.

Not putting my head on the table, but pretty sure there's a jump from 18 straight to 24 Gbps in GDDR6 at the moment (of course Samsung might silent-release slower bins of the 24 Gbps chips, but I don't think they have yet anyway).

Deleted member 2197

Guest

> No idea why people listen to leakers, they're about as accurate as stormtroopers.
>
> GPUs are limited by bottlenecks. For Nvidia that's power, for AMD likely bandwidth. Assuming even linear bottleneck scaling, AMD's top end will be better than Nvidia's in most every current title, RT or not, if the bottleneck is 24 Gbps. If it's 20 then they'll be slower.
>
> The interesting thing will be pitting AMD's unknown RT improvements against Nvidia's ray reordering (SER), which needs dev support that it'll likely get on future titles. That'll be fun to see.

I believe Intel also has a form of H/W ray reordering like Nvidia so I imagine it will need similar dev support.

The GPU core also contains hardware for sorting and reordering the workload for ray-tracing tasks in an effort to improve execution performance and increase throughput.

Bondrewd

Veteran

> but pretty sure there's a jump from 18 straight to 24 Gbps in GDDR6

Pretty sure it's 18 then 20 then 24 as far as G6 goes.

20Gbps parts are even listed somewhere iirc.

AMD doesn't necessarily have to beat Nvidia, they just have to provide the right card for the right price.

Nvidia with the 4090 has essentially priced most people out of the highest-end gaming GPUs. The 4080 cards are a joke. If AMD can hit the right price, and beat that performance, they should do well.

> AMD doesn't necessarily have to beat Nvidia, they just have to provide the right card for the right price.
>
> Nvidia with the 4090 has essentially priced most people out of the highest-end gaming GPUs. The 4080 cards are a joke. If AMD can hit the right price, and beat that performance, they should do well.

I agree on Nvidia pricing but I don't see anyone buying a Radeon GPU above $1k, no matter how good it is. At least, not before AMD improves their ecosystem (Broadcast, TRX noise, RTX Remix now) and their overall offering for the target audience (performance and support on AI, ML, compute, rendering, RT, video encoding workloads). Being only good at raster gaming is not enough in 2022.

However, in the mainstream gaming value market (below $500), it's less of a problem, even if mind share hurts a lot. AMD still needs a strong halo product for 2 or 3 generations to change their brand perception.

itsmydamnation

Veteran

> I agree on Nvidia pricing but I don't see anyone buying a Radeon GPU above $1k, no matter how good it is. At least, not before AMD improves their ecosystem (Broadcast, TRX noise, RTX Remix now) and their overall offering for the target audience (performance and support on AI, ML, compute, rendering, RT, video encoding workloads). Being only good at raster gaming is not enough in 2022.
>
> However, in the mainstream gaming value market (below $500), it's less of a problem, even if mind share hurts a lot. AMD still needs a strong halo product for 2 or 3 generations to change their brand perception.

What a load of crap.

Raster + RT + upscaling, that covers about 99% of the consumer GPU buying market.

By your logic not a single console would be sold..... but wait.......

> Not really, no, the only thing this amounts to in client is NV margins tanking a bit.

If AMD and Intel were competitive with Nvidia in terms of performance and features, then the price of GPUs would come down as they try to compete with each other.
