hurleybird

Newcomer

I guess the better question is "why wouldn't they"?

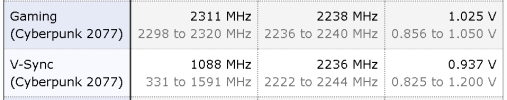

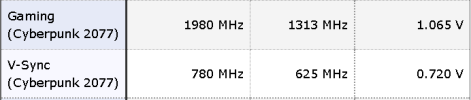

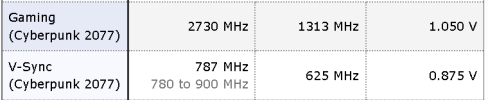

Performance wins benchmarks. From the graph above, the difference between a 100% power target and a 60% power target is ~11%, or roughly the gap between the original 3080 10GB and the original 3090, to say nothing of the 3080 12GB and the 3080 Ti wedged in between. You're basically giving up an entire product tier's worth of performance for free.

It's not free in terms of binning, board costs, or cooler costs. There's also a less tangible cost to brand perception.

There's a reason Maxwell operated in a high efficiency zone, and that's because it was still able to win there. The benefits of a ~10% bigger win did not justify the costs of attaining that performance.
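To put rough numbers on that trade-off, here is a back-of-the-envelope sketch using the ~11% figure quoted above. It assumes the power target maps directly onto actual board power (in practice it is only a cap, and real draw varies by workload) and that "~11%" means the 100% setting is 11% faster than the 60% setting. The function names are made up for illustration.

```python
def perf_per_watt_gain(perf_gain_at_full: float, reduced_power_fraction: float) -> float:
    """Perf-per-watt at the reduced power target, relative to the full power target.

    perf_gain_at_full: how much faster full power is than reduced power (0.11 = 11%).
    reduced_power_fraction: reduced power target as a fraction of full (0.60 = 60%).
    Assumes power draw scales exactly with the power target (a simplification).
    """
    # Performance at reduced power, normalized so full power = 1.0
    relative_perf = 1.0 / (1.0 + perf_gain_at_full)
    return relative_perf / reduced_power_fraction


def extra_power_for_last_step(reduced_power_fraction: float) -> float:
    """Fractional increase in power when going from the reduced target to 100%."""
    return 1.0 / reduced_power_fraction - 1.0


if __name__ == "__main__":
    gain = perf_per_watt_gain(0.11, 0.60)
    extra = extra_power_for_last_step(0.60)
    print(f"Perf/W at 60% target vs 100%: {gain:.2f}x")
    print(f"Extra power to go from 60% to 100%: {extra:.0%}")
```

Under those assumptions, the last ~11% of performance costs about two thirds more power, and the 60% setting comes out around 1.5x as efficient. That is the kind of gap that has to be paid for in binning, boards, and coolers.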

If Nvidia expected that, say, the fastest Navi 31 would only be 40% faster than a 6950XT, then I expect we would have seen slightly lower performance, but also much lower TDPs, less robust power delivery, and saner cooling.

That the 4090 is rated for 450 watts means Nvidia expects a battle. That both the 4090 Founders Edition and AIB cards appear built to accommodate something closer to 600W tells you one of two things: either Nvidia at some point expected AMD to be even more competitive but now believes it can win at 450W, or it doesn't expect to win (at least not convincingly) at 450W but decided at the last minute that the market wouldn't accept a 600W card.

The fact that the 4090 often finds itself CPU limited and doesn't need to get to its "poor efficiency zone" is probably something of a blessing though. Nvidia gets to look more efficient now (at the expense of performance), and more performant later (at the expense of efficiency). In terms of perception, efficiency matters most on day 0, while performance is continually re-evaluated as new parts are released.