> Well, it looks like Navi 31 only needs to be 3x faster with ray tracing to be competitive with 4090...

They pushed into chiplets; I think the tech they are going for is cheaper silicon. If they don’t take advantage or can’t, that’s a little disappointing for me.

Nvidia isn’t Intel. There’s no shame in saying you can’t best a company that is constantly pushing the boundaries.

AMD will hopefully do it their own way.

DegustatoR

Legend

> Well, it looks like Navi 31 only needs to be 3x faster with ray tracing to be competitive with 4090...

I doubt that Nvidia expects N31 to be competitive with 4090.

But it may end up being pretty competitive with 4080s - which aren't exactly cheap this time around.

All in all this may be a better outcome for AMD's GPU ASPs than it was against Ampere, where Nvidia basically pushed them into the $700 price range with 3080/10. (And if not for crypto this would likely have been a sizeable issue for RDNA2 market positioning.)

> Well, it looks like Navi 31 only needs to be 3x faster with ray tracing to be competitive with 4090...

I've not looked at anything yet, so is that including with DLSS3?

As I expect most demanding games to include DLSS3, FSR2.x & XeSS

Leoneazzurro5

Regular

Well it seems we will have some answers soon

> Well it seems we will have some answers soon

Before Nov 3?

> I've not looked at anything yet, so is that including with DLSS3?
>
> As I expect most demanding games to include DLSS3, FSR2.x & XeSS

No, native, with DLSS they have no chance whatsoever.

> Well, it looks like Navi 31 only needs to be 3x faster with ray tracing to be competitive with 4090...

We will have to see how the other chips in the product line stack up. I am still skeptical about how the 4080 runt performs.

The 4090 has 2.23 GHz base / 2.52 GHz boost with 128 RT / 512 tensor / 16,384 CUDA cores. The 4080 16GB is 2.21/2.51 with 76/304/9,728, and the 4080 12GB is 2.31/2.61 with 60/240/7,680.

So there is plenty of space for AMD to compete.
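To put rough numbers on that gap, here is a quick sketch that expresses each 4080's unit counts as a fraction of the 4090's. The figures are the ones quoted above; the dictionary layout and the `fraction_of_flagship` helper are just illustrative names. It compares unit counts only, ignores clocks, memory and bandwidth, and so is not a performance estimate.

```python
# Unit counts as quoted in the post above: RT cores, tensor cores, CUDA cores.
SPECS = {
    "RTX 4090": {"rt": 128, "tensor": 512, "cuda": 16384},
    "RTX 4080 16GB": {"rt": 76, "tensor": 304, "cuda": 9728},
    "RTX 4080 12GB": {"rt": 60, "tensor": 240, "cuda": 7680},
}


def fraction_of_flagship(specs, flagship="RTX 4090"):
    """Return each card's unit counts divided by the flagship's counts."""
    top = specs[flagship]
    return {
        name: {unit: count / top[unit] for unit, count in units.items()}
        for name, units in specs.items()
    }


if __name__ == "__main__":
    for name, ratios in fraction_of_flagship(SPECS).items():
        summary = ", ".join(f"{unit} {ratio:.0%}" for unit, ratio in ratios.items())
        print(f"{name}: {summary}")
```

On these counts the 4080 16GB carries about 59% of the 4090's units and the 4080 12GB about 47%, which is the space the post is pointing at.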

Leoneazzurro5

Regular

> Before Nov 3?

Probably no launch but some details.

Bondrewd

Veteran

> If they don’t take advantage or can’t, that’s a little disappointing for me.

Not this time, market conditions don't allow the ultra-halo part.

> I doubt that Nvidia expects N31 to be competitive with 4090.

Of course they do, their companal is nowhere near the naivete of Intel's.

> But it may end up being pretty competitive with 4080s - which aren't exactly cheap this time around.

Those are the N32 grazing fields.

TESKATLIPOKA

Newcomer

> Not this time, market conditions don't allow the ultra-halo part.
>
> Of course they do, their companal is nowhere near the naivete of Intel's.
>
> Those are the N32 grazing fields.

He is talking about RT performance, not pure raster.

Although, I expect pretty good gains in RT for RDNA3 thanks to huge compute improvement.

> He is talking about RT performance not pure raster.
>
> Although, I expect pretty good gains in RT for RDNA3 thanks to huge compute improvement.

I wonder what they've done to improve RT performance aside from that. Hopefully added BVH traversal in HW?

Just patents, but maybe some of these describe possible improvements for RDNA3:

PARTIALLY RESIDENT BOUNDING VOLUME HIERARCHY

BOUNDING VOLUME HIERARCHY COMPRESSION

BOUNDING VOLUME HIERARCHY TRAVERSAL

EFFICIENT DATA PATH FOR RAY TRIANGLE INTERSECTION

MECHANISM FOR SUPPORTING DISCARD FUNCTIONALITY IN A RAY TRACING CONTEXT

EARLY CULLING FOR RAY TRACING

PARALLELIZATION FOR RAYTRACING

Jawed

Legend

> AMD could have a winner if they are able to keep power requirements low.

It's been a very long time since GPUs didn't benefit massively from hand-tweaked under-volting and -clocking. Well over 10 years.

> I wonder why Nvidia set such high power targets

Because some applications use that power in a way that's meaningful for "professionals" and "content creators" and NVidia can build a cooler that works nicely.

Deleted member 2197

Guest

AMD could have a winner if they are able to keep power requirements low. I wonder why Nvidia set such high power targets

Enermax PSU calculator already mentions unreleased Radeon RX 7000XT and GeForce RTX 40 GPUs - VideoCardz.com

Radeon RX 7950XT, 7900XT, 7800XT and 7700XT confirmed by Enermax? The power supply maker now has recommendations for next-gen GPU series. It is unclear if Enermax has confidential information on Radeon RX 7000 series or GeForce RTX 40 series, but it is a fact that as many as eight new graphics...

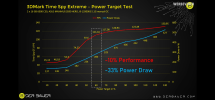

> AMD could have a winner if they are able to keep power requirements low. I wonder why Nvidia set such high power targets

I guess the better question is "why wouldn't they"?

Performance wins benchmarks. From the graph above, the difference between a 100% power target and a 60% power target is ~11%, or roughly the difference between the original 3080 10GB and the original 3090, to say nothing of the 3080 12GB and the 3080 Ti wedged in between. You're basically giving up an entire product tier's worth of performance for free.

People who are concerned about power efficiency can always reduce the power target if they want, but the default settings are the ones used for benchmark comparisons and reviews.
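For anyone weighing that trade, the efficiency side of it can be sketched in a few lines. This assumes the ~11% figure means the card keeps about 89% of its performance at the 60% target, and that actual board power tracks the power target; both are simplifications, and `perf_per_watt_gain` is just an illustrative helper name.

```python
def perf_per_watt_gain(perf_fraction, power_fraction):
    """Relative perf/W versus stock, given performance and power as fractions of stock.

    Assumes real power draw scales with the power target, which is a simplification.
    """
    if not 0 < power_fraction <= 1:
        raise ValueError("power_fraction must be in (0, 1]")
    if perf_fraction <= 0:
        raise ValueError("perf_fraction must be positive")
    return perf_fraction / power_fraction


# ~11% slower at a 60% power target, per the graph discussed above.
gain = perf_per_watt_gain(perf_fraction=0.89, power_fraction=0.60)
print(f"{gain:.2f}x perf/W versus the 100% power target")
```

Under those assumptions the 60% target comes out around 1.48x the stock performance per watt, which is why the lower target appeals to efficiency-minded owners even though the default wins the review charts.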

What I would love to see would be for AMD or NVidia to add a driver feature to write a new power target into NVRAM in the vBIOS. Rather than making people fight with tools that get wiped out with OS reinstall, etc., it would allow everyone to set a permanent but easily changeable power target with zero extra fuss.

It would even let both AMD and NVidia market their firebreathing top-end SKUs to people who don't *yet* have the PSUs to effectively run them.

"Only have a 650W power supply? Never fear! You can still buy a 7950xtx and with two clicks you can restrict it to only consuming 250W like your old RX6800 with no risk of damaging your PSU, and the instant you upgrade your PSU, two more clicks and you can unlock the rest of your performance!"

> I guess the better question is "why wouldn't they"?

One thing I'll be really curious to see with RDNA3 is whether AMD will have fixed their 'scaling down' issue.

Nvidia cards tend to do exceedingly well when artificially limited, either by a lowered power limit or just by low loads such as an older game running with Vsync on and a 60 Hz cap. Very few reviewers run tests like this, so I'm anxiously awaiting TPU's RDNA3 review to see where it sits in the stack.

Somewhat counterintuitively, the 3090 Ti with its out-of-the-box 450 W power target is one of the most efficient ways to a 60 Hz VSync lock in that test, even better than the wee GA106 in the RTX 3050. The 4090 is even better in this regard: despite its 450 W out-of-the-box power target, it happily scales all the way down to 76 W in the same test and is nearly the most efficient way to play that particular title.

RDNA2 struggles heavily there, with the higher-end cards drawing less than their maximum, but still quite a lot compared to everything else other than the Arc cards and poor Vega.

Historically, power efficiency at low-to-medium loads (higher than idle but lower than 100%) has been one of the areas where chiplet-based designs like Zen 2-4 on the CPU side have struggled. You still need to have most if not all of the inter-chiplet communication links powered up, and those usually cost an order of magnitude more power per bit transferred than keeping everything on-die. That doesn't matter much at 100% load, but it becomes a significant contribution to overall power draw at part load. Here's hoping RDNA3 has that part nailed down!

AMD 3rd Gen EPYC Milan Review: A Peak vs Per Core Performance Balance (www.anandtech.com)
