Digital Foundry vs console texture filtering

UPDATE 26/10/15 16:57: After publishing this feature over the weekend, Krzysztof Narkowicz, lead engine programmer of …

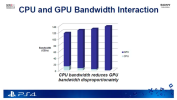

The issue isn't that the GPU gets less BW because the CPU consumes some of it, but that the GPU loses proportionally more BW than the CPU actually uses. It's an issue of RAM topology and access, seemingly with a high overhead when the CPU accesses the RAM. It's unclear whether the type of access (larger, less frequent accesses versus frequent, small ones) impacts GPU-available BW differently, but I expect so.

Thus the question is more whether there have been improvements, so that the overhead of a shared RAM pool is lower on PS5 and going forwards. We've only seen this for PS4 AFAIK. Does that mean it was a PS4-specific problem, or have other consoles and shared-RAM devices experienced the same? Ideally the total BW will remain static and it'll just be divided across the accessing processors.

Is it the Onion/Garlic structure imposing this limit, and would other vegetables provide a better solution?

An investigation into Ubisoft's The Crew in 2013 revealed there's a degree of allocation here; the graphics component has its own, faster 176GB/s memory bus labeled Garlic, while the CPU's Onion has a peak of 20GB/s via its caches. Even so, there's a tug-of-war at play here - a balancing act depending on the game.
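
To make the difference between an ideal shared pool and one with access overhead concrete, here is a rough toy model in Python. It uses the 176GB/s and 20GB/s peaks quoted above, and it assumes the 176GB/s figure is the whole pool the GPU would otherwise have to itself. The `penalty` factor and the `gpu_bandwidth` helper are purely hypothetical illustrations, not measured PS4 behaviour or anything from Sony's documentation.

```python
# Toy model only. The penalty factor is a made-up illustration,
# not a measured figure for PS4 or any other console.
GPU_PEAK_GBPS = 176.0  # Garlic peak quoted in the text
CPU_PEAK_GBPS = 20.0   # Onion peak quoted in the text


def gpu_bandwidth(cpu_gbps: float, penalty: float = 1.0) -> float:
    """Return the GPU bandwidth left when the CPU draws cpu_gbps.

    penalty == 1.0 is the ideal case: total bandwidth stays static
    and is simply divided between the two processors.
    penalty > 1.0 models overhead: each GB/s of CPU traffic costs
    the GPU more than 1 GB/s.
    """
    if not 0.0 <= cpu_gbps <= CPU_PEAK_GBPS:
        raise ValueError("cpu_gbps must be between 0 and the CPU peak")
    if penalty < 1.0:
        raise ValueError("penalty must be at least 1.0")
    return max(0.0, GPU_PEAK_GBPS - penalty * cpu_gbps)


if __name__ == "__main__":
    # Compare the ideal split with a hypothetical 2.5x overhead.
    for cpu in (0.0, 10.0, 20.0):
        ideal = gpu_bandwidth(cpu)
        contended = gpu_bandwidth(cpu, penalty=2.5)
        print(f"CPU {cpu:4.1f} GB/s: GPU ideal {ideal:5.1f}, with overhead {contended:5.1f}")
```

With `penalty=1.0` the GPU loses exactly what the CPU takes, which is the "just divided" ideal. Any value above that reproduces the complaint in the thread: the GPU's loss is disproportionate to the CPU's actual usage. A single constant factor is obviously a simplification, since the real cost may well depend on the access pattern.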